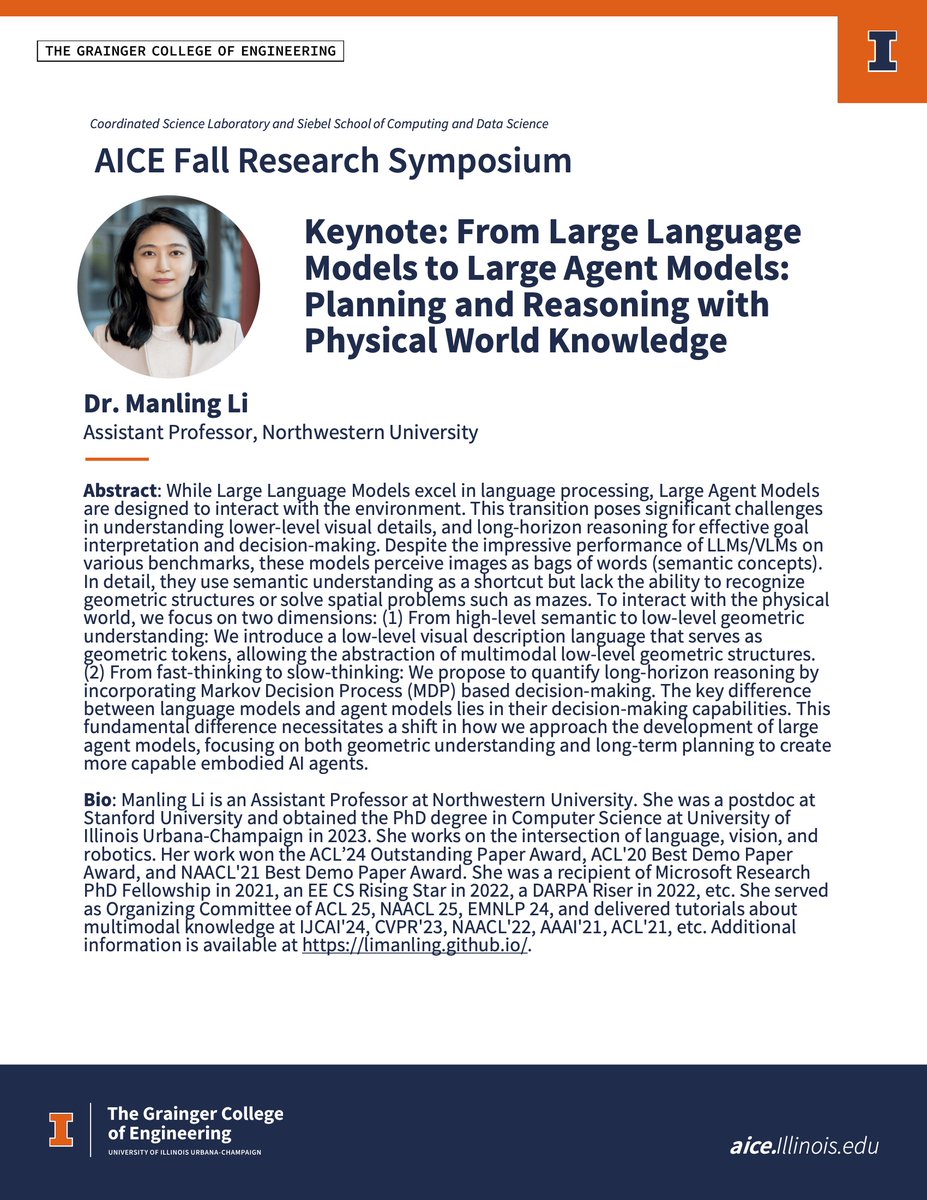

Manling Li

@ManlingLi_

Followers: 6K · Following: 435 · Media: 42 · Statuses: 313

Assistant Professor@Northwestern, Postdoc@Stanford, PhD@UIUC #NLP #CV Language+Vision/EmbodiedAI, Reasoning, Planning, Compositionality, Trustworthiness

Stanford, CA

Joined November 2017

📢I am committed to being an advisor like her! I have 2-5 PhD positions and 1 postdoc position for Fall 2025 on multimodality (NLP/CV/Robotics), especially on #Reasoning, #Planning, and #Compositionality. 🌟See more at my talk slides:

🎉I am so thrilled to be back at UIUC, my dear home. I feel incredibly lucky to have the best PhD advisor in the world, @hengjinlp! I've long been thinking about what it means to be "a good advisor", and I've come to realize that a key sign is students' steadily growing confidence. It is.

I am excited to join @northwesterncs as an assistant professor in Fall24 and @StanfordSVL as a postdoc with @jiajunwu_cs. I cannot express how much I appreciate the help from my advisor @elgreco_winter, my references @ShihFuChang @kchonyc @JiaweiHan @kathymckeown, and many, many people.

Honored to be on the list! I am looking for PhD/master's students and postdocs to work on Knowledge Foundation Models, especially for multimodal data (Language + X, where X can be images, videos, robotics, audio, etc.). Please find more info at

Here's a list of NLP superstars 🌟 who are beginning their journey 🚀 in the 2023/24 academic year. @jieyuzhao11 @GabrielSaadia @acbuller @Lianhuiq @ManlingLi_ @yuntiandeng @rajammanabrolu @YueDongCS @tanyaagoyal @MinaLee__ @yuntiandeng @alsuhr @wellecks @hllo_wrld @Xinya16

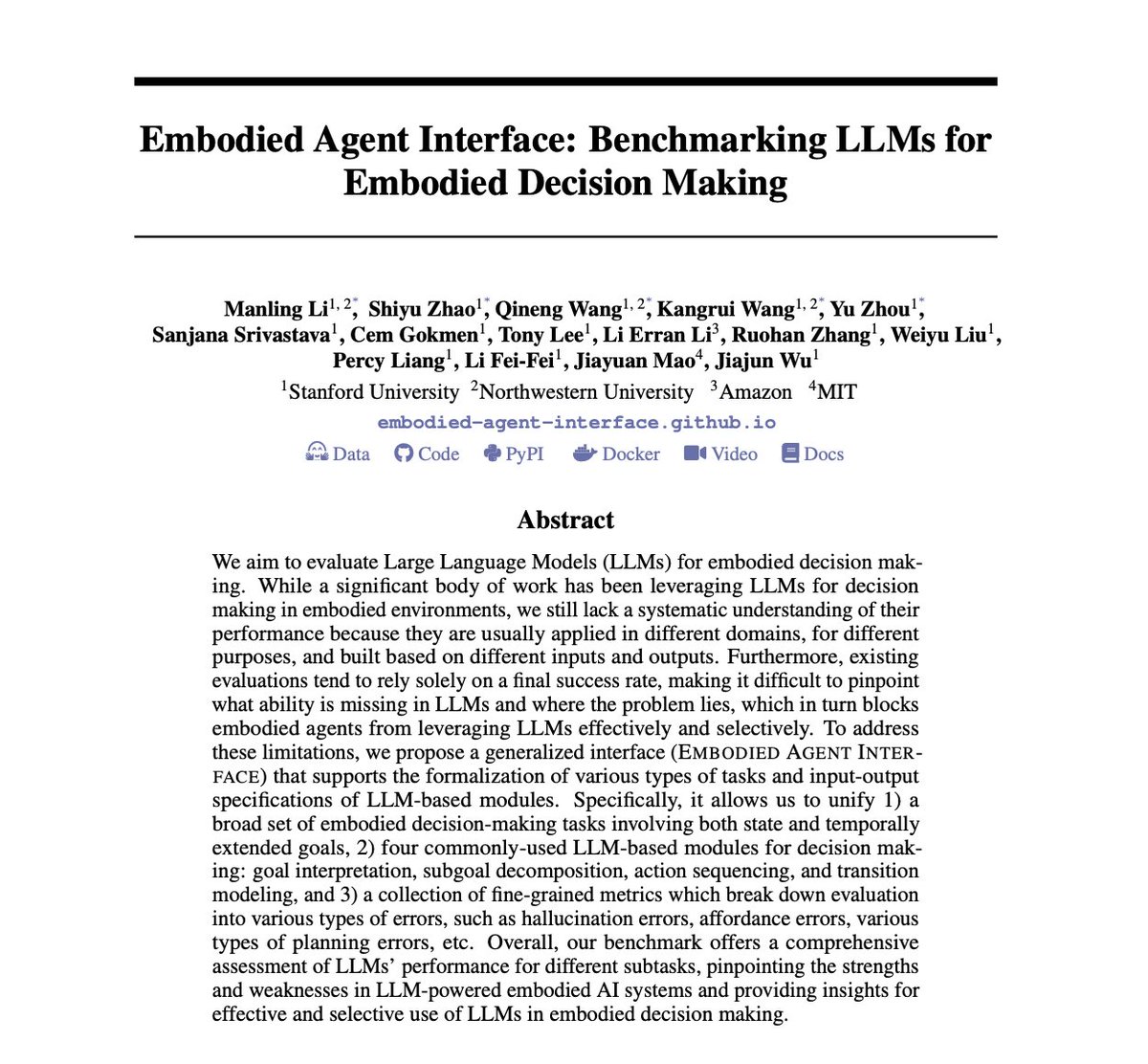

🏆We are thrilled that our Embodied Agent Interface received the Best Paper Award at SoCal NLP 2024 (~top 0.4%)! ❤️A big thank you to our incredible team @jiajunwu_cs @drfeifei @percyliang @maojiayuan @RuohanZhang76 @Weiyu_Liu_ @shiyuzhao @qineng_wang.

Congratulations Prof. Manling Li @ManlingLi_ and Stanford team @jiajunwu_cs @drfeifei on winning the Best Paper Award at the Southern California NLP Symposium!

🤖Reasoning Agent: A General-Purpose Agent Learning Framework. 💫DeepSeek-R1 training approach for agents: no SFT, just RL training. 💫<state, action, reward> sequences: can be applied to any domain where you can define states, actions, and rewards. 💫 Strong generalization.

🚀 Introducing RAGEN, the world’s first reproduction of DeepSeek-R1(-Zero) methods for training agentic AI models! We’re betting big on the future of RL + LLM + Agents 🤖✨. This release is a minimally viable leap toward that vision. Code and more intro 🔗:

I have two PhD positions for Fall24. Feel free to talk with me at ACL if you are interested.

I am excited to join @northwesterncs as an assistant professor in Fall24 and @StanfordSVL as a postdoc with @jiajunwu_cs. I cannot express how much I appreciate the help from my advisor @elgreco_winter, my references @ShihFuChang @kchonyc @JiaweiHan @kathymckeown, and many, many people.

Knowledge vs. Large Models? Welcome to our #CVPR23 tutorial "Knowledge-Driven Vision-Language Encoding" with @Xudong_Lin_AI @jayleicn @mohitban47 @cvondrick @Shih-Fu Chang @elgreco_winter. Jun 19: 9:00-12:30. Loc: East 8. Website: Zoom:

📢We also released a 100+ page detailed analysis of 18 LLMs for embodied decision making. arXiv: Website:

[NeurIPS D&B Oral] Embodied Agent Interface: Benchmarking LLMs for Embodied Agents. A single line of code to evaluate your model! 🌟Standardize Goal Specifications: LTL. 🌟Standardize Modules and Interfaces: 4 modules, 438 tasks, 1475 goals. 🌟Standardize Fine-grained Metrics: 18

I will be in Seattle for #CVPR2024 this week! Excited to talk about LLMs/VLMs for embodied agents and physical world knowledge. I also have PhD/postdoc/research intern positions. Please feel free to DM me!

🎉I am so thrilled to be back at UIUC, my dear home. I feel incredibly lucky to have the best PhD advisor in the world, @hengjinlp! I've long been thinking about what it means to be "a good advisor", and I've come to realize that a key sign is students' steadily growing confidence. It is.

The most proud academic moment = introducing my academic daughter as a keynote speaker! Prof. Manling Li @ManlingLi_ is hiring! Go to work with her on these super exciting topics!

Northwestern also has an NU PhD Application Feedback Program

If you are planning to apply to a CS PhD program this year, you should definitely sign up for PAMS! You’ll be matched with one CSE PhD student, have Zoom meetings with them & get lots of feedback on your SOP. I mentored some applicants via this program, one of whom is now a PhD student at UW CSE 😎.

Welcome to our @IJCAIconf AIGC tutorial “Beyond Human Creativity: A Tutorial on Advancements in AI Generated Content” with @BangL93 @chenyu_hugo @hengjinlp @Teddy_LFWU. Join us this afternoon! #IJCAI24 #GenerativeAI #AIGC #LLM. Website: Time: 2024-08-03

We got the #ACL2024 Outstanding Paper for “LM-Steer: Word Embeddings Are Steers for Language Models”! A big shoutout and congrats to our amazing leader Chi Han @Glaciohound and @hengjinlp, and to our wonderful team @liamjxu @YiFung10 @chenkai_sun @nanjiang_cs @TarekAbdelzaher!

Steering LLMs towards desired stances and intentions: Toxic → Non-toxic, Negative → Positive, Biased → Unbiased, and more. How do we achieve this? Our research proves the role of word embeddings as effective steers. The best part? These steers are compositional! Check out.

Also, I am on the job market for faculty positions. My research focus is Multimodal Knowledge Extraction and Reasoning. I'd love to meet if you're interested!

What is the value of knowledge in the era of large-scale pretraining? Welcome to our #AAAI23 tutorial "Knowledge-Driven Vision-Language Pretraining" with @Xudong_Lin_AI @jayleicn @mohitban47 @Shih-Fu Chang @elgreco_winter. Feb 8: 2-6pm. Loc: Room 201. Zoom:

I am thrilled to receive the Microsoft Research PhD Fellowship @MSFTResearch! Great thanks to the best advisor, Heng @elgreco_winter, to @JiaweiHan @Shih-Fu Chang @ChenguangZhu2 for their support and endorsement, and to my wonderful mentors at MSR @RuochenXu1 @shuohangw @luowei_zhou!

Congratulations @ManlingLi_ for receiving Microsoft Research PhD fellowship!

Tomorrow is the day! We cannot wait to see you at the #ACL2024 @aclmeeting Knowledgeable LMs workshop! Super excited for keynotes by Peter Clark @LukeZettlemoyer @tatsu_hashimoto @IAugenstein @ehovy Hannah Rashkin! Will announce a Best Paper Award ($500) and an Outstanding Paper

#EMNLP2024 Welcome to join our Birds of a Feather session on LLMs for Embodied Agents! 📢We are excited to talk with you about Reasoning and Planning, with amazing Prof. Joyce Chai @SLED_AI @Kordjamshidi @shuyanzhxyc @Xudong_Lin_AI!

The papers I talked about today on Multimodal Computational Social Science: 📰SmartBook: 📰LLM-Steers for stance-controlled generation / red teaming: 📰InfoPattern for information propagation pattern discovery in social media:

Excited to discuss Multimodal Computational Social Science at #SICSS! I am always fascinated by how foundation models influence human perception through a psychological lens. "We shape our buildings; thereafter they shape us." (Sir Winston Churchill). Language is not just.

What is the value of knowledge in the era of large-scale pretraining? Welcome to our #AAAI23 tutorial "Knowledge-Driven Vision-Language Pretraining" with @Xudong_Lin_AI @jayleicn @mohitban47 @Shih-Fu Chang @elgreco_winter. Feb 8: 2-6pm. Loc: Room 201. Zoom:

🚀 Key Findings on 18 LLMs for Embodied Decision Making: 🤖 Insight #1 Large Reasoning Models (o1) vs LLMs: -- o1 performs better than all other 16 models in action sequencing (o1 81%, others 60%) and subgoal decomposition (o1 62%, others 48%). -- But NOT in goal.

📢We also released a 100+ page detailed analysis of 18 LLMs for embodied decision making. arXiv: Website:

📢Our 2nd Knowledgeable Foundation Model workshop will be at AAAI 25! Submission Deadline: Dec 1st. Thanks to the wonderful organizer team @ZoeyLi20 @megamor2 @Glaciohound @XiaozhiWangNLP @shangbinfeng @silingao and advising committee @hengjinlp @IAugenstein @mohitban47!

[KnowledgeLM @ ACL24] @lm_knowledge 🚨 Update: We've extended the paper submission deadline to May 30 to accommodate the release of COLM reviews. 📢 We welcome submissions of Findings papers to present at our workshop! We have lined up wonderful speakers, and we are eager to engage

Steering LLMs towards desired stances and intentions: Toxic → Non-toxic, Negative → Positive, Biased → Unbiased, and more. How do we achieve this? Our research proves the role of word embeddings as effective steers. The best part? These steers are compositional! Check out.

Excited about LM-Steer being accepted at ACL2024! It offered surprising insights: 1. What is the role of word embeddings in LMs? They are generation steers! 2. A simple linear transformation enables flexible, transparent & compositional control! Find out more:

Low-level perception has been a bottleneck for large multimodal models. We try to bridge the gap between low-level perception and high-level reasoning with SVG and intermediate symbolic representations. Check it out at

Large multimodal models often lack precise low-level perception needed for high-level reasoning, even with simple vector graphics. We bridge this gap by proposing an intermediate symbolic representation that leverages LLMs for text-based reasoning. 🧵1/4

📢Northwestern will hire new tenure-track faculty in Embodied Intelligence this year! The new center to improve robot dexterity has been selected to receive up to $52 million. Happy to talk more if you are interested in faculty positions at NU!

Excited about the new robotics center at Northwestern! #EmbodiedAI.

#ACL24 Monday 2-3pm Poster Session 2. Steering LLMs towards desired stances and intentions: Toxic → Non-toxic, Negative → Positive, Biased → Unbiased, and more. How do we achieve this? Our research proves the role of word embeddings as effective steers. The best part? These.

🚨My awesome labmate Chi @Glaciohound has put together this beautiful #ACL2024NLP main venue poster on: "Word Embeddings Are Steers for Language Models". 🚀Want to chat about compositional/controllable LLM reasoning? 🌟Check out our presentation Mon 2-3pm at Poster Session 2!

I would be happy to share experiences regarding the faculty job search and PhD/master's studies. Feel free to ping me if you would like to talk at #ACL2023NLP.

Thanks for the nice organization of SFU@NeurIPS 2024! Excited to talk about our Embodied Agent Interface, and how to make VLMs understand space.

Attending NeurIPS'24? Please mark your calendar for our special event "SFU@NeurIPS 2024": 9 speakers from both academia & industry! Only a 10-min walk from the convention center! Let’s enjoy exciting talks and open discussions!

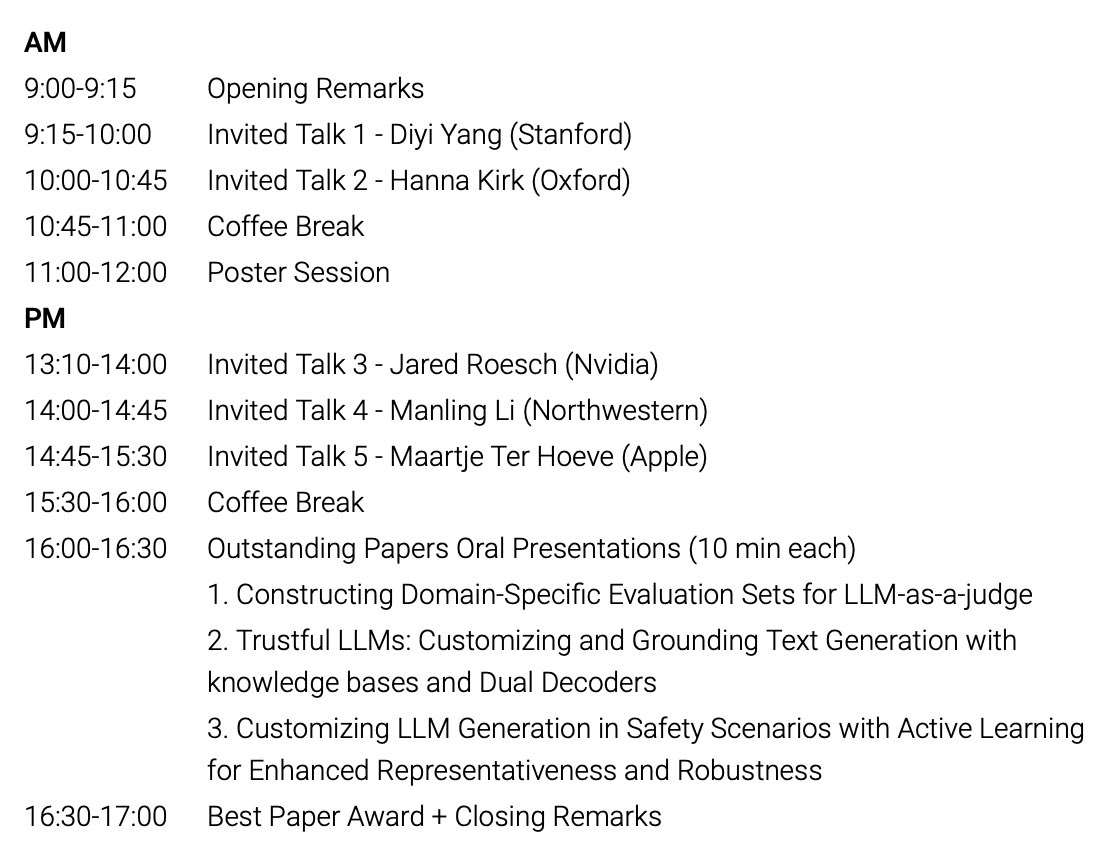

I will give a talk at @customnlp4u about customizing Large Language Models into Large Agent Models that interact with the physical world. If you are interested in Reasoning and Planning, come join us!

We are honored to have the following incredible keynote speakers lined up to share their expertise and diverse perspectives on customization and personalization (stay tuned for talk titles and abstracts!): @Diyi_Yang @hannahrosekirk @roeschinc @ManlingLi_ @maartjeterhoeve

Amazing timing 🎉 I've been looking forward to it for a long time!! Exactly what I needed when designing my first NLP class. Thanks @jurafsky!

It's back-to-school time and so here's the Fall '24 release of draft chapters for Speech and Language Processing!

#Neurips2023 Talk on the role of graphs in multimodal foundation models at the New Frontiers in Graph Learning Workshop today in Hall C2. Come and say hi!

🚀Join us tomorrow (Dec 15) at NeurIPS GLFrontiers Workshop from 8:45 am in Hall C2! Explore the latest in Graphs, LLMs, RAGs, AI for Science, and more! Keynotes from UIUC, Harvard, NVIDIA, Deepmind, plus panel discussions. A day packed with cutting-edge research and networking!

HourVideo: New benchmark for hour-long egocentric videos! Most challenging benchmark so far (SOTA 37.3% → Humans 85.0%). A comprehensive task suite design with 18 tasks. In particular, it includes Vision-Centric Tasks: the first to provide spatial reasoning questions for long egocentric.

1/ [NeurIPS D&B] Introducing HourVideo: A benchmark for hour-long video-language understanding!🚀 500 egocentric videos, 18 total tasks & ~13k questions! Performance: GPT-4 ➡️ 25.7%, Gemini 1.5 Pro ➡️ 37.3%, Humans ➡️ 85.0%. We highlight a significant gap in multimodal capabilities🧵👇

I will be in Chicago next week, so please feel free to let me know if you are around and would like to meet! Looking forward to the workshop!

🚀As July winds down, we're just 1 week away from the TTIC Multimodal AI Workshop! This rare gathering features an incredible lineup of keynote/speakers @mohitban47 @sainingxie @RanjayKrishna @ManlingLi_ @pulkitology @xiaolonw from diverse fields. Excited

Excited to discuss Multimodal Computational Social Science at #SICSS! I am always fascinated by how foundation models influence human perception through a psychological lens. "We shape our buildings; thereafter they shape us." (Sir Winston Churchill). Language is not just.

🎤 Exciting times at the Summer Institute in Computational Social Science at Chicago 2024! @ManlingLi_ @northwesterncs is currently delivering a fascinating lecture on state-of-the-art multi-modal analysis. #SICSSChicago2024

Thrilled to see Prof. Ralph Grishman receive the #ACL Lifetime Achievement Award! Your wisdom and kindness have shaped so many careers, including mine, which is truly remarkable. I still vividly remember the joy and happiness during the lunch with you and @hengjinlp in March -.

Congratulations to my best PhD advisor in the world, Prof. Ralph Grishman, on winning the ACL Lifetime Achievement Award! Thank you for bringing me up with your wonderful wisdom, humor and kindness!

Excited to share the KnowledgeLM workshop at ACL 24 @aclmeeting with @ZoeyLi20 @hengjinlp @eunsolc @mjqzhang @megamor2 @peterbhase. We will also have six amazing speakers and panelists!! Submission By: May 24, 2024. Website: Let’s make LLMs knowledgeable!

🚀 Knowledgeable Language Model Workshop at ACL24 @aclmeeting. Are you ever curious about how much LLMs know? Do you ever wish that LLMs could become smarter with more knowledge? Or maybe you are thinking about removing certain facts from their memory?

Looking forward to the #ACL24 workshop on Spatial Language Understanding and Grounded Communication for Robotics! #LLMs #EmbodiedAI #Spatial. Excited to talk about our recent work on (1) Embodied Agent Interface for benchmarking LLMs for embodied decision making and (2).

As ACL kicks off in beautiful Bangkok, check SpLU-RoboNLP workshop with an exciting lineup of speakers and presentations! @aclmeeting #NLProc #ACL2024NLP @yoavartzi @malihealikhani @ziqiao_ma @zhan1624 @xwang_lk @Merterm @dan_fried @ManlingLi_ @ysu_nlp.

Highly recommend this book!! I have read it twice and listed it as a must-read in my own group. If I am allowed to use one word to summarize what I learned, that would be “courage” – to boldly face challenging problems and to fearlessly pose new questions. Throughout my journey.

First time on the beautiful and vibrant @BostonCollege campus! About to meet all the first year students to discuss my book 🤩.

💡IKEA at Work: Moving procedure planning from 2D to 3D space! 🎯 Understanding low-level visual details (like how parts connect) has been a major bottleneck towards spatial intelligence. 🏆 First comprehensive benchmark to evaluate how well models grasp fine-grained 3D.

💫🪑Introducing IKEA Manuals at Work: The first multimodal dataset with extensive 4D groundings of assembly in internet videos! We track furniture parts’ 6-DoF poses and segmentation masks through the assembly process, revealing how parts connect in both 2D and 3D space. With

Thank you for the invitation! I am particularly amazed by the passion everyone here at OSU has for research. It has been such a fruitful and inspiring trip!

A great pleasure for @osunlp to host an NLP rising star @ManlingLi_ from UIUC. Thanks for the great talk on multimedia information extraction! Looking forward to possible collaborations! Manling is on the faculty job market; Check out her profile if your department is hiring!

@wzihanw is very resourceful, and I am super lucky to have him as my very first student 😄.

[Long Tweet Ahead] I just have to say, I’m genuinely impressed by DeepSeek. 💡 It’s no wonder their reports are so elegant and fluffless. Here’s what I noticed about their culture, a space where real innovation thrives, during my time there ↓ 🌟 1. Be nice and careful.

Highly recommend @elliottszwu for PhD applicants, especially those interested in Computer Vision! I am really amazed by the wholehearted trust from every intern working with him at @StanfordSVL, and they all crush their PhD applications!

If you're interested in doing a PhD with us, apply to the Engineering Department by Dec 3!. Also, consider applying for the Gates Scholarship (Oct deadline for US citizens): as well as the ELLIS Fellowship:

We appreciate the support from our wonderful advisory committee @mohitban47 @preslav_nakov @Meng_CS @JiaweiHan! We are excited to learn from the amazing speakers @PeterClark @LukeZettlemoyer @YangLiu @EdHovy and other incoming speakers!! We will also have a Best Paper Award.

Excited to share the KnowledgeLM workshop at ACL 24 @aclmeeting with @ZoeyLi20 @hengjinlp @eunsolc @mjqzhang @megamor2 @peterbhase. We will also have six amazing speakers and panelists!! Submission By: May 24, 2024. Website: Let’s make LLMs knowledgeable!

#NeurIPS2024 We will present HourVideo on Thu 12 Dec 4:30-7:30 p.m. PST, West Ballroom A-D #5109. Welcome to talk with us!

Super proud of this work by my student @keshigeyan and our collaborators on pushing the benchmarking of LLMs for spatial and temporal reasoning to another level! We’ll present this at #NeurIPS2024!🔥🎥

EMNLP 2024 System Demonstration Track deadline is approaching (August 5 anytime on earth)! Cannot wait to meet you in Miami and discuss the latest advances in NLP applications, especially with these powerful foundation models! #EMNLP #NLP #LLM @emnlpmeeting

🚨 The EMNLP 2024 call for system demonstration papers is out! Submission deadline is August 5 (UTC-12). Please read the updated submission guidelines! #NLProc #EMNLP2024.

Congrats to Prof Han on this well-deserved award! Not only a brilliant researcher but also an incredible mentor, inspiring countless students including me!

Prof. Jiawei Han received the Distinguished Research Contributions Award at #PAKDD2024! @pakdd_social Photo credit: Prof. Jian Pei @jian_pei

Our afternoon session is ongoing with wonderful speakers @IAugenstein @ehovy @HannahRashkin and a panel discussion with hot takes on Knowledge + LLMs! #ACL2024 Lotus Room 10 (Zoom:

Tomorrow is the day! We cannot wait to see you at the #ACL2024 @aclmeeting Knowledgeable LMs workshop! Super excited for keynotes by Peter Clark @LukeZettlemoyer @tatsu_hashimoto @IAugenstein @ehovy Hannah Rashkin! Will announce a Best Paper Award ($500) and an Outstanding Paper

Excited to see you here!.

📢 Join us for the ACL Mentorship Session in person at EMNLP & on Zoom! #EMNLP2024 @emnlpmeeting Session Link: Ask Questions: Mentors: • @pliang279 (@MIT) • @jieyuzhao11 (@USC) • @ManlingLi_ (@NorthwesternU) • @ZhijingJin

Congrats to Cheng-Han Chiang @dcml0714 and Hung-yi Lee @HungyiLee2 for the Best Paper Award at the ACL24 Knowledgeable LMs workshop! Merging Facts, Crafting Fallacies: Evaluating the Contradictory Nature of Aggregated Factual Claims in Long-Form Generations. Cheng-Han Chiang, Hung-yi

Tomorrow is the day! We cannot wait to see you at the #ACL2024 @aclmeeting Knowledgeable LMs workshop! Super excited for keynotes by Peter Clark @LukeZettlemoyer @tatsu_hashimoto @IAugenstein @ehovy Hannah Rashkin! Will announce a Best Paper Award ($500) and an Outstanding Paper

The #EMNLP2024 Call for System Demonstration Papers is out! I am co-chairing the EMNLP demo track with @Hoper_Tom @dirazuhf this year. Looking forward to talking with you in Miami!

🚨 The EMNLP 2024 call for system demonstration papers is out! Submission deadline is August 5 (UTC-12). Please read the updated submission guidelines! #NLProc #EMNLP2024.

Excited!.

We are thrilled to welcome new faculty members Karan Ahuja, Manling Li, and Kate Smith to the Northwestern CS community! @realkaranahuja @ManlingLi_.

#EMNLP2024 Come and join us this afternoon to check out the best papers! We will also announce two paper awards in the demo track!

📢 #EMNLP2024 is coming to a close with our awards and closing sessions today at 16:00-17:30! We will be announcing future conference info/venue and awards (not yet announced), so be sure to attend and hear these exciting announcements (and maybe pleasant surprises) in person!

Excited about the new robotics center at Northwestern! #EmbodiedAI.

HAND ERC will develop dexterous robot hands with the ability to assist humans with manufacturing, caregiving, handling precious or dangerous materials, and more. The NSF grant marks the first time Northwestern has led an Engineering Research Center.

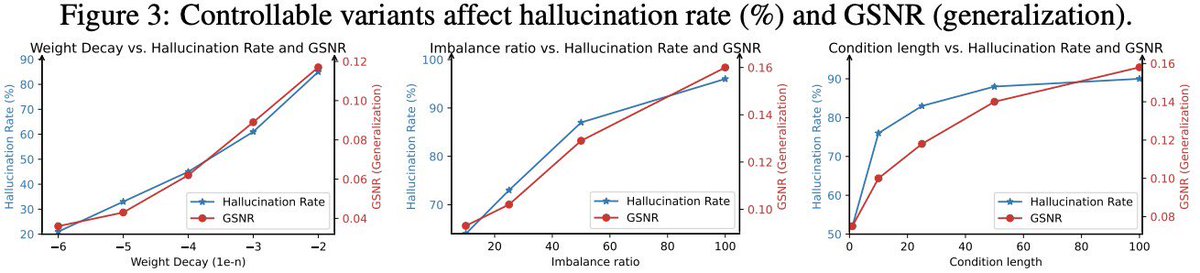

Use “knowledge overshadowing” as an early warning signal to forecast hallucinations! When your brain goes "Uhh..." before confidently stating something uncertain, that moment of doubt is like a built-in hallucination alarm. Catch hallucinations early, avoid the trap of.

🔍 New Preprint! Why do LLMs generate hallucinations even when trained on all truths? 🤔 Check out our paper. 💡 We find that universally, data imbalance causes LLMs to over-generalize popular knowledge and produce amalgamated hallucinations. 📊

Congrats to Peter Baile Chen, Yi Zhang, @DanRothNLP for the Outstanding Paper Award at the #ACL2024 Workshop on Knowledgeable LMs! Is Table Retrieval a Solved Problem? Exploring Join-Aware Multi-Table Retrieval. Peter Baile Chen, Yi Zhang, Dan Roth.

Tomorrow is the day! We cannot wait to see you at the #ACL2024 @aclmeeting Knowledgeable LMs workshop! Super excited for keynotes by Peter Clark @LukeZettlemoyer @tatsu_hashimoto @IAugenstein @ehovy Hannah Rashkin! Will announce a Best Paper Award ($500) and an Outstanding Paper

#NeurIPS22 The poster presentation of our paper VidIL will be held on Wed 30 Nov 4-6 p.m. CST at Hall J #1041. Looking forward to seeing you there!

Can GPT-3 understand videos? Glad to share our new work VidIL on prompting LLMs to understand videos using image descriptors (frame caption + visual token). We show strong few-shot video-to-text generation ability WITHOUT the need to train on ANY videos:

So excited about it! @drfeifei's "from seeing to doing" vision is actually the one that sparked my shift from my PhD work on video events/planning to pursuing embodied AI in my postdoc. I still vividly remember listening to her talk two years ago where I learned this vision,

Excited to be at #NeurIPS , still working on my slides for my keynote on Wednesday Dec 11 at 2:30pm! 🤞

Thanks so much @drfeifei! We will present Embodied Agent Interface in the oral session right after @drfeifei’s keynote. Come and join us! #NeurIPS2024 📆Oral: Wed 11 Dec 3:50-4:10pm PST, Oral Session 2A Agents. 📆Poster: Wed 11 Dec 4:30-7:30 pm PST, West Ballroom A-D #5402.

Congrats @xingyaow_ !! Big move! Cannot wait to see the game-changing open-source agents!.

Excited to share that @allhands_ai has raised $5M -- and it's finally time to announce a new chapter in my life: I'm taking a leave from my PhD to focus full-time on All Hands AI. Let's push open-source agents forward together, in the open!.

Thank you so much @kaiwei_chang !.

Here's a list of NLP superstars 🌟 who are beginning their journey 🚀 in the 2023/24 academic year. @jieyuzhao11 @GabrielSaadia @acbuller @Lianhuiq @ManlingLi_ @yuntiandeng @rajammanabrolu @YueDongCS @tanyaagoyal @MinaLee__ @yuntiandeng @alsuhr @wellecks @hllo_wrld @Xinya16

⌛️Last six hours left! #EMNLP2024 System Demo Track.

EMNLP 2024 System Demonstration Track deadline is approaching (August 5 anytime on earth)! Cannot wait to meet you in Miami and discuss the latest advances in NLP applications, especially with these powerful foundation models! #EMNLP #NLP #LLM @emnlpmeeting

Come check out our work tomorrow on multimodal graph script induction.

📣 I will be presenting this work in #ACL2023 In-Person Poster Session 7 between 11:00 - 12:30 on Wednesday, July 12th (Frontenac Ballroom and Queen’s Quay). Please feel free to drop by for a chat! #ACL #ACL2023NLP #NLProc #NLP.

Happening now at Lotus Room 10 (Zoom: .

Excellent talk by @ehovy at the #ACL2024 KnowLLM workshop providing an overview of problems involving knowledge in LLMs: control vs. declarative vs. procedural knowledge, requiring different approaches. #ACL2024NLP #NLProc

Excited to see GenAI finally move from surface pixels to 3D geometry! Even more intriguing to me is how this mirrors human spatial cognition: we do not just passively see pixels, we construct and maintain mental models of 3D spaces. Amazing to see all these perspective-taking.

Very excited to share with you what our team @theworldlabs has been up to! No matter how one theorizes the idea, it's hard to use words to describe the experience of interacting with 3D scenes generated by a photo or a sentence. Hope you enjoy this blog! 🤩❤️🔥.

I am attending the very first @COLM_conf and super excited to discuss more on these exciting topics! Please feel free to ping me!.

📢I am committed to being an advisor like her! I have 2-5 PhD positions and 1 postdoc position for Fall 2025 on multimodality (NLP/CV/Robotics), especially on #Reasoning, #Planning, and #Compositionality. 🌟See more at my talk slides:

@WenhuChen Exactly, like when I replied to meet ups at EMNLP: “Would it be possible if we meet later on Friday or Saturday? I am catching CVPR. ”.

🛠️ Particularly, the evaluation also runs on BEHAVIOR (@drfeifei @jiajunwu , which is the first to feature complicated goal annotations (with quantifiers for alternative goal options) and long-sequence trajectory (avg length 14.6), making it the most.

[NeurIPS D&B Oral] Embodied Agent Interface: Benchmarking LLMs for Embodied Agents. A single line of code to evaluate your model! 🌟Standardize Goal Specifications: LTL. 🌟Standardize Modules and Interfaces: 4 modules, 438 tasks, 1475 goals. 🌟Standardize Fine-grained Metrics: 18

#NeurIPS2024 We will present how to transfer procedural planning from 2D to 3D space. @yunongliu1 did an amazing job leading this large project and is looking for PhD positions. Reach out to her if you are interested! 📅Wednesday, Dec 11, 4:30-7:30 PM PST 🗺️West Ballroom.

💫🪑Introducing IKEA Manuals at Work: The first multimodal dataset with extensive 4D groundings of assembly in internet videos! We track furniture parts’ 6-DoF poses and segmentation masks through the assembly process, revealing how parts connect in both 2D and 3D space. With

@Xudong_Lin_AI @jayleicn @mohitban47 @cvondrick @Shih @elgreco_winter Welcome to our #CVPR23 @CVPR @CVPRConf tutorial tomorrow morning (Mon 9:00-12:30), with slides and other materials at

🛠️ Particularly, the evaluation also runs on BEHAVIOR (@drfeifei @jiajunwu , which is the first to feature complicated goal annotations (with quantifiers for alternative goal options) and long-sequence trajectory (avg. length 14.6), making it the most.

One year ago, we first introduced BEHAVIOR-1K, which we hope will be an important step towards human-centered robotics. After our year-long beta, we’re thrilled to announce its full release, which our team just presented at NVIDIA #GTC2024. 1/n

🚀 Key Findings on 18 LLMs for Embodied Decision Making: 🤖 Insight #1 Large Reasoning Models (o1) vs LLMs: -- o1 performs better than all other 16 models in action sequencing (o1 81%, others 60%) and subgoal decomposition (o1 62%, others 48%). -- But NOT in goal interpretation.

#NeurIPS2024 We will present it as an oral presentation at NeurIPS Oral Session 2A: Agents, Wed 11 Dec 5:30 - 6:30 pm CST. Come and join us if you are interested in reasoning and planning!.

A big shoutout and thank you to our wonderful team @jiajunwu_cs @maojiayuan @drfeifei @percyliang @shiyuzhao @Inevitablevalor @James_KKW @yu_bryan_zhou @RuohanZhang76 @Weiyu_Liu_ @tonyh_lee @cgokmenAI @sanjana__z @erranli!

Happening now at LOTUS SUITE 1 & 2 (Zoom:

Looking forward to the #ACL24 workshop on Spatial Language Understanding and Grounded Communication for Robotics! #LLMs #EmbodiedAI #Spatial. Excited to talk about our recent work on (1) Embodied Agent Interface for benchmarking LLMs for embodied decision making and (2).

A big shoutout to Chi Han @Glaciohound! He is the lead author of both LM-Steer (ACL24 Outstanding Paper) and LM-Infinite (NAACL24 Outstanding Paper)!! He has an amazingly sharp view on uncovering and transforming LLMs to transcend themselves, and he will be on the job market.

🎖Excited that "LM-Steer: Word Embeddings Are Steers for Language Models" became another first-authored Outstanding Paper of mine at #ACL2024 (besides LM-Infinite). We revealed the steering roles of word embeddings for continuous, compositional, efficient, interpretable & transferable control!

Huge congrats @NivArora !.

We are delighted to announce the recipient of the ACM Doctoral Dissertation Award, @NivArora of @NorthwesternU , and Honorable Mentions, @gabrfarina of the @MIT and @WilliamKuszmaul of @Harvard . Here's to a new generation of computing excellence:

@yoavartzi @chris_j_paxton @anne_youw @hengjinlp Yeah, we also find models are bottlenecked by low-level geometric understanding; for example, ‘scatter’ is more complicated for geometric/positional understanding than ‘tower’. We really appreciate the effort behind NLVR, and we actually tried our method on it the exact day it was released!

@universeinanegg Not sure it is what you are looking for, but we are doing some work from an NLP perspective related to historical pattern discovery (we call it event schema induction).

@jiajunwu_cs @drfeifei @percyliang @maojiayuan @RuohanZhang76 @Weiyu_Liu_ @shiyuzhao @qineng_wang Thank you so much again for your super hands-on guidance! The project could not have been finished without you. I also shared at SoCal NLP that the main figure (Figure 1) was actually made by you. Amazing leader!
