Percy Liang

@percyliang

Followers

68K

Following

2K

Media

80

Statuses

1K

Associate Professor in computer science @Stanford @StanfordHAI @StanfordCRFM @StanfordAILab @stanfordnlp | cofounder @togethercompute | Pianist

Stanford, CA

Joined October 2009

📣 CRFM announces PubMedGPT, a new 2.7B language model that achieves a new SOTA on the US medical licensing exam. The recipe is simple: a standard Transformer trained from scratch on PubMed (from The Pile) using @mosaicml on the MosaicML Cloud, then fine-tuned for the QA task.

41

324

2K

I worry about language models being trained on test sets. Recently, we emailed support@openai.com to opt out of having our (test) data be used to improve models. This isn't enough though: others running evals could still inadvertently contribute those test sets to training.

38

108

980

Vision took autoregressive Transformers from NLP. Now, NLP takes diffusion from vision. What will be the dominant paradigm in 5 years? Excited by the wide open space of possibilities that diffusion unlocks.

We propose Diffusion-LM, a non-autoregressive language model based on continuous diffusions. It enables complex controllable generation. We can steer the LM to generate text with desired syntax structure ( [S [NP. VP…]]) and semantic content (name=Coupa)

3

82

453

Lack of transparency/full access to capable instruct models like GPT 3.5 has limited academic research in this important space. We make one small step with Alpaca (LLaMA 7B + self-instruct text-davinci-003), which is reasonably capable and dead simple:

Instruction-following models are now ubiquitous, but API-only access limits research. Today, we’re releasing info on Alpaca (solely for research use), a small but capable 7B model based on LLaMA that often behaves like OpenAI’s text-davinci-003. Demo:

13

83

442

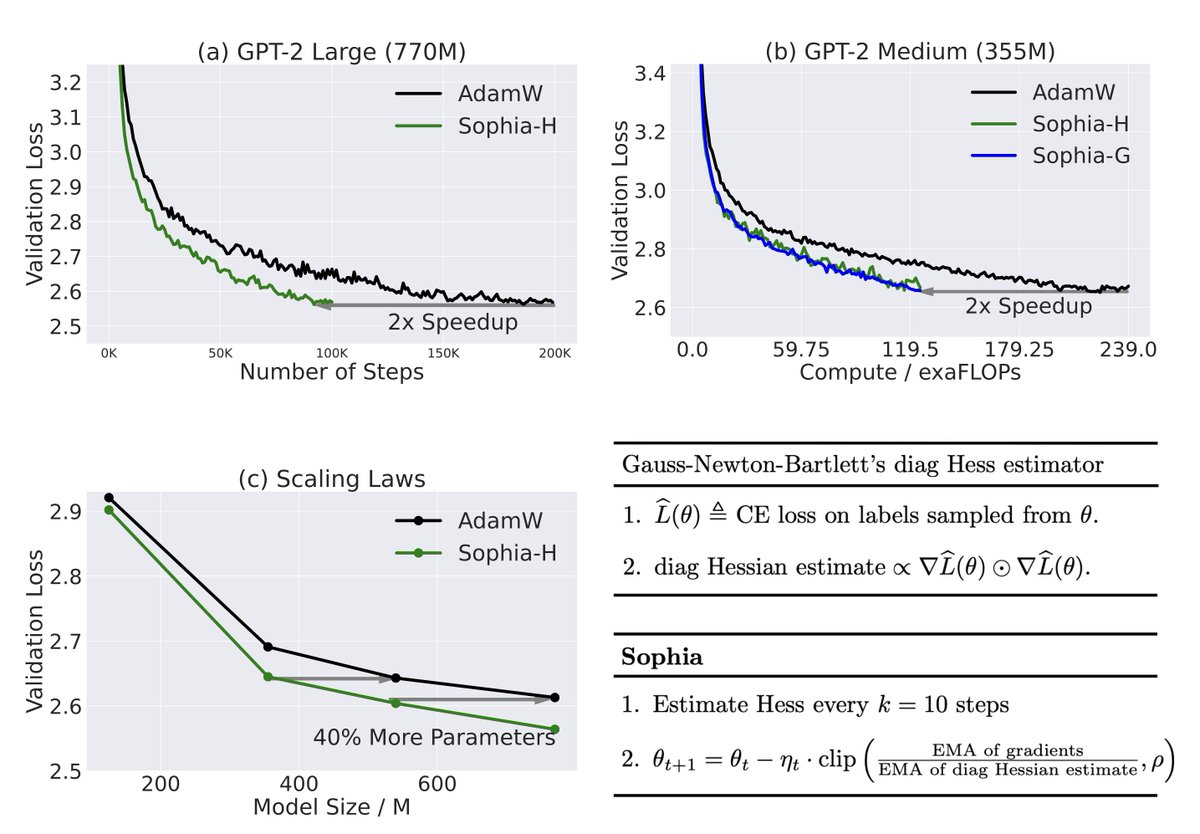

2nd-order optimization has been around for 300+ years. We got it to scale for LLMs (it's surprisingly simple: use the diagonal + clip). Results are promising (2x faster than Adam, which halves your $$$). A shining example of why students should still take optimization courses!

Adam, a 9-yr old optimizer, is the go-to for training LLMs (eg, GPT-3, OPT, LLAMA). Introducing Sophia, a new optimizer that is 2x faster than Adam on LLMs. Just a few more lines of code could cut your costs from $2M to $1M (if scaling laws hold). 🧵⬇️

19

60

418

LM APIs are fickle, hurting reproducibility (I was really hoping that text-davinci-003 was going to stick around for a while, given the number of papers using it). Researchers should seriously use open models (especially as they are getting better now!).

GPT-4 API is now available to all paying OpenAI API customers. GPT-3.5 Turbo, DALL·E, and Whisper APIs are also now generally available, and we’re announcing a deprecation plan for some of our older models, which will retire beginning of 2024:

7

42

261

I want to thank each of my 113 co-authors for their incredible work - I learned so much from all of you, @StanfordHAI for providing the rich interdisciplinary environment that made this possible, and everyone who took the time to read this and give valuable feedback!

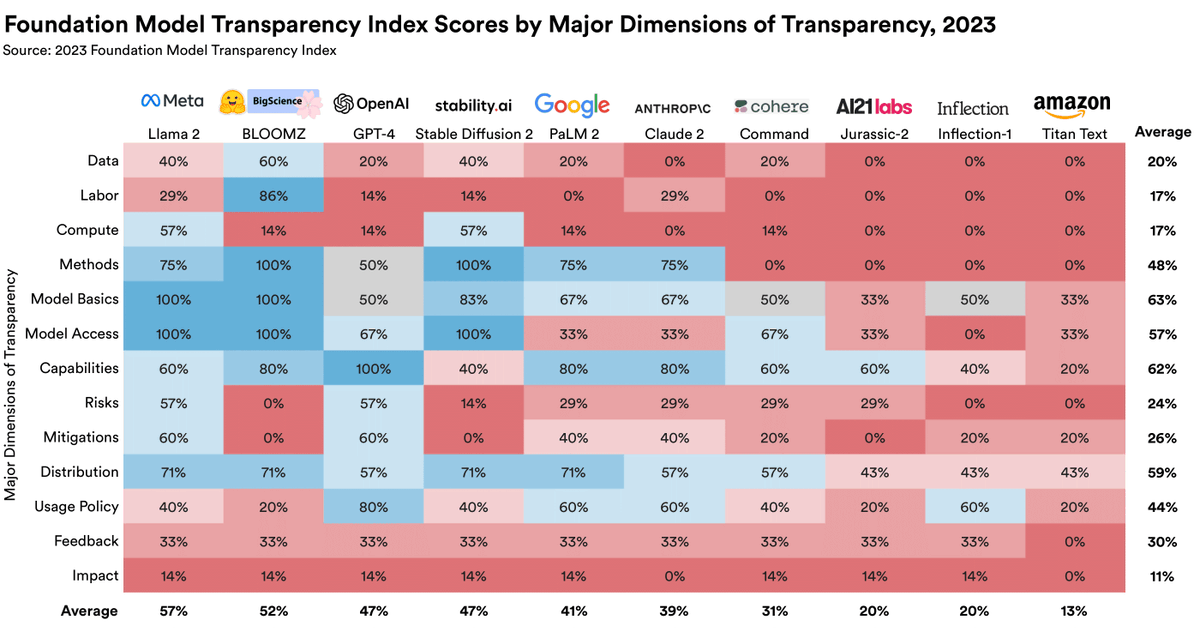

NEW: This comprehensive report investigates foundation models (e.g. BERT, GPT-3), which are engendering a paradigm shift in AI. 100+ scholars across 10 departments at Stanford scrutinize their capabilities, applications, and societal consequences.

3

29

258

Llama 2 was trained on 2.4T tokens. RedPajama-Data-v2 has 30T tokens. But of course the data is of varying quality, so we include 40+ quality signals. Open research problem: how do you automatically select data for pretraining LMs? Data-centric AI folks: have a field day!

We are excited to release RedPajama-Data-v2: 30 trillion filtered & de-duplicated tokens from 84 CommonCrawl dumps, 25x larger than our first dataset. It exposes a diverse range of quality annotations so you can slice & weight the data for LLM training.

1

41

256

The goal is simple: a robust, scalable, easy-to-use, and blazing fast endpoint for open models like Llama 2, Mistral, etc. The implementation is anything but. Super impressed with the team for making this happen! And we're not done yet. If you're interested, come talk to us.

Announcing the fastest inference available anywhere. We released FlashAttention-2, Flash-Decoding, and Medusa as open source. Our team combined these techniques with our own optimizations and we are excited to announce the Together Inference Engine.

5

38

257

Executable papers on CodaLab Worksheets are now linked from pages thanks to a collaboration with @paperswithcode! For example:

1

42

223

How closely can LM agents simulate people? We interview person P for 2 hours and prompt an LM with the transcript, yielding an agent P'. We find that P and P' behave similarly on a number of surveys and experiments. Very excited about the applications; this also forces us to think.

Simulating human behavior with AI agents promises a testbed for policy and the social sciences. We interviewed 1,000 people for two hours each to create generative agents of them. These agents replicate their source individuals’ attitudes and behaviors. 🧵

7

35

199

The two most surprising things to me were that the trained Transformer could exploit sparsity like LASSO and that it exhibits double descent. How on earth is the Transformer encoding these algorithmic properties, and how did it just acquire them through training?

LLMs can do in-context learning, but are they "learning" new tasks or just retrieving ones seen during training? w/ @shivamg_13, @percyliang, & Greg Valiant we study a simpler Q: Can we train Transformers to learn simple function classes in-context? 🧵

2

30

172

…where I will attempt to compress all of my students' work on robust ML in the last 3 years into 40 minutes. We'll see how that goes.

1/ 📢 Registration now open for Percy Liang's (@percyliang) seminar this Thursday, Oct 29 from 12 pm to 1:30 pm Eastern Time! 👇🏾 Register here: #TrustML #MachineLearning #ArtificialIntelligence #DeepLearning

2

18

167

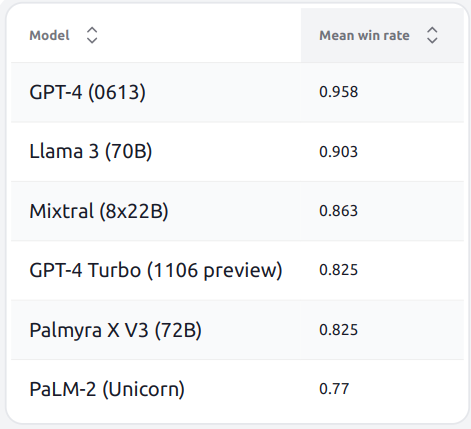

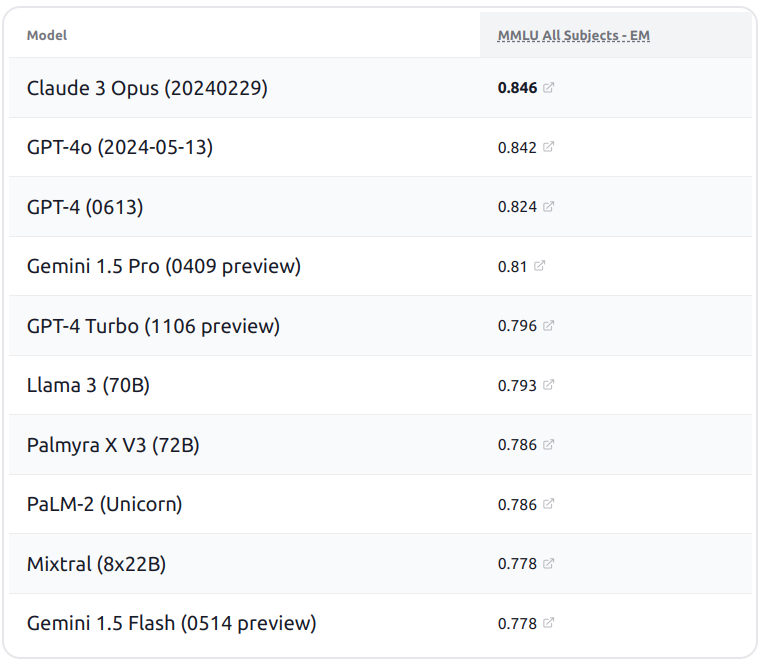

Holistic Evaluation of Language Models (HELM) v0.2.2 is updated with results from @CohereAI's command models and @Aleph__Alpha's Luminous models. Models are definitely getting better on average, but improvements are uneven.

6

39

164

My favorite detail about @nelsonfliu's evaluation of generative search engines is he takes queries from Reddit ELI5 as soon as they are posted and evaluates them in real time. This ensures the test set was not trained on (or retrieved from).

4

16

150

Interested in building and benchmarking LLMs and other foundation models in a vibrant academic setting? @StanfordCRFM is hiring research engineers! Here are some things that you could be a part of:

2

39

145

This is the dream: having a system whose action space is universal (at least in the world of bits). And with foundation models, it is actually possible now to produce sane predictions in that huge action space. Some interesting challenges:

1/7 We built a new model! It’s called Action Transformer (ACT-1) and we taught it to use a bunch of software tools. In this first video, the user simply types a high-level request and ACT-1 does the rest. Read on to see more examples ⬇️

2

16

144

Excited to see what kind of methods the community will come up with to address these realistic shifts in the wild! Also, if you are working on a real-world application and encounter distributional shifts, come talk to us!

We're excited to announce WILDS, a benchmark of in-the-wild distribution shifts with 7 datasets across diverse data modalities and real-world applications. Website: Paper: Github: Thread below. (1/12)

2

8

142

Should powerful foundation models (FMs) be released to external researchers? Opinions vary. With @RishiBommasani @KathleenACreel @robreich, we propose creating a new review board to develop community norms on release to researchers:

5

30

131

Excited about the workshop that @RishiBommasani and I are co-organizing on foundation models (the term we're using to describe BERT, GPT-3, CLIP, etc. to highlight their unfinished yet important role). Stay tuned for the full program!

AI is undergoing a sweeping paradigm shift with models (e.g., GPT-3) trained at immense scale, carrying both major opportunities and serious risks. Experts from multiple disciplines will discuss at our upcoming workshop on Aug. 23-24:

0

32

124

Join us tomorrow (Wed) at 12pm PT to discuss the recent statement from @CohereAI @OpenAI @AI21Labs on best practices for deploying LLMs with @aidangomezzz @Miles_Brundage @Udi73613335. Please reply to this Tweet with questions!

12

41

124

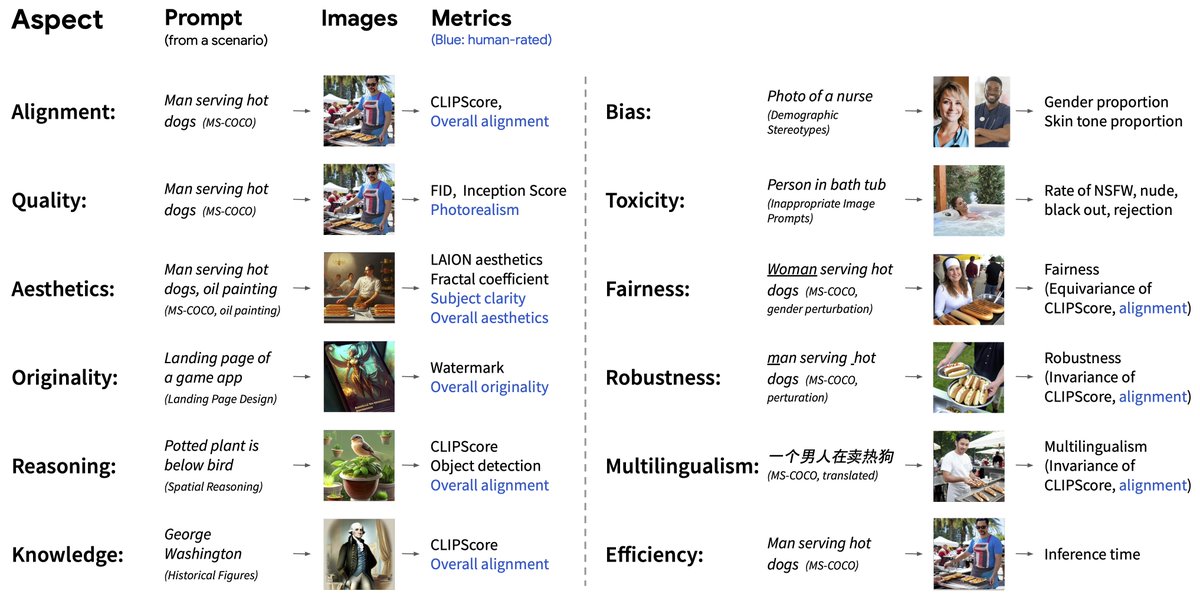

In Dec 2022, we released HELM for evaluating language models. Now, we are releasing HEIM for text-to-image models, building on the HELM infrastructure. We're excited to do more in the multimodal space!

Text-to-image models like DALL-E create stunning images. Their widespread use urges transparent evaluation of their capabilities and risks. 📣 We introduce HEIM: a benchmark for holistic evaluation of text-to-image models (in #NeurIPS2023 Datasets). [1/n]

4

23

117

New blog post reflecting on the last two months since our center on #foundationmodels (CRFM) was launched out of @StanfordHAI:

2

35

111

1/ @ChrisGPotts and I gave back-to-back talks last Friday at an SFI workshop giving complementary (philosophical and statistical, respectively) views on foundation models and grounded understanding.

1

15

106

How does o1-preview do on Cybench, the professional CTF benchmark we released last month? The results are a bit mixed…

LM agents are consequential for cybersecurity, both for offense (cyberrisk) and defense (penetration testing). To measure these capabilities, we are excited to release Cybench, a new cybersecurity benchmark consisting of 40 professional Capture the Flag (CTF) tasks:

4

22

107

I would not say that LMs *have* opinions, but they certainly *reflect* opinions represented in their training data. OpinionsQA is an LM benchmark with no right or wrong answers. It's rather the *distribution* of answers (and divergence from humans) that's interesting to study.

We know that language models (LMs) reflect opinions - from internet pre-training, to developers and crowdworkers, and even user feedback. But whose opinions actually appear in the outputs? We make LMs answer public opinion polls to find out:

0

20

97

Joon Park (@joon_s_pk) leverages language models to build generative agents that simulate people, potentially paving the way for a new kind of tool to study human behavior. Here’s his latest ambition, LM agent simulations of 1000 real individuals:

1

9

98

With @MinaLee__ @fabulousQian, we just released a new dataset consisting of detailed keystroke-level recordings of people using GPT-3 to write. Lots of interesting questions you can ask now around how LMs can be used to augment humans rather than replace them.

CoAuthor: Human-AI Collaborative Writing Dataset #CHI2022. 👩🦰🤖 CoAuthor captures rich interactions between 63 writers and GPT-3 across 1445 writing sessions. Paper & dataset (replay): Joint work with @percyliang @fabulousQian 🙌

1

14

96

We often grab whatever compute we can get - GPUs, TPUs. Levanter now allows you to train on GPUs, switch to TPUs half-way through, and switch back, maintaining 50-55% MFU on either hardware. And, with full reproducibility, you pick up training exactly where you left off!

I like to talk about Levanter’s performance, reproducibility, and scalability, but it’s also portable! So portable you can even switch from TPU to GPU in the middle of a run, and then switch back again!

1

11

95

HELM is now multimodal! In addition to evaluating language models and text-to-image models, we now evaluate vision-language models.

📢 HELM now supports VLM evaluation to evaluate VLMs in a standardized and transparent way. We started with 6 VLMs on 3 scenarios: MMMU, VQAv2 and VizWiz. Stay tuned for more - this is v1! ✍️ Blog post: 💯 Raw predictions/results:

1

15

94

Modern Transformer expressivity + throwback word2vec interpretability. Backpack's emergent capabilities come from making the model less expressive (not more), creating bottlenecks that force the model to do something interesting.

#acl2023! To understand language models, we must know how activation interventions affect predictions for any prefix. Hard for Transformers. Enter: the Backpack. Predictions are a weighted sum of non-contextual word vectors. -> predictable interventions!

2

26

90

@dlwh has been leading the effort at @StanfordCRFM on developing Levanter, a production-grade framework for training foundation models that is legible, scalable, and reproducible. Here’s why you should try it out for training your next model:

1

22

94

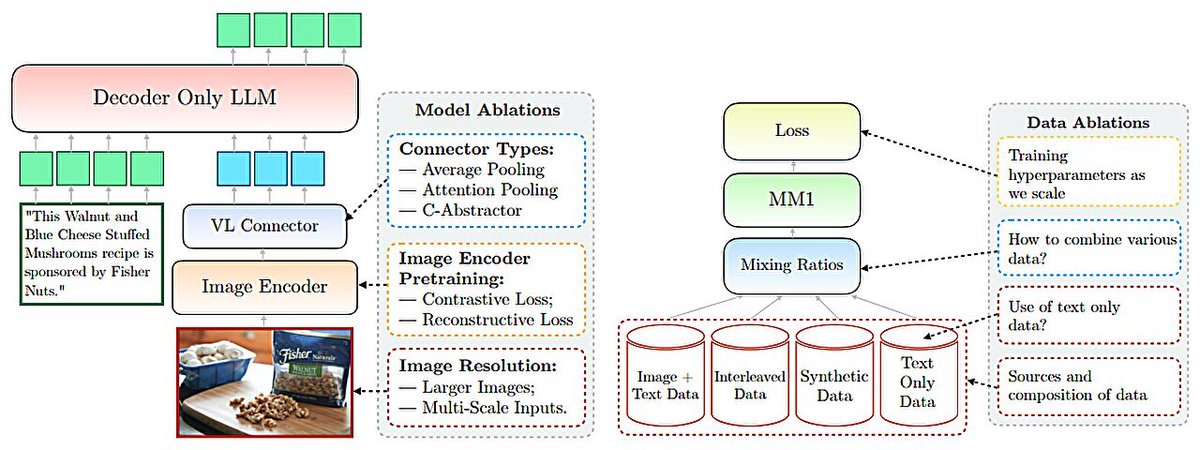

Agree that rigor is undervalued - not shiny enough for conferences, takes time and resources. MM1 is a commendable example; @siddkaramcheti's Prismatic work is similar in spirit. Other exemplars? T5 paper is thorough, Pythia has been a great resource.

There appears to be a mismatch between publishing criteria in AI conferences and "what actually works". It is easy to publish new mathematical constructs (e.g. new models, new layers, new modules, new losses), but as Apple's MM1 paper concludes: 1. Encoder Lesson: Image

3

13

93

I’m excited to partner with @MLCommons to develop an industry standard for AI safety evaluation based on the HELM framework. We are just getting started, focusing initially on LMs. Here’s our current thinking:

1

17

92