Siddharth Karamcheti

@siddkaramcheti

Followers

3K

Following

5K

Media

52

Statuses

981

PhD student @stanfordnlp & @StanfordAILab. Robotics Intern @ToyotaResearch. I like language, robots, and people. On the academic job market!

Stanford, CA

Joined September 2018

Thrilled to introduce Vocal Sandbox – our new framework for situated human-robot collaboration. We'll be @corl_conf all week; don't miss @jenngrannen's oral today (Session 3 @ 5 PM) or our poster tomorrow! Why am I so proud of this paper? 🧵👇

Introducing 🆚Vocal Sandbox: a framework for building adaptable robot collaborators that learns new 🧠high-level behaviors and 🦾low-level skills from user feedback in real-time. ✅. Appearing today at @corl_conf as an Oral Presentation (Session 3, 11/6 5pm). 🧵(1/6)

1

5

41

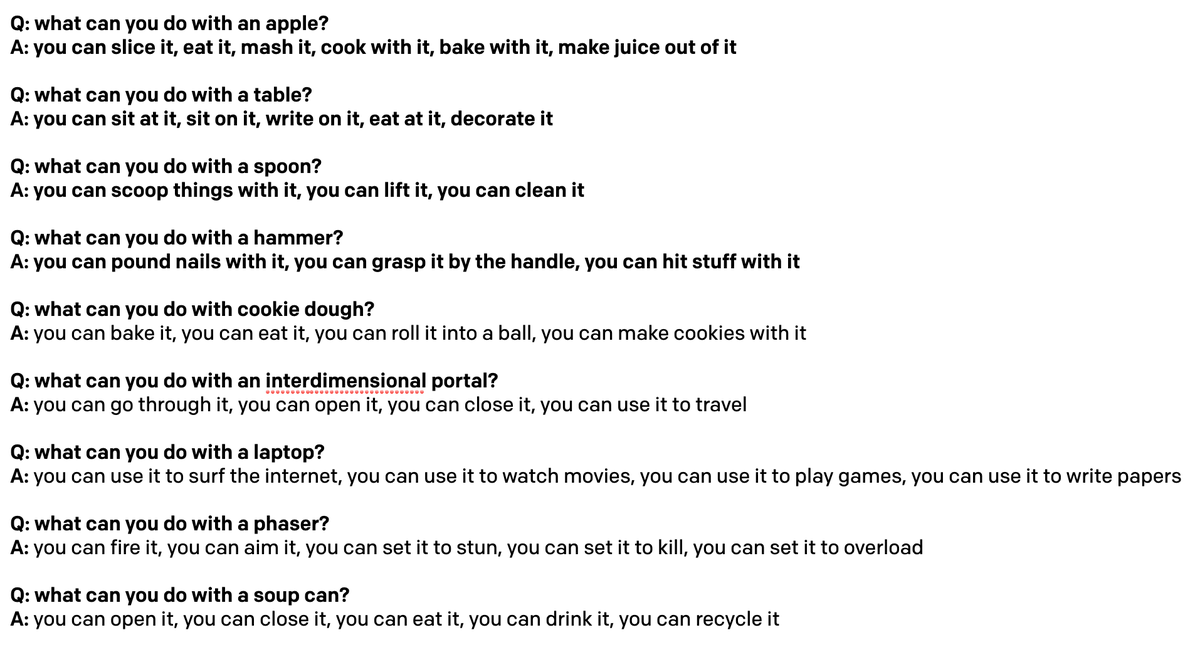

Since getting academic access, I’ve been thinking about GPT-3’s applications to grounded language understanding – e.g. for robotics and other embodied agents. In doing so, I came up with a new demo, Objects to Affordances: “what can I do with an object?” cc @gdb

18

63

491

Thrilled to announce I'm joining @huggingface 🤗 as a research intern while doing my PhD! Step 1: scalable training that's accessible and transparent w/ @StasBekman and Thomas Wang. Step 2+: multimodality, robotics, RL! Huge thanks to @Thom_Wolf and team for the opportunity!

8

13

290

Super honored (and very embarrassed) that @karpathy took the time to look at some of our code and fix an inefficiency (*cough*, bug, *cough*) I introduced 😅 Loving the @huggingface open source community today 🤗!

1

3

165

Incredibly excited (and still a bit in shock) that our #ACL2021 paper with the amazing @RanjayKrishna @drfeifei and @chrmanning won an Outstanding Paper award! This paper has a fun story that doesn’t quite fit in 8 pages; blog post, paper, and all code up soon!

5

16

158

How do we build adaptive language interfaces that learn through interaction with real human users? . New work w/ my amazing advisors @DorsaSadigh and @percyliang, to be presented at the @intexsempar2020 workshop at #emnlp2020. Link: A thread 🧵(1 / N).

1

31

136

Proud to share "LILA: Language-Informed Latent Actions," our paper at #CoRL2021. How can we build assistive controllers by fusing language & shared autonomy?. Jointly authored w/ @megha_byte, with my advisors @percyliang & @DorsaSadigh. 📜: 🧵: (1 / 10)

2

24

89

Want to build robots that adapt to language corrections in real-time? Introducing "No, to the Right – Online Language Corrections for Manipulation via Shared Autonomy" w/ @YuchenCui1, Raj, Nidhya, @percyliang & @DorsaSadigh at #HRI2023 - 🧵👇 (1/N)

2

25

89

Incredibly honored that Voltron is one of the nominees for Best Paper at #RSS2023! Just landed in Daegu, South Korea and can’t wait to present on Tuesday (catch our talk in Session 4 at 3 PM KST). Excited to meet everyone, and stoked for a week of awesome talks and demos!

How can we use language supervision to learn better visual representations for robotics?. Introducing Voltron: Language-Driven Representation Learning for Robotics!. Paper: Models: Evaluation: 🧵👇(1 / 12)

4

17

87

I've been struggling to put words to Professor Charniak's passing. I can count on one hand the people in my life that have single-handedly reshaped the trajectory of my life – he's at the top of that list. He was a man of honor, humility, and boundless energy.

@BrownCSDept is mourning the loss of University Professor Emeritus of Computer Science and Cognitive Science Eugene Charniak, one of our founding faculty members. He passed away on June 13, just a few days after his seventy-seventh birthday. (1 of 3)

1

7

68

How do we build visually guided controllers that help humans operate complex robots?. Thrilled to share our #L4DC paper "Learning Visually Guided Latent Actions for Assistive Teleoperation" with Albert Zhai, @loseydp, and @DorsaSadigh!. Paper: A 🧵 [1/7]

1

17

68

How do we conduct ethical research from the *start*? . At Hugging Face, we've started working on multimodal pretraining (💬, 📸, 🎤, 🎥), involving collecting a dataset & training models. Ethics can't be an afterthought! A 🧵⬇️ (1/3).

How do we integrate ethical principles into the ML research cycle?. A few months ago, we kicked off a project at Hugging Face on multimodal datasets and models.🐙. Instead of discussing ethics at the end, we wrote down our ethical values from the start!.

1

7

67

Thrilled to announce OpenVLA – a vision-language-action policy for robotic control! Shout out to my co-leads @moo_jin_kim & @KarlPertsch; see their threads for overviews of our work. Here though, I want to talk about observations & next steps! 🧵⬇️

✨ Introducing 𝐎𝐩𝐞𝐧𝐕𝐋𝐀 – an open-source vision-language-action model for robotics! 👐
- SOTA generalist policy
- 7B params
- outperforms Octo, RT-2-X on zero-shot evals 🦾
- trained on 970k episodes from OpenX dataset 🤖
- fully open: model/code/data all online 🤗
🧵👇

2

12

67

Extremely honored to be named an RSS Pioneer! Thrilled to get to know the rest of the cohort in Delft this summer — thank you @RSSPioneers for organizing such a wonderful event!.

We are thrilled to announce our #RSSPioneers2024 cohort! 🎉 Congratulations to these 30 rising stars in robotics! We thank all of our applicants for their inspiring submissions, and our selection committee and reviewers for their participation and insight.

7

4

66

Huge congratulations to @ethayarajh for being named a Facebook AI PhD Fellow in NLP! . Kawin is a brilliant researcher, collaborator, and friend who has taught me so much; this is incredibly well-deserved and I'm so proud!.

1

8

61

Finally, I’d like to thank @sh_reya and @notsleepingturk for building an incredibly easy-to-use GitHub repo for putting together these interactive demos. What an awesome resource!

2

3

50

Just touched down in London for #CoRL2021 — what a beautiful city!. Looking forward to meeting lots of new folks, please email/DM me if you’re down to chat robotics & NLP or shared autonomy for manipulation or (better yet) both!. Super excited 🗣🤖!.

2

1

52

Our #RSS2022 workshop on Learning from Diverse, Offline Data (#LDOD) is on Monday 6/27!. Amazing set of papers, incredible speakers (below), and a full panel moderated by @chelseabfinn & @DorsaSadigh! . Sneak Peek: 1-1 chats between students & speakers:

2

8

50

Professor Charniak gave me my start in ML. Each week, I’d come to his office and we’d talk ideas — no judgement, no feeling I needed to prove anything. He’d always meet me with patience and joy. There’s no way I’d be where I am without those meetings. Congrats on retirement!.

First seen in the pages of Conduit, the annual @BrownCSDept magazine, we're excited to share an extensive look back at Professor Eugene Charniak's work and life as he enters retirement:

1

6

48

S4 (by the amazing @_albertgu and @krandiash) is a new sequence model that can reliably scale to *huge* contexts. To dive into how it works, @srush_nlp and I wrote a code library (~200 lines of JAX) and blog post: "The Annotated S4". Check it out!

The Annotated S4 (w/ @siddkaramcheti). A step-by-step guide for building your own 16,000-gram language model.

0

6

45

Check out the Robotics section (§2.3), discussing opportunities in applying #foundationmodels across the robotics pipeline. Challenges await! Collecting the right data, ensuring safety are crucial. But tackling these problems now – *before* building models – is key!

NEW: This comprehensive report investigates foundation models (e.g. BERT, GPT-3), which are engendering a paradigm shift in AI. 100+ scholars across 10 departments at Stanford scrutinize their capabilities, applications, and societal consequences.

1

9

39

Honored to be presenting our work "Mind Your Outliers" at the #ACL2021NLP Best Paper Session today at 4 PM PST (23:00 UTC+0). ACL-Internal link w/ Q&A: Video (I'll present a "punchier" version tonight):

Congrats to @siddkaramcheti, @RanjayKrishna, @drfeifei & @chrmanning for #ACL2021NLP Outstanding Paper Mind Your Outliers! Investigating the Negative Impact of Outliers on Active Learning for Visual Question Answering. Code #NLProc

4

8

40

Paper and code are out! Getting to this point, this paper – which is fundamentally about a negative result – wasn't a linear path, but one that took months. Excited to share that story (the one that didn't make it into the paper) with y'all. Stay tuned for the blog post!

Congrats to @siddkaramcheti, @RanjayKrishna, @drfeifei & @chrmanning for #ACL2021NLP Outstanding Paper Mind Your Outliers! Investigating the Negative Impact of Outliers on Active Learning for Visual Question Answering. Code #NLProc

0

6

37

Incredibly honored to be named a 2019 Open Philanthropy AI Fellow. Thanks so much to all my advisors, mentors, friends, and family who helped me get this far!.

Excited to announce the 2019 class of the Open Phil AI Fellowship. Eight machine learning students will collectively receive up to $2 million in PhD fellowship support over the next five years. Meet the 2019 fellows:

1

1

37

This is amazing news, and truly incredible. I'm so lucky to have @DorsaSadigh as an advisor, and really looking forward to the awesome work our brilliant lab will come out with over the next few years! Congratulations @DorsaSadigh!!!.

Congratulations to @StanfordAILab faculty Dorsa Sadigh on receiving an MIT Tech Review TR-35 award for her work on teaching robots to be better collaborators with people.

1

0

35

I just finished the Residency this past August, and it was one of the most enriching experiences I’ve ever had — I got to work with amazing people on really hard research, and I learned a ton!. I highly recommend applying — opportunities like this are few and far between!.

Applications for the Facebook AI Residency program are open. US (NYC, Seattle, Menlo Park): UK (London): Deadline: 2020-01-31

1

1

35

Interestingly, @_eric_mitchell_ and I found that if you “prime” GPT-3 with “natural” (less structured) text, you get less ambiguous action associations (set it to stun vs. stun). Maybe this provides insight on how to structure your “prompts” — the more “natural” the better!

4

6

32

How do reading comprehension models select supporting evidence? How does this evidence compare to that chosen by human users? Very excited to share our new #emnlp2019 paper w/ @EthanJPerez, Rob Fergus, @jaseweston, @douwekiela, and @kchonyc!

What evidence do people find convincing? Often, the same evidence that Q&A models find convincing. Check out our #emnlp2019 paper: And blog post: w/ @siddkaramcheti Rob Fergus @jaseweston @douwekiela @kchonyc

0

3

34

Congrats to my advisor @DorsaSadigh for being named a 2022 Sloan Fellow! I’m incredibly lucky and grateful to be one of your students!.

0

2

32

🎉 Stoked to share our #NeurIPS2021 paper "ELLA: Exploration through Learned Language Abstraction." . How can language help RL agents solve sparse-reward tasks more efficiently?. Led by @suvir_m (applying to PhDs now!), with my advisor @DorsaSadigh!. 🔗:

Training RL agents to complete language instructions can be difficult & sample-inefficient. A key challenge is exploration. Our method, ELLA, helps guide exploration in terms of simpler subtasks. Paper: Talk: #NeurIPS21

3

2

31

Very excited for this line-up of amazing speakers, and to be presenting our work "Learning Adaptive Language Interfaces through Decomposition" w/ @DorsaSadigh and @percyliang! If you're at #emnlp2020 tomorrow, definitely stop by!

Looking forward to seeing you tomorrow (19/11) at the Interactive and Executable Semantic Parsing workshop, starting at 8:15am PT! On our page you'll find our schedule, list of invited speakers and link to the Zoom session:

0

6

29

Stoked to kick off the 2020 @stanfordnlp seminar series with a talk from @_jessethomason_ on "From Human Language to Agent Action." As a student working in #RoboNLP, I can't wait to hear about his recent work/perspective on the field as a whole!

1

4

29

The Bay Area Robotics Symposium 2021 is in full swing - we’ve got a full house! #BARS2021. Catch the second set of faculty talks now: 1:15 PT: Keynotes by @percyliang and @robreich + buzzing afternoon session w/ more faculty, student, and sponsor talks!

1

3

27

Really excited to see this at #CoRL2021 tomorrow! @coreylynch and the entire Google robotics team have really inspired my research (especially with their RoboNLP work). Super stoked to hear more about implicit behavioral cloning — y’all should make it to the poster if you can!.

How can robots learn to imitate precise 🎯 and multimodal 🔀 human behaviors?. “Implicit Behavioral Cloning” 🦾🔷🟦💛🟨.paper, videos, code: See IBC learning combinatorial sorting and 1mm precision insertion from vision, tasks explicit BC struggles with.

1

1

27

Really grateful to @StanfordHAI for covering our work on Vocal Sandbox - a framework for building robots that can seamlessly work with and learn from you in the real world (w/ @jenngrannen @suvir_m @percyliang @DorsaSadigh). In case you missed it:

A new robot system called Vocal Sandbox is the first of many systems that promise to help integrate robots into our daily lives. Learn about the prototype that @Stanford researchers presented at the 8th annual Conference on Robot Learning.

0

6

26

🎉 Incredibly thrilled to share our work "Targeted Data Acquisition for Evolving Negotiation Agents" to be presented at #ICML2021, led by my inspirational labmate @MinaeKwon, Mariano-Florentino Cuéllar, and @DorsaSadigh!. Reasons why I find this work exciting - a 🧵.

Excited to share our #ICML2021 paper “Targeted Data Acquisition for Evolving Negotiation Agents” with the amazing @siddkaramcheti, Mariano-Florentino Cuéllar, and @DorsaSadigh!. Paper: Talk: 🧵👇 [1/6]

1

1

25

How can we teach humans to provide better demonstration data for robotic manipulation? Check out our #CoRL2022 paper on "Eliciting Compatible Demonstrations for Multi-Human Imitation Learning" w/ @gandhikanishk @madelineliao & @DorsaSadigh – 🧵👇 (1/N)

Are you collecting demonstrations for imitation learning from multiple demonstrators? Naively collecting demonstrations might actually hurt performance!! We present a simple way to teach people to teach robots better! Appearing at CoRL ‘22. 🧵 (1/5)

1

2

24

David (@dlwh) is an incredible friend and mentor. Highly recommend following his work — he not only dives deep into understanding *all* the parts of the systems he works with, but also cares about sharing these insights in a way that’s accessible. Levanter is just one example!.

Recommend following David Hall (@dlwh) and the Levanter project from @StanfordCRFM . Just no nonsense details about fixing the pain-points of scaling LLM training, one at a time.

1

3

24

@kevin_zakka Never too late to start working on robotics & NLP! It’s such a wonderful time, so many great questions to explore!

1

0

23

This is going to be a fantastic talk - can’t wait for Thursday!.

We’re very excited to kick off our 2021 Stanford NLP Seminar series with Ian Tenney (@iftenney) of Google Research presenting on “BERTology and Beyond”! Thursday 10am PT. Open to the public non-Stanford people register at

0

5

22

I'm really loving alphaXiv (from a great team of Stanford students including @rajpalleti314)! . Beyond just reading arXiv papers – it's an awesome platform for discussion, collaborative annotation, and note-taking. Give it a try – clear win for open-science. .

How do LLMs learn new facts while pre-training? Excited to have authors @hoyeon_chang and Jinho Park answer questions on their latest paper "How Do Large Language Models Acquire Factual Knowledge During Pretraining?" Leave questions for the authors:

2

6

22

The affordance prediction is fairly good (e.g. interdimensional portal), but it’s not perfect (e.g. soup can). That being said, I think this has potential for text-based games (@jaseweston, @mark_riedl), Nethack (@_rockt, @egrefen), and more importantly robotic manipulation!.

5

2

21

In addition to the codebase, @laurel_orr1 and I wrote up a blog post (with the rest of the Propulsion team!) describing a bit more about Mistral and our journey in more detail. Check it out here, and we'd love to hear your thoughts: [1/5].

We're excited to open-source Mistral 🚀 - a codebase for accessible large-scale LM training, built as part of Stanford's CRFM. We're releasing 10 GPT-2 Small & Medium models with different seeds & 600+ checkpoints per run! [1/4]

1

7

22

This is incredibly cool — I really really like this line of work on learning to quickly deploy language-aware robots to completely new environments. Amazing work!.

How can we train data-efficient robots that can respond to open-ended queries like “warm up my lunch” or “find a blue book”? Introducing CLIP-Field, a semantic neural field trained w/ NO human labels & only w/ web-data pretrained detectors, VLMs, and LLMs

1

5

19

I had a great time compiling this post. There’s some really exciting and compelling work coming out of Stanford in a lot of different areas. Very proud to call these people my peers!.

The International Conference on Machine Learning (ICML) 2020 is being hosted virtually this week. We’re excited to share all the work from SAIL that’s being presented, and you’ll find links to papers, videos and more in our latest blog post:.

0

6

21

This is the best kind of paper by my labmates @suneel_belkhale and @YuchenCui1 — it starts with a simple punchline (data quality matters for imitation learning) but really really drives home exactly what “good data” looks like. Definitely worth a read!.

In imitation learning (IL), we often focus on better algorithms, but what about improving the data? What does it mean for a dataset to be high quality?. Our work takes a first step towards formalizing and analyzing data quality. (1/5).

0

2

20

Thrilled to have @ybisk from @SCSatCMU at this week's @stanfordnlp Seminar (Thursday @ 10 AM PST - open to the public)! @ybisk's work in #RoboNLP has been truly inspirational - I can't wait to learn from him and get a taste of where the field is moving!

1

4

19

My incredible mother is talking with fellow professionals tomorrow about managing mental health in the midst of the India COVID Crisis on @Radiozindagisfo. This is an incredibly important discussion to be having, for those here and abroad. Please tune in if you can!

0

0

18

Really excited by this work from my incredible labmate @priyasun_! . Sketches are an intuitive and expressive way of specifying not just a goal, but also *how* to perform a task — can’t wait to see sketches + language + gestures in the context of rich, collaborative robotics!.

We can tell our robots what we want them to do, but language can be underspecified. Goal images are worth 1,000 words, but can be overspecified. Hand-drawn sketches are a happy medium for communicating goals to robots!. 🤖✏️Introducing RT-Sketch: 🧵1/11

1

1

18

Everyone needs a bit of @douwekiela in their life! Tune in to this week's Stanford NLP Seminar this Thursday at 10 AM PST (open to the public), where he'll talk about "Rethinking Benchmarking in AI" and Dynabench!

1

0

18

Grounding, embodiment, and interaction - really excited to see this, and can’t wait to explore these areas throughout the rest of my PhD!.

"You can't learn language from the radio." 📻. Why does NLP keep trying to?. In we argue that physical and social grounding are key because, no matter the architecture, text-only learning doesn't have access to what language is *about* and what it *does*.

1

0

18

Incredible to see LXMERT added to Transformers - it’s a clean and impressive implementation that’s really going to make building vision-and-language applications more accessible and widespread. Excited to see people adopt it!.

Amazing effort by @avalmendoz+@haotan5 & @huggingface @LysandreJik @qlhoest @Thom_wolf on LXMERT demo+backend! Comes with flexible dataset generation via HF/datasets for feat+box predn from bottomup-FRCNN w ultra-fast access; allows extension to other mmodal tasks by community 🤗.

0

2

18

Check out IDEFICS - an open vision-language model that can accept sequences of images and text, for use in tasks like visual dialogue, dense captioning, and more!. Demo & Models:

Introducing IDEFICS, the first open state-of-the-art visual language model at the 80B scale!. The model accepts arbitrary sequences of images and texts and produces text. A bit like a multimodal ChatGPT!. Blogpost: Playground:.

1

5

17

This is an incredible initiative! In addition, if you want to chat about grad school/applying, please feel free to DM/email me - I couldn’t have gotten into grad school without the support of older students and mentors, and I’d love to do what I can to help out!.

We’ve started a Student-Applicant Support program for underrepresented students. If you’re considering applying to the Computer Science PhD program at Stanford, we will do our best to give you one round of feedback on your application. Apply by October 31.

0

1

16

Very cool work presenting a brand new language-centric collaborative task pairing a human user and a bot situated in a grounded environment! Very thorough evaluation, including a bona fide human eval. Really exciting stuff - we need more tasks like this.

Upcoming in EMNLP: Executing Instructions in Situated Collaborative Interactions. New language collaboration environment and large dataset, modeling and learning methods, and a new evaluation protocol for sequential instructions.

0

5

16

Thank you so much @michellearning and @andrey_kurenkov for everything you’ve done for the @StanfordAILab Blog!. I learned so much from you both; really looking forward to continuing what you started with the amazing @nelsonfliu, @jmschreiber91, and @megha_byte!.

It's official! I am now an "alumni editor" of the @StanfordAILab blog! It was an amazing journey to have started the blog and led it as the co-editor-in-chief with @andrey_kurenkov, but even more amazing to see the new editorial board take over!.

0

1

16

Fantasy fans rejoice! Very excited that our paper introducing the LIGHT dialogue dataset was accepted at EMNLP! . Can't wait to see others build on the fantasy text adventure platform and develop new grounded agents capable of speaking and acting in the world.

Accepted at EMNLP! Built in ParlAI. Learning to Speak and Act in a Fantasy Text Adventure Game. @JackUrbs, Angela Fan, @siddkaramcheti, Saachi Jain, Samuel Humeau, Emily Dinan, @_rockt, @douwekiela, Arthur Szlam, @jaseweston

0

4

15

When faced with a socially ambiguous cleanup task (a half-complete Lego model, a Starbucks cup), what should a robot do?. Our approach – iterate an LLM "reasoner" with active perception/VQA: "move above the cup" --> "is it empty?" (yes) --> `cleanup(cup)`. See @MinaeKwon's 🧵👇.

How can 🤖s act in a socially appropriate manner without human specification?. Our 🤖s reason socially by actively gathering missing info in the real world. We release the MessySurfaces dataset to assess socially appropriate behavior. 🧵👇

0

3

15

Really grateful to have the chance to present our work at @HRI_Conference this week! Had so much fun in Stockholm - lots of great papers and new friends.

Takayuki Kanda has just announced the start of the Human-robot communication – 1 session 🗣 Enjoy! ✨ #hri2023 #hri

0

2

15

I’ve been incredibly honored to be an OpenPhil Fellow and part of the fellows community!. It’s a great group of people and a wonderful program, so I highly recommend current (and incoming) PhD students apply!.

Applications are open for the Open Phil AI Fellowship!. This program extends full support to a community of current & incoming PhD students, in any area of AI/ML, who are interested in making the long-term, large-scale impacts of AI a focus of their work.

0

1

15

Dilip and I met in a class. I was a lonely transfer student, and didn’t really know anyone. In a stroke of fate, @StefanieTellex helped pair us together. Years later, we’re PhD students at the same school, trade ideas all the time, and (COVID-permitting) get KBBQ once a quarter.

Academic love letters: A thread on how you met your closest collaborator, intellectual soulmate, favorite coauthor or other kindred spirit. Do tell.

2

0

15

It's a wonderful week! Really proud of my advisor @percyliang for being named an AI2050 fellow! Thrilled for you, and ever so grateful to be one of your students!

0

1

15

#LDOD was yesterday, and we had a blast! Thanks to everyone for coming out!. In case you missed it, congratulations to our outstanding paper - "Challenges and Opportunities in Offline Reinforcement Learning from Visual Observations.". Looking forward to next time!

Our #RSS2022 workshop on Learning from Diverse, Offline Data (#LDOD) is on Monday 6/27!. Amazing set of papers, incredible speakers (below), and a full panel moderated by @chelseabfinn & @DorsaSadigh! . Sneak Peek: 1-1 chats between students & speakers:

1

5

12

Very lucky to have @ebiyik_ as a labmate and friend. His work is insightful, thorough, and just plain cool!. He's also on the academic job market this year 🎉.

#HRIPioneers2022 Erdem Bıyık is working on “Learning from Humans for Adaptive Interaction”. Erdem’s website: Twitter: And check out our full list of participants on our website:

1

0

13

Incredibly excited to see this new paper at @corl_conf - scalable language-conditioned policy learning for manipulation using CLIP + Transporter networks. Also comes with a great suite of benchmark tasks!. Stoked to build off this in future work - congrats @mohito1905! #RoboNLP.

1

0

13

At 10:20 PDT, @laurel_orr1 and I will be talking at the Workshop for #FoundationModels about Mistral, as well as our journey towards transparent and accessible training. We hope to see you there - bring your questions! [2/4]

1

4

14

Incredible work by @tonyzzhao on low-cost, fine-grained bimanual teleoperation. This work is clean, open, and is a game changer for data collection and enabling new, complex tasks. Check out the demos — they’re the real deal.

Introducing ALOHA 🏖: 𝐀 𝐋ow-cost 𝐎pen-source 𝐇𝐀rdware System for Bimanual Teleoperation. After 8 months iterating @stanford and 2 months working with beta users, we are finally ready to release it!. Here is what ALOHA is capable of:

0

1

14

Excited to be at #ICML2021 this week! Catch @MinaeKwon's amazing talk at the "Reinforcement Learning 2" session tomorrow from 7 - 8 AM PDT (lots of other fantastic work too - don't miss it!). We'll also be at our poster from 8 - 11 AM PDT at Section C4! Let's chat negotiation!.

🎉 Incredibly thrilled to share our work "Targeted Data Acquisition for Evolving Negotiation Agents" to be presented at #ICML2021, led by my inspirational labmate @MinaeKwon, Mariano-Florentino Cuéllar, and @DorsaSadigh!. Reasons why I find this work exciting - a 🧵.

0

2

13

John is going to be an incredible advisor — apply apply apply!. (And if you’re a Columbia student, take all his classes too!).

I’m joining the Columbia Computer Science faculty as an assistant professor in fall 2025, and hiring my first students this upcoming cycle!!. There’s so much to understand and improve in neural systems that learn from language — come tackle this with me!

0

0

13

Fantastic work from my labmate @michiyasunaga around leveraging error messages to perform program repair in code generation/editing style tasks! . Learning from feedback is a pretty general principle - excited to see other applications of this in related work!.

Excited to share our work, DrRepair: "Graph-based, Self-Supervised Program Repair from Diagnostic Feedback"! . #ICML2020. When writing code, we spend a lot of time debugging. Can we use machine learning to automatically repair programs from errors? [1/5]

0

7

13

Amazing work from @LeoTronchon @HugoLaurencon @SanhEstPasMoi and others at HF on extending VLMs for *interleaved* images and text. Really cool to see the open-source multimodal instruct data (Cauldron), high-res image support, and a super efficient image encoding scheme!.

New multimodal model in town: Idefics2! . 💪 Strong 8B-parameters model: often on par with open 30B counterparts. 🔓Open license: Apache 2.0. 🚀 Strong improvement over Idefics1: +12 points on VQAv2, +30 points on TextVQA while having 10x fewer parameters. 📚 Better data:.

0

3

13

Join us as we scale Mistral and tackle research around responsibly training/understanding large-scale language models! And looking forward – multimodality: models for language + video, robotics, amongst others. Please share & DMs open for questions!

The Stanford Center for Research on Foundation Models (CRFM) is looking for a research engineer to join our development team! Interested in large-scale training / being immersed in an interdisciplinary research environment? Please apply!

1

3

13

Hugging Face is an incredible place to work, and I’ve been so lucky to learn from a diverse and kind group of researchers, engineers, and other interns. We’ve got some great stuff on the horizon; definitely apply!.

🥳 We are hiring researchers and research interns! Apply here: People with characteristics that are underrepresented in tech are especially encouraged to apply. We will also be having a residency program soon @HuggingFace, stay tuned! 🤗.

0

0

13

Very excited to be co-organizing the *2nd* Workshop on Learning from Diverse, Offline Data at ICRA this year!. Submissions due March 23rd – super excited to see all the amazing work in this area for the second year in a row!.

Announcing the 2nd Workshop on Learning from Diverse, Offline Data (L-DOD) at @ICRA2023 in London on June 2!. There has been tremendous progress in scaling AI systems with data - can we apply this paradigm to generalizable robotic systems?.

0

1

12

Very appreciative of the thoughtful summary of our work! Thanks for the highlight @MosaicML - stoked to follow your progress towards more efficient ML!.

New year, new summaries! Let's look at dataset quality and its impact on sample efficiency. This paper studies the ineffectiveness of active learning on visual question answering (VQA) datasets and points to *collective outliers* as the culprit. (1/8)

0

0

12

Question for @ReviewAcl: on submitting reviews, I can see the full names of my fellow reviewers. Is this intentional? (I don't think this was the case pre-OpenReview.) It could potentially bias the discussion period (junior vs. senior voices).

1

0

11

Big thanks to everyone who helped us build Mistral -- from @Thom_Wolf & @StasBekman who helped us navigate @huggingface Transformers, to @carey_phelps for providing support with @weights_biases. Also huge shoutout to @BlancheMinerva from #EleutherAI for providing feedback! [3/5].

1

0

11

For almost two years, I’ve been incredibly lucky to learn from the @AiEleuther community — from sharing tips around training LLMs, to discussing open research problems. Huge congrats to my friend @BlancheMinerva and the entire community! Can’t wait to see what’s up next!.

Over the past two and a half years, EleutherAI has grown from a group of hackers on Discord to a thriving open science research community. Today, we are excited to announce the next step in our evolution: the formation of a non-profit research institute.

0

0

11

Incredible work on automatically learning perturbations/augmentations (views) for contrastive learning without domain expertise!. Congratulations @AlexTamkin @mike_h_wu and Noah Goodman!.

New work on learning views for contrastive learning!. Our Viewmaker Networks learn perturbations for image, speech, and sensor data, matching or outperforming human-designed views. w/ @mike_h_wu and Noah Goodman at @stanfordnlp and @stanfordailab. ⬇️ 1/

1

1

11

These GPT-3 results have me really excited about exploring its potential for spatial reasoning and grounded language understanding, including applications for robot navigation given textual representations of state. @gdb - would love an invite!.

0

0

11

@__apf__ Hyperion by Dan Simmons is a revelation (even just the first novel)! If you're willing to delve into science fantasy, the Book of the New Sun series by Gene Wolfe (1980-1983) is phenomenal. I also really enjoyed Permutation City by Greg Egan (1995) - but might be a bit intense.

1

1

7

Finally, a big thanks to my advisors @percyliang and @DorsaSadigh for their support and faith in me! I'm really excited to see what the next year looks like, both in terms of research and open-source. And robots! Don't forget the robots!

1

0

11

Phenomenal work by my amazing labmates. Really excited to see this paper go public!.

🍔🍟"In-N-Out: Pre-Training and Self-Training using Auxiliary Information for Out-of-Distribution Robustness". Real-world tasks (crop yield prediction from satellites) are often label-scarce. Only some countries have labels - how do we generalize globally?

0

1

11

Ranjay is not only a phenomenal Computer Vision / HCI researcher, but an incredible and supportive mentor. I'm so grateful to be learning from him, and I know that he'll be a strong addition to any department out there. Best of luck @RanjayKrishna!.

🎓 I'm on the faculty job market this year! Please send me a message if your department (or one you know) is interested in a Computer Vision / HCI researcher who designs models inspired by human perception and social interaction!. My application materials:

0

1

10