Sang Michael Xie

@sangmichaelxie

Followers: 3K | Following: 2K | Media: 56 | Statuses: 366

Research Scientist at Meta GenAI / LLaMA. AI + ML + NLP + data. Prev: CS PhD @StanfordAILab @StanfordNLP @Stanford, @GoogleAI Brain/DeepMind

Stanford, CA

Joined May 2019

Releasing an open-source PyTorch implementation of DoReMi! The pretraining data mixture is a secret sauce of LLM training. Optimizing your data mixture for robust learning with DoReMi can reduce training time by 2-3x. Train smarter, not longer!

Should LMs train on more books, news, or web data? Introducing DoReMi🎶, which optimizes the data mixture with a small 280M model. Our data mixture makes 8B Pile models train 2.6x faster, get +6.5% few-shot acc, and get lower pplx on *all* domains! 🧵⬇️

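A minimal sketch of the domain-reweighting step, assuming our reading of the DoReMi paper: a small proxy model is trained with Group DRO, and at each step the per-domain excess loss (proxy loss minus reference-model loss, clipped at 0) drives an exponentiated-gradient update of the domain weights, which are then smoothed toward uniform. The final mixture is the average of the weights over all steps. Model training is left out entirely, per-domain losses are passed in as plain numbers, and the names `doremi_update` and `average_weights` and the defaults for `eta` and `c` are hypothetical, not taken from the released PyTorch code.

```python
import math
from typing import List, Sequence


def doremi_update(
    weights: Sequence[float],
    proxy_losses: Sequence[float],
    reference_losses: Sequence[float],
    eta: float = 1.0,
    c: float = 1e-3,
) -> List[float]:
    """One DoReMi-style domain-weight step (hypothetical sketch, not the released code)."""
    k = len(weights)
    # Per-domain excess loss, clipped at 0: how far the proxy lags the reference model.
    excess = [max(p - r, 0.0) for p, r in zip(proxy_losses, reference_losses)]
    # Exponentiated-gradient (multiplicative-weights) ascent on the excess loss.
    unnorm = [w * math.exp(eta * e) for w, e in zip(weights, excess)]
    total = sum(unnorm)
    # Renormalize, then smooth toward uniform so no domain weight hits zero.
    return [(1.0 - c) * u / total + c / k for u in unnorm]


def average_weights(history: Sequence[Sequence[float]]) -> List[float]:
    """Final mixture: the domain weights averaged over all update steps."""
    n = len(history)
    return [sum(step[i] for step in history) / n for i in range(len(history[0]))]


if __name__ == "__main__":
    # Three toy domains (say books, news, web) with made-up per-domain losses.
    w = [1.0 / 3] * 3
    history = []
    for _ in range(5):
        w = doremi_update(w, proxy_losses=[2.0, 2.5, 3.0], reference_losses=[2.0, 2.2, 2.4])
        history.append(w)
    print([round(x, 3) for x in average_weights(history)])
```

Domains where the proxy lags the reference the most get upweighted; the smoothing term `c` keeps every domain's weight at least `c / k`.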

How can large language models (LMs) do tasks even when given random labels? While traditional supervised learning would fail, viewing in-context learning (ICL) as Bayesian inference explains how this can work! Blog post with @sewon__min:

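The Bayesian view can be illustrated with a deliberately tiny toy of our own, not the model from the papers: the LM is treated as inferring a latent concept from the prompt, each hypothetical concept defines a distribution over inputs and an input-to-label map, and the posterior over concepts is the prior times the likelihood of the demonstrations. With random labels, the label terms in this example happen to cancel between concepts, but the input distribution still carries evidence, so the posterior still concentrates on the right concept. The concept names, probabilities, and `LABEL_NOISE` value are all made up.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical latent concepts: each has an input distribution and an input -> label map.
CONCEPTS: Dict[str, dict] = {
    "sentiment": {
        "inputs": {"great": 0.45, "awful": 0.45, "goal": 0.05, "vote": 0.05},
        "label": {"great": 1, "awful": 0, "goal": 1, "vote": 0},
    },
    "topic": {
        "inputs": {"great": 0.05, "awful": 0.05, "goal": 0.45, "vote": 0.45},
        "label": {"great": 0, "awful": 1, "goal": 1, "vote": 0},
    },
}
LABEL_NOISE = 0.1  # assumed chance that a demonstration label disagrees with the concept


def posterior(demos: List[Tuple[str, int]], prior: Dict[str, float]) -> Dict[str, float]:
    """p(concept | demos), proportional to p(concept) * prod_i p(x_i | concept) * p(y_i | x_i, concept)."""
    log_post = {}
    for name, concept in CONCEPTS.items():
        lp = math.log(prior[name])
        for x, y in demos:
            lp += math.log(concept["inputs"][x])  # evidence from the inputs alone
            agree = concept["label"][x] == y
            lp += math.log(1.0 - LABEL_NOISE if agree else LABEL_NOISE)  # evidence from the labels
        log_post[name] = lp
    # Normalize in log space for numerical stability.
    m = max(log_post.values())
    z = sum(math.exp(v - m) for v in log_post.values())
    return {name: math.exp(v - m) / z for name, v in log_post.items()}


if __name__ == "__main__":
    uniform = {"sentiment": 0.5, "topic": 0.5}
    # Sentiment-style inputs paired with random labels.
    demos = [("great", 0), ("awful", 0), ("great", 1), ("awful", 1)]
    print(round(posterior(demos, uniform)["sentiment"], 4))  # prints 0.9998
```

Here both concepts explain the shuffled labels equally badly, so the input words alone decide the posterior (a 9^4 = 6561 : 1 likelihood ratio in favor of "sentiment").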

I’m presenting 2 papers at #NeurIPS2023 on data-centric ML for large language models: DSIR (targeted data selection) on Wed Dec 13 @ 5pm, and DoReMi (pretraining data mixtures) on Thu Dec 14 @ 10:45am. Excited to chat about large language models, data, pretraining/adaptation, and more!

Fine-tuning destroys some pre-trained info. Freezing parameters *preserves* it and *simplifies* the learning problem -> better in-distribution (ID) and out-of-distribution (OOD) accuracy. Excited to present Composed Fine-Tuning as a long talk at #ICML2021! Paper: Talk:

Simplifying Models with Unlabeled Output Data. Joint w/ @tengyuma @percyliang. Can “unlabeled” outputs help in semi-supervised learning? In problems with rich output spaces like code, images, or molecules, unlabeled outputs help with modeling valid outputs.

Summary of #foundationmodels for robustness to distribution shifts 🧵: Robustness problem: real-world ML systems need to be robust to distribution shifts (the training data distribution differs from the test distribution). Report:

Excited to work and learn with @AdamsYu @hieupham789 @quocleix over the summer at Google Brain in Mountain View! Hope to make some nice improvements in pretraining. Now if only I could figure out how to do all these onboarding tasks…

GPT-3 explaining our abstract on the tradeoff between robustness and accuracy to 2-year-olds: "Sometimes when you train a machine learning model, it gets better at handling bad situations, but it gets worse at handling good situations." 🤣😳 Cred: Aditi Raghunathan & @siddkaramcheti.

How can we mitigate the tradeoff between adversarial robustness and accuracy? w/ Aditi Raghunathan, Fanny Yang, John Duchi, @percyliang. Come chat with us at #ICML2020: 11am-12pm PST and 10-11pm PST. Video: Paper:

2

11

46

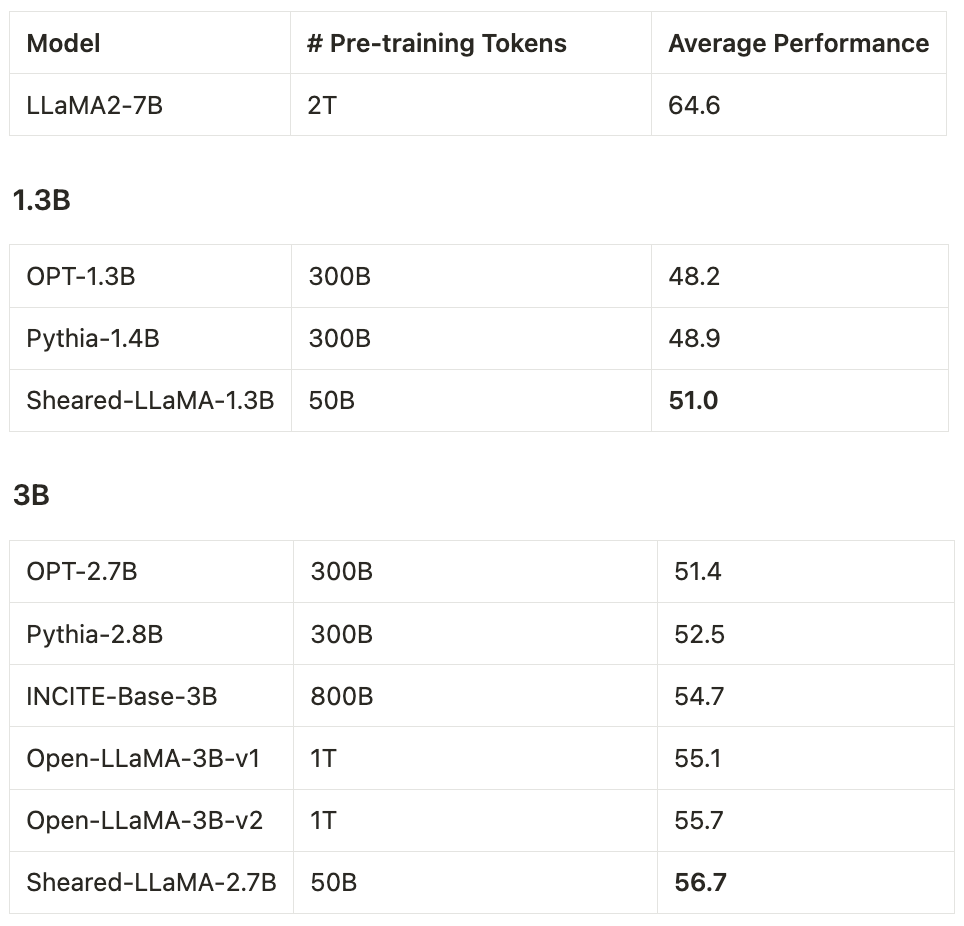

ShearedLLaMA uses DoReMi with a scaling law as the reference model to optimize the data mixture on RedPajama -> strong results at 1.3B and 3B!

We release the strongest public 1.3B and 3B models so far – the ShearedLLaMA series. Structured pruning from a large model to a small one is far more cost-effective (only 3%!) than pre-training them from scratch! Check out our paper and models at: [1/n]

Had a great time talking to prospective Stanford grad students at #Tapia2020! GL to everyone! If you’re applying to the Stanford CS PhD program this fall: for an app review with a current student. Priority for students from underrepresented minorities.

Excited to co-organize this ICLR 2024 workshop! I think better data will be crucial for the next big advances in foundation models. The submission date is Feb 3 - details at

Excited to announce the ICLR 2024 Workshop on Data Problems for Foundation Models (DPFM)! Topics of Interest: data quality, generation, efficiency, alignment, AI safety, ethics, copyright, and more. Paper Submission Date: Feb 3, 2024 | Workshop Date: May 11, 2024 (Vienna and

I will be presenting "Reparameterizable Subset Sampling via Continuous Relaxations" at @IJCAIconf tomorrow, joint work with @ermonste! It enables end-to-end training of models that use subset sampling, with applications to model explanations, deep kNN, and a new way to do t-SNE.

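A rough sketch of the relaxation, assuming our reading of the paper: add Gumbel noise to the log-weights (taking the top-k of the perturbed keys samples a weighted subset without replacement), then replace the hard top-k with k successive softmaxes, each of which softly masks out what the previous one selected; the sum of the k softmax outputs is a relaxed k-hot vector. This plain-Python forward pass only illustrates the computation; end-to-end training would run it in an autodiff framework so gradients flow through the softmaxes. The function names, the temperature `t`, and the `eps` guard are our own choices.

```python
import math
import random
from typing import List, Optional


def softmax(xs: List[float], t: float) -> List[float]:
    """Temperature softmax, shifted by the max for numerical stability."""
    m = max(xs)
    exps = [math.exp((x - m) / t) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def relaxed_topk(
    log_weights: List[float],
    k: int,
    t: float = 0.1,
    rng: Optional[random.Random] = None,
    eps: float = 1e-20,
) -> List[float]:
    """Relaxed k-hot sample of a weighted subset (illustrative forward pass only)."""
    if rng is None:
        rng = random.Random()
    # Gumbel-perturbed keys: log w_i + g_i with g_i = -log(-log(u_i)), u_i ~ Uniform(0, 1).
    alpha = [lw - math.log(-math.log(rng.random() + eps) + eps) for lw in log_weights]
    khot = [0.0] * len(alpha)
    for _ in range(k):
        a = softmax(alpha, t)  # soft version of "pick the current argmax"
        khot = [h + ai for h, ai in zip(khot, a)]
        # Softly mask what was just picked so the next softmax favors other items.
        alpha = [al + math.log(max(1.0 - ai, eps)) for al, ai in zip(alpha, a)]
    return khot


if __name__ == "__main__":
    rng = random.Random(0)
    weights = [0.4, 0.3, 0.2, 0.1]
    print(relaxed_topk([math.log(w) for w in weights], k=2, rng=rng))
```

The output always sums to k; a lower `t` pushes it toward an exact k-hot vector, while a higher `t` gives smoother gradients.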

WILDS collects real-world distribution shifts to benchmark robust models! I’m particularly excited about the remote sensing datasets (PovertyMap and FMoW): spatiotemporal shift is a real problem, and space/time shifts compound upon one another. Led by @PangWeiKoh @shiorisagawa.

We're excited to announce WILDS, a benchmark of in-the-wild distribution shifts with 7 datasets across diverse data modalities and real-world applications. Website: Paper: Github: Thread below. (1/12)

0

2

27

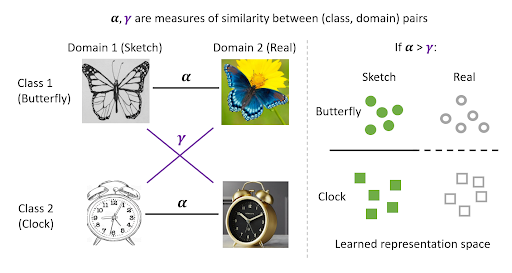

Pretraining is surprisingly powerful for domain adaptation, and doesn’t rely on domain invariance! The magic comes from properties of the data augmentations used, leading to disentangled class and domain info. With this, finetuning on class labels allows for ignoring the domain.

Pretraining is ≈SoTA for domain adaptation: just do contrastive learning on *all* unlabeled data + finetune on source labels. Features are NOT domain-invariant, but disentangle class & domain info to enable transfer. Theory & exps:

Interestingly, pretraining on unlabeled source/target data + finetuning doesn’t improve much over just supervised learning on the source domain in iWildCam-WILDS. Correspondingly, the connectivity conditions for the success of contrastive pretraining for UDA also fail!

What's the best way to use unlabeled target data for unsupervised domain adaptation (UDA)? Introducing Connect Later: pretrain on unlabeled data + apply *targeted augmentations* designed for the dist shift during fine-tuning ➡️ SoTA UDA results! 🧵👇

1

5

26

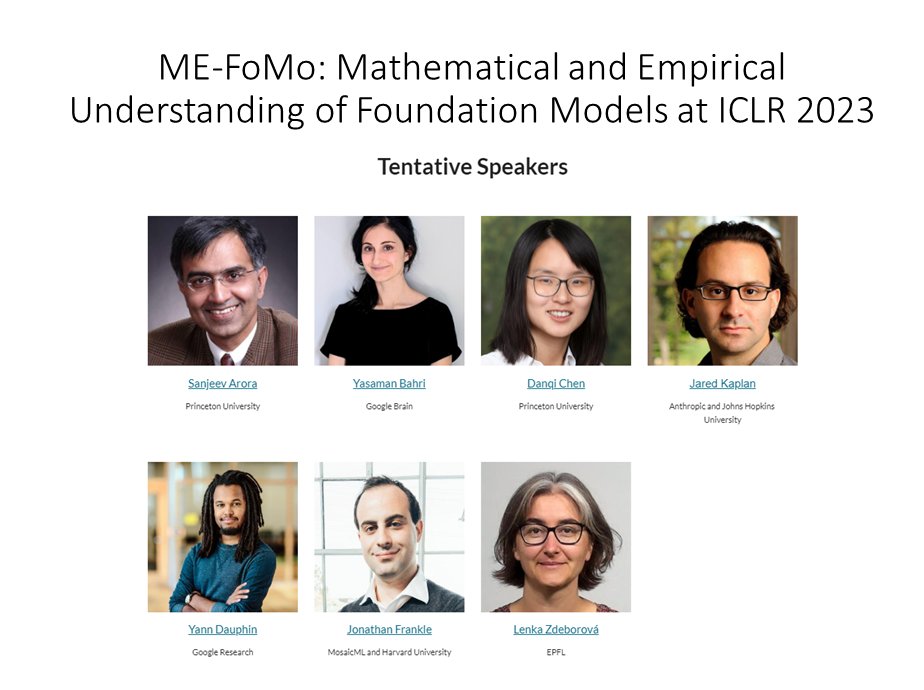

We’re organizing a workshop on understanding foundation models at #ICLR2023! We welcome any unpublished or ongoing work on empirical or theoretical understanding, as well as highlighting phenomena like scaling laws or emergence. Deadline is Feb 3, 2023.

Foundation models (BERT, DALL-E 2, ChatGPT) have led to a paradigm shift in ML, but are poorly understood. Announcing ME-FoMo, an #ICLR2023 workshop on understanding foundation models. Deadline: Feb 3, 2023. Topics: pretraining, transfer, scaling laws, etc.

Some of the most powerful abilities of language models such as GPT-3 are their intuitive language interface and their few-shot learning abilities. These abilities can make it easier to design the rewards for an RL agent, with just a text description or a few demonstrations.

Can we make it easier to specify objectives to an RL agent? We allow users to specify their objectives more cheaply and intuitively using language! 🧵👇 Paper: Code: Talk:

Check out our #ICLR2021 poster on In-N-Out at 5-7pm PST tomorrow (Wed May 5): we show how to improve OOD performance by *leveraging* spurious correlations and unlabeled data, and we test it on real-world remote sensing tasks!

This appears in #ICLR2021. Please check out our paper, videos, poster, code, etc.! ICLR poster link: ArXiv: Codalab: Github:

Very cool paper on high-dimensional calibration / online learning by my brother Stephan @stephofx, who is applying to PhD programs this year!

Super excited about a new paper (with applications to conformal prediction and large action space games) that asks what properties online predictions should have such that acting on them gives good results. Calibration would be nice, but is too hard in high dimensions. But.

Our foundation models (GPT-3, BERT) report has a section on how they improve robustness to distribution shifts, what they may not solve, and future directions, w/ @tatsu_hashimoto @ananyaku @rtaori13 @shiorisagawa @PangWeiKoh and TonyL! Thx to @percyliang for leading the effort!

NEW: This comprehensive report investigates foundation models (e.g. BERT, GPT-3), which are engendering a paradigm shift in AI. 100+ scholars across 10 departments at Stanford scrutinize their capabilities, applications, and societal consequences.

Great effort led by @AlbalakAlon to corral the wild west of LM data selection! A meta-issue: how do we make data work (esp. for pretraining) more accessible? Not everyone can train 7B LMs, but a first bar is to show, at smaller scales, that the benefits don't shrink with scale.

{UCSB|AI2|UW|Stanford|MIT|UofT|Vector|Contextual AI} present a survey on🔎Data Selection for LLMs🔍. Training data is a closely guarded secret in industry🤫with this work we narrow the knowledge gap, advocating for open, responsible, collaborative progress.

Adversarial Training Can Hurt Generalization, even when there is no conflict with infinite data and the problem is convex. With @Aditi_Raghunathan and @Fanny_Yang, at the #icml2019 workshop on Identifying and Understanding Deep Learning Phenomena.

This course assignment was developed with the help of @percyliang and the rest of the course instructors @tatsu_hashimoto, Chris Ré @HazyResearch, and @RishiBommasani. Class lecture materials are here:

The blog post is based on two papers: "An Explanation of In-context Learning as Implicit Bayesian Inference" and "Rethinking the Role of Demonstrations: What Makes In-Context Learning Work?" by @sewon__min.

Joint w/ @ananyaku, @rmjones96, @___fereshte___, @tengyuma, @percyliang. We also presented this work at the Climate Change AI workshop at NeurIPS; check it out!

WILDS includes some nice examples of real-world distribution shifts, including spatial and temporal shifts in satellite image tasks: poverty (PovertyMap) and building/land use prediction (FMoW). Hope these help spur progress in ML for sustainability + social good! #ICML2021

We’re presenting WILDS, a benchmark of in-the-wild distribution shifts, as a long talk at ICML! Check out @shiorisagawa's talk + our poster session from 9-11pm Pacific on Thurs. Talk: Poster: Web: 🧵(1/n)

Blog on @StanfordCRFM for more details: Thanks to @togethercompute for providing compute resources for testing the codebase!

Check out the GitHub and paper for more details: GitHub: Paper: Thanks to code contributions and tests by @tianxie233 from @SFResearch!

This was work done during an internship at @GoogleAI, jointly with @quocleix, @percyliang, @tengyuma, @hyhieu226, @AdamsYu, @yifenglou, @XuanyiDong, @Hanxiao_6. Thanks also to @edchi for the support on the Brain team!

@PreetumNakkiran Agreed it's not "standard" learning, but labels still matter: there is still a small drop with random labels in Sewon's paper, and ("Pushing the Bounds.") shows that if you remap the labels, the model will learn the remapping.

Please fill out or help circulate this form for those interested in reviewing for our ICLR workshop on understanding foundation models!

Call for reviewers for our #ICLR2023 workshop on Mathematical and Empirical Understanding of Foundation Models. Fill out this form if you are interested, and we will aim to get back to you ASAP. Paper deadline: 3 Feb. Tentative reviewing period: 10-24 Feb.

CubeChat is really cool! Your webcam video is on the side of a cube and you can move and jump around in a 3D world. You can even share your screen and it looks like a drive-in movie theater for cubes 😄

Our team just launched CubeChat! 3D video chat with spatial audio--can be multiple conversations going on at once in a room! Please DM me if you are interested in trying it out.

DSIR log-importance weights are also included in RedPajamav2 from @togethercompute as quality scores!

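For readers unfamiliar with DSIR, here is a simplified sketch of a log-importance weight, assuming the general recipe from the DSIR paper: fit bag-of-hashed-n-gram distributions on target data and on raw data, then score a raw document x by log p_target(x) - log p_raw(x). Our version hashes unigrams and bigrams with `zlib.crc32`, uses add-one smoothing, and runs on toy corpora; the bucket count and all helper names are assumptions, and this is not the code behind the RedPajamav2 scores.

```python
import math
import zlib
from collections import Counter
from typing import List

NUM_BUCKETS = 2 ** 16  # assumed bucket count for the hashed n-gram features


def hashed_ngrams(text: str) -> List[int]:
    """Hash the unigrams and bigrams of a lowercased, whitespace-tokenized text into buckets."""
    toks = text.lower().split()
    grams = toks + [f"{a} {b}" for a, b in zip(toks, toks[1:])]
    return [zlib.crc32(g.encode("utf-8")) % NUM_BUCKETS for g in grams]


def fit_bucket_logprobs(corpus: List[str]) -> List[float]:
    """Bag-of-hashed-n-grams model: add-one-smoothed log-probability of each bucket."""
    counts = Counter(b for doc in corpus for b in hashed_ngrams(doc))
    total = sum(counts.values()) + NUM_BUCKETS
    return [math.log((counts[i] + 1) / total) for i in range(NUM_BUCKETS)]


def log_importance_weight(doc: str, target_lp: List[float], raw_lp: List[float]) -> float:
    """log p_target(doc) - log p_raw(doc), summed over the doc's hashed n-grams."""
    return sum(target_lp[b] - raw_lp[b] for b in hashed_ngrams(doc))


if __name__ == "__main__":
    target = fit_bucket_logprobs(["the theorem follows from the lemma", "we prove the lemma by induction"])
    raw = fit_bucket_logprobs(
        ["buy cheap shoes online now", "the theorem follows from the lemma", "click here for cheap deals"]
    )
    for doc in ["we prove the theorem", "buy cheap deals now"]:
        print(doc, round(log_importance_weight(doc, target, raw), 2))
```

Documents whose n-grams look more like the target corpus than the raw corpus get higher scores, which is what makes the weight usable as a quality signal.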

To become a 10x researcher, simply commit to 10x more deadlines: during deadlines you can do in a day what would normally take you 2 weeks 💪

10x researchers. Professors, if you ever come across this rare breed of researchers, grab them. If you have a 10x researcher as part of your lab, you increase the odds of winning a Turing award significantly. OK, here is a tough question. How do you spot a 10x researcher?

Please see Sec 4.8 of the report for more details! Thanks to @tatsu_hashimoto @ananyaku @rtaori13 @shiorisagawa @PangWeiKoh and TonyL for the writing efforts, and to @percyliang and @RishiBommasani for organizing!

@andersonbcdefg @Google @GoogleAI @Stanford @StanfordAILab @stanfordnlp @quocleix @percyliang @AdamsYu @hyhieu226 @XuanyiDong We could probably handle hundreds of domains with a large enough batch size, but handling a large number of domains well is a next step!

In multi-task linear regression, we show pre-training provably improves robustness to *arbitrary* covariate shift and provides complementary gains with self-training. This could provide theoretical explanations for recent empirical works by @DanHendrycks @barret_zoph @quocleix.

Many thanks to collaborators and colleagues who shaped this post! @LukeZettlemoyer @percyliang @tengyuma @megha_byte @RishiBommasani @gabriel_ilharco @wittgen_ball @ananyaku @OfirPress @yasaman_razeghi @mzhangio
