Barret Zoph

@barret_zoph

Followers: 19K
Following: 1K
Media: 43
Statuses: 254

VP Research (Post-Training) @openai. Past: Research Scientist at Google Brain.

San Francisco, CA

Joined November 2016

Can simply copying and pasting objects from one image to another be used to create more data to improve state-of-the-art instance segmentation? Yes! With Copy&Paste, we achieve 57.3 box AP and 49.1 mask AP on COCO. This is SoTA wrt @paperswithcode.
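The core copy-and-paste idea in the tweet above can be sketched in a few lines. This is a minimal illustration, assuming images are small 2-D grids of pixel values and each object is described by a binary mask of the same size; `copy_paste` is a hypothetical helper name. The published method also applies random scale jittering before pasting, which this sketch leaves out; it only shows the paste step and the mask update for occluded objects.

```python
from typing import List, Tuple

Grid = List[List[int]]


def copy_paste(src_img: Grid, src_mask: Grid, dst_img: Grid,
               dst_masks: List[Grid]) -> Tuple[Grid, List[Grid]]:
    """Paste one object (pixels where src_mask == 1) from src_img onto dst_img.

    Assumption: all grids share the same height and width.
    """
    h, w = len(dst_img), len(dst_img[0])
    # Copy the destination image, then overwrite it wherever the source mask is set.
    out_img = [row[:] for row in dst_img]
    for y in range(h):
        for x in range(w):
            if src_mask[y][x]:
                out_img[y][x] = src_img[y][x]
    # The pasted object sits on top, so remove covered pixels from existing masks.
    updated = [
        [[m[y][x] * (1 - src_mask[y][x]) for x in range(w)] for y in range(h)]
        for m in dst_masks
    ]
    # Drop objects that became fully occluded, then add the pasted object's mask.
    kept = [m for m in updated if any(any(row) for row in m)]
    kept.append([row[:] for row in src_mask])
    return out_img, kept
```

The new image and the updated annotations come out together, which is what lets pasted images be used directly as extra training data.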

Super excited this is rolling out! Real time speech to speech will be a powerful feature -- I am very bullish on multi-modal being a core component of AI products. This was a great collaboration with post-training (h/t to @kirillov_a_n & @shuchaobi + team on post-training) and.

Advanced Voice is rolling out to all Plus and Team users in the ChatGPT app over the course of the week. While you’ve been patiently waiting, we’ve added Custom Instructions, Memory, five new voices, and improved accents. It can also say “Sorry I’m late” in over 50 languages.

Great video summary of some of my recent work! Thanks @ykilcher!

A bit late to the party, but 💃NEW VIDEO🕺 on Switch Transformers by @GoogleAI. Hard Routing, selective dropout, mixed precision & more to achieve a 🔥ONE TRILLION parameters🔥 language model. Watch to learn how it's done 🧙💪 @LiamFedus @barret_zoph
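"Hard routing" here refers to top-1 routing: each token is sent to exactly one expert, chosen by a learned router. The sketch below is a simplified, pure-Python illustration under stated assumptions: the router is a dot product per expert, the chosen expert's output is scaled by its router probability, and tokens that arrive after an expert reaches its `capacity` are dropped (given a zero output here, so a surrounding residual connection would carry them through). `switch_route` and its arguments are hypothetical names, not an API from the Switch Transformer codebase.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def switch_route(tokens: Sequence[Vector],
                 router_weights: Sequence[Vector],
                 experts: Sequence[Callable[[Vector], Vector]],
                 capacity: int) -> Tuple[List[Vector], List[int]]:
    """Top-1 routing: each token goes to the single highest-probability expert."""
    load = [0] * len(experts)
    outputs: List[Vector] = []
    for tok in tokens:
        logits = [sum(t * w for t, w in zip(tok, wv)) for wv in router_weights]
        probs = softmax(logits)
        e = max(range(len(probs)), key=probs.__getitem__)
        if load[e] >= capacity:
            # Expert is full: the token skips expert computation entirely.
            outputs.append([0.0] * len(tok))
            continue
        load[e] += 1
        # Gate the chosen expert's output by its router probability.
        outputs.append([probs[e] * y for y in experts[e](tok)])
    return outputs, load
```

Because only one expert runs per token, compute per token stays roughly constant as more experts (and parameters) are added, which is the point of the sparse design.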

Pleasure working with you -- learned quite a lot! Excited for what you do next.

Hi everyone yes, I left OpenAI yesterday. First of all nothing "happened" and it’s not a result of any particular event, issue or drama (but please keep the conspiracy theories coming as they are highly entertaining :)). Actually, being at OpenAI over the last ~year has been.

@jacobandreas @jacobaustin132 @_jasonwei Yes I have also found this for math. If you append "I am a math tutor" it starts to answer with higher accuracy.

Yes --- I think spending more time thinking about what to work on vs actually working on the thing is hugely important.

The best meta-advice I've gotten is from @barret_zoph. It took me a year to begin to understand it. It went something like: Notice that many researchers work hard. Yet some are far more successful. This means the project you choose defines the upper bound for your success.

Exciting to see sparse MoE models being 10x more calibrated than their dense LM counterparts. Better model calibration is a key research direction for better understanding what models do vs. don't know.

Overall, sparse models perform as well as dense models which use ~2x more inference cost, but they are as well calibrated as dense models using ~10x more inference compute.
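The tweets do not say which calibration metric was used. One common choice is expected calibration error (ECE): group predictions into confidence bins, and average the gap between mean confidence and accuracy in each bin, weighted by the bin's share of predictions. The sketch below assumes equal-width bins; `expected_calibration_error` is a hypothetical helper name.

```python
from typing import List, Sequence, Tuple


def expected_calibration_error(confidences: Sequence[float],
                               correct: Sequence[bool],
                               n_bins: int = 10) -> float:
    """ECE with equal-width confidence bins over [0, 1]."""
    n = len(confidences)
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for c, ok in zip(confidences, correct):
        # Clamp so a confidence of exactly 1.0 lands in the last bin.
        idx = min(int(c * n_bins), n_bins - 1)
        bins[idx].append((c, ok))
    ece = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(1 for _, ok in b if ok) / len(b)
        # Weight each bin's |confidence - accuracy| gap by its share of samples.
        ece += len(b) / n * abs(avg_conf - accuracy)
    return ece
```

A model that says 75% and is right 75% of the time scores 0; a model that says 95% but is right half the time scores 0.45.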

Nice work from @IrwanBello on his paper “LambdaNetworks: Modeling Long-Range Interactions without Attention”. An interesting scalable alternative to self-attention with strong empirical results in computer vision! Link:

Yes +1. I remember studying parts of the Feynman lectures which showed me how much more clear my thought process could be. When reading his description of simple algebra and complex numbers I thought "wow I really am not thinking clearly enough":

Looking back, my most valuable college classes were physics, but for general problem solving intuitions alone:
- modeling systems with increasingly more complex terms
- extrapolating variables to check behaviors at limits
- pursuit of the simplest most powerful solutions

AI progress has continually exceeded my expectations since I first started working in the space in 2015. The saying that people overestimate what they can do in a short amount of time and underestimate what can be achieved in longer periods of time definitely resonates w/ me.

10 yrs ago @karpathy wrote a blog post on the outlook of AI: in which he describes how difficult it would be for an AI to understand a given photo, concluding "we are very, very far and this depresses me." Today, our Flamingo steps up to the challenge.

Very excited to be able to release these sparse checkpoints to the research community!

Today we're releasing all Switch Transformer models in T5X/JAX, including the 1.6T param Switch-C and the 395B param Switch-XXL models. Pleased to have these open-sourced! All thanks to the efforts of James Lee-Thorp, @ada_rob, and @hwchung27.

It was a pleasure to be part of this effort! Very bullish on the impact this will have for the future of LLMs. Also very impressed with the leadership for this project --- coordinating all of this to happen is nothing short of incredible!

After 2 years of work by 442 contributors across 132 institutions, I am thrilled to announce that the paper is now live: BIG-bench consists of 204 diverse tasks to measure and extrapolate the capabilities of large language models.

1

3

25

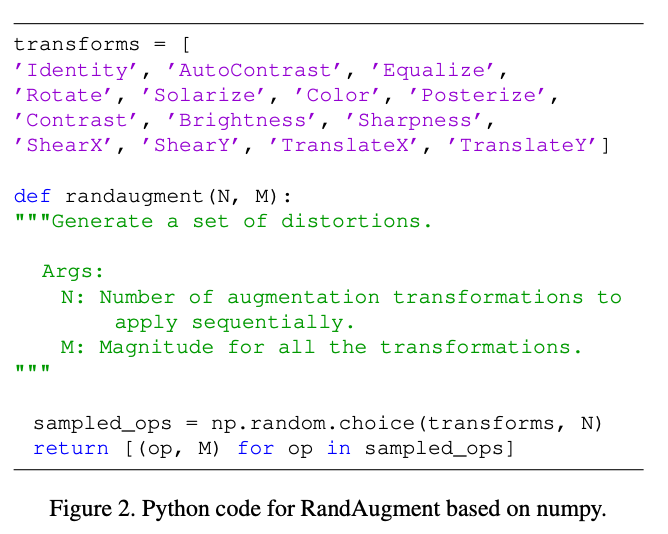

This is a great description of RandAugment! Thanks so much.

This video explains the new RandAugment AutoML Data Augmentation algorithm from @GoogleAI, improving on previous techniques (AutoAugment/PBA) on ImageNet and dramatically reducing the search space, making AutoML for Data Aug much easier! #100DaysOfMLCode

Nice paper showing the power of simple scaling and training methods for video recognition! Follows the line of "RS" research I have done with some of these collaborators for Image Classification and Object Detection.

Wondering how simple 3D-ResNets perform on video recognition given all the recent architecture craze?. In Revisiting 3D ResNets for Video Recognition, we study the impact of improved training and scaling methods on 3D ResNets.

Really fun chatting! Thanks for having us on.

New interview with Barret Zoph (@barret_zoph) and William Fedus (@LiamFedus) of Google Brain on Sparse Expert Models. We talk about Switch Transformers, GLaM, information routing, distributed systems, and how to scale to TRILLIONS of parameters. Watch now:

To find these interesting prompts, should we be looking at the pre-training data? Is "step by step" mentioned most frequently in documents when an explanation comes next? Automatic prompt discovery from inspecting the pre-training data feels promising.

Big language models can generate their own chain of thought, even without few-shot exemplars. Just add "Let's think step by step". Look me in the eye and tell me you don't like big language models.

Wow, that is a very strong ImageNet result! Cool to see further progress being made in semi-supervised methods for computer vision!

Some nice improvement on ImageNet: 90% top-1 accuracy has been achieved :-). This result is possible by using Meta Pseudo Labels, a semi-supervised learning method, to train EfficientNet-L2. More details here:

Excited to be giving it! Thanks for the invite.

📢 Next Wed at 5 pm, we’ll have @barret_zoph from Google Brain, who will talk about the use of sparsity for large Transformer models: "Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity". Zoom info: ai-info@ku.edu.tr or just DM!

Thanks @jeremiecharris for having me on your podcast! Super fun chatting about mixture-of-expert models and how they fit into the current large language model landscape. Podcast:

Yes this is a very important principle to keep in mind --- even when doing a single research project. It's often hard to find the right experimentation scale such that the "smaller" scale ideas have a higher probability of working at a "larger scale".

Just making sure everyone read “The Bitter Lesson”, as it is one of the best compact pieces of insight into nature of progress in AI. Good habit to keep checking ideas on whether they pass the bitter lesson gut check

Fantastic video on some of our recent work! Really great job @CShorten30.

"Rethinking Pre-training and Self-Training" from researchers @GoogleAI shows we get better results from self-training than either supervised or self-supervised pre-training. Demonstrated on Object Detection and Semantic Segmentation! #100DaysOfMLCode

@giffmana The T5 paper did something very similar, right? Do the normal warmup, decay by 1/sqrt(step), then linearly decay over the last 10% of training.
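The three-phase schedule described in the reply above (warmup, inverse-square-root decay, then a linear ramp to zero over the final 10% of training) can be sketched as follows. The peak rate, the 10,000 warmup steps, the linear shape of the warmup, and ramping down from whatever value is reached at the 90% mark are all illustrative assumptions, not settings confirmed by the tweet or taken from the T5 paper; `lr_schedule` is a hypothetical name.

```python
import math


def lr_schedule(step: int, total_steps: int, peak_lr: float = 1e-2,
                warmup_steps: int = 10_000, final_frac: float = 0.1) -> float:
    """Warmup -> 1/sqrt(step) decay -> linear decay to 0 over the last final_frac."""

    def rsqrt_part(s: int) -> float:
        if s < warmup_steps:
            # Assumed linear warmup, reaching peak_lr at warmup_steps - 1.
            return peak_lr * (s + 1) / warmup_steps
        # Inverse-square-root decay, scaled so it equals peak_lr at warmup_steps.
        return peak_lr * math.sqrt(warmup_steps / s)

    decay_start = total_steps - int(total_steps * final_frac)
    if step < decay_start:
        return rsqrt_part(step)
    # Final phase: ramp linearly from the value at decay_start down to 0.
    remaining = (total_steps - step) / (total_steps - decay_start)
    return rsqrt_part(decay_start) * remaining
```

With 100,000 total steps the rate peaks at 0.01, halves to 0.005 by step 40,000, and reaches exactly 0 at the final step. The linear tail avoids ending training at a still-sizable rate, which a pure 1/sqrt decay would otherwise do.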

Happy to see our work on ResNet-RS made it to NeurIPS!.

To appear #NeurIPS2021 as a spotlight - congrats team.

Impressive results w/ the continued scale of large LMs. On certain tasks there were large discontinuous performance improvements not predicted by scaling curves. Great leadership / coordination on this project to make it happen --- nice work team!

Introducing the 540 billion parameter Pathways Language Model. Trained on two Cloud #TPU v4 pods, it achieves state-of-the-art performance on benchmarks and shows exciting capabilities like mathematical reasoning, code writing, and even explaining jokes.

Super excited to see the co-evolution of game design with these types of models. Open world games that could automatically generate new environments based on what the player has enjoyed so far would be so cool --- I often felt games got stale due to a lack of new environments.

Nice summary of a lot of the great work done by Google Research in the past year.

As in past years, I've spent part of the holiday break summarizing much of the work we've done in @GoogleResearch over the last year. On behalf of @Google's research community, I'm delighted to share this writeup (this year grouped into five themes).

This really hit home --- the amount of hand-holding for experiments and models can be quite frustrating. You would think this area would have seen more progress, given these are the issues people training the models are having :).

The AGI I want is one that realizes I made a dumb mistake with batch size which makes it OOM on a supercomputer and tries a smaller one for me - while I am sleeping so I don’t have to babysit the models and increases the throughput in experimentation!.

Authors: Golnaz Ghiasi, @YinCui1, @AravSrinivas, @RuiQian3, @TsungYiLin1, @ekindogus, @quocleix, @barret_zoph.

@_arohan_ @borisdayma Yea +1 also to the power of these GLU/GELU FFN variants. These work very well.
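The gated FFN variants mentioned above replace the usual two-layer feed-forward block with a gated one. The sketch below uses GEGLU as the example: the input is projected twice, one projection goes through GELU and gates the other elementwise, and a final projection maps back. Bias terms are omitted, and the pure-Python matrices and function names (`matvec`, `geglu_ffn`) are illustrative assumptions rather than any library's API.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major, shape [in_dim][out_dim]


def gelu(x: float) -> float:
    # Exact GELU: x * Phi(x), with Phi the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def matvec(x: Vector, w: Matrix) -> Vector:
    # Row vector x (length in_dim) times w (in_dim x out_dim).
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def geglu_ffn(x: Vector, w: Matrix, v: Matrix, w2: Matrix) -> Vector:
    """FFN_GEGLU(x) = (GELU(x W) * (x V)) W2, with * elementwise."""
    gate = [gelu(h) for h in matvec(x, w)]
    linear = matvec(x, v)
    hidden = [g * l for g, l in zip(gate, linear)]
    return matvec(hidden, w2)
```

Compared with a standard FFN, the extra projection `v` adds parameters, so implementations often shrink the hidden width to keep the parameter count comparable.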

Nice architectural improvements from my collaborators at Google!.

Today we present SpineNet, a novel alternative to standard scale-decreased backbone models for visual recognition tasks, which uses reordered network blocks with cross-scale connections to better preserve spatial information. Learn more below:

Great thread describing some of the approaches for getting models to perform well on tasks we care about!

📢 A 🧵 on the future of NLP model inputs. What are the options and where are we going? 🔭
1. Task-specific finetuning (FT)
2. Zero-shot prompting
3. Few-shot prompting
4. Chain of thought (CoT)
5. Parameter-efficient finetuning (PEFT)
6. Dialog
[1/]