Ofir Press

@OfirPress

Followers: 11,307

Following: 3,671

Media: 279

Statuses: 2,017

I build tough benchmarks for LMs and then I get the LMs to solve them. Postdoc @Princeton. PhD from @nlpnoah @UW. Ex-visiting researcher @MetaAI & @MosaicML.

Joined June 2016


@TheSeaMouse

Of course they would. He's one of the smartest people in ML.

I disagree with his views but I'm sure that lots of VCs either agree with him or don't care about those things.


ChatGPT can solve novel, undergrad-level problems in *computational complexity* 🤯

"Please prove that the following problem is NP-hard..."

Solution in next tweet -->

Credit: @TzvikaGeft

(1/3)


If someone doesn't stop Tim soon he's gonna run Guanaco-65B on a globally distributed cluster of 5 toasters and 3 electric toothbrushes at 70 tokens/sec.


Transformers are made of interleaved self-attention and feedforward sublayers. Can we find a better pattern?

New work on *improving transformers by reordering their sublayers* with @nlpnoah and @omerlevy_


Now that I'm done with the PhD I can tell you what I really think about my advisor @nlpnoah:


New preprint by @Ale_Raganato et al. shows that NMT models with manually engineered, fixed (i.e. position-based) attention patterns perform as well as models that learn how to attend. Super cool!


Apple Intelligence's on-device LM uses our weight tying method 😀

Looking forward to receiving my first royalty check in the mail soon @tim_cook

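The weight tying mentioned here comes from "Using the Output Embedding to Improve Language Models" (Press & Wolf, 2017): the input embedding matrix is reused as the output (softmax) projection. Below is a toy pure-Python sketch, assuming a single shared matrix and no model body between input and output; the sizes and function names are illustrative, and this is not Apple's implementation.

```python
import math
import random

# Toy sizes, chosen only for illustration.
VOCAB_SIZE = 5
HIDDEN_DIM = 3

random.seed(0)
# One shared matrix E (VOCAB_SIZE x HIDDEN_DIM): row i is token i's embedding.
shared_embedding = [
    [random.gauss(0.0, 0.1) for _ in range(HIDDEN_DIM)] for _ in range(VOCAB_SIZE)
]


def embed(token_id):
    """Input side: look up the token's row in the shared matrix."""
    return shared_embedding[token_id]


def output_logits(hidden):
    """Output side: logits = E @ hidden, reusing the SAME matrix as the input
    embedding instead of a separate output projection."""
    return [sum(e * h for e, h in zip(row, hidden)) for row in shared_embedding]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [x / total for x in exps]


def embedding_param_count(vocab_size, hidden_dim, tied):
    """Untied models store two vocab_size x hidden_dim matrices; tied models store one."""
    return vocab_size * hidden_dim * (1 if tied else 2)


# A real LM would transform embed(token) through its layers; this sketch
# feeds the embedding straight to the output layer.
probs = softmax(output_logits(embed(2)))
print(len(probs), round(sum(probs), 6))  # 5 1.0
```

The parameter saving is the size of one embedding matrix: for a 50,000-token vocabulary and 512-dimensional embeddings, `embedding_param_count` gives 25.6M tied versus 51.2M untied.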

SciCode is our new benchmark, with 338 programming challenges written by PhDs in physics, math, and bio, based on papers in their fields. A bunch of the questions are from Nobel-winning papers!

I hope this becomes the new HumanEval.


According to the EvalPlus Leaderboard (by @JiaweiLiu_ & @steven_xia_ et al.), Gemini Pro is nowhere near GPT-4 (or even 3.5) on HumanEval.


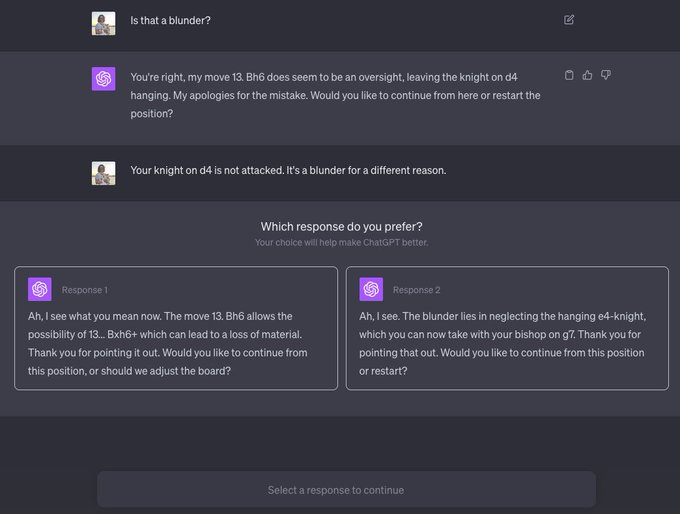

I believe that in 6-12 months we'll have an open source GPT-4 replication.

But GPT-5 will be built on immense amounts of human feedback, collected as shown here, and I'm not sure how the open community will replicate that.


Tim is singlehandedly developing more LM technology than most tech companies.

@karpathy

Super excited to push this even further:

- Next week: bitsandbytes 4-bit closed beta that allows you to finetune 30B/65B LLaMA models on a single 24/48 GB GPU (no degradation vs full fine-tuning in 16-bit)

- Two weeks: Full release of code, paper, and a collection of 65B models


Sandwiches will be served at ACL 2020! In our updated paper, we show that sandwiching improves strong models in word *and* character-level language modeling. We match the results of DeepMind's Compressive Transformer on enwik8 even though our model is much faster and smaller.

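In the sandwich transformer paper, a model with n self-attention sublayers (s) and n feedforward sublayers (f) is reordered as s^k (sf)^(n-k) f^k, where k is the "sandwich coefficient"; k = 0 is the usual interleaved transformer. A minimal sketch that only builds that ordering as a string, assuming this s/f notation; the function name is illustrative.

```python
def sandwich_pattern(n: int, k: int) -> str:
    """Return the sublayer ordering s^k (sf)^(n-k) f^k.

    's' = self-attention sublayer, 'f' = feedforward sublayer.
    n is the number of sublayers of each type; k is the sandwich coefficient.
    """
    if not 0 <= k <= n:
        raise ValueError("sandwich coefficient k must satisfy 0 <= k <= n")
    return "s" * k + "sf" * (n - k) + "f" * k


print(sandwich_pattern(4, 0))  # sfsfsfsf  (standard interleaving)
print(sandwich_pattern(4, 2))  # sssfsfff  (attention-heavy bottom, feedforward-heavy top)
```

Every k keeps exactly n sublayers of each type, so parameter count and per-token compute stay the same; only the order changes.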

Sparks of stupidity?

We've found a wide array of questions that lead GPT-4 & ChatGPT to hallucinate so badly that, in a separate chat session, they can point out that what they previously said was incorrect.

@zhang_muru et al. 🧵⬇️


Cool new paper by @XiangLisaLi2 and @percyliang that shows that you can train small, continuous vectors to act as 'prompts' for different downstream tasks in GPT-2 and BART.


Love is all you need, and attention may not be all you need! We show that a simple, attentionless translation model that uses a constant amount of memory performs on par with the Bahdanau attention model. With @nlpnoah


Combining Self-ask + Google Search + a Python Interpreter leads to a super powerful LM that is really easy to implement and run!

Super excited to see what else people do with this :)


The progress on SWE-bench is nuts. I think my prediction of 2 systems surpassing 35% pass@1 on the full test set by Aug 1 will come true.

When we launched in October, nobody wanted to work on the dataset because it was considered "too hard" or "impossible". Acc was 1.96% then.


AI assistants have been improving but they still can't answer complex but natural questions like "Which restaurants near me have vegan and gluten-free entrées for under $25?"

Today we're launching a new benchmark to evaluate this ability.

I hope this leads to better assistants!


We built a super tough benchmark to test whether models can browse the web to correctly attribute scientific claims.

The GPT-4o-powered agent gets 35%.

Also- the first author is my brother

@_jasonwei + @JerryWeiAI: we're coming for you 🤠


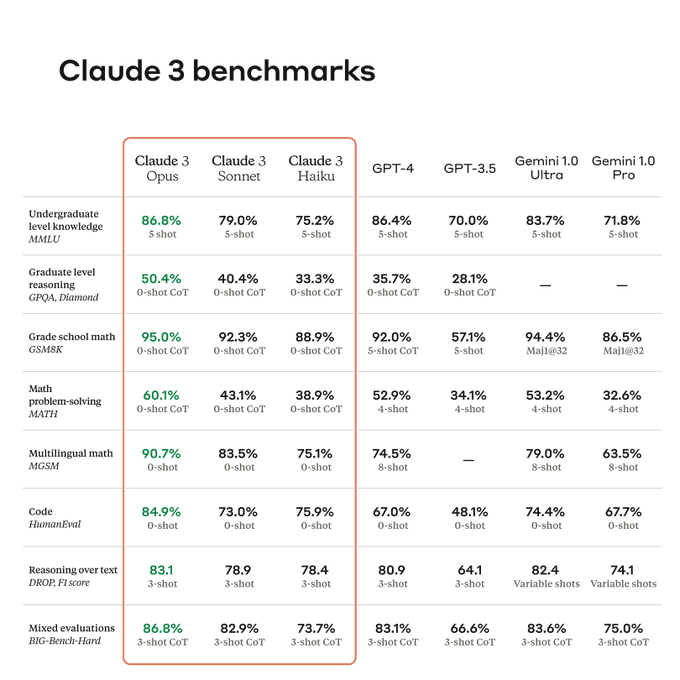

The only two numbers worth looking at here are GPQA and HumanEval

On GPQA the result is very impressive. On HumanEval, they compare to GPT-4's perf at launch. GPT-4 is now much better- see the EvalPlus leaderboard, where it gets 88.4

I bet OpenAI will respond with GPT-4.5 soon


Big result in the SWE-agent preprint:

The pass@6 rate is 32.67% on SWE-bench Lite! That's cool!

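For readers unfamiliar with pass@k: one common way to compute it, when n ≥ k attempts are run per task and c of them succeed, is the unbiased estimator from the Codex/HumanEval paper (Chen et al., 2021), pass@k = 1 - C(n-c, k) / C(n, k), averaged over tasks. The SWE-agent preprint may compute pass@6 differently (e.g. "resolved in any of 6 runs"); when n = k the estimator reduces to exactly that rule. A minimal sketch with illustrative function names:

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one task.

    n: number of independent attempts run on the task
    c: number of those attempts that resolved the task
    k: attempt budget being scored (k <= n)
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset of attempts contains at least one success.
        return 1.0
    # Probability that a random size-k subset contains no success, complemented.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k):
    """Average pass@k over tasks; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Three hypothetical tasks, each attempted 6 times.
tasks = [(6, 0), (6, 1), (6, 6)]
print(round(benchmark_pass_at_k(tasks, 1), 4))  # 0.3889
print(round(benchmark_pass_at_k(tasks, 6), 4))  # 0.6667
```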

Asking Goldman Sachs about AI is as productive as asking a group of penguins about architecture.

AI is going to make programmers much more efficient, it's going to do other things as well, but just that programming bit is going to be worth more than $1TN.


If you ask Google "When did @chrmanning's PhD advisor finish their PhD?" it won't answer correctly.

Self-ask + Google Search answers this correctly!

(Green text is generated by GPT-3, blue is retrieved from Google)

Play with this demo at:

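The loop described above, where the LM writes follow-up questions and a search engine answers them, can be sketched as follows. This assumes the self-ask prompt markers ("Follow up:", "Intermediate answer:", "So the final answer is:"), omits the few-shot examples a real prompt would include, and uses hypothetical scripted stand-ins (`scripted_generate`, `fake_search`, the made-up "Exampleland" facts) instead of GPT-3 and Google.

```python
from typing import Callable

FOLLOW_UP = "Follow up:"
INTERMEDIATE = "Intermediate answer:"
FINAL = "So the final answer is:"


def self_ask(question: str,
             generate: Callable[[str], str],
             search: Callable[[str], str],
             max_steps: int = 5) -> str:
    """Alternate between an LM and a search engine until a final answer appears.

    generate(prompt) is assumed to return the LM's continuation, stopped just
    before the model would write its own 'Intermediate answer:' line.
    search(query) is assumed to return a short answer string.
    """
    prompt = f"Question: {question}\nAre follow up questions needed here: Yes.\n"
    for _ in range(max_steps):
        continuation = generate(prompt)
        prompt += continuation
        if FINAL in continuation:
            return continuation.split(FINAL, 1)[1].strip()
        follow_ups = [line for line in continuation.splitlines()
                      if line.startswith(FOLLOW_UP)]
        if not follow_ups:
            break
        # Send the latest sub-question to search and splice the answer back in.
        query = follow_ups[-1][len(FOLLOW_UP):].strip()
        prompt += f"{INTERMEDIATE} {search(query)}\n"
    raise RuntimeError("no final answer produced")


# Hypothetical scripted stand-ins for a real LM and a real search API.
_script = iter([
    "Follow up: What is the capital of Exampleland?\n",
    "Follow up: When was Sampleton founded?\n",
    "So the final answer is: 1850\n",
])
_facts = {
    "What is the capital of Exampleland?": "Sampleton",
    "When was Sampleton founded?": "1850",
}


def scripted_generate(prompt: str) -> str:
    return next(_script)


def fake_search(query: str) -> str:
    return _facts.get(query, "unknown")


print(self_ask("When was the capital of Exampleland founded?",
               scripted_generate, fake_search))  # 1850
```

The key design point is that the search result, not the LM, fills each "Intermediate answer:" slot, so the LM only has to decompose the question and compose the sub-answers.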

I disagree- there's a lot still left to explore in how we can build *on top* of LMs to make them much more useful.

SWE-agent took GPT-4 from 1% on SWE-bench to 12%.

We didn't do any finetuning or training, so this type of research is super accessible to academics.


Hi Dan- I've got ~1,700 questions for you that no existing AI system can solve. You can get them at and you don't even have to pay me.

~250 of these unsolved questions have also been verified by humans as being definitely solvable.


We just launched SWE-bench Multimodal, a brand new benchmark with 617 tasks *all of which have an image*.

This benchmark challenges agents from new but realistic angles.

We also launch SWE-agent Multimodal to start tackling some of these issues.

We're launching SWE-bench Multimodal to eval agents' ability to solve visual GitHub issues.

- 617 *brand new* tasks from 17 JavaScript repos

- Each task has an image!

Existing agents struggle here! We present SWE-agent Multimodal to remedy some issues

Led w/ @_carlosejimenez

🧵


I love this! Earlier layers in transformers make worse predictions than later ones so we can improve decoding performance by biasing against tokens that were assigned a lot of probability by earlier layers.

(1/5)🚨Can LLMs be more factual without retrieval or finetuning?🤔 -yes✅

🦙We find factual knowledge often lies in higher layers of LLaMA

💪Contrast high/low layers can amplify factuality & boost TruthfulQA by 12-17%

📝

🧑💻

#NLProc

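The layer-contrast idea can be illustrated with a toy scorer: keep only tokens the final layer finds plausible (probability at least alpha times its top probability), then rank them by log p_final - log p_early, which penalizes tokens an earlier layer already liked. This is a simplified sketch under stated assumptions: the early layer is fixed rather than chosen dynamically as the paper reportedly does, and the probabilities below are made up.

```python
import math


def contrastive_layer_scores(final_probs, early_probs, alpha=0.1):
    """Score next-token candidates by contrasting a late layer with an early one.

    final_probs / early_probs map token -> probability from the final layer and
    from an earlier ("premature") layer's early-exit distribution.
    alpha is a plausibility cutoff relative to the final layer's top probability.
    """
    threshold = alpha * max(final_probs.values())
    scores = {}
    for token, p_final in final_probs.items():
        if p_final < threshold:
            continue  # implausible under the final layer: never selected
        # Reward tokens whose probability grew between the early and final layer.
        scores[token] = math.log(p_final) - math.log(early_probs[token])
    return scores


def pick_token(final_probs, early_probs, alpha=0.1):
    scores = contrastive_layer_scores(final_probs, early_probs, alpha)
    return max(scores, key=scores.get)


# Made-up toy distributions for the prompt "The capital of Canada is".
final = {"Ottawa": 0.45, "Toronto": 0.51, "maple": 0.04}
early = {"Ottawa": 0.10, "Toronto": 0.60, "maple": 0.30}
print(max(final, key=final.get))  # Toronto (greedy on the final layer)
print(pick_token(final, early))   # Ottawa (contrastive score)
```

The plausibility cutoff matters: without it, a token with tiny probability in both layers could get a large log-ratio and be selected.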

About Scaled LLaMA:

If you train on 2k tokens and then extrapolate to 8k using this, your LM will actually only be looking back at 2k tokens during each timestep. So you are able to input longer sequences but perf. doesn't improve.

I explain this at

@ggerganov

@yacineMTB

Regular LLaMA 7B:

arc_c: 0.41 arc_e: 0.52 piqa: 0.77 wic: 0.5

Scaled LLaMA 7B:

arc_c: 0.37 arc_e: 0.48 piqa: 0.75 wic: 0.49

So it does seem to have a slight performance downgrade, but this is with zero finetuning (!)

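Assuming "Scaled LLaMA" here refers to linear interpolation of LLaMA's rotary position embeddings (RoPE), the core trick is to multiply position indices by train_len / seq_len so that an 8k sequence reuses the 0..2048 position range seen in training. The sketch below shows only that index arithmetic and the standard RoPE angle formula (base 10000); it does not test the claim above about how far back the model effectively attends.

```python
def interpolated_positions(seq_len, train_len=2048):
    """Linear position interpolation: squeeze indices into the trained range.

    Positions are multiplied by train_len / seq_len (never stretched when the
    sequence already fits), so the largest index stays below train_len.
    """
    scale = min(1.0, train_len / seq_len)
    return [p * scale for p in range(seq_len)]


def rope_angles(position, dim=8, base=10000.0):
    """RoPE rotation angle for each channel pair i: position * base**(-2i/dim)."""
    return [position * base ** (-2 * i / dim) for i in range(dim // 2)]


positions = interpolated_positions(8192)
print(positions[4], positions[-1])  # 1.0 2047.75
# Adjacent tokens are now only 0.25 "positions" apart, so the angle step
# for the fastest-rotating channel pair shrinks from 1.0 to 0.25 radians.
print(rope_angles(positions[1])[0])  # 0.25
```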

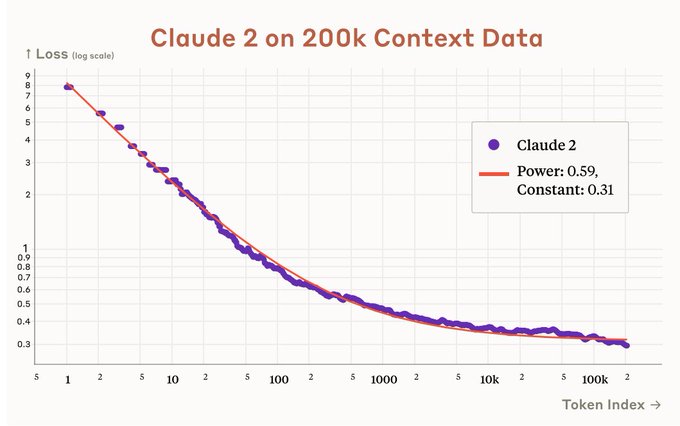

AFAIK this is the first release of data that shows what people are actually using LMs for.

The top 2 uses:

30% is for generating/explaining code.

18% is for text manipulation: summarization, expansion, translation, QA about a given text.

(1/2)


Has anyone even shown that transformer LMs can effectively use 4k context tokens? Very interesting that OpenAI went to 32k already.


If you want to start working with and extending SWE-agent, @KLieret just wrote this detailed overview of the architecture of SWE-agent:


OpenAI just released a small subset of SWE-bench tasks, verified by humans to be solvable.

I would treat this subset as "SWE-bench Easy"- useful for debugging your system.

But eventually when you're ready for launch, we still recommend running on SWE-bench Lite or the full set


I don't think it's productive or effective for a PhD student to ever lead more than 1 project simultaneously.

If anything, I think leading 0.5 projects is even better (see SWE-bench & SWE-agent which Carlos and John co-led)

Focusing is really important.


Just spoke at @WeizmannScience about building benchmarks that are tough, natural & easily checkable

i.e.

A guy I didn't recognize in the front kept on asking questions the entire talk

After the talk I asked who it was

It was Adi Shamir, the S of RSA! OMG!!


"it is possible to distill an approximation of Stockfish 16 into a transformer via standard supervised training. The resulting predictor generalizes well to unseen board states, and, when used in a policy, leads to strong chess play (Lichess Elo of 2895 against humans)"

Awesome!


Me and my brother are both at NeurIPS!! He's presenting a paper (poster on Wednesday), I'm just here for the vibes.

I'm at #NeurIPS presenting my work on infinitely long ImageNet-C and test time adaptation. You can stream the dataset right now (), with no download required! Feel free to reach out to chat about robustness, domain adaptation, or related topics 😀


I disagree- lots of things to work on in language modeling:

1. Find weird phenomena in LMs and understand why they happen- hallucination, the compositionality gap, the reversal curse.

2. Take my self-ask + Google Search system and use it to build a better @perplexity_ai

🧵


mpt-7b is powered by ALiBi! 😎

It performs as well as LLaMA-7B on downstream tasks and is fully open source

Our team at @MosaicML has been working on releasing something special:

We're proud to announce that we are OPEN SOURCING a 7B LLM trained to 1T tokens

The MPT model outperforms ALL other open source models!

Code:

Blog:

🧵

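ALiBi ("Train Short, Test Long", Press, Smith & Lewis) drops position embeddings and instead adds a fixed, head-specific linear penalty to each attention score based on query-key distance. A minimal sketch of the bias, assuming a causal decoder and a power-of-two number of heads (where the paper's slopes form the geometric sequence 2^(-8/n), 2^(-16/n), ..., 2^(-8)); function names are illustrative, and this is not MPT's code.

```python
def alibi_slopes(num_heads):
    """Head-specific slopes: the geometric sequence 2^(-8/n), 2^(-16/n), ..., 2^(-8)."""
    if num_heads <= 0 or num_heads & (num_heads - 1):
        raise ValueError("this sketch only handles a power-of-two number of heads")
    return [2.0 ** (-8 * (h + 1) / num_heads) for h in range(num_heads)]


def alibi_bias(seq_len, slope):
    """Causal bias for one head: bias[i][j] = slope * (j - i) for keys j <= i.

    The penalty grows linearly with distance; future keys (j > i) get -inf
    so softmax gives them zero weight.
    """
    return [[slope * (j - i) if j <= i else float("-inf") for j in range(seq_len)]
            for i in range(seq_len)]


slopes = alibi_slopes(8)
print(slopes[0], slopes[-1])  # 0.5 0.00390625
```

In a model, each head adds its bias matrix to q·kᵀ/√d before the softmax. Because the penalty depends only on distance, nothing in the model is tied to the training length.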