Georgi Gerganov

@ggerganov

Followers

44K

Following

3K

Media

251

Statuses

1K

24th at the Electrica puzzle challenge | https://t.co/baTQS2bdia

Joined May 2015

sam.cpp 👀. Inference of Meta's Segment Anything Model on the CPU. Project by @YavorGI - powered by

35

277

2K

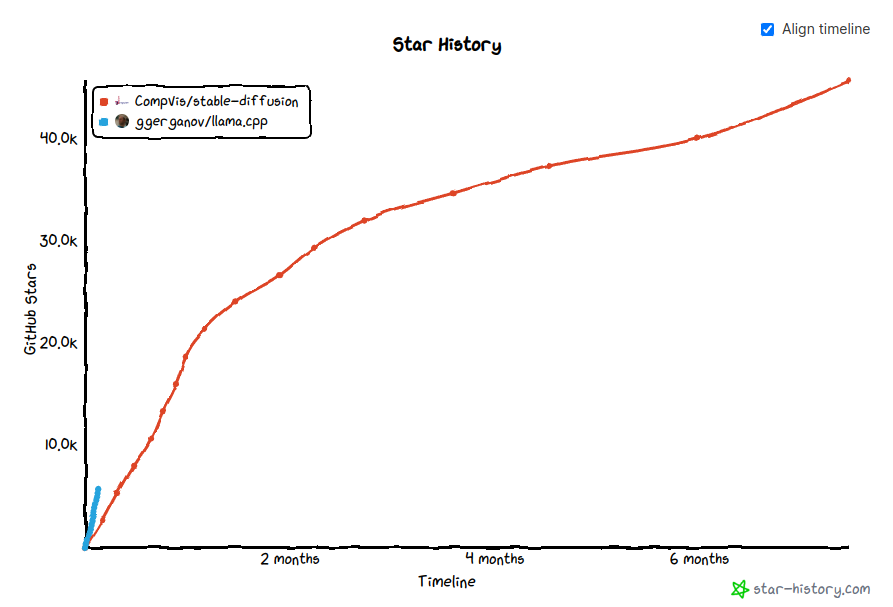

The future of on-device inference is ggml + Apple Silicon. You heard it here first!

Watching llama.cpp do 40 tok/s inference of the 7B model on my M2 Max, with 0% CPU usage, and using all 38 GPU cores. Congratulations @ggerganov ! This is a triumph.

38

180

2K

ggml will soon run on a billion devices. @apple don't sleep on it 🙃

61

125

1K

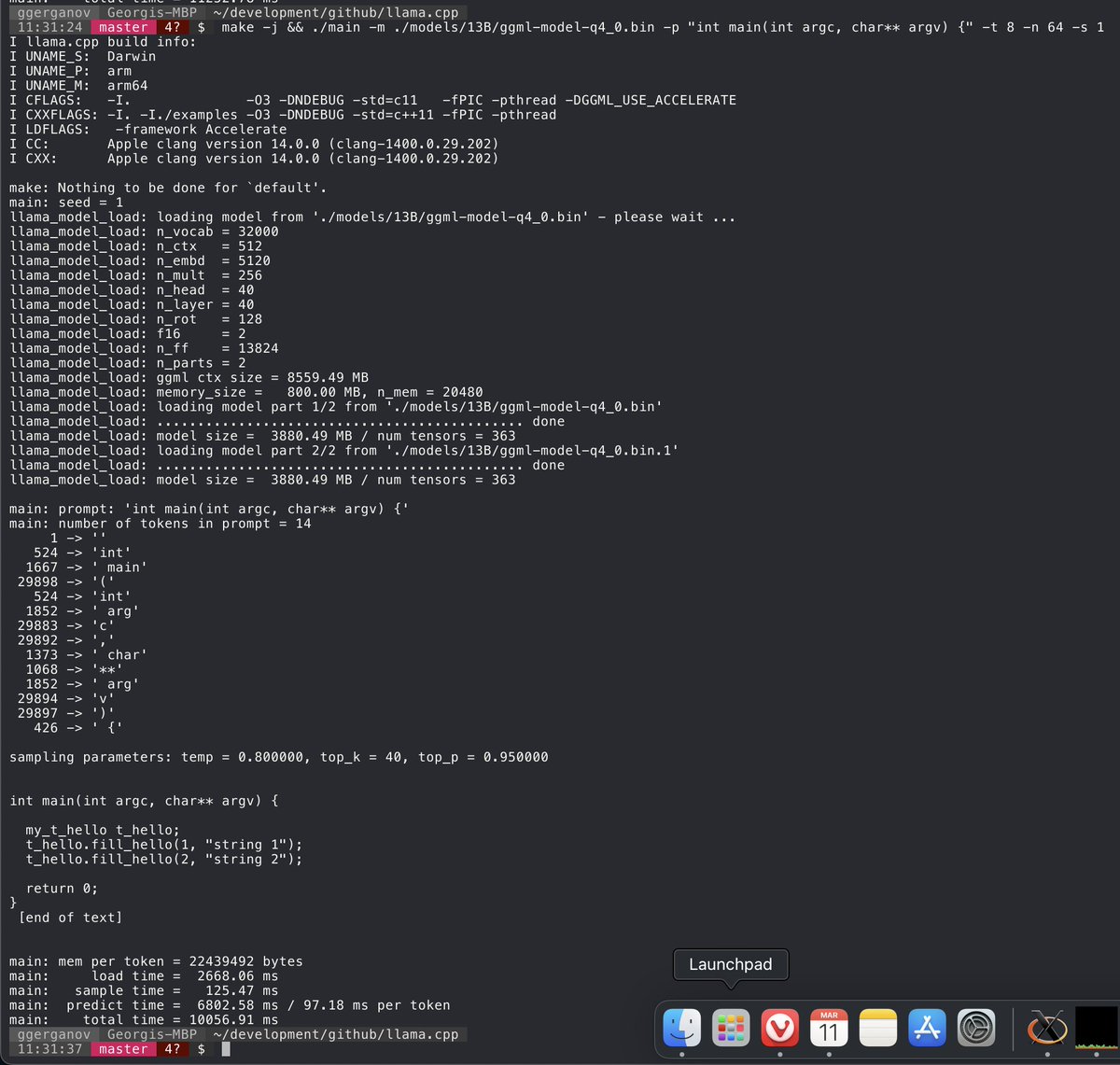

Just added support for all LLaMA models. I'm out of disk space, so if someone can give this a try for 33B and 65B, that would be great 😄. See updated instructions in the README. Here is LLaMA-13B at ~10 tokens/s

I think I can make 4-bit LLaMA-65B inference run on a 64 GB M1 Pro 🤔. Speed should be somewhere around 2 tokens/sec. Is this useful for anything?
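A rough sanity check of the 65B claim, as a sketch only. It assumes the weights dominate memory use; the bits-per-weight values are illustrative assumptions (real quantized formats store per-block scales on top of the 4-bit values, and the KV cache and activations need additional memory). `weights_gb` is a hypothetical helper, not part of llama.cpp.

```python
def weights_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 65e9  # nominal parameter count of LLaMA-65B
RAM_GB = 64      # memory of the machine mentioned in the post

# 4.0 = ideal 4-bit, 5.0 = assumed 4-bit plus scale overhead, 16.0 = fp16
for bpw in (4.0, 5.0, 16.0):
    size = weights_gb(N_PARAMS, bpw)
    fits = size < RAM_GB
    print(f"{bpw:>4} bits/weight -> {size:6.1f} GB, fits in {RAM_GB} GB: {fits}")
```

Under these assumptions the 4-bit weights take roughly half of the 64 GB, while fp16 weights would not fit at all.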

26

139

1K

llama.cpp just got access to the new Copilot for Pull Request technical preview by @github . Just add tags like "copilot:all" / "copilot:summary" / "copilot:walkthrough" to your PR comment and the magic happens 🪄

15

95

980

llama2.c running in a web-page. Compiled with Emscripten and modified the code to predict one token per render pass. The page auto-loads 50MB of model data - sorry about that 😄.

My fun weekend hack: llama2.c 🦙🤠. Lets you train a baby Llama 2 model in PyTorch, then inference it with one 500-line file with no dependencies, in pure C. My pretrained model (on TinyStories) samples stories in fp32 at 18 tok/s on my MacBook Air M1 CPU.

16

144

886

llama.cpp now supports distributed inference across multiple devices via MPI. This is possible thanks to @EvMill's work. Looking for people to give this a try and attempt to run a 65B LLaMA on a cluster of Raspberry Pis 🙃

19

137

855

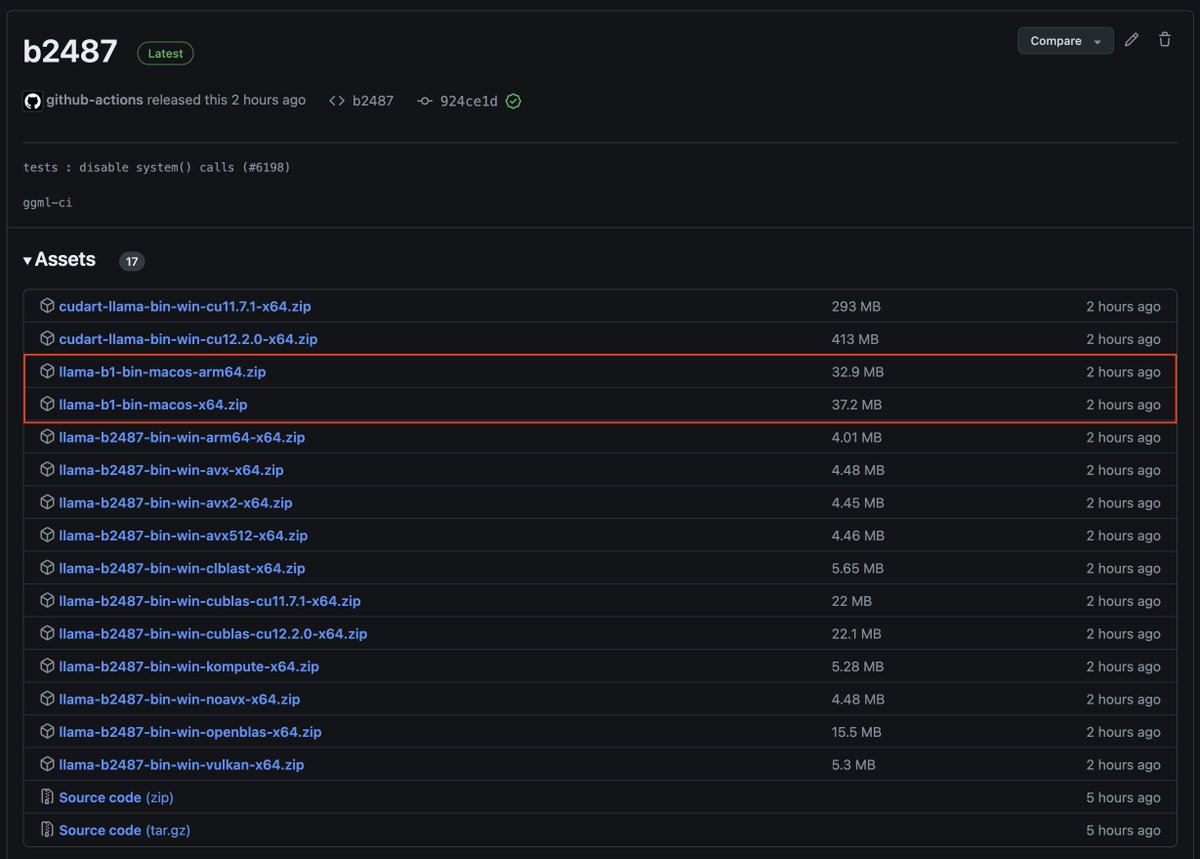

llama.cpp releases now ship with pre-built macOS binaries. This should reduce the entry barrier for llama.cpp on Apple devices. Thanks to @huggingface for the friendly support 🙏

16

67

724

llama.cpp is standing ground against the behemoths. The CUDA backend is contained in a single C++ file so it allows for very easy deployment and custom modifications. (pp - prefill, tg - text gen)

Trying out the new TensorRT-LLM framework and getting some pretty good performance out of the box with 3090s. 107 tokens/sec int8 and 54 tok/sec bf16 for llama-2 7B models (not much work to set up either). Get 160+ tokens/sec on 2x3090s (these are just batch_size=1)

12

47

570

The GGUF file format is a great example of the cool things that an open-source community can achieve. Props to @philpax_ and everyone else involved in the design and implementation of the format. I'm thankful and happy to see that it finds adoption in ML.

At @huggingface, we are adding more support to GGUF (model format by @ggerganov). The number of GGUF models on the hub has been exploding & doesn't look like it is gonna slow down 🔥. See more at:

11

65

502

ggml inference tech making its way into this week’s @apple M4 announcements is a great testament to this. IMO, Apple Silicon continues to be the best consumer-grade hardware for local AI applications. For next year, they should move copilot on-device.

15

47

547

The ggml roadmap is progressing as expected with a lot of infrastructural development already completed. We now enter the more interesting phase of the project - applying the framework to practical problems and doing cool stuff on the Edge

Took the time to prepare a ggml development roadmap in the form of a GitHub Project. This sets the priorities for the short/mid term and will offer a good way for everyone to keep track of the progress that is being made across related projects

7

41

522

whisper.cpp now supports @akashmjn's tinydiarize models. These fine-tuned models offer experimental support for speaker segmentation by introducing special tokens for marking speaker changes.

16

64

501

Here is what a properly built llama.cpp looks like. Running 7B on a 2-year-old Pixel 5 at 1 token/sec. Would be interesting to see what an interactive session feels like.

10

67

448

GGUF My Repo by @huggingface . Create quantum GGUF models fully online - quickly and securely. Thanks to @reach_vb, @pcuenq and team for creating this HF space! In the video below I give it a try to create a quantum 8-bit model of Gemma 2B - it took about

24

89

456

Very cool experiment by @chillgates_ . Distributed MPI inference using llama.cpp with 6 Raspberry Pis - each one with 8GB RAM "sees" 1/6 of the entire 65B model. Inference starts at ~1:10. Follow the progress here:

Yeah. I have ChatGPT at home. Not a silly 7b model. A full-on 65B model that runs on my pi cluster, watch how the model gets loaded across the cluster with mmap and does round-robin inferencing 🫡 (10 seconds/token) (sped up 16x)

11

73

431

napkin math ahead:
- buy 8 mac mini (200GB/s, ~$1.2k each)
- run LLAMA_METAL=1 LLAMA_MPI=1 for interleaved pipeline inference
- deploy on-premise, serve up to 8 clients in parallel at 25 t/s / 4-bit / 7B

is this cost efficient? energy wise?

thanks to @stanimirovb for idea.
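The napkin math can be spelled out as a small sketch using only the figures from the post. Two assumptions are mine and not from the source: that token generation is memory-bandwidth bound (every generated token reads all weights once), and that "25 t/s" is the rate per client. Energy use is left out because the post gives no power figures.

```python
# Figures quoted in the post
N_NODES = 8            # Mac minis
PRICE_USD = 1200       # approximate price per node
BANDWIDTH_GBPS = 200   # memory bandwidth per node, GB/s
TOKENS_PER_S = 25      # assumed to be the per-client generation rate
N_PARAMS = 7e9         # 7B model
BITS_PER_WEIGHT = 4    # ideal 4-bit, ignoring quantization scale overhead

# Weight size in GB (1 GB = 1e9 bytes)
model_gb = N_PARAMS * BITS_PER_WEIGHT / 8 / 1e9

# Assumption: bandwidth-bound decoding, so one device cannot exceed
# bandwidth / model size tokens per second for a single stream.
bandwidth_ceiling = BANDWIDTH_GBPS / model_gb

total_cost = N_NODES * PRICE_USD
aggregate = N_NODES * TOKENS_PER_S    # 8 parallel clients
cost_per_tps = total_cost / aggregate

print(f"model size:           {model_gb:.1f} GB")
print(f"single-stream ceiling: {bandwidth_ceiling:.0f} t/s")
print(f"hardware cost:        ${total_cost}")
print(f"aggregate throughput: {aggregate} t/s")
print(f"cost per (t/s):       ${cost_per_tps:.0f}")
```

Under these assumptions the quoted 25 t/s sits below the rough bandwidth ceiling of a single device, so the per-client figure looks plausible; whether the setup is cost efficient depends on what it is compared against.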

24

26

408

Powered by: ggml / whisper.cpp / llama.cpp / Core ML
STT: Whisper Small
LLM: 13B LLaMA
TTS: @elevenlabsio

The Whisper Encoder is running on Apple Neural Engine. Everything else is optimized via ARM NEON and Apple Accelerate.

10

18

363

Playing some chess using voice. WASM whisper.cpp with a quantized tiny model + grammar sampling (by @ejones). Runs locally in the browser. Not perfect, but I think pretty good overall! Try it here:

8

43

356

To run the released model with latest llama.cpp, use the "convert-unversioned-ggml-to-ggml" python script and apply the following patch to llama.cpp. The latest llama.cpp offers significant performance and accuracy improvements in the inference computation

I'm excited to announce the release of GPT4All, a 7B param language model finetuned from a curated set of 400k GPT-Turbo-3.5 assistant-style generations. We release 💰800k data samples💰 for anyone to build upon and a model you can run on your laptop! Real-time Sampling on M1 Mac

6

47

344

Great write-up. The CoreML branch speeds up just the Encoder. At the same time, the master branch already has an additional ~2-3x speedup in the Decoder thanks to recent work on llama.cpp. When we merge these 2 together, the performance will be mind blowing.

Hello Transcribe 2.2 with CoreML is out, now 3x-7x faster 🚀🥳. Blog post: App Store: #OpenAI #AI #Whisper #CoreML

9

29

319

Let's bring llama.cpp to the clouds! You can now run llama.cpp-powered inference endpoints through Hugging Face with just a few clicks. Simply select a GGUF model, pick your cloud provider (AWS, Azure, GCP), a suitable node GPU/CPU and you are good to go. For more info, check.

Wanna see something cool? You can now deploy GGUF models directly onto Hugging Face Inference Endpoints! Powered by llama.cpp @ggerganov . Try it now -->

9

45

313