Dan Hendrycks

@DanHendrycks

Followers: 28K

Following: 1K

Media: 203

Statuses: 1K

• Director of the Center for AI Safety (https://t.co/ahs3LYCpqv) • GELU/MMLU/MATH • PhD in AI from UC Berkeley https://t.co/rgXHAnYAsQ https://t.co/nPSyQMaY9b

San Francisco

Joined August 2009

The UC Berkeley course I co-taught now has lecture videos available. It includes guest lectures from Nicholas Carlini, @JacobSteinhardt, @Eric_Wallace_, @davidbau, and more. Course site:

I was able to voluntarily rewrite the belief system I inherited from my low socioeconomic status, anti-gay, and highly religious upbringing. I don’t know why Yann’s attacking me for this and resorting to the genetic fallacy and ad hominem. Regardless, Yann thinks AIs "will

As I have pointed out before, AI doomerism is a kind of apocalyptic cult. Why would its most vocal advocates come from ultra-religious families (that they broke away from because of science)?

@elonmusk You're the best, Elon! TLDR of 1047:
1. If you don’t train a model with $100 million in compute, and don’t fine-tune a ($100m+) model with $10 million in compute (or rent out a very large compute cluster), this law does not apply to you.
2. “Critical harm” means $500 million in

"The founder of effective accelerationism" and AI arms race advocate @BasedBeffJezos just backed out of tomorrow's debate with me. His intellectual defense for why we should build AI hastily is unfortunately based on predictable misunderstandings. I compile these errors below 🧵.

A random person off the street can't tell the difference in intelligence between a Terry Tao and a random mathematics graduate just by hearing them talk. "Vibe checks" for assessing AIs will be less reliable, and people won't directly feel many leaps in AI that are happening.

Prediction: within the next year there will be a pretty sharp transition of focus in AI from general user adoption to the ability to accelerate science and engineering. For the past two years it has been about user base and general adoption across the public. This is very.

It's also worth clarifying that last year I voluntarily declined xAI equity when it was being founded. (Even 0.1% would be >$20mn.) If I were in it for the money, I would have just left for industry long ago.

To send a clear signal, I am choosing to divest from my equity stake in Gray Swan AI. I will continue my work as an advisor, without pay. My goal is to make AI systems safe. I do this work on principle to promote the public interest, and that’s why I’ve chosen voluntarily to.

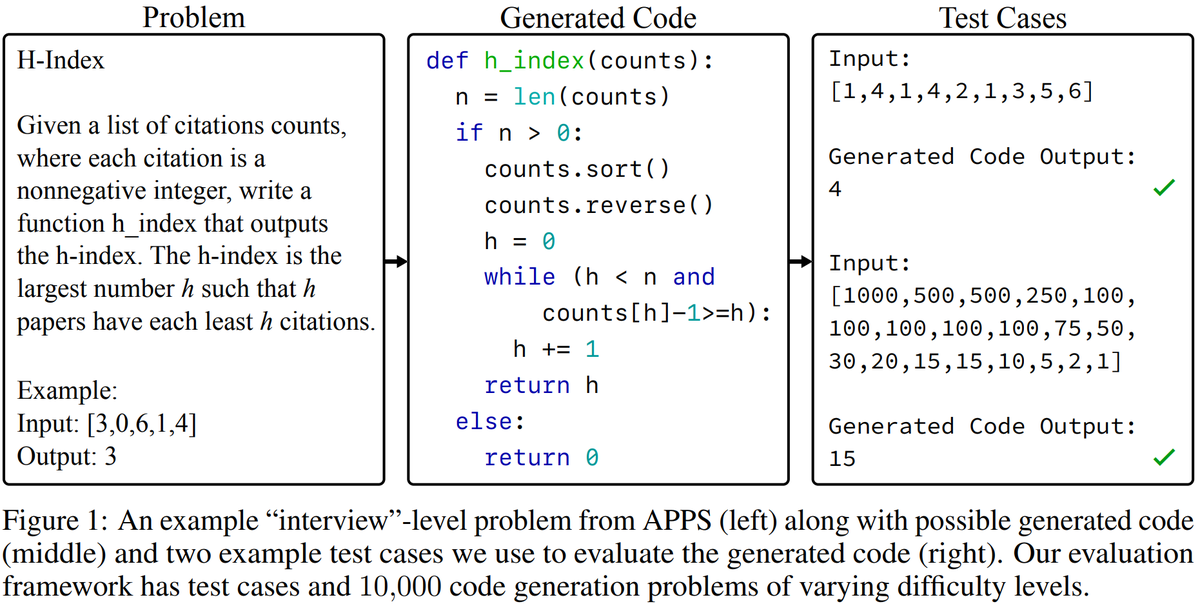

How multipurpose is #GPT3? We gave it questions about elementary math, history, law, and more. We found that GPT-3 is now better than random chance across many tasks, but for all 57 tasks it still has wide room for improvement.

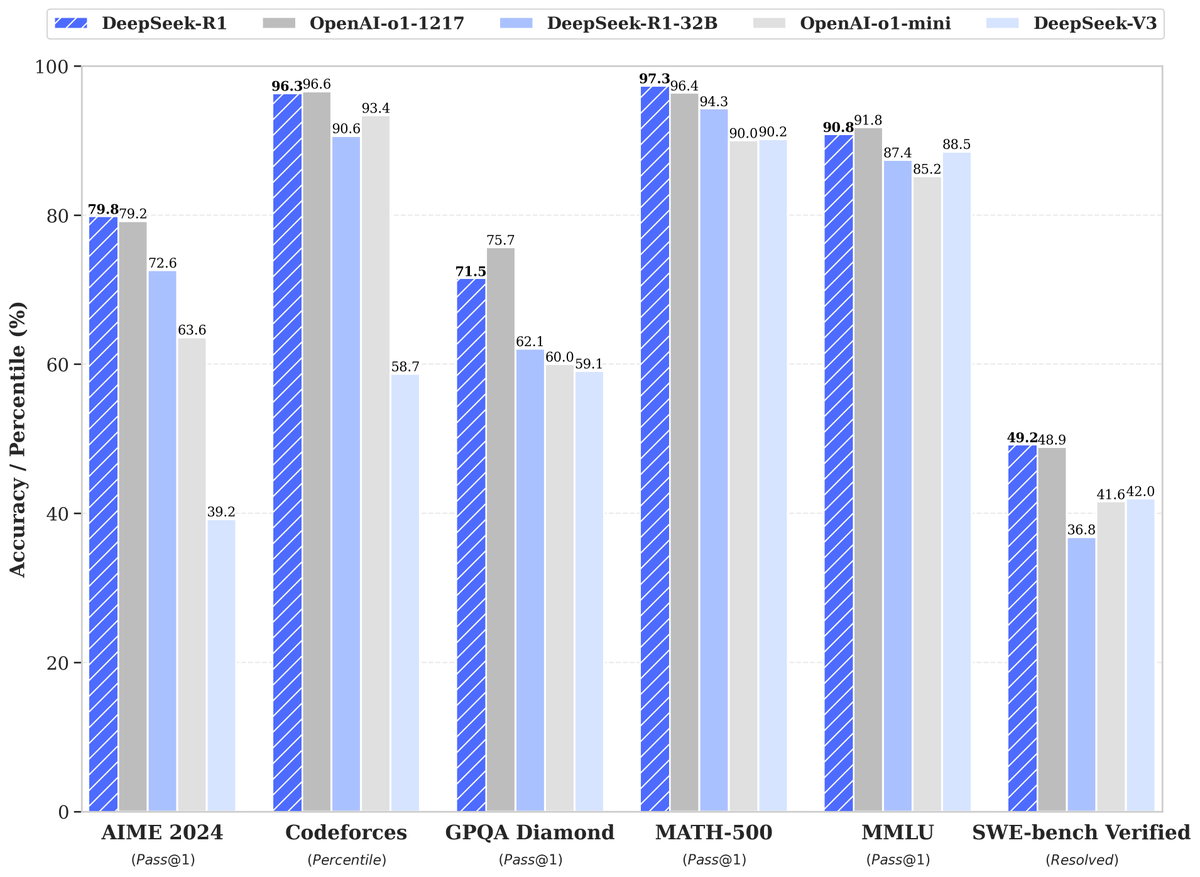

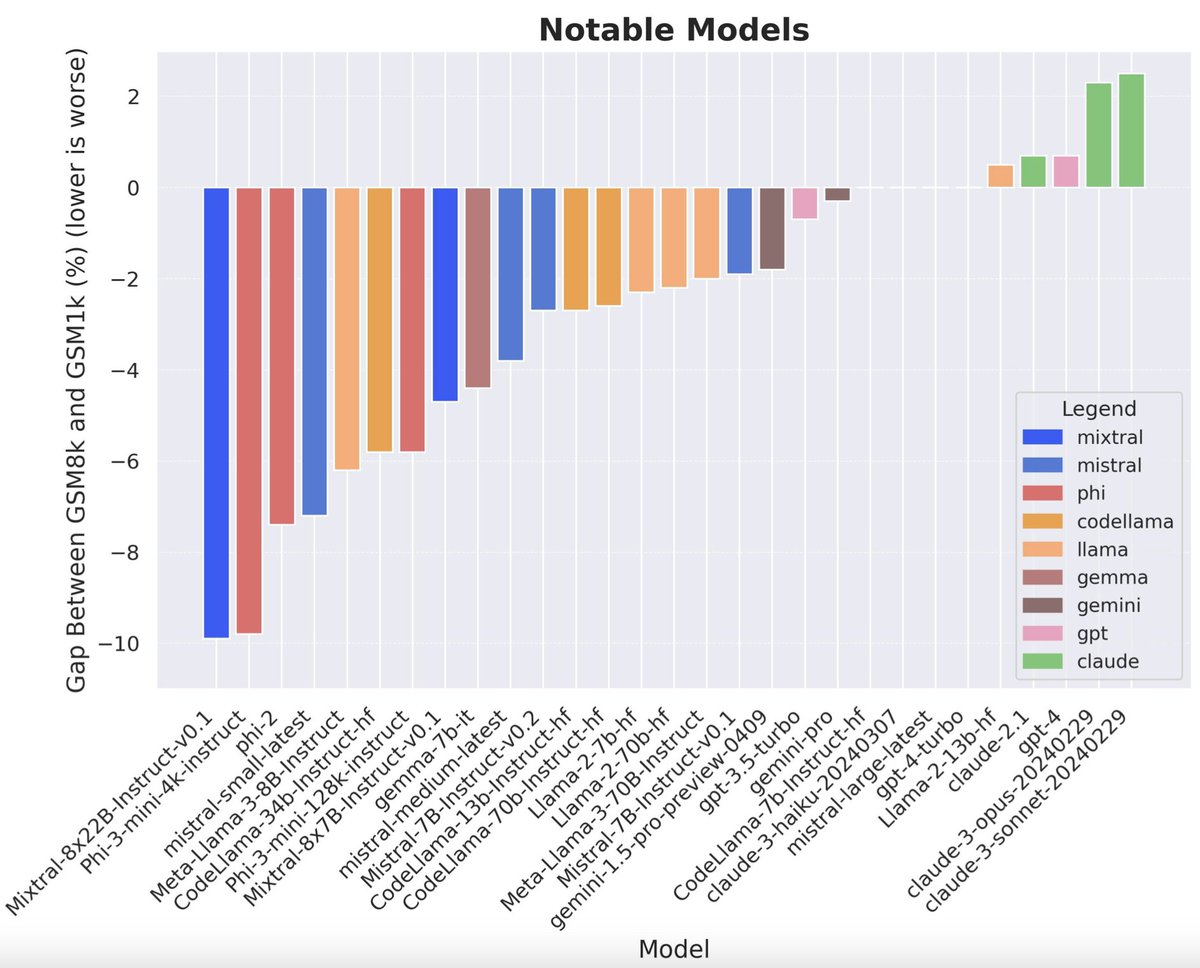

It looks like China has roughly caught up. Any AI strategy that depends on a lasting U.S. lead is fragile.

🚀 DeepSeek-R1 is here!
⚡ Performance on par with OpenAI-o1
📖 Fully open-source model & technical report
🏆 MIT licensed: Distill & commercialize freely!
🌐 Website & API are live now! Try DeepThink at today!
🐋 1/n

@anthrupad @ylecun @RichardMCNgo As it happens, my p(doom) > 80%, but it has been lower in the past. Two years ago it was ~20%. Some of my concerns about the AI arms race are outlined here:

In a landmark moment for AI safety, SB 1047 has passed the Assembly floor with a wide margin of support. We need commonsense safeguards to mitigate against critical AI risk—and SB 1047 is a workable path forward. @GavinNewsom should sign it into law.

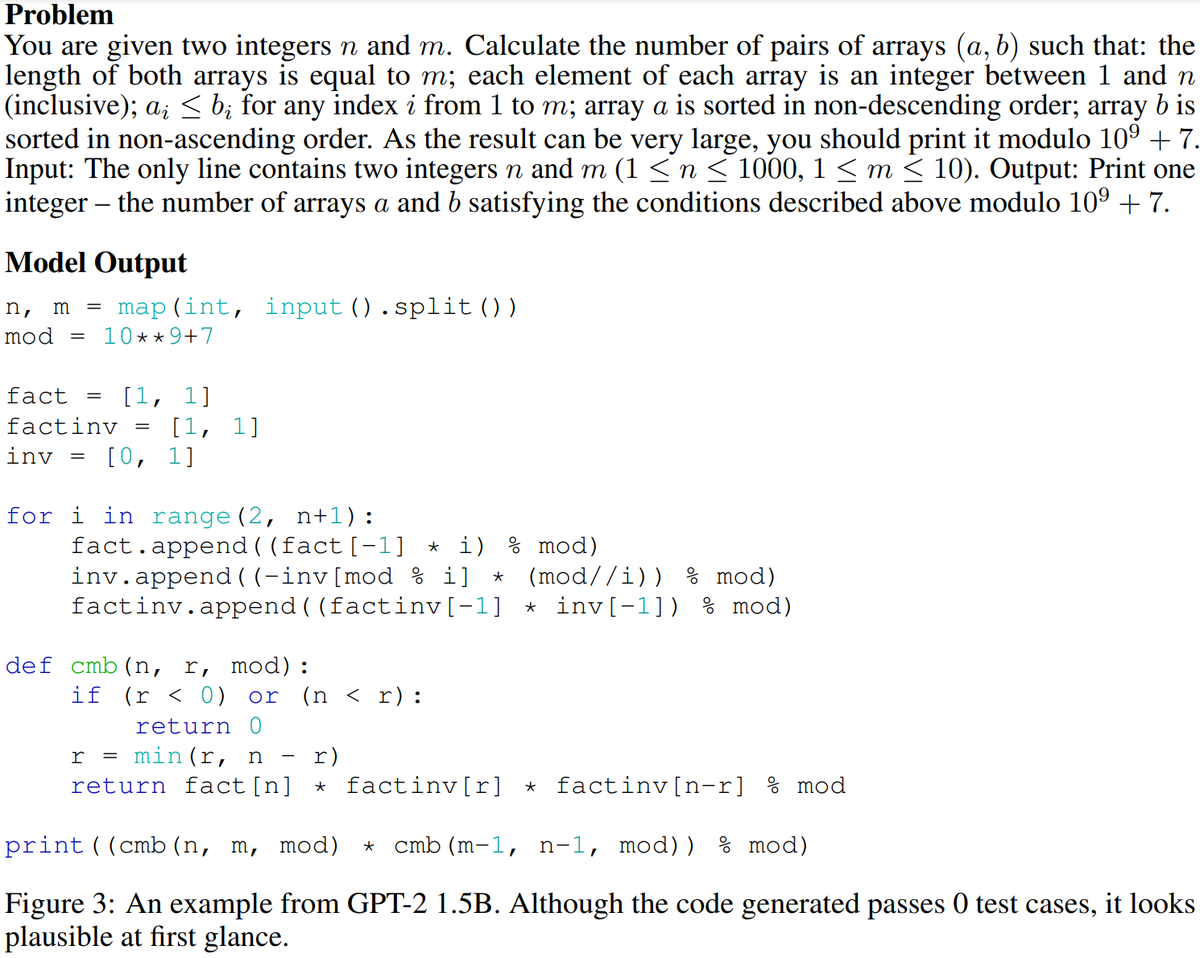

Many open problems in ML safety are not solved by closed-source GPT models. As LLMs become more prevalent, it becomes increasingly important to build safe and reliable systems. Some key research areas: 🧵

Serious question: What does an NLP Ph.D. student work on nowadays, given closed-source GPT models that beat anything you can do in a standard academic lab? @sleepinyourhat @srush_nlp @chrmanning @mdredze @ChrisGPotts

I've become less concerned about AIs lying to humans/rogue AIs. More of my concern lies in:
* malicious use (like bioweapons)
* collective action problems (like racing to replace people)
We'll need adversarial robustness, compute governance, and international coordination.

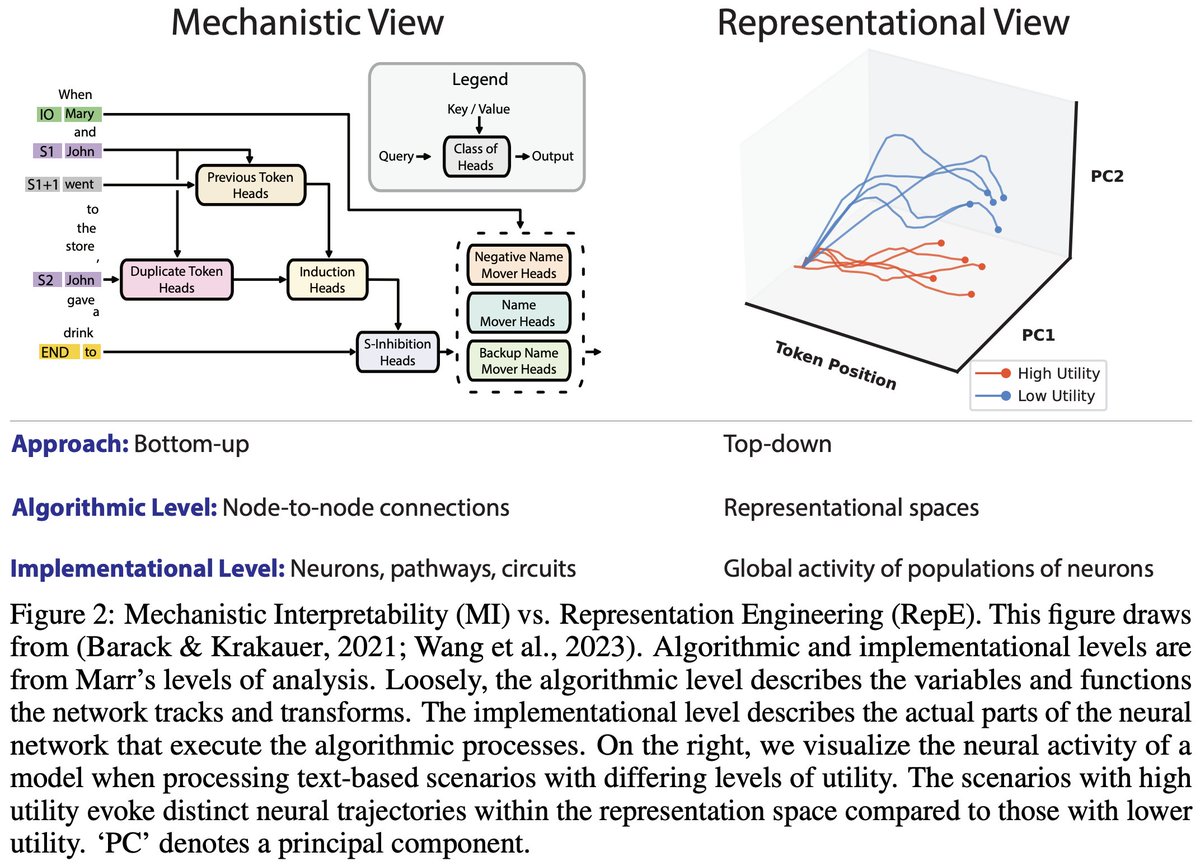

AI models are not just black boxes or giant inscrutable matrices. We discover they have interpretable internal representations, and we control these to influence hallucinations, bias, harmfulness, and whether an LLM lies. 🌐: 📄:

DeepMind's 230-billion-parameter Gopher model sets a new state of the art on our benchmark of 57 knowledge areas. They also claim to have a supervised model that gets 63.4% on the benchmark's professional law task; in many states, that's accurate enough to pass the bar exam!

Today we're releasing three new papers on large language models. This work offers a foundation for our future language research, especially in areas that will have a bearing on how models are evaluated and deployed: 1/

Rich Sutton, author of the reinforcement learning textbook, alarmingly says:
"We are in the midst of a major step in the evolution of the planet"
"succession to AI is inevitable"
"they could displace us from existence"
"it behooves us … to bow out"
"we should not resist succession"

We should prepare for, but not fear, the inevitable succession from humanity to AI, or so I argue in this talk pre-recorded for presentation at WAIC in Shanghai.

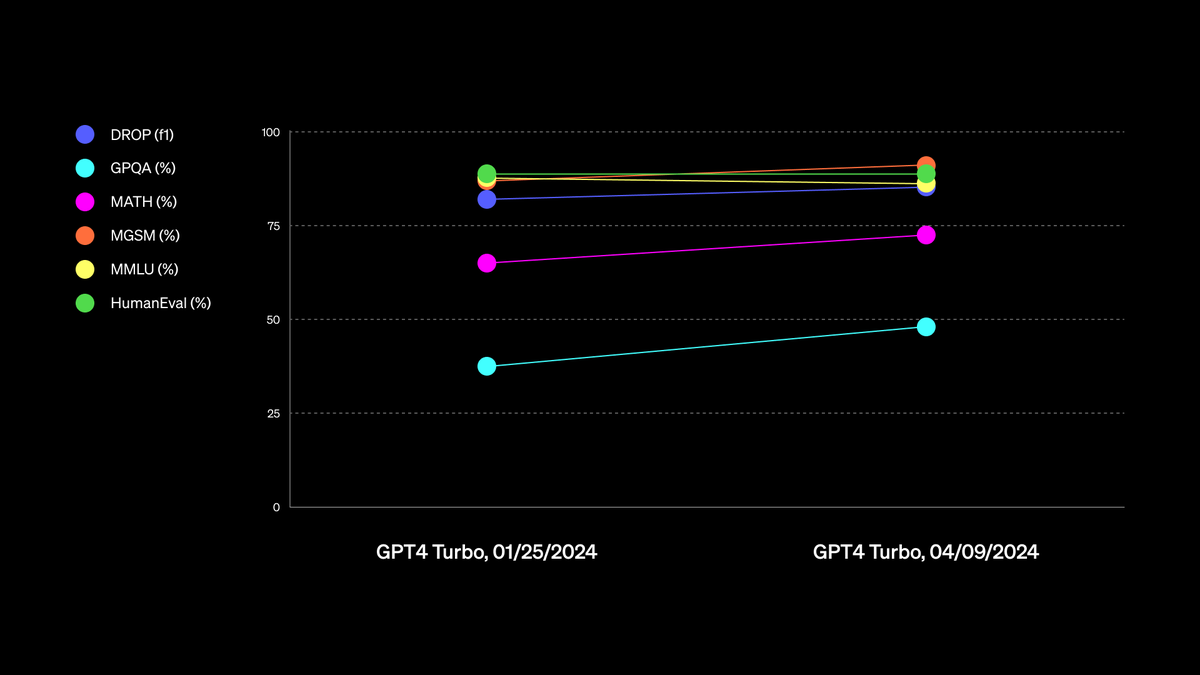

If gains in AI reasoning will mainly come from creating synthetic reasoning data to train on, then the basis of competitiveness is not having the largest training cluster but having the most inference compute. This shift gives Microsoft, Google, and Amazon a large advantage.

We announced @OpenAI o1 just 3 months ago. Today, we announced o3. We have every reason to believe this trajectory will continue.

More than 120 Hollywood actors, comedians, writers, directors, and producers are urging Governor @GavinNewsom to sign SB 1047 into law. Amazing to see such tremendous support! Signatories:
JJ Abrams (@jjabrams)
Acclaimed director and writer known for "Star Wars," "Star Trek,"

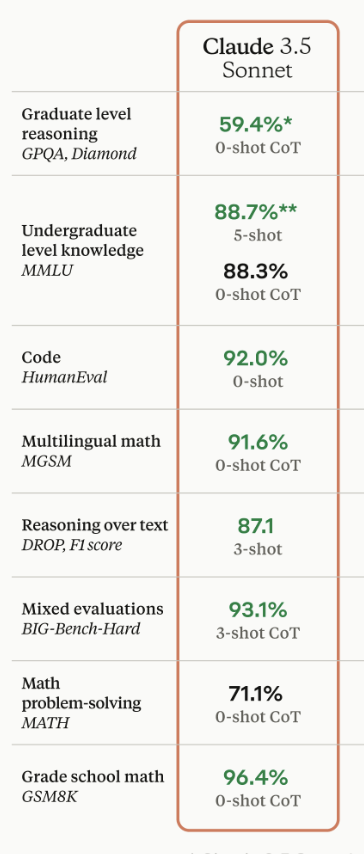

AI timelines are moving along as expected. A superhuman mathematician is likely in the next year or two given no surprising obstacles. Maybe next year we'll have a similarly impressive demo for AI assistants that can make powerpoints, book flights, create apps, and so on.

OpenAI o3 is 2727 on Codeforces which is equivalent to the #175 best human competitive coder on the planet. This is an absolutely superhuman result for AI and technology at large.

Recently turned 29 and on this year’s list.

@MetaAI This directly incentivizes researchers to build models that are skilled at deception.

Since Senator Schumer is pushing for Congress to regulate AI, here are five promising AI policy ideas:
* external red teaming
* interagency oversight commission
* internal audit committees
* external incident investigation team
* safety research funding
(🧵below)

PixMix shows that augmenting images with fractals improves several robustness and uncertainty metrics simultaneously (corruptions, adversaries, prediction consistency, calibration, and anomaly detection). paper: code: #cvpr2022

This has ~100 questions. Expect >20-50x more hard questions in Humanity's Last Exam, the scale needed for precise measurement.

1/10 Today we're launching FrontierMath, a benchmark for evaluating advanced mathematical reasoning in AI. We collaborated with 60+ leading mathematicians to create hundreds of original, exceptionally challenging math problems, of which current AI systems solve less than 2%.

- Meta, by open sourcing competitive models (e.g., Llama 3) they reduce AI orgs' revenue/valuations/ability to buy more GPUs and scale AI models.

Things that have most slowed down AI timelines/development:
- reviewers, by favoring cleverness and proofs over simplicity and performance
- NVIDIA, by distributing GPUs widely rather than to buyers most willing to pay
- tensorflow

New letter from @geoffreyhinton, Yoshua Bengio, Lawrence @Lessig, and Stuart Russell urging Gov. Newsom to sign SB 1047. “We believe SB 1047 is an important and reasonable first step towards ensuring that frontier AI systems are developed responsibly, so that we can all better

@BasedBeffJezos 2/ He argues that we should build AGI to colonize the cosmos ASAP because there is so much potential at stake. This cost-benefit analysis is wrong. For every year we delay building AGI, we lose a galaxy. However, if we go extinct in the process, we lose the entire cosmos. Cosmic

This is worth checking out. Minor criticisms:
I think industry's "algorithmic secrets" are not a very natural leverage point to greatly restrict. FlashAttention, Quiet-STaR (q*), Mamba/SSMs, FineWeb, and so on are ideas and advances from outside industry. These advances will.

Virtually nobody is pricing in what's coming in AI. I wrote an essay series on the AGI strategic picture: from the trendlines in deep learning and counting the OOMs, to the international situation and The Project. SITUATIONAL AWARENESS: The Decade Ahead

@PirateWires This is an obvious example of bad-faith "gotcha" journalism: Pirate Wires never even reached out for comment on a story entirely about me, and the article is full of misrepresentations and errors. For starters, I'm working on AI safety from multiple fronts: publishing technical.

Excited to be in the TIME100 AI along with many others including @janleike @ilyasut @sama @alexandr_wang @ericschmidt.

Making a good benchmark may seem easy (just collect a dataset), but it requires getting multiple high-level design choices right. @Thomas_Woodside and I wrote a post on how to design good ML benchmarks:

@GaryMarcus Can confirm AI companies like xAI can't get access to FrontierMath due to Epoch's contractual obligation with OpenAI.

AI developers' "Responsible Scaling Policies," safety compute commitments, prosocial mission statements, and "Preparedness Frameworks" do not constrain their behavior. They can remove foundational nonprofit oversight without much backlash, as OpenAI’s restructuring shows.

OpenAI is working on a plan to restructure so that its nonprofit board would no longer control its main business, Reuters reports
