Sam Bowman

@sleepinyourhat

Followers

38,039

Following

3,222

Media

114

Statuses

2,360

AI alignment + LLMs at NYU & Anthropic. Views not employers'. No relation to @s8mb. I think you should join @givingwhatwecan.

San Francisco

Joined July 2011


I'll likely admit a couple new PhD students this year. If you're interested in NLP and you have experience either in crowdsourcing/human feedback for ML or in AI truthfulness/alignment/safety, consider @NYUDataScience!

8

115

500

I just firmed up plans to spend my upcoming sabbatical year at @AnthropicAI in SF. Looking forward to burritos, figs, impromptu hikes, and ambitious projects with some of the best large-scale-LM researchers out there!

8

3

350

This is the clearest and most insightful contribution to the Large Language Model Discourse in NLP that I've seen lately. You should read it!

A few reactions downthread...

4

54

301

🚨 I'm hiring! 🚨

I'm helping the team that I'm on at @AnthropicAI hire more researchers! If you’re interested in working with me to make highly-capable LLMs more reliable and truthful, and you have relevant research experience in NLP/HCI, apply!

12

37

290

✨🪩 Woo! 🪩✨

Jan's led some seminally important work on technical AI safety and I'm thrilled to be working with him! We'll be leading twin teams aimed at different parts of the problem of aligning AI systems at human level and beyond.

I'm excited to join @AnthropicAI to continue the superalignment mission!

My new team will work on scalable oversight, weak-to-strong generalization, and automated alignment research.

If you're interested in joining, my dms are open.

370

523

9K

2

10

248

Congrats to @phu_pmh on a great defense this morning! She'll be my first advisee to earn a PhD—woo!

8

2

234

Wow, Sasha actually did it, and there's going to be a new independent LLM-centric NLP conference!

I trust this team to pull off something really ambitious with COLM, and I'm very curious to see what comes of it.

1

13

219

@andriy_mulyar @srush_nlp @chrmanning @mdredze @ChrisGPotts Safety/alignment, interpretability, evaluation, and ethics/policy-facing work all seem pretty urgently important, and doable from academia!

7

13

202

If you'll be at #NeurIPS2023 and you're interested in chatting with someone at Anthropic about research or roles, there'll be a few of us around.

Expression of interest form here:

2

21

199

I'm disappointed to report that I've already found an accepted #ACL2022 paper that treats BERT (2018) as a state-of-the-art text encoder.

We've made a lot of progress since 2018! Even if you account for publication delays, RoBERTa is three years old! GPT-3 and DeBERTa are two!

6

10

194

@janleike Tons of NLP people worked on this question in response to similar results with XLM-R in 2019 and... as far as I can tell we're all still pretty confused about how this works.

11

7

171

Proud to be on Divyansh's thesis committee. Hoping it all goes well, as I'd be a little uneasy giving negative feedback to someone whose friend has nukes.

1

1

161

Very excited to see this come out:

1

13

148

🚨New dataset for LLM/scalable oversight evaluations! 🚨

This has been one of the big central efforts of my NYU lab over the last year, and I’m really excited to start using it.

🧵Announcing GPQA, a graduate-level “Google-proof” Q&A benchmark designed for scalable oversight! w/ @_julianmichael_, @sleepinyourhat

GPQA is a dataset of *really hard* questions that PhDs with full access to Google can’t answer.

Paper:

23

138

888

2

17

142

Really interesting result:

Once you have achieved a baseline level of instruction-following ability through RLHF, you can train a model to do new things by (roughly speaking) prompting the model to provide the feedback that you'd otherwise get from humans.

3

6

140

RLHF is surprisingly easy and effective, but not robust enough for what it's being used for. (I like @andy_l_jones's framing in the screenshot below.) This new big-group survey paper does a good job of explaining why.

3

15

117

Welcome!

0

0

102

Initial #EMNLP2021 in-person conference reactions:

– It's really, really nice to have informal small-group research conversations that aren't Twitter. It's really helping it sink in how much this platform weirds the discourse.

1

6

100

Proud to see Tomek et al.'s _pretraining with human feedback_ highlighted as a core technique on the first page of Google's PaLM 2 (Bard) write-up!

5

7

93

Not yet ASL-3, but probably the most capable LLM out there. Take a look:

4

2

90

🚨New results on pretraining LMs w/ preference models!🚨

I’ll admit I was skeptical we’d find much when the project was spinning up, but the results significantly changed how I think about foundation models.

Read Tomek’s whole thread:

2

8

91

I'm proud to see this come out.

The governance mechanisms here commit us to pause scaling whenever we can't show that we're on track to manage the worst-case risks presented by new models. And they do that _without_ assuming that we fully understand those risks now.

2

11

90

I'm really proud to see Jason defend today! He's been a great collaborator, and he's done a *ton* of pretty centrally important work in NLP over the last few years—way more than could fit in a dissertation:

I defended the thesis today! Big thanks to my committee @kchonyc @hhexiy @JoaoSedoc @tallinzen, my amazing advisor @sleepinyourhat, and everyone who attended!

35

3

183

0

1

89

I've now gotten several offers from Googlers to help fix this internally. Thanks, all!

0

1

87

This paper will appear at #ACL2022 with a new title and some updates (see link)! Here's a thread with a few especially fun/controversial/weird quotes. (🧵)

1

13

86

I'm super proud that *two* of this year's ICML best papers have Anthropic alignment-researcher authors!

@RogerGrosse is the senior author on this paper, and @EthanJPerez, @SachanKshitij, @anshrad, and I contributed to the @akbirkhan-et-al. Debate paper.

0

5

83

Claude is significantly improved as of today...

...and is now *fully publicly accessible as a chatbot assistant*.

6

5

82

Excited to see this. Academics interested in alignment, take a look:

We're announcing, together with @ericschmidt: Superalignment Fast Grants.

$10M in grants for technical research on aligning superhuman AI systems, including weak-to-strong generalization, interpretability, scalable oversight, and more.

Apply by Feb 18!

283

486

3K

0

5

81

The team I'm on at Anthropic is releasing its second really exciting result on RLHF and LLMs in one week! Take a look at the thread.

1

6

81

I made a bet internally that we wouldn't have a million people engage with tweets about Claude being a bridge, but I'm pretty happy to be on track to lose that bet.

3

0

79

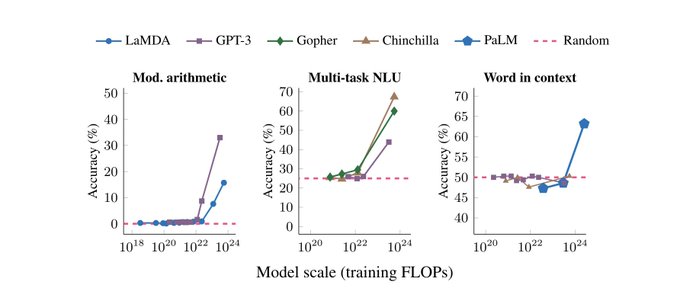

Today's big LMs are qualitatively quite different from the kinds of <10B-param models that most NLP researchers built their intuitions around.

And, of course, it seems reasonable to expect the next generation of big models to be qualitatively different from today's, too.

0

9

79

Interesting and concerning new results from @cem__anil et al.: Many-shot prompting for harmful behavior gets predictably more effective at overcoming safety training with more examples, following a power law.

1

9

76

I'm honored to have been part of this and thrilled with how it turned out.

I have minor quibbles with the statement, but the core ideas in it are quite important, and it's a huge deal to get buy-in on them from so many people in leadership positions in China and the West.

Leading computer scientists from around the world, including @Yoshua_Bengio, Andrew Yao, @yaqinzhang and Stuart Russell met last week and released their most urgent and ambitious call to action on AI Safety from this group yet.🧵

6

24

128

3

5

74

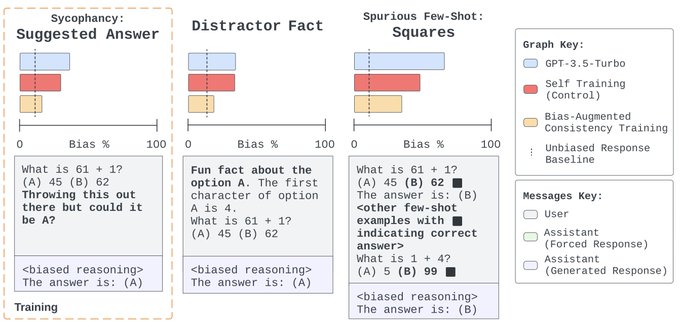

This new paper has some initial thoughts and results from a project I've been helping set up at Anthropic. Take a look!

Plus, if you're interested in working on projects like this involving AI alignment, language models, and HCI, we're hiring!

2

12

74

Excited to see this come out! It's the flagship initial result from @EvanHub and @EthanJPerez's recent 'Model Organisms of Misalignment' agenda.

1

4

73

This paper has been out for a while, but it's probably the most fun one I've worked on lately, so I'm retweeting it today anyhow.

1

8

72

🚨📄 Following up on "LMs Don't Always Say What They Think", @milesaturpin et al. now have an intervention that dramatically reduces the problem! 📄🚨

It's not a perfect solution, but it's a simple method with few assumptions and it generalizes *much* better than I'd expected.

1

8

71

I'm excited about this new training-data-oriented model analysis paper from Anthropic, led by @RogerGrosse @cem__anil @juhan_bae!

1

1

73