Alex Warstadt

@a_stadt

Followers: 2K · Following: 1K · Media: 52 · Statuses: 479

Asst. Prof. @ UCSD | PI of LeM🍋N Lab | Former Postdoc at ETH Zürich, PhD @ NYU | computational linguistics, NLProc, CogSci, pragmatics | he/him 🏳️‍🌈

Joined September 2016

Can we learn anything about human language learning from everything that’s going on in machine learning and NLP? In a new position piece Sam Bowman (@sleepinyourhat) and I argue the answer is “yes”. …If we take some specific steps. 🧵

I'm in Singapore, attending EMNLP, presenting BabyLM, and trying the local durian. Also, bc many have asked, I guess this is as good a time as any to announce I will be starting as an Asst. Prof. at @UCSanDiego in data science @HDSIUCSD and linguistics in Jan 2025! Come say hi!

Transformer LMs get more pretraining data every week; what can they do with less? We're releasing the MiniBERTas on @huggingface: RoBERTas pretrained on 1M, 10M, 100M, and 1B words. See our blog post for probing-task learning curves.

CILVR Blog has an inaugural post on the MiniBERTas by Yian Zhang, @liu_haokun, Haau-Sing Li, Alex Warstadt and @sleepinyourhat: ever wondered what would happen if you pretrained BERT with a smaller dataset? Find your answer here!

This is a HUGE (and 👶) opportunity to advance pretraining, cognitive modeling, and language acquisition. Your participation is 🔑. Also, HUGE shout out to my fellow organizers @LChoshen @ryandcotterell @tallinzen @liu_haokun @amuuueller @adinamwilliams @weGotlieb @ChengxuZhuang

Announcing the BabyLM 👶 Challenge, the shared task at @conll_conf and CMCL'23! We’re calling on researchers to pre-train language models on (relatively) small datasets inspired by the input given to children learning language.

A very rewarding watch! It discusses a bunch of naive strategies for a simple game before giving a more psychologically intuitive solution with some reinforcement learning. Accessible & engaging computational cognitive modeling on YouTube! @LakeBrenden @todd_gureckis

Thanks to everyone who made BabyLM a success!
❤️ The organizers @amuuueller @LChoshen @weGotlieb @ChengxuZhuang
❤️ +Authors @tallinzen @adinamwilliams @ryandcotterell Juan Ciro, Rafa Mosquera, Bhargavi Paranjape
❤️ @conll_conf organizers @davidswelt Jing Jiang, Julia Hockenmaier

I'm at #CogSci2024 giving a poster TODAY from 13:00-14:15 about the past and future of BabyLM! Come chat about computational models of language acquisition and data-efficient pretraining!

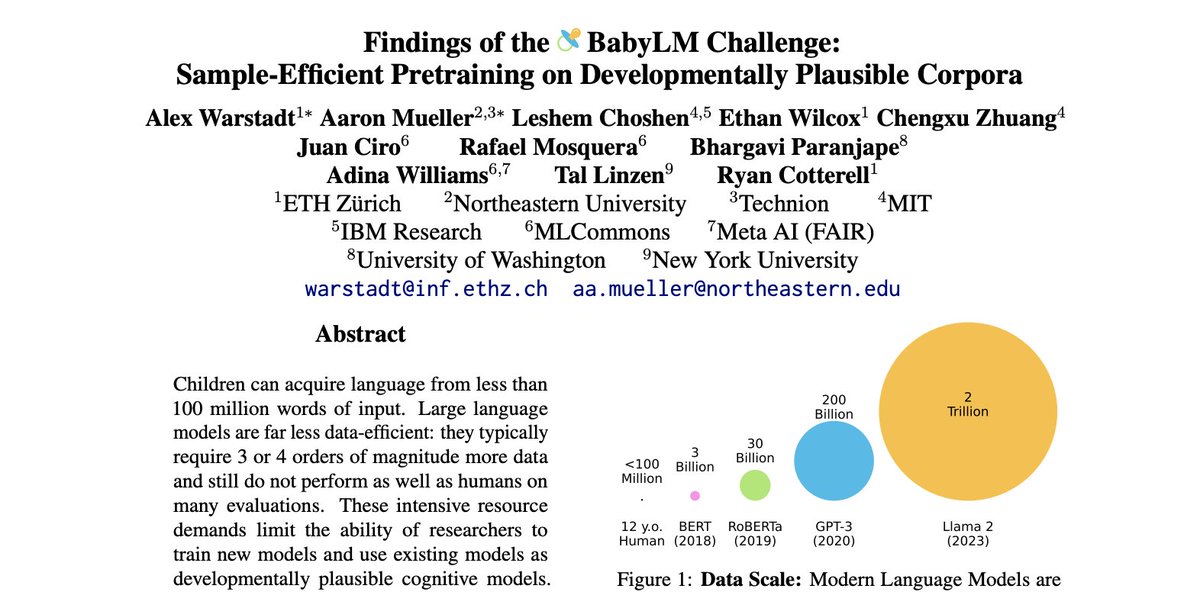

LLMs are now trained on >1000x as much language data as a child, so what happens when you train a "BabyLM" on just 100M words? The proceedings of the BabyLM Challenge are now out, along with our summary of key findings from 31 submissions. Some highlights 🧵

📢New paper alert📢 Sometimes prosody is predictable from text, and sometimes it conveys its own information. We develop an NLP pipeline for extracting prosodic features from audio and using LMs to try to predict them from text. Read to find out just how redundant prosody is 🤔

Ever wondered whether prosody (the melody of speech 🎶) conveys information beyond the text, or whether it's just vocal gymnastics? 🏋️‍♂️ Great work by @MIT and @ETH_en, soon to be seen at @emnlpmeeting.

This paper makes such an important point about children's learning signal for language acquisition (which is missing from LMs, btw): learners infer grammar rules from which utterances lead to communicative success. And comm. success is essential for achieving nonlinguistic goals.

Our latest paper is out in New Ideas in Psych journal! @mitjanikolaus and I propose a framework linking theories of conversational coordination to several aspects of children's early language acquisition.

Congrats to the competition winners and best paper award recipients: @davidsamuelcz Lucas Georges Gabriel Charpentier, Chenghao Xiao, G Thomas Hudson @NouraAlMoubayed @Julius_Steuer @mariusmosbach @dklakow @richarddm1 Zebulon Goriely @hope_mcgovern @c_davis90 Paula Buttery @lisabeinborn

Check out our new paper, harnessing the power of LLMs 💪 to scale up high-quality manual annotations of CHILDES 👶

🆕 A new resource for automatic annotation of young children's grammatical vs. ungrammatical language in early child-caregiver interactions, a project spearheaded by Mitja Nikolaus in collaboration with @a_stadt, to appear in @LrecColing. Thread 🧵

@UCSanDiego @HDSIUCSD If you're looking for a PhD in computational linguistics, acquisition, or pragmatics, I'd encourage you to apply to UCSD by Dec 15! I'm not formally recruiting now, but there are already many great ppl there in the area, and I will start advising in ling and data science in 2025 🧑‍🎓

How would you test your BabyLM? We have a new and improved eval pipeline for round 2 of the BabyLM competition, but we're NOT done adding to it. If you have an idea for a new eval, reach out, open a pull request, or even submit a writeup to our new "paper track"!

The evaluation pipeline is out!
New: vision tasks added; easy support for models (even outside HF) like Mamba and vision models (DM if your innovativeness makes eval hard!).
Hidden: more is hidden now (cool, new, and BabyLM-spirited).
Evaluation is a challenge's ❤️‍🔥

Excited to see this! This is EXACTLY the kind of controlled experiment @sleepinyourhat and I envisioned in our 2022 position paper (.

New preprint! How can we test hypotheses about learning that rely on exposure to large amounts of data? No babies, no problem: use language models as models of learning + 🎯targeted modifications🎯 of language models’ training corpora!

I could not agree more. These are 2 complementary approaches to cognitive modeling:
1. Build it from the bottom up from plausible parts and find what works.
2. Start with something that works and make it incrementally more plausible.
Ideally, they meet in the middle somewhere.

I love the BabyLM challenge, but things to keep in mind:
- No baby learns from text.
- No baby learns without the production-perception loop.
- Babies have communicative intent.
The reasons why I’ve been modeling language acquisition with GANs and CNNs from raw speech. Some

📢 BabyLM 👶 evaluation update 📢 The eval pipeline is now public! It's open source, so you can make improvements, open a pull request, etc. We're taking suggestions for more eval tasks. If you want to propose tasks using zero-shot LM scoring or fine-tuning, drop us a line!!

The evaluation pipeline for the BabyLM 👶 Challenge is out! We’re evaluating on BLiMP and a selection of (Super)GLUE tasks. Code 💻:
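BLiMP-style zero-shot evaluation compares the probability a language model assigns to the two sentences of a minimal pair; the model gets the pair right if it prefers the grammatical sentence. Below is a minimal sketch of that scoring rule, assuming a toy add-one-smoothed bigram model as a stand-in for a real BabyLM and a hypothetical two-pair dataset. The helper names (`train_bigram`, `log_prob`, `minimal_pair_accuracy`) are illustrative and are not the BabyLM pipeline's code.

```python
import math
from collections import Counter

def train_bigram(corpus):
    """Count contexts and bigrams over sentences padded with <s> and </s>."""
    unigrams, bigrams = Counter(), Counter()
    for sent in corpus:
        toks = ["<s>"] + sent.lower().split() + ["</s>"]
        unigrams.update(toks[:-1])                # counts of each token as a context
        bigrams.update(zip(toks[:-1], toks[1:]))  # counts of adjacent token pairs
    vocab = {t for s in corpus for t in s.lower().split()} | {"</s>"}
    return unigrams, bigrams, vocab

def log_prob(sentence, model):
    """Sum of add-one-smoothed bigram log-probabilities of the sentence."""
    unigrams, bigrams, vocab = model
    toks = ["<s>"] + sentence.lower().split() + ["</s>"]
    V = len(vocab)
    return sum(
        math.log((bigrams[(a, b)] + 1) / (unigrams[a] + V))
        for a, b in zip(toks[:-1], toks[1:])
    )

def minimal_pair_accuracy(pairs, model):
    """Fraction of (grammatical, ungrammatical) pairs where the first scores higher."""
    wins = sum(log_prob(good, model) > log_prob(bad, model) for good, bad in pairs)
    return wins / len(pairs)

# Hypothetical toy training data and agreement minimal pairs.
corpus = ["the dog barks", "the dogs bark", "a cat sleeps", "the cats sleep"]
model = train_bigram(corpus)
pairs = [("the dog barks", "the dog bark"), ("the cats sleep", "the cats sleeps")]
print(minimal_pair_accuracy(pairs, model))
```

Because only the relative scores within a pair matter, no fine-tuning or task head is needed; any model that yields sentence log-probabilities could be dropped in place of `log_prob`.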

This is one of the main differences between human language acquisition and LM language acquisition: articulatory learning is a huge hurdle for infants, disproportionately affecting language production. Even without having to learn this task, LMs are less data-efficient.

Exciting new work with (surprisingly!) positive results that LMs can predict human neural activation for language inputs **even when restricted to the same amount of input as a human**.

Excited to share our new work with @martin_schrimpf, @zhang_yian, @sleepinyourhat, @NogaZaslavsky and @ev_fedorenko: "ANN language models align neurally and behaviorally with humans even after a developmentally realistic amount of training" 1/n.

An additional (more mundane) factor: vision+language models are usually fine-tuned on aligned data from not-so-rich domains like captions 😴, but text benchmarks really benefit from adaptation to long abstract texts.

In 2021, we've seen an explosion of grounded Language Models: from "image/video+text" to more embodied models in "simulation+text". But, when tested on text benchmarks, these grounded models really struggle to improve over pure text LMs, e.g. T5/GPT-3. Why? >>

Huge thanks to my dissertation committee @sleepinyourhat, @tallinzen, @LakeBrenden, @mcxfrank, Ellie Pavlick (@Brown_NLP). And other folks who gave great feedback: @grushaprasad, @najoungkim, @lambdaviking, @ACournane, @EvaPortelance. /fin

Lots of useful tips here (e.g. use simple language, define jargon), but as a linguist, I'm tired of advice like #19: "Never use passive tense [sic]; always specify the actor". Passives exist for a reason (actually two reasons, at least)👇.

I wrote up a few paper-writing tips that improve the clarity of research papers while also being easy to implement: I collected these during my PhD from various supervisors (mostly @douwekiela @kchonyc; bad tips my own) and thought I would share them publicly!

Paper to appear in TACL, poster @ EMNLP (arXiv link here: . And HUGE shoutout to my awesome co-authors at ETH Zürich: Ionut Constantinescu, Tiago Pimentel (@tpimentelms), Ryan Cotterell

I'm trying to do a head-to-head of text generation circa 5 years ago vs. today. Does anyone know where I can find examples of what was considered high-quality neural text generation back then (presumably using LSTMs)? @clara__meister @ryandcotterell @tpimentelms

The Question Under Discussion ❓ is to discourse what the CFG is to syntax, but how speakers choose which ❓ to address next is VASTLY understudied. Excited for another big advance from this group: human judgments on the salience of a potential future ❓ AND models that can predict salience‼️

Super excited about this new work! Empirical: although LLMs have good abilities to generate questions, they don’t inherently know what’s important. We try to solve this! Linguistic: is reader expectation predictable, and if so, how well does that align with what’s in the text?

New paper announcement #acl2020nlp! Are neural networks trained on natural language inference (NLI) IMPRESsive? I.e., do they view IMPlicatures and PRESuppositions as valid inferences? Coming to a Zoom meeting near you!

NLI is one of our go-to commonsense reasoning tasks in #NLProc, but can NLI models generalize to pragmatic inferences of the type studied by linguists? Our accepted #acl2020nlp paper asks this question (!
The team: Paloma Jeretič, @a_stadt, Suvrat Bhooshan

Very excited to be talking at Indiana University Bloomington next week about CoLA, BLiMP, and language models learning grammar from raw data!

Next week Alex Warstadt @a_stadt from NYU will give a talk about acceptability judgments and neural networks at #Clingding ! Check it out at DM me if you are not from IU but want to participate. :) #NLProc.

Love this! Training speech LMs is crucial for modeling language acquisition. @LavechinMarvin et al. show there are still some BIG hurdles ahead: SLMs have to solve the segmentation problem to get on par with text-based models. But a good benchmark is one hurdle cleared!

Want to study infant language acquisition using language models? We've got you covered with BabySLM 👶🤖
✅ Realistic training sets
✅ Adapted benchmarks
To be presented at @ISCAInterspeech.
📰 Paper:
💻 Code:
More info below 🧵⬇️

Big fan of this paper by Lovering et al.! Neural networks sometimes generalize in weird and unintended ways, but they show that there is a clear information-theoretic principle that predicts their behavior (at least in some very simple settings). [1/4]

For those (like me) who weren't really following ICLR last week, some more context on this cool work led by Charles Lovering:

@najoungkim @eaclmeeting @ryandcotterell @butoialexandra @najoungkim you and Kevin Du should be friends. He is also a FoB (friend of Buddy)!!

Congrats to my cohortmate @AliciaVParrish on her defense. She's an awesome scientist!.

Proudly presenting Dr. @AliciaVParrish, who just successfully defended her supercool @nyuling dissertation “The interaction between conceptual combination and linguistic structure”! Dr. Parrish will soon be starting a Research Scientist position at @Google, lucky them!!

@srush_nlp Goooood question. It's not well advertised in the paper, unfortunately. We asked authors to self-report HPs and training info; the data is here: 1/2👇

@srush_nlp LTG-BERT trains on the same # of tokens (recounting tokens for multiple epochs) as BERT (. Their BabyLM paper did the same: ~10^11 training tokens / 10^8 dataset tokens ~= 500 epochs with padding. So it's FLOP-comparable to BERT (modulo # of params). /2
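The epoch estimate above is a ratio of token counts. A minimal sketch of that arithmetic follows; the 50% padding share is our assumption, picked only because it reconciles the raw ratio (10^11 / 10^8 = 1000 passes) with the quoted ~500 epochs. It is not a figure reported for LTG-BERT, and `effective_epochs` is a hypothetical helper.

```python
def effective_epochs(training_tokens: float, dataset_tokens: float,
                     padding_fraction: float = 0.0) -> float:
    """Number of passes over the dataset implied by a training token budget.

    padding_fraction is the assumed share of training token slots filled
    with padding rather than real data (an illustrative assumption).
    """
    real_tokens = training_tokens * (1.0 - padding_fraction)
    return real_tokens / dataset_tokens

print(effective_epochs(1e11, 1e8))                        # raw ratio, no padding
print(effective_epochs(1e11, 1e8, padding_fraction=0.5))  # assumed 50% padding
```

The FLOP comparison follows from the same budget: if two models process the same number of token slots with a similar parameter count, their training compute is roughly matched regardless of how many distinct tokens the dataset contains.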

Neat solution to an annoying problem (involving subword tokens, as always)!

💡 New short paper with @neuranna titled "A Better Way to Do Masked Language Model Scoring" accepted at #ACL2023. 🎉🇨🇦. Preprint: 📑 Tweeprint: 🧵👇. #ACL2023NLP #NLProc.
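The subword problem: standard pseudo-log-likelihood (PLL) scoring masks one token at a time, so the first piece of a multi-token word is predicted while its own continuation pieces stay visible, which makes such words look easier than they are. Our reading of the paper's fix (a "word-l2r" variant) is to also mask the later pieces of the same word when scoring each token. The sketch below only builds the masking plans, assuming `word_ids` maps each token to its word index (as fast tokenizers can provide) with special tokens already removed; the tokenization of "souvenir" is hypothetical and no model is called.

```python
from typing import List

def pll_original_masks(word_ids: List[int]) -> List[List[int]]:
    """Classic PLL: when scoring token i, mask only token i."""
    return [[i] for i in range(len(word_ids))]

def pll_word_l2r_masks(word_ids: List[int]) -> List[List[int]]:
    """When scoring token i, mask it plus the remaining tokens of its word."""
    masks = []
    for i, w in enumerate(word_ids):
        masked = [i]
        j = i + 1
        # Extend the mask rightward while we stay inside the same word.
        while j < len(word_ids) and word_ids[j] == w:
            masked.append(j)
            j += 1
        masks.append(masked)
    return masks

# Hypothetical WordPiece-style split of "the souvenir shop".
tokens = ["the", "sou", "##ven", "##ir", "shop"]
word_ids = [0, 1, 1, 1, 2]
for tok, masked in zip(tokens, pll_word_l2r_masks(word_ids)):
    print(tok, "->", [tokens[j] for j in masked])
```

Each mask plan would then be fed to an MLM, and the log-probability of the target token at position i summed across plans to get the sentence score; single-token words are scored the same way under both schemes.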

@liu_haokun It's unfair and wrong how you and other international students have been treated by the US. Not to mention stupid: there's nothing to gain from alienating and excluding talented scholars from China.
