Cem Anil

@cem__anil

Followers: 2,041

Following: 1,389

Media: 15

Statuses: 469

Machine learning / AI Safety at @AnthropicAI and University of Toronto / Vector Institute. Prev. student researcher @google (Blueshift Team) and @nvidia .

Toronto, Ontario

Joined November 2018


Pinned Tweet

AIs of tomorrow will spend much more of their compute on adapting and learning during deployment.

Our first foray into quantitatively studying and forecasting risks from this trend looks at new jailbreaks arising from long contexts.

Link:

3

7

61

One of our most crisp findings was that in-context learning usually follows simple power laws as a function of number of demonstrations.

We were surprised we didn’t find this stated explicitly in the literature.

Soliciting pointers: have we missed anything?

7

6

69
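As a rough illustration of the power-law claim in the tweet above, here is a minimal sketch of fitting loss ≈ C · n^(-alpha) to loss versus number of demonstrations by least squares in log-log space. The function name `fit_power_law` and the data are hypothetical: the numbers are synthetic, generated from an exact power law, and are not results from the paper.

```python
import math

def fit_power_law(n_shots, losses):
    """Fit loss ~ C * n**(-alpha) via ordinary least squares on (log n, log loss)."""
    xs = [math.log(n) for n in n_shots]
    ys = [math.log(l) for l in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx                    # slope of the line in log-log space
    intercept = mean_y - slope * mean_x  # log of the prefactor C
    return math.exp(intercept), -slope   # (C, alpha)

# Synthetic data (assumption, for illustration only): an exact power law
# with C = 3.0 and alpha = 0.5.
n_shots = [1, 2, 4, 8, 16, 32, 64, 128]
losses = [3.0 * n ** -0.5 for n in n_shots]

C, alpha = fit_power_law(n_shots, losses)
print(f"C = {C:.3f}, alpha = {alpha:.3f}")
```

A straight line on a log-log plot is the usual visual signature of such a trend; on real measurements the fit would be noisy and might only hold over part of the range.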

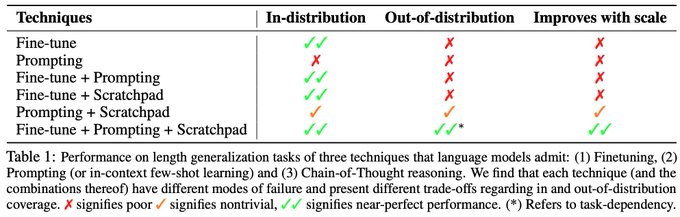

How about few-shot scratchpad, a combo behind many strong LLM results? (e.g. our recent #Minerva)

This leads to **substantial improvements in length generalization!**

In-context learning enables variable length pattern matching, producing solutions of correct lengths.

5/

1

0

15

Highly recommended!

Spending time at Google Blueshift feels like taking a sneak peek into what the AI scene will look like a few years ahead.

Best part, of course, is working closely with a fantastic team!

@bneyshabur @Yuhu_ai_ @guygr @ethansdyer

1

0

13

Relatedly, @dwarkesh_sp asks prescient questions about risks from test-time compute in his latest podcast with @TrentonBricken and @_sholtodouglas.

It’s a fantastic episode, give it a listen!

1

2

10

This was a fantastic collaboration with my amazing co-authors Ashwini Pokle* @ashwini1024, Kaiqu Liang* @kevin_lkq, Johannes Treutlein @JohannesTreutle, Yuhuai (Tony) Wu @Yuhu_ai_, Shaojie Bai @shaojieb, Zico Kolter @zicokolter and Roger Grosse @RogerGrosse.

1

0

6

There’s way more in the paper — check it out if you’re interested!

paper:

Also come say hi at @neurips!

1

0

6

See paper for more - especially our detailed analyses regarding the failure modes of finetuning.

Joint work with my fantastic collaborators @Yuhu_ai_, Anders, @alewkowycz, @vedantmisra, @vinayramasesh, @AmbroseSlone, @guygr, @ethansdyer and @bneyshabur.

8/

1

0

6

@CFGeek

Thanks!

We’re aware of this kind of scaling law for token-wise losses. The first author of the paper you linked is a co-author on ours :)

I should have said few/many shot learning in my tweet above, which has a shared but different problem structure.

2

0

4

@CFGeek

Here’s another paper that we cite that observes a similar token-wise power law trend under the pretraining distribution:

0

0

3

@AnimaAnandkumar

@neurips

Thank you for your comment!

It is very surprising that learning from one-step evolution data alone is enough to predict the long-horizon behaviour of chaotic systems.

Your dissipativity regularizer seems very useful for us as well. Thanks again for the pointer!

0

0

2

@CFGeek

Agreed! Seems quite interesting.

Another similar idea:

Let’s say the function vectors are largely responsible for few-shot learning on simple tasks.

There are not that many attention heads that implement the function vector mechanism afaik. ⬇️

1

0

2

Very interesting work!

New work out on arXiv! Reverse engineering recurrent networks for sentiment classification reveals line attractor dynamics, with fantastic co-authors @ItsNeuronal, @MattGolub_Neuro, @SuryaGanguli and @SussilloDavid. #tweetprint summary below! 👇🏾 (1/4)

3

52

201

0

0

1

@CFGeek

There is a huge overlap between these for sure.

I think the structure and data distribution differ enough that results on one might not generalize readily to the other.

E.g. the task vector mechanism seems fairly specific to few/many shot learning.

1

0

1

@CFGeek

Say we deleted these and only these heads from the network.

1) Do we still get token-wise loss scaling laws under pretraining distr? If so, did the exponent change?

2) Do we still get few-shot learning scaling laws? If so, did the exponent change?

0

0

1

@agarwl_

@hu_yifei

@arankomatsuzaki

Great work, congrats!

Loads of interesting new/complementary findings in there, definitely worth a detailed read.

DM me about Claude research access, will check what’s possible :)

0

0

1

@saakethmm

The num of padding tokens on left/right is indeed random (total num of tokens is fixed) — to train all position embeddings even on short instances.

We add the same num of padding tokens on both the input and scratchpad to keep the distance between the input and scratchpad fixed.

0

0

1
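The padding scheme described in the reply above could be sketched roughly as follows. This is one possible reading, not the authors' implementation: the `PAD` token, the `pad_pair` helper, and the per-segment `width` parameter are all hypothetical. Each segment is padded to the same fixed width using one shared random left offset, so the total length stays fixed and the scratchpad always starts exactly `width` positions after the input does.

```python
import random

PAD = "<pad>"  # hypothetical padding token

def pad_pair(input_toks, scratch_toks, width, rng=random):
    """Pad input and scratchpad to `width` tokens each with a shared random left offset.

    Returns the concatenated sequence and the offset that was drawn.
    """
    longest = max(len(input_toks), len(scratch_toks))
    if longest > width:
        raise ValueError("width is smaller than the longest segment")
    # One random offset, reused for both segments (assumption).
    left = rng.randint(0, width - longest)

    def pad(toks):
        right = width - left - len(toks)  # fill the remainder on the right
        return [PAD] * left + list(toks) + [PAD] * right

    return pad(input_toks) + pad(scratch_toks), left

# Usage: a short instance placed at a random position inside a fixed window.
rng = random.Random(0)
seq, left = pad_pair(list("101"), list("1101"), width=8, rng=rng)
print(left, seq)
```

Because the offset is resampled per example, short instances end up at varying positions in the window, which is consistent with the stated goal of training all position embeddings.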