Yuhuai (Tony) Wu

@Yuhu_ai_

Followers

23,250

Following

416

Media

41

Statuses

387

Co-Founder @xAI. Minerva, STaR, AlphaGeometry, AlphaStar, Autoformalization, Memorizing Transformer.

Stanford

Joined July 2017


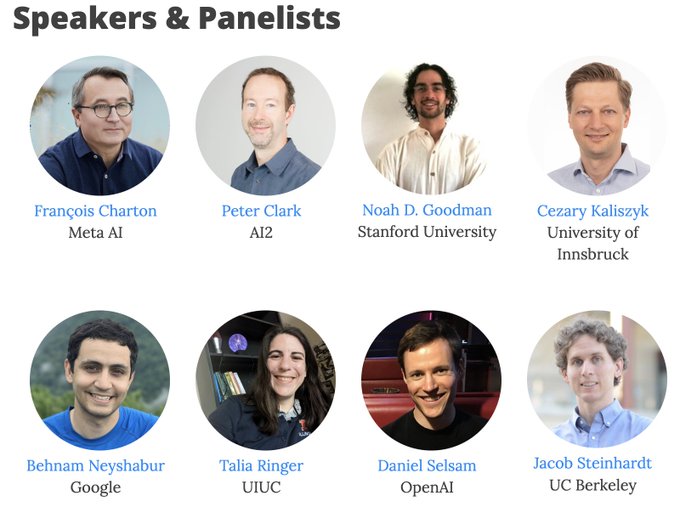

Coming to #NeurIPS23 now. Will be there until Friday night.

DM me to chat about: reasoning, AI for math, and what we’re doing @xai.

Also will be at #MATHAI workshop panel discussion on Friday morning. See you there!

121

151

520

Euclidean geometry problems have been my favorite math puzzles since middle school. The most intriguing part of it is the creation of auxiliary lines, which opens a space for imagination and the freedom to explore various diagrams. Once a proof is found, these auxiliary lines

219

191

946

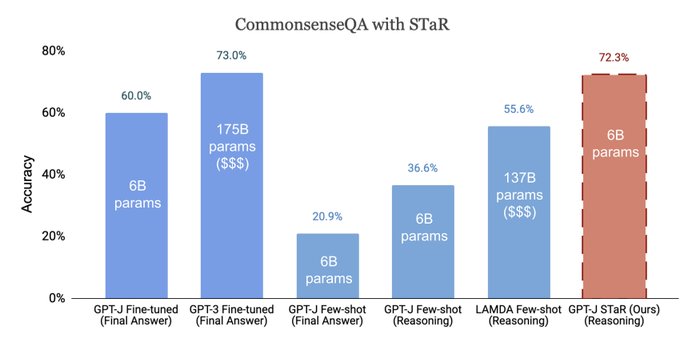

Language models can dramatically improve their reasoning by learning from chains of thought that they generate.

With STaR, just a few worked examples can boost accuracy to that of a 30X larger model (GPT-J to GPT-3).

W.

@ericzelikman

, Noah Goodman

1/

8

93

523

How do you make a transformer recurrent?

You just turn the transformer 90 degrees and apply it in the lateral direction!

Now, with recurrence, the context size is infinite!

Let's make the recurrence great again with Block-Recurrent Transformers:

You think the RNN era is over? Think again!

We introduce "Block-Recurrent Transformer", which applies a transformer layer in a recurrent fashion & beats transformer XL on LM tasks.

Paper:

W. DeLesley Hutchins, Imanol Schlag,

@Yuhu_ai_

&

@ethansdyer

1/

5

68

451

9

41

315

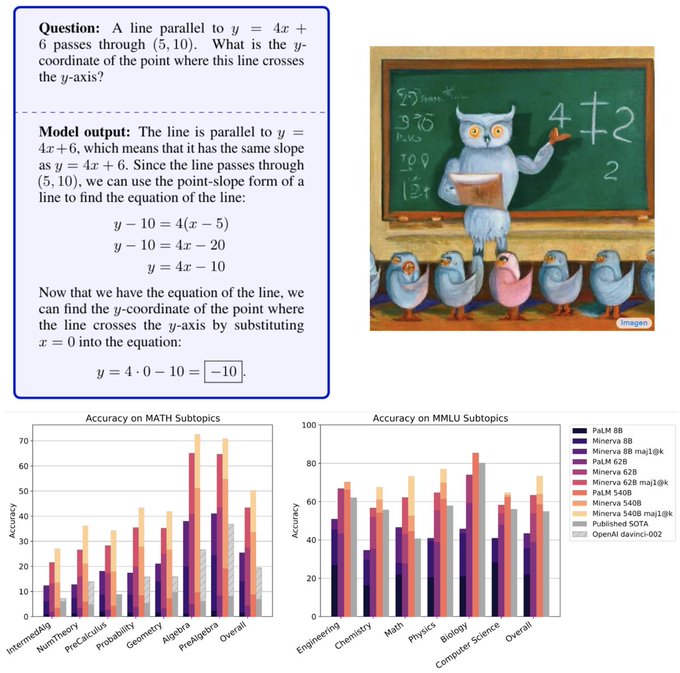

Super excited to share Minerva!! – a language model that is capable of solving MATH with a 50% success rate, which was predicted to happen in 2025 by Steinhardt et al. ()!

#Minerva

1/

4

46

243

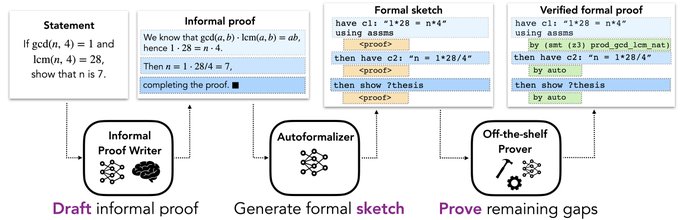

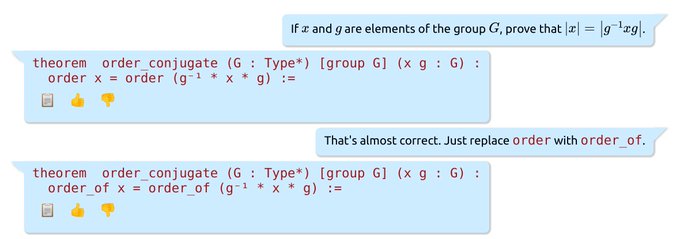

Autoformalization with LLMs in Lean!

@zhangir_azerbay

and Edward Ayers built a chat interface to formalize natural language mathematics in Lean:

Very impressive work!

5

49

193

Hello #NeurIPS2022! I'm in New Orleans and will be here until Thursday morning (Dec 1). Let's brainstorm AI for math, LLMs, Reasoning 🤯🤯!

We'll present 8 papers (1 oral and 7 posters) + 2 at workshops (MATHAI and DRL). Featuring recent breakthroughs in AI for math! See👇

3

18

138

We open sourced Memorizing Transformers () and Block Recurrent Transformers () in Meliad!

Repo link:

2

22

138

Memorizing Transformer's camera ready is released!

Main updates:

1. Adding 8K memory ~ 5X-8X larger model parameters.

2. You can easily turn a pretrained LLM into a memorizing transformer! (4% of pretraining cost to obtain 85% of the benefit)

Thanks a lot to

@Yuhu_ai_

,

@MarkusNRabe

and Delesley Hutchins for their hard work on updating our ICLR paper on retrieval-augmented language modeling, aka "Memorizing Transformer"!

Here is a short thread on why we think this is important.

🧵 1/n

4

41

233

4

18

134

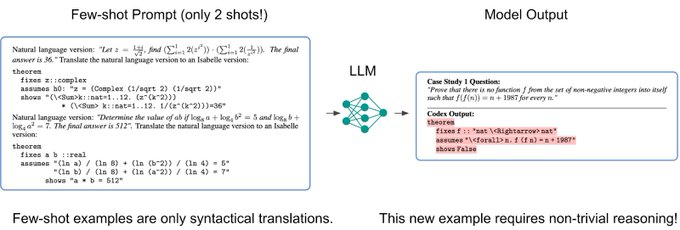

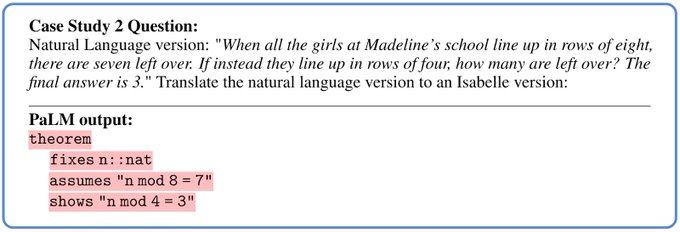

In May, we discovered that LLMs can autoformalize theorem statements:

In June, we showed that LLMs can solve challenging math problems with Minerva.

Now, we show LLMs can turn their generated informal proofs into verified formal proofs!🤯

What's next?😎

2

29

123

Excited to share this new work, which sheds light on the understanding of pre-training via synthetic tasks.

We did three experiments that iteratively simplify pre-training while still retaining gains.

Paper:

W. Felix Li,

@percyliang

.

1/

2

20

112

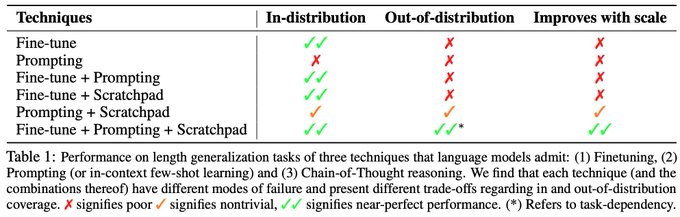

We discover that you can teach LLMs to solve longer problems *only* via in-context learning, instead of fine-tuning.

This is mind-blowing🤯🤯! -- that certain skills are hard to encode in model weights, but much easier to acquire from the context.

2

16

105

Can neural network agents prove theorems outside of the training distribution? We perform a systematic evaluation along 6 generalization dimensions with INT: an inequality theorem proving benchmark:

Joint work with Albert Jiang, Jimmy Ba,

@RogerGrosse

.

0

7

46

If you use K-FAC you only need to do 1 update (ACKTR), but if you use a first-order optimizer, you need to do 320 updates (PPO). AND 1 update by K-FAC still wins. This is what we (with @baaadas) find by comparing ACKTR vs. PPO vs. PPOKFAC.

0

12

38

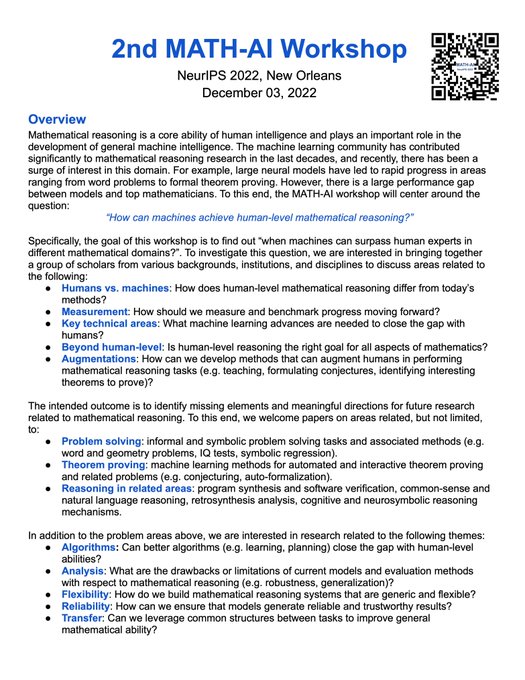

Compared to the 1st MATHAI workshop 1 year ago, the number of submissions this time almost doubled! Glad to see the field is growing rapidly 🙌

Also there are many mind-blowing works 🤯🤯 Stay tuned!

🚨👇Reminder that the submission deadline for the MATH-AI workshop at

#NeurIPS2022

is tomorrow -- Sep 30, 11:59pm PT.

Submit your recent works (e.g. ICLR submissions) if they are about Math&AI, reasoning, algorithmic capabilities!

0

4

20

1

3

36

Two papers in

@ICLR18

:

1. Short horizon bias in meta-learning optimization:

2. RELAX:

One invited to workshop:

3. Exploration in Meta-Reinforcement Learning

0

3

35

Epic night with

@TheGregYang

@jimmybajimmyba

@ChrSzegedy

@PiotrRMilos

@s_tworkowski

@WendaLi8

@ericzelikman

@MarioKrenn6240

and many friends!!

#NeurIPS2022

4

2

33

I'm glad to share that LIME is accepted at #ICML2021! One of the things I like about our publishing process is that there is always the next conference :) If you truly believe in your paper, then it will be published sooner or later! Just keep polishing 🛠️🛠️

@yaringal

We had a paper rejected with 8,7,6,6, with thorough reviews and lots of discussion.

The one-sentence reason for rejection -- that training on data is the wrong way to instill knowledge in an algorithm -- feels like something out of AAAI 1993.

14

24

201

2

2

29

Yeah I am stunned by this. Don't know what to think of it. We have worked so hard on this. Getting rejected by just a one-sentence meta-review, overriding all decisions made by the reviewers, just seems so crazy and unfair.

@yaringal

We had a paper rejected with 8,7,6,6, with thorough reviews and lots of discussion.

The one-sentence reason for rejection -- that training on data is the wrong way to instill knowledge in an algorithm -- feels like something out of AAAI 1993.

14

24

201

1

0

26

Lastly, big thanks to my collaborators: @AlbertQJiang, @WendaLi8, @MarkusNRabe, Charles Staats, @Mateja_Jamnik, @ChrSzegedy!!!

1

3

23

🚨👇Reminder that the submission deadline for the MATH-AI workshop at

#NeurIPS2022

is tomorrow -- Sep 30, 11:59pm PT.

Submit your recent works (e.g. ICLR submissions) if they are about Math&AI, reasoning, algorithmic capabilities!

🚨Call for Papers🚨 Submission to the

#NeurIPS2022

MATH-AI Workshop will be due on Sep 30, 11:59pm PT (2 days after ICLR😆). The page limit is 4 pages (not much workload🤩). Work both in progress and recently published is allowed. Act NOW and see you in

#NewOrleans

!🥳🥳🍻

0

9

26

0

4

20

Join us to work on reasoning with large language models!

0

5

20

I’ve been working with Blueshift on reasoning with LLMs. It’s an amazing team with an ambitious goal, and a group of super smart, talented people.

0

3

16

Excited to share this new work! We trained a GNN-based branching heuristic for model counting. It generalizes to problems of much larger sizes, improving over SOTA by orders of magnitude.

Can neural network agents improve wall-clock performance of propositional model counters? We present Neuro#, a neuro-symbolic solver that can do that:

Joint work w/

@gilled34

,

@Yuhu_ai_

,

@cjmaddison

,

@RogerGrosse

, Edward Lee, Sanjit Seshia, Fahiem Bacchus

0

3

18

0

1

16

@ericzelikman

Human reasoning is often the result of extended chains of thought.

We want to train a model that can generate explicit rationales before answering a question.

The main challenge: most datasets contain only question-answer pairs, not the intermediate rationales.

1

0

14
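
One common way around the missing rationales is to let the model write its own and keep only the ones that reach the labeled answer, then fine-tune on those. The sketch below illustrates just that filtering step under stated assumptions: `collect_rationales` and `toy_generate` are hypothetical names, and `toy_generate` is a stand-in for a real language model, not anything from the thread.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]            # (question, gold answer)
Rationalized = Tuple[str, str, str]  # (question, rationale, answer)


def collect_rationales(
    dataset: List[Example],
    generate: Callable[[str], Tuple[str, str]],
) -> List[Rationalized]:
    """Keep only generated rationales whose final answer matches the gold answer."""
    kept = []
    for question, gold in dataset:
        rationale, answer = generate(question)
        if answer.strip() == gold.strip():
            kept.append((question, rationale, answer))
    return kept


def toy_generate(question: str) -> Tuple[str, str]:
    """Hypothetical stand-in for a model: adds two integers, but errs on sums >= 10."""
    a, b = (int(tok) for tok in question.split("+"))
    total = a + b if a + b < 10 else a + b + 1  # deliberately wrong on larger sums
    return f"{a} plus {b} makes {total}.", str(total)


dataset = [("2+3", "5"), ("7+8", "15"), ("1+1", "2")]

# Only the examples the "model" answered correctly survive the filter.
fine_tuning_set = collect_rationales(dataset, toy_generate)
```

In a real loop the surviving triples would be used to fine-tune the model and the process repeated; that training step is omitted here.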

Great work!!

Autoformalization with LLMs in Lean... for everyone!

The chat interface for autoformalizing theorem statements in Lean built by myself and

@ewayers

is now publicly available as a VS Code extension.

7

30

162

0

0

12

Last but not least, if you are interested in AI for math / reasoning / LLMs, come visit the MATH-AI workshop on Dec 3:

0

1

13

Lastly, I want to thank my amazing collaborators, Eric Zelikman @ericzelikman, and Noah Goodman for this exciting work!

11/

2

1

13

If we can collect the instructions used by humans to fix the formalization, that'd be very valuable.

@XenaProject

@TaliaRinger

.

Autoformalization with LLMs in Lean!

@zhangir_azerbay

and Edward Ayers built a chat interface to formalize natural language mathematics in Lean:

Very impressive work!

5

49

193

1

1

13

"Exploring Length Generalization in Large Language Models" accepted as an *Oral presentation*! We discovered that certain skills are hard to be encoded in model weights, but much easier to be acquired from the context.

5/ 10

1

0

12

People need RELAX!

RELAX! Our new gradient estimator handles discrete variables and black-box functions. Now going to try hard attention, latent graphs, and more RL problems. by amazing students

@wgrathwohl

@chlekadl

@Yuhu_ai_

@geoffroeder

3

151

447

0

4

11

@iandanforth

Hi Ian, thanks for pointing out the Abstraction and Reasoning Challenge. We will take a closer look to see if our model fits!

1

0

12

@ChrSzegedy

@MarkusNRabe

@spolu

@jessemhan

@GuillaumeLample

@f_charton

@AlbertQJiang

@WendaLi8

@DanHendrycks

would love to see you there!

1

0

11

Many thanks to all of my students / collaborators

@AlbertQJiang

@cem__anil

@ericschmidt

@JinPZhou

@s_tworkowski

, Felix Li, Imanol Schlag,

@WendaLi8

, Michał Zawalski, mentors

@ChrSzegedy

@percyliang

@bneyshabur

, and wonderful teammates at N2formal, Blueshift for a fruitful year!

1

0

10

LLMs autoformalize natural language mathematics into formal math code, the first proof-of-concept for autoformalization🤯:

1/10

1

0

11

Camera ready version on short-horizon bias, to appear in #iclr2018. It tells you why you should always start with an aggressive learning rate and then decay. Meta-optimization is hard because the objective is biased. A fantastic collaboration with @mengyer, @RogerGrosse and Renjie.

1

3

10

I was told many cool people are at MATHAI workshop😎

Math-AI workshop starting at room 293-294 with

@lupantech

@wellecks

@Yuhu_ai_

@HannaHajishirzi

@percyliang

#NeurIPS2022

#NLProc

0

4

28

0

2

10

Minerva attains grades higher than average high school students and surpasses timeline prediction by 3 years:

3/10

1

0

9

Great opportunity to work on LLM reasoning with amazing people!

0

1

9

"Fast and Precise: Adjusting Planning Horizon with Adaptive Subgoal Search" accepted to DRL workshop:

10/10

1

0

9

A very cool work on natural language theorem proving from @wellecks et al.!

It's nice to see lots of observations are shared between informal and formal math proving: the importance of premise selection, failure cases etc.

Looking forward to combining the best of both worlds!

1

1

9

Looking forward to our discussion on Mathematics and AI tomorrow at #ICML2022!

We're also very excited to be hosting a panel discussion on the nexus of AI and Mathematics from 3:45-4:30pm ET featuring

@Yuhu_ai_

@PetarV_93

@TaliaRinger

and

@ibab_ml

!

1

2

7

1

4

8

Extrapolating to more difficult problems via equilibrium models:

9/10

1

0

9

Very nice work! Automating prompt engineering for the win!

APE generates “Let’s work this out in a step by step way to be sure we have the right answer”, which increases text-davinci-002’s Zero-Shot-CoT performance on MultiArith (78.7 -> 82.0) and GSM8K (40.7->43.0). Just ask for the right answer?

@ericjang11

@shaneguML

3

14

101

0

1

9

LLMs are not good at premise selection in theorem proving due to their limited context window. Thor addresses this by combining LLMs with symbolic AI (Sledgehammer) to achieve SOTA:

6/10

1

0

8

Three experiments iteratively simplify pre-training, shedding light on the understanding of pre-training via synthetic tasks.

8/10

Excited to share this new work, which sheds light on the understanding of pre-training via synthetic tasks.

We did three experiments that iteratively simplify pre-training while still retaining gains.

Paper:

W. Felix Li,

@percyliang

.

1/

2

20

112

1

0

8

We recently worked on extracting datasets for training neural theorem provers for Lean. Our model can prove 35.9% of test theorems.

Check out the following demo! We created a tool for querying a 3B GPT model when writing math proofs in VS Code.

#InteractiveNeuralTheoremProving

1

1

8