Gabriel Ilharco

@gabriel_ilharco

Followers: 4,141 · Following: 1,270 · Media: 59 · Statuses: 451

Building cool things @xAI. Prev. PhD at UW, Google Research

Palo Alto, CA

Joined September 2015


As good a time as any to say I recently graduated and joined @xAI.

It’s going to be an exciting year, buckle up =)


Grok is going multimodal!

It’s incredible to see how fast a small, focused team can move. Kudos to the amazing team @xAI that made this possible.


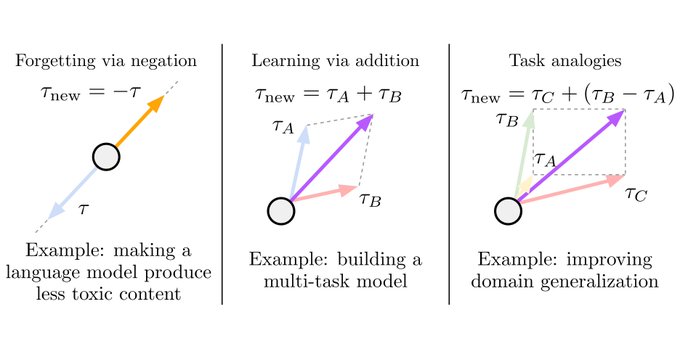

Instead of a single neural network, why not train lines, curves and simplexes in parameter space?

Fantastic work by @Mitchnw et al. exploring how this idea can lead to more accurate and robust models:
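The tweet only names the idea. Below is a minimal NumPy sketch of training a "line" of models, under simplifying assumptions: the model is linear least squares rather than a neural network, and `train_line`, `mse` and all hyperparameters are hypothetical choices for illustration, not the paper's code. At each step a point `a` is sampled uniformly on the segment between two endpoint weight vectors, the loss is evaluated at the interpolated weights, and the chain rule splits the gradient between the endpoints in proportions `1 - a` and `a`. The published method includes further details not reproduced here.

```python
import numpy as np

def train_line(X, y, steps=2000, lr=0.05, seed=0):
    """Train endpoints w1, w2 so that every point (1 - a) * w1 + a * w2
    on the segment between them fits a least-squares problem.
    Hypothetical helper: a toy stand-in for training a neural network line."""
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    # Independent random initializations for the two endpoints.
    w1 = rng.normal(size=d)
    w2 = rng.normal(size=d)
    n = len(y)
    for _ in range(steps):
        a = rng.uniform()                      # sample a point on the line
        w = (1 - a) * w1 + a * w2              # interpolated weights
        grad_w = 2.0 / n * X.T @ (X @ w - y)   # d(mean squared error)/dw
        # Chain rule: dw/dw1 = (1 - a) and dw/dw2 = a.
        w1 -= lr * (1 - a) * grad_w
        w2 -= lr * a * grad_w
    return w1, w2

def mse(X, y, w):
    """Mean squared error of linear weights w on (X, y)."""
    return float(np.mean((X @ w - y) ** 2))
```

On a convex toy problem like this one the two endpoints simply converge to the same solution; the diverse, accurate models along a line are what the work studies for non-convex neural network losses.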


Another breakthrough in CLIP models, powered by better datasets. Great job @Vaishaal, @AlexFang26 and team!

Paper:


🚀Big updates to OpenCLIP! We now support over 100 pretrained models, and many other goodies. Check it out!

v2.23.0 of OpenCLIP was pushed out the door! Biggest update in a while, focused on supporting SigLIP and CLIPA-v2 models and weights. Thanks @gabriel_ilharco @gpuccetti92 @rom1504 for help on the release, and @bryant1410 for catching issues. There's a leaderboard csv now!


A surprisingly simple way to improve generalization when fine-tuning: combine the weights of zero-shot and fine-tuned models.

We find significant improvements across many datasets and model sizes, at no additional computational cost at fine-tuning or inference time!
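A minimal sketch of the weight combination the tweet describes, assuming the zero-shot and fine-tuned models share an architecture and that their parameters are held as dicts of NumPy arrays (a stand-in for a framework state dict). `interpolate_weights` and the mixing coefficient `alpha` are illustrative names, not a published API.

```python
import numpy as np

def interpolate_weights(zero_shot, fine_tuned, alpha):
    """Return (1 - alpha) * zero_shot + alpha * fine_tuned for every parameter.

    Both arguments map parameter names to arrays of identical shape
    (hypothetical format). alpha = 0 recovers the zero-shot model and
    alpha = 1 recovers the fine-tuned model.
    """
    if zero_shot.keys() != fine_tuned.keys():
        raise ValueError("models must have the same parameter names")
    return {
        name: (1.0 - alpha) * zero_shot[name] + alpha * fine_tuned[name]
        for name in zero_shot
    }
```

Because the mixing happens once, after fine-tuning, the result is a single model with the original parameter count, which is consistent with the claim of no extra cost at fine-tuning or inference time. Choosing `alpha` is left as a tuning decision in this sketch.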


New paper out!

In NLP, fine-tuning large pretrained models like BERT can be a very brittle process. If you're curious about this, this paper is for you!

Work with the amazing @JesseDodge, @royschwartz02, Ali Farhadi, @HannaHajishirzi & @nlpnoah

1/n


We are hosting a tutorial on High Performance NLP at #emnlp2020, covering a bunch of fun stuff in efficiency!

Our first live Q&A session starts in ~1h!

Slides:

With the amazing Cesar Ilharco, @IuliaTurc, @Tim_Dettmers, Felipe Ferreira and @kentonctlee.


Thrilled that our paper got honorable mention for Best Paper Award for Research Inspired by Human Language Learning and Processing! @conll2019 #emnlp2019


None of this would be possible without my amazing collaborators, so huge thanks to @marcotcr, @Mitchnw, @ssgrn, @lschmidt3, @HannaHajishirzi and Ali Farhadi!

Check out our paper and code at

📜:

🖥️:

(n/n)


If you're interested in the next generation of vision datasets, don't miss our workshop today at #ICCV2023!


I recently had my last day as a @GoogleAI Resident. It has been an amazing year and I'm very thankful to @jasonbaldridge, @vihaniaj, @alex_y_ku, @quocleix and other collaborators for teaching me what no book can and making me fall in love with doing research.


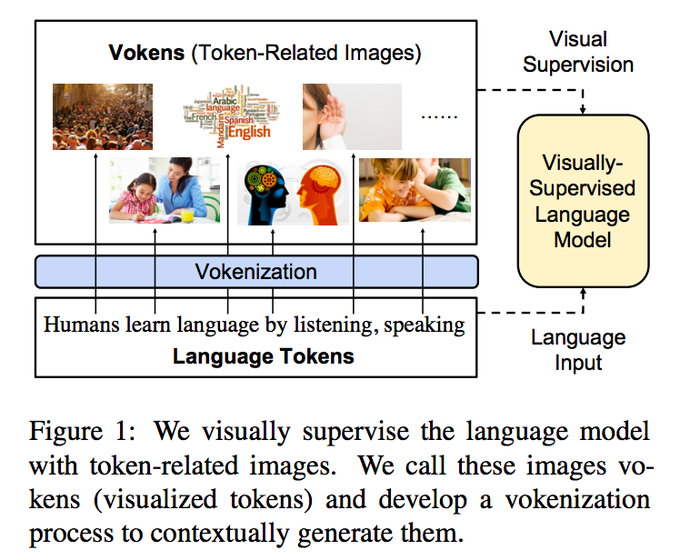

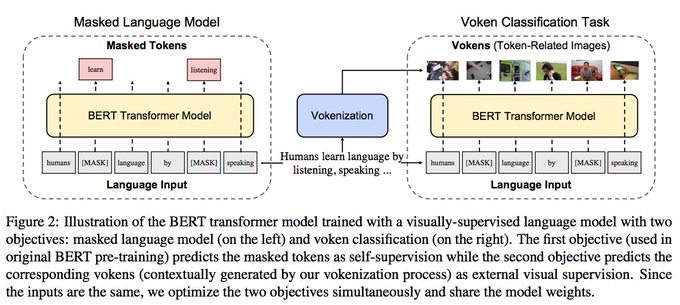

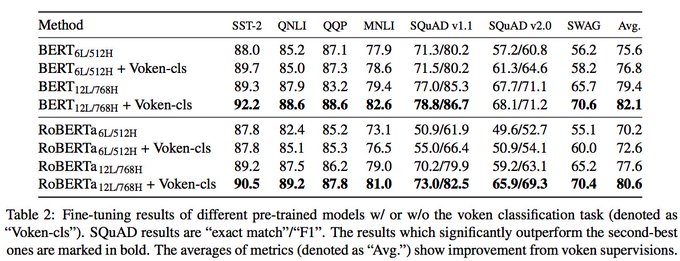

Whoa, this is really cool!

Text-only models often outperform text+vision models in text-only tasks, given the statistical discrepancies in the language used in these domains.

"Vokenization" is a neat way to get some grounded supervision without paying the domain shift price.

*Vokenization*: a visually-supervised language model attempt in our #emnlp2020 paper: (w. @mohitban47)

To improve language pre-training, we extrapolate multimodal alignments to lang-only data by contextually mapping tokens to related images ("vokens") 1/4


Large-scale image-text datasets like LAION or DataComp are heavily filtered.

Instead of throwing millions of images away, can we make use of them via image captioning models?

Check out this very cool work led by @thao_nguyen26! 👇


This massive effort was made possible thanks to the work of many: @sy_gadre, @AlexFang26, @JonathanHayase, Georgios Smyrnis, @thao_nguyen26, Ryan Marten, @Mitchnw, Dhruba Ghosh, @JieyuZhang20, Eyal Orgad, @rahiment, @giannis_daras, @sarahmhpratt, @RamanujanVivek, ...


Researcher 1: we should show that our system is robust

Researcher 2: how about we simulate what would happen if a giraffe tried to eat the cube?

Researcher 1: excellent idea


@peterjliu A lot of it is data! E.g. in DataComp we saw big gains with better datasets without changing the training recipe


... @YonatanBitton, @Kalyani7195, @MussmannSteve, @rvencu, @mehdidc, @RanjayKrishna, @PangWeiKoh, @osaukh, Alexander Ratner, @SongShuran, @HannaHajishirzi, Ali Farhadi, @rom1504, @sewoong79, @AlexGDimakis, @JJitsev, @Vaishaal, Yair Carmon and @lschmidt3


If you're at @ACL2019_Italy and multimodal learning and natural language grounding interest you, come check out our presentation!


While I'm sad to leave this incredible environment, I'm very excited to be joining @uwcse as a PhD student this Fall!


1) Image Transformer (2018), by @nikiparmar09, @ashVaswani, @kyosu, @lukaszkaiser, Noam Shazeer, @alex_y_ku, @dustinvtran.

Local attention at the pixel level for image generation and super-resolution

Link:


@ericjang11 @colinraffel Some of our recent papers that might interest you!

Merging a finetuned and a pretrained model:

Merging models finetuned on the same task:

Merging models finetuned on different tasks:


2) Attention Augmented CNNs (2019), by @IrwanBello, @barret_zoph, @ashVaswani, Jonathon Shlens, @quocleix

Augmenting CNNs with self-attention yields considerable improvements on ImageNet

Link:


Check out model soups, a new recipe for fine-tuning! 🍜

Our recipe leads to 90.98% accuracy on ImageNet when fine-tuning BASIC
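The tweet does not spell out the recipe. As a hedged sketch: model soups average the weights of several models fine-tuned from the same pretrained initialization (for example with different hyperparameters), either uniformly or greedily, where a model joins the soup only if held-out accuracy does not drop. The code below shows both variants over parameter dicts of NumPy arrays; `average`, `greedy_soup` and the `evaluate` callback (a stand-in for held-out evaluation) are hypothetical names, not the paper's code.

```python
import numpy as np

def average(models):
    """Uniform soup: element-wise mean of each parameter across models."""
    return {name: np.mean([m[name] for m in models], axis=0) for name in models[0]}

def greedy_soup(models, evaluate):
    """Greedy soup: visit models from best to worst held-out score and keep
    each one only if adding it does not lower the soup's held-out score.

    `evaluate` maps a parameter dict to a score where higher is better.
    """
    ranked = sorted(models, key=evaluate, reverse=True)
    ingredients = [ranked[0]]
    best = evaluate(average(ingredients))
    for candidate in ranked[1:]:
        score = evaluate(average(ingredients + [candidate]))
        if score >= best:
            ingredients.append(candidate)
            best = score
    return average(ingredients)
```

Like a single fine-tuned model, the resulting soup has one set of weights, so inference cost does not grow with the number of ingredients.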


Packed room today at our #KDD tutorial on Deep Learning for #NLProc with #TensorFlow! Great to talk to such a broad and intelligent audience!


It has been incredibly fun to put this together!

Huge shout-out to the amazing people involved: @Mitchnw, Nicholas Carlini, @rtaori13, Achal Dave, @Vaishaal, John Miller, Hongseok Namkoong, @HannaHajishirzi, Ali Farhadi & @lschmidt3. Special thanks to @_jongwook_kim and @AlecRad.


This is a big step towards democratizing access to large models. Congrats on the great work, Tim!


6) DETR: End-to-End Object Detection with Transformers (2020), by @alcinos26, @fvsmassa, @syhw, Nicolas Usunier, @kirillov_a_n, @szagoruyko5

Object detection as a set prediction problem and a transformer on top of a CNN backbone

Link:


7) Group Equivariant Stand-Alone Self-Attention for Vision (2020), by @davidwromero, @jb_cordonnier

Self-attention with equivariance to arbitrary symmetries by carefully defining the positional encodings

Link:


If you haven't been following it, @wightmanr, @CadeGordonML and others have been doing amazing work with the OpenCLIP library!

They recently trained two ViT models on LAION-400M, the first large-scale, open-source CLIP models where the data is also publicly available!


5) Axial-DeepLab (2020), by Huiyu Wang, Yukun Zhu, Bradley Green, Hartwig Adam, @YuilleAlan, Liang-Chieh Chen

Factorizing 2-d attention into two faster 1-d operations. Strong results on classification and segmentation

Link:
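A simplified NumPy sketch of the factorization described above: instead of letting every pixel attend to all H·W positions, attend along the height axis and then along the width axis, which lowers the per-layer cost from O((HW)²) to O(HW(H+W)) while two passes still let information reach every position. For brevity this sketch assumes queries, keys and values are the input itself; it omits learned projections, multiple heads and the position-sensitive terms Axial-DeepLab adds. `attend_along` and `axial_attention` are hypothetical names.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attend_along(x, axis):
    """Self-attention restricted to one spatial axis of an (H, W, C) map.

    Each position attends only to positions sharing its other coordinate:
    its column when axis=0, its row when axis=1. Q = K = V = x here.
    """
    x_t = np.moveaxis(x, axis, -2)                  # (..., L, C), L = attended axis
    scores = x_t @ np.swapaxes(x_t, -1, -2) / np.sqrt(x.shape[-1])  # (..., L, L)
    out = softmax(scores, axis=-1) @ x_t            # (..., L, C)
    return np.moveaxis(out, -2, axis)               # back to (H, W, C)

def axial_attention(x):
    """Height-axis attention followed by width-axis attention."""
    return attend_along(attend_along(x, axis=0), axis=1)
```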


Fantastic work by @OfirPress, @nlpnoah and @ml_perception showing when it's helpful to use shorter sequences for language modeling!

Thread 👇


More details in the great thread below by @Mitchnw.

We added a number of new experiments and results in our paper, including additional models such as ALIGN and BASIC, along with further discussions on the role of hyperparameters.


If you're interested in robustness, don't miss @anas_awadalla's great work! Tons of models and evaluations, and lots of practical insights!
