niki parmar

@nikiparmar09

Followers

9,954

Following

810

Media

7

Statuses

189

I’m excited to announce our new startup Adept with the mission to build useful general intelligence. We are a research and product lab that is enabling humans and computers to work together collaboratively.

8

7

335

Our paper, Image Transformer got accepted to ICML! 💃🏻💃🏻💃🏻

4

18

199

Our paper got accepted to #Neurips!!

Code release coming soon, keep an eye out :)

2

26

197

Our paper "Attention Is All You Need" gets SOTA on WMT!!! Faster to train, better results #transformer

6

39

178

ACT-1 : Transformers that take actions for you in your browser and sheets. Check out some exciting examples below 👇👇

6

12

158

Check out an early preview of the models we are building at

The future of interaction with computers is going to be massively redefined, starting with a few tools to anything you do with them. Come shape this future at @AdeptAILabs - we are hiring.

7

3

102

Check out this paper on how de-noising decoders are useful for downstream vision tasks like segmentation. I'm excited about the future of a single, unified model across modalities and tasks.

1

11

99

Hilarious. Machine learning course ads appearing at the back of rickshaws (3 wheeled auto) in 🇮🇳! 😂😂

2

11

79

We presented our work on Image Transformer today at ICML’2018. We show self attention based techniques for Image Generation. Great experience with super colleagues @ashVaswani and @dustinvtran and others :)

1

1

80

tl;dr Check out our new results on Non Autoregressive MT!! We come very close to a greedy Transformer baseline while being >3x faster!

We develop a non-autoregressive machine translation model whose accuracy almost matches a strong greedy autoregressive baseline Transformer, while being 3.3 times faster at inference. Joint work with @ashVaswani @nikiparmar09 Aurko Roy

2

50

209

0

9

45

Mesh TensorFlow: A sneak peek of what we've been working on with Noam Shazeer, @topocheng and others, to make model parallelism easier to scale to really large models. More details to come soon..

1

2

42

Pre-training on large amounts of text using a Transformer and then performing similarly or better on a whole range of NLP tasks with little fine-tuning! Impressive results, showing again that increasing compute to train bigger pre-trained models is helpful across various tasks.

0

14

39

This particular example that generates a 2min long video based on a changing story is really cool

Congrats to all the authors!

1

3

33

Come by our talk and poster today to hear more about Image Generation using Self Attention. Paper:

#ICML2018

Check out the Image Transformer by @nikiparmar09 @ashVaswani others and me. Talk at 3:20p @ Victoria (Deep Learning). Visit our poster at 6:15-9:00p @ Hall B #217!

0

31

119

1

4

32

Super excited to be on this journey with @ashVaswani along with our incredible team @mrinal_iyer_01, @ag_i_2211, @samcwl, @andrewhojel and Varun Desai.

We believe that a small, focused team of motivated individuals can create outsized breakthroughs. If you want to work on some

2

1

29

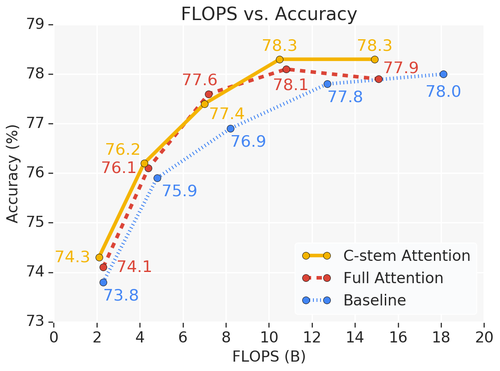

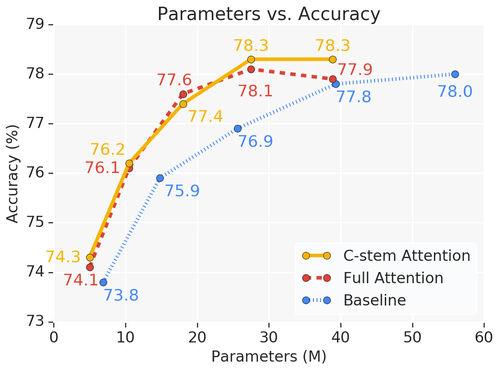

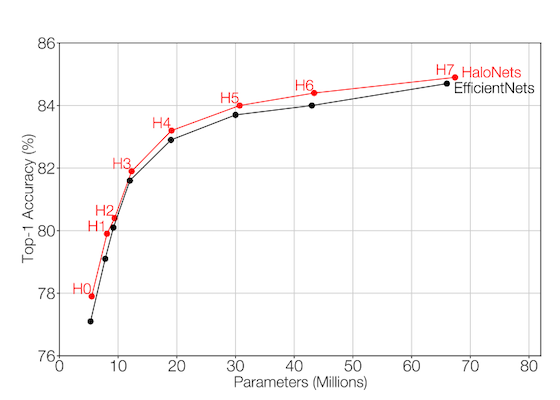

Check out HaloNets, local self-attention that is efficient and gets strong results on ImageNet!

*Faster runtimes

*Parameter efficient

*85.6% Top-1 Accuracy

0

1

31

I am at #NeurIPS18 in Montreal. PM me if you’d like to talk about Generative Models, Model Parallelism or anything else!

We will be presenting our poster on Mesh TensorFlow along with @topocheng on Dec 4th, Poster Session A, #136.

0

0

25

@ashVaswani @mrinal_iyer_01 @ag_i_2211 @samcwl @AndrewHojel

We are thrilled to be partnering with @MarchCPs @ThriveCapital, @amd, Franklin Venture Partners (@FTI_US), Google, KB Investment, @nvidia and the support from our angels, @amasad, @altcap, @w_conviction, @eladgil, Francis deSouza, David H. Petraeus, @GSapoznik, @jwmontgomery,

1

1

24

Great work! Text to text Transformer with a masking loss does better than other Transfer learning techniques.

0

5

23

Amazing results by OpenAI on using the GPT Language Model across so many tasks!

It's surprising to see how far simple unsup LM models, with scale, can take us :)

0

2

14

Thanks Nathan, excited to have you on this journey!

Enter @AdeptAILabs and its brilliant co-founders @jluan and @nikiparmar09 and @ashVaswani, who are building machines to work together with people in the driver's seat: discovering new solutions, enabling more informed decisions, and giving us more time for the work we love.

2

0

14

2

0

13

@ashVaswani @mrinal_iyer_01 @ag_i_2211 @samcwl @AndrewHojel @MarchCPs @ThriveCapital @AMD @FTI_US @nvidia @amasad @altcap @w_conviction @eladgil @GSapoznik @jwmontgomery

Follow @essential_ai for future updates and announcements!

1

0

11

We are hosting a competition to build efficient neural networks at NeurIPS’19. Submit your entries to help design future hardware and models!

0

2

11

Open-source version of our latest models (Transformer, SliceNet etc)

Announcing the Tensor2Tensor open-source system for training a wide variety of deep learning models in #TensorFlow

6

500

840

1

0

11

Also attaching our poster from #NeurIPS18

“Mesh-TensorFlow: Deep Learning for Supercomputers” by Noam Shazeer, @topocheng, @nikiparmar09 and others from @GoogleAI. Accepted by #NeurIPS2018

Read the full paper at

0

1

3

1

1

8

Useful application of model-parallelism for large inputs.

A nice collaboration between @GoogleAI researchers and engineers and @MayoClinic to use Mesh TensorFlow to deal with very high resolution images that show up when doing machine learning on some kinds of medical imaging data.

0

34

218

0

0

7

Simulation of Universe unfolding using MeshTF

The Universe in your hand 🔭💫

Learn how researchers from @UCBerkeley and @NERSC are using a new simulation code, FlowPM, to create fast numerical simulations of the Universe in TensorFlow.

Read the blog →

0

55

205

0

0

6

To all the women sharing their stories, #MeToo. I literally don't know any women who haven't experienced this.

0

0

6

My fantastic peer group who support me and amaze me! Including @KathrynRough, @timnitGebru, Victoria Fossum and many more!

@JeffDean s/o to the amazing women I get to work with who inspire and support me. I’ve had so many fantastic mentors, and wanted to highlight how important my peer group has been for my everyday @huangcza @nikiparmar09 @ermgrant @annadgoldie @yasamanbb @catherineols @collinsljas, & more!

1

2

17

1

0

5

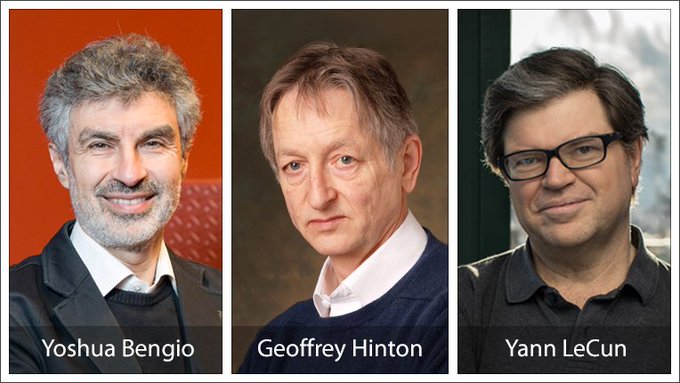

Congratulations @geoffreyhinton. This is awe-inspiring.

Yoshua Bengio, Geoffrey Hinton and Yann LeCun, the fathers of #DeepLearning, receive the 2018 #ACMTuringAward for conceptual and engineering breakthroughs that have made deep neural networks a critical component of computing today.

28

1K

3K

0

0

4

@rsprasad @Google @GoogleIndia @PMOIndia @PIB_India @_DigitalIndia @MEAIndia @vijai63 @ofbjp_usa @sundarpichai @RajanAnandan @DDNewsLive

I saw you outside the office yesterday. I work at Google and am interested in solving problems in weather and farming using AI. I need help with data and resources. Can we connect?

0

0

3

@jekbradbury The ImageNet experiments are also at 32x32 resolution. The current fully autoregressive nature prevents us from going to bigger images. But we want to expand this to bigger images and stronger conditioning in the future.

0

0

3

@prafdhar Congratulations! This is very impressive. Why are the last 5 pixels out of place? Is it the model signature :)

1

0

3

@MortezDehghani @USC @GoogleBrain I wish I could :) really packed on time, spent the day at ISI. Next time around, I’ll plan well in advance and inform you. Apologies :)

0

0

1

Reviews on #iPhonex after 2 weeks of use

Good: amazing camera, colors and sharpness brilliant, good battery life, Face ID unlock works mostly

Bad: charging slow, feels a bit heavy, Face ID struggles in low battery mode, when using it in bed and when phone is a bit further away

1

0

1

@aertherks We train both the baseline and our model for the same number of epochs with the same learning rate and scheme and other regularizations.

1

0

0

@kalpeshk2011 @MohitIyyer @andrewmccallum @HamedZamani @YejinChoinka @Google Congratulations, Kalpesh! 🎓

0

0

1

@VFSGlobal I keep getting a "User already logged in" or "data not found" error. It's hard to navigate your website and follow the steps.

1

1

1