Jack Clark

@jackclarkSF

Followers: 78K | Following: 33K | Media: 1K | Statuses: 32K

@AnthropicAI, ONEAI OECD, co-chair @indexingai, writer @ https://t.co/3vmtHYkIJ2 Past: @openai, @business @theregister. Neural nets, distributed systems, weird futures

San Francisco, CA

Joined October 2009

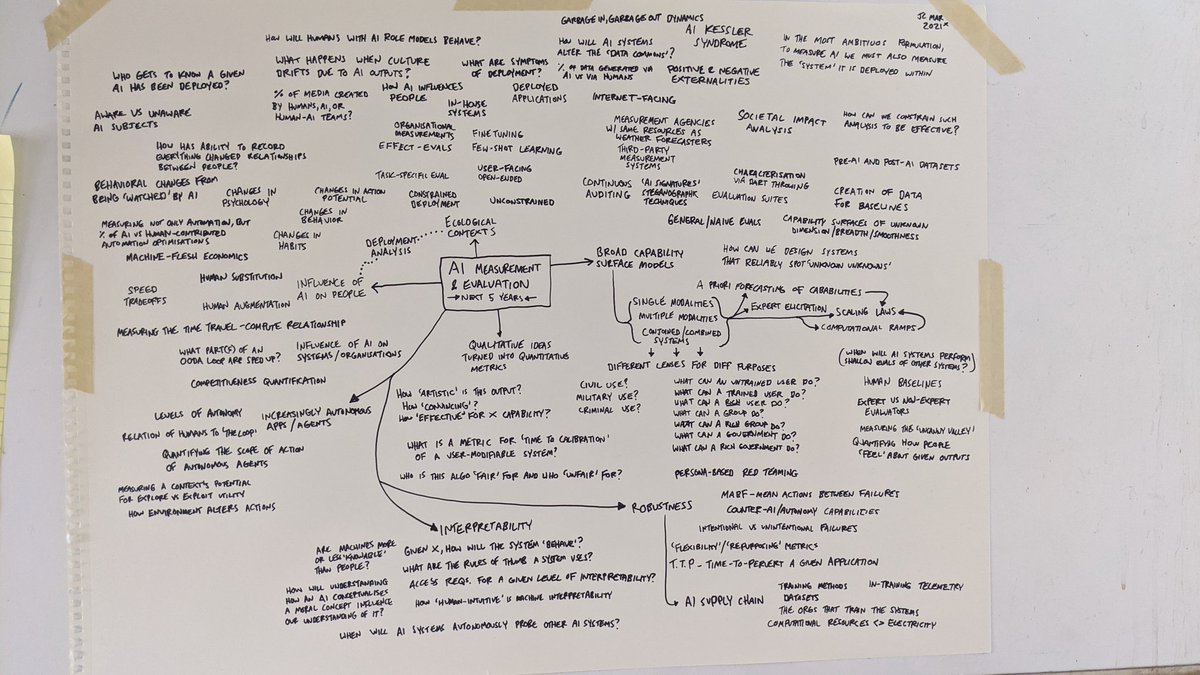

Here’s what I’ve been working on recently: @anthropicai. I’ll be spending a lot of my time on measurement and assessment of our AI systems, as well as thinking of ways govs/others can assess AI tech. There’s a lot to do!

Stable Diffusion: $600k to train. I'm impressed and somewhat surprised - I figured it'd have cost a bunch more. Also, AI is going to proliferate and change the world quite quickly if you can train decent generative models with less than $1m.

@KennethCassel We actually used 256 A100s for this per the model card, 150k hours in total, so at market price $600k.

Excited to release my major research breakthrough, the 3D Transformer.

Try our live demo for DreamGaussian to get your 3D model in under two minutes. Thanks to @huggingface for the free GPU grant!

Google CEO writes letter re @timnitGebru. Sundar: "learning from our experiences like the departure of Dr. Timnit Gebru". Translated Sundar: "analyzing why I let us fire Timnit Gebru and am now desperately trying to position myself as a bystander".

Note that the reason The Register got this monster market-moving story was because it employs (and trains) extremely technical journalists. If you don't understand tech you get lied to. If you can read code it's way harder to get lied to. Other news orgs should follow!

Intel CPUs have a security bug that's forced Linux, Windows kernel redesigns. The fix was almost named FUCKWIT

Today, I testified to the U.S. Senate Committee on Commerce, Science, & Transportation @commercedems. I used an @AnthropicAI language model to write the concluding part of my testimony. I believe this marks the first time a language model has 'testified' in the U.S. Senate.

You're saying these things are dumb? People are making the math-test equivalent of a basketball eval designed by NBA All-Stars because the things have got so good at basketball that no other tests stand up for more than six months before they're obliterated.

AI skeptics: LLMs are copy-paste engines, incapable of original thought, basically worthless. Professionals who track AI progress: We've worked with 60 mathematicians to build a hard test that modern systems get 2% on. Hope this benchmark lasts more than a couple of years.

I typically stay out of stuff like this, but I'm absolutely shocked by this email. It uses the worst form of corporate writing to present @timnitGebru's firing as something akin to a weather event - something that just happened. But real people did this, and they're hiding.

I've moved on from OpenAI to work on something new with some colleagues. I'm also going to be continuing a lot of my work on technology assessment with @indexingai and the @OECD, and am very excited about stuff in the pipeline there!

New jobs announced for @AndrewYNg's startup. The description makes the jobs seem anti-people-with-families. Horrible message.

Want to grow your career? We're finally hiring! Thanks also to everyone who'd previously tried to volunteer. :)

Current top entry on the @OpenAI Retro Contest Leaderboard has learned to glitch through a test level. Devious RL!

The Future of Computers Is the Mind of a Toddler http://t.co/VhMZFGFarM - aka, how the next generation of AI will have common sense.

It's covered a bit in the above podcast by people like @katecrawford - there are huge implications to industrialization, mostly centering on who gets control of the frontier when the frontier becomes resource-intensive. So far, control is accruing to the private sector (uh oh!)

Stability AI (the people behind Stable Diffusion and an upcoming Chinchilla-optimal code model) now have 5,408 GPUs, up from 4,000 earlier this year, per @EMostaque in a Reddit AMA.

People in AI like to complain about the standard of journalistic coverage of AI. It is therefore v confusing to me that #NeurIPS2018 has banned journalists from attending workshops. That's where the debates and new stuff are. How do we get better coverage without sharing more?

@DanielColson6 @OpenAI @GoogleDeepMind @AnthropicAI @TIME One thing I regularly tell journalists who ask me for story ideas is that they should take the AGI aspirations of the frontier labs literally, rather than covering AGI as a kind of laughable marketing stunt.

Here's a system that beats Stratego, a game with complexity far, far higher than Go.

Mastering the Game of Stratego with Model-Free Multiagent Reinforcement Learning. abs: introduce DeepNash, an autonomous agent capable of learning to play the imperfect information game Stratego from scratch, up to a human expert level.

Here's MINERVA, which smashes prior math benchmarks by double-digit percentage-point improvements.

Language models have shown strong performance on a variety of #NLU tasks but are weaker at solving tasks that involve quantitative reasoning. Learn how #Minerva uses step-by-step reasoning to achieve a new state of the art on quantitative reasoning tasks→

Will write something longer, but if the best ideas for AI policy involve depriving people of the 'means of production' of AI (e.g., H100s), then you don't have a hugely viable policy. (I 100% am not criticizing @Simeon_Cps here; his tweet highlights how difficult the situation is.)

Wow guys, Falcon-40B (SOTA open-source, probably already dangerous from a misuse perspective) has been trained with ~400 A100s over 2 months😮. It means that if we want to prevent a random org from training a SOTA open-source system in less than 1y and releasing or leaking it bc.

@LondonBreed @OpenAI Hiya, I worked at OpenAI for many years and I've got to say that some of my colleagues didn't feel safe walking home at night because of how dangerous chunks of the mission were. I think SF got extremely lucky with OpenAI but you really need to get a handle on crime and housing.

Congratulations to PrimeIntellect on completing a 10B decentralized training run. Decentralized training is one of the most important things to track from an AI policy POV - most efforts to control compute rest on the assumption that strategic AI compute needs to be centralized.

Releasing INTELLECT-1: We’re open-sourcing the first decentralized trained 10B model:
- INTELLECT-1 base model & intermediate checkpoints
- Pre-training dataset
- Post-trained instruct models by @arcee_ai
- PRIME training framework
- Technical paper with all details
