Ted Xiao

@xiao_ted

Followers

13K

Following

6K

Media

213

Statuses

1K

Robotics and Gemini @GoogleDeepMind. Posts about large models, robot learning, and scaling. Opinions my own.

San Francisco

Joined October 2013

New phenomenon appearing: the latest generation of foundation models often switch to Chinese in the middle of hard CoT thinking traces. Why? AGI labs like OpenAI and Anthropic utilize 3P data labeling services for PhD-level reasoning data for science, math, and coding; for.

Why did o1 pro randomly start thinking in Chinese? No part of the conversation (5+ messages) was in Chinese. very interesting. training data influence

25

45

439

@aidangomezzz you, a heathen, cheering mindlessly when loss go down. me, an X-risk chad, carefully measuring each gradient by hand to make sure it's not over the proscribed limit to prevent FOOM. we are not the same.

3

14

376

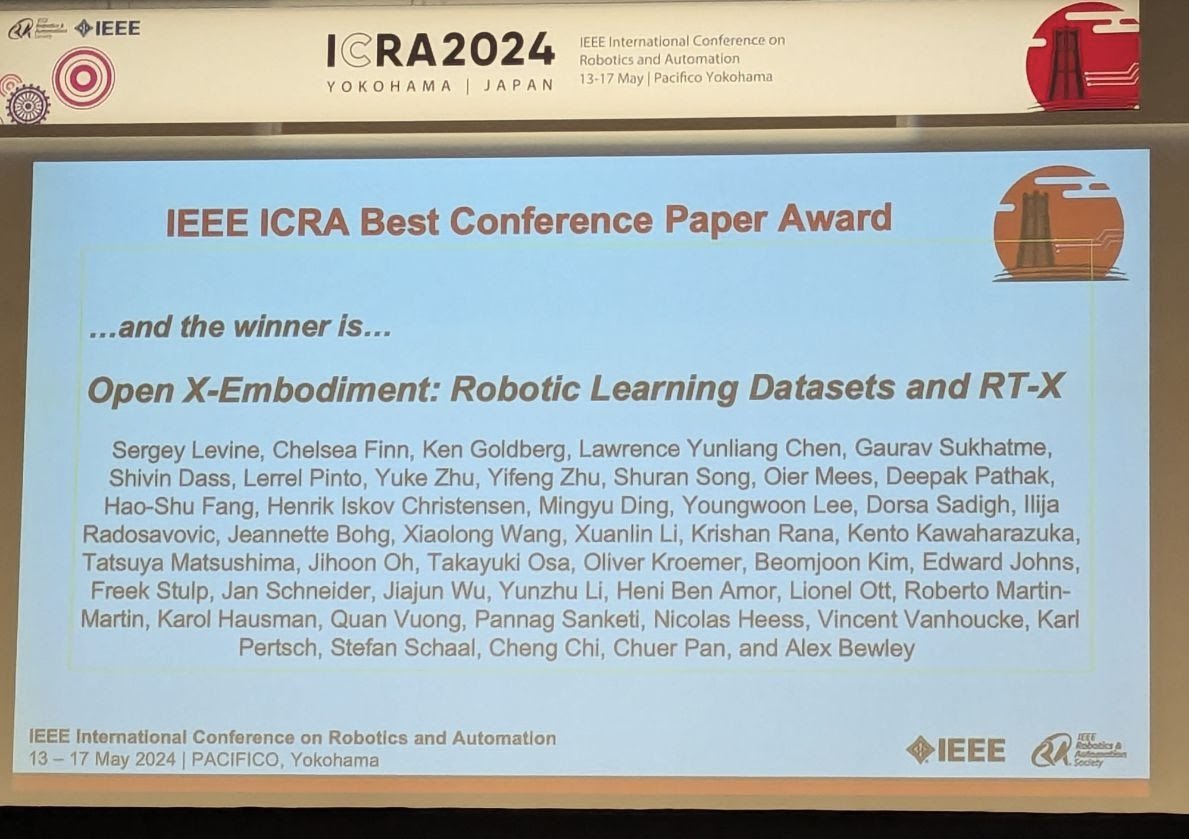

Open X-Embodiment wins the Best Paper Award at #ICRA2024 🎉🤖! An unprecedented Best Paper 170+ author list (most didn’t fit on the slide) may be a record for ICRA! So amazing to see what a collaborative community effort can accomplish in pushing robotics + AI forward 🚀

5

31

187

🚨In case you missed it: it’s been non-stop technical progress updates from Chinese humanoid companies the past 48 hours! 🚨 In just the last 2 days, batched updates from numerous exciting Chinese humanoid companies all dropped at once: 1) @UnitreeRobotics showcases technical

5

32

161

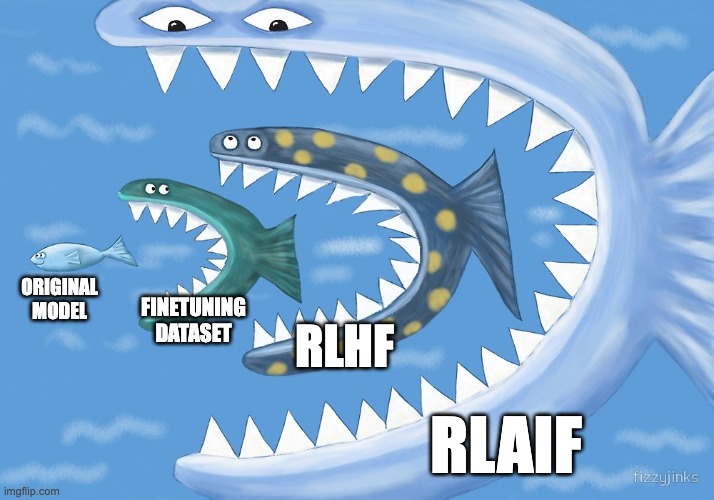

What if you used LLMs for… all parts of RL? GPT-4 as the Actor and Critic. GPT-4 as the Bellman Update. GPT-4 as the reward function. Vector DB as the replay buffer. GPT-4 as the importance sampling logic.

What if we set GPT-4 free in Minecraft? ⛏️ I’m excited to announce Voyager, the first lifelong learning agent that plays Minecraft purely in-context. Voyager continuously improves itself by writing, refining, committing, and retrieving *code* from a skill library. GPT-4 unlocks

9

17

147

Differentiable physics engines show *huge* wall time speed ups vs. standard MuJoCo 🤯

Speeding Up Reinforcement Learning with a New Physics Simulation Engine. Blog post by @bucketofkets on Brax, a new physics simulation engine that matches the performance of a large compute cluster with just a single TPU or GPU.

2

22

137

New @GoogleDeepMind blog post covering 3 recent works on AI + robotics! 1. Auto-RT scales data collection with LLMs and VLMs. 2. SARA-RT significantly speeds up inference for Robot Transformers. 3. RT-Trajectory introduces motion-centric goals for robot generalization.

How could robotics soon help us in our daily lives? 🤖 Today, we’re announcing a suite of research advances that enable robots to make decisions faster as well as better understand and navigate their environments. Here's a snapshot of the work. 🧵

6

22

134

Excited to share 3 papers recently accepted at @corl_conf 🤖: 1) SayCan: Robots ground language model plans with what’s achievable and contextually appropriate. 1.5 year effort with tons of collaborators! Paper: Paper sites:

8

8

127

The writing is clearly on the wall now: a path to embodied intelligence is more clear than ever! Congrats to OpenAI and Figure on the exciting partnership, and welcome to the intelligent robotics game 📈

Last month we demonstrated Figure 01 making coffee only using neural networks. This is a fully learned, end-to-end visuomotor policy mapping onboard images to low level actions at 200hz. Next up: excited to push the boundaries on AI learning with OpenAI.

4

11

122

I am convinced that the amount of robot data collected for learning will be larger in 2024-2025 than all previous years combined. Amazing progress in creative teleop (gloves, puppeteering), in cross-embodiment learning, and in large scale industry data engines (humanoids) 🚀

Can we collect robot data without any robots? Introducing Universal Manipulation Interface (UMI). An open-source $400 system from @Stanford designed to democratize robot data collection. 0 teleop -> autonomously wash dishes (precise), toss (dynamic), and fold clothes (bimanual)

2

21

116

We’re living through the golden age of AI Research:
- Highly leveraged impact thanks to successful methods scaling and transferring easily to other domains (i.e. generative modeling ideas landing in language, robotics, representation learning).
- Relatively low ramp-up cost due.

Seeing people tweet about doing PhD apps and I’ll just say I got into 0/8 of the programs I applied to and things turned out great. There are lots of opportunities for research, don’t stress :)

4

8

118

Extremely thought-provoking work that essentially says the quiet part out loud: general foundation models for robotic reasoning may already exist *today*. LLMs aren’t just about language-specific capabilities, but rather about vast and general world understanding.

Very excited to announce: Keypoint Action Tokens! We found that LLMs can be repurposed as "imitation learning engines" for robots, by representing both observations & actions as 3D keypoints, and feeding into an LLM for in-context learning. See: More 👇

1

17

117

> year is 2031.
> be me, token farmer in the slop mines.
> bring in 20k novel tokens for weighing at the Token Utility station.
> my token haul is two stddevs over the compressibility threshold, phew.
> get my daily blueprint nutrition allocation.
> trickle down tokenomics utopia.

📢 Excited to finally be releasing my NeurIPS 2024 submission! Is Chinchilla universal? No! We find that: 1. language model scaling laws depend on data complexity. 2. gzip effectively predicts scaling properties from training data. As compressibility 📉, data preference 📈. 🧵⬇️

8

8

116

Promising progress from 1X on learned world models which improve with more experience and physical interaction data. What I'm excited about:
- World models are likely the only path forward for reproducible and scalable evaluations in *multi-agent settings*; see success of world.

3

10

104

While LLMs have been amazing for high-level reasoning, low-level policy learning is still the bottleneck in robotics. Over 17 months (!!), our team has scaled a massively multitask Transformer-based model to over 700 tasks using 130k real-world episodes. Check out the 🧵 👇

Introducing RT-1, a robotic model that can execute over 700 instructions in the real world at 97% success rate! Generalizes to new tasks ✅ Robust to new environments and objects ✅ Fast inference for real time control ✅ Can absorb multi-robot data ✅ Powers SayCan ✅ 🧵👇

3

5

104

Reinforcement learning (!) at scale in the real world (!!) for useful robotics tasks (!!!) in multiple "in-the-wild" offices (!!!!). A culmination of years of effort -- so excited to finally be able to share this publicly! 🤖

Very excited to announce our largest deep RL deployment to date: robots sorting trash end-to-end in real offices! (aka RLS). This project took a long time (started before SayCan/RT-1/other newer works) but the learnings from it have been really valuable. 🧵

3

15

94

Happy to share that RT-Trajectory has been accepted as a Spotlight (Top 5%) at #ICLR2024! This was my first last-author project, it was a ton of fun collaborating with a strong team led by @Jiayuan_Gu 🥳 Blogpost: Website: 🧵⬇️

Instead of just telling robots “what to do”, can we also guide robots by telling them “how to do” tasks? Unveiling RT-Trajectory, our new work which introduces trajectory conditioned robot policies. These coarse trajectory sketches help robots generalize to novel tasks! 🧵⬇️

5

14

96

AutoRT is now out on Arxiv! Check out how we set up fleet-scale data collection by leveraging Foundation Models for robot orchestration 📈 Website: Paper: Original Thread:

Google presents AutoRT. Embodied Foundation Models for Large Scale Orchestration of Robotic Agents. paper page: demonstrate AutoRT proposing instructions to over 20 robots across multiple buildings and collecting 77k real robot episodes via both

2

19

85

Extremely saddened to hear about Felix’s passing. His thoughtful research contributions played a major part in my own development as a scientist. Most recently, his poignant essay on AI and stress humanized the pressure cooker that is today’s AI environment. It resonated deeply.

Do you work in AI? Do you find things uniquely stressful right now, like never before? Have you ever suffered from a mental illness? Read my personal experience of those challenges here:

3

6

89

Action-conditioned world models are one step closer! Neural simulations hold a lot of promise for scaling up real-world interaction data, especially for domains that physics-based simulators struggle with. 🚀

Google presents Diffusion Models Are Real-Time Game Engines. discuss: We present GameNGen, the first game engine powered entirely by a neural model that enables real-time interaction with a complex environment over long trajectories at high quality.

0

14

84

“yeah that’s definitely just a dude in a suit” - the highest compliment a humanoid company can get. Congrats to @ericjang11 @BerntBornich and the team at 1X for a new SOTA humanoid!

3

2

81

Introducing RT-2, representing the culmination of two trends:
- Tokenize and train everything together: web-scale text, images, and robot data.
- VLMs are not just representations, big VLMs *are policies*.

Sounds subtle, but we’ll look back on this as an inflection point! 📈

Today, we announced 𝗥𝗧-𝟮: a first of its kind vision-language-action model to control robots. 🤖 It learns from both web and robotics data and translates this knowledge into generalised instructions. Find out more:

1

17

82

Excited to share that 4 of our recent works will appear at #ICRA 2024 in Yokohama! (1) RT-X: Open X-Embodiment and (2) GenGap expand and study large generalist datasets. (3) PromptBook and (4) PG-VLM improve the robotic reasoning capabilities of frontier LLMs and VLMs. 🧵👇

5

13

82

Update: Our recent work "Manipulation of Open-World Objects" (MOO) has been accepted to #CoRL2023! Extremely simple object-centric representations (literally just a single pixel) can significantly boost robot generalization! Check out the original thread + new updates below ⬇️

Robot learning systems have scaled to many complex tasks, but generalization to novel objects is still challenging. Can we leverage pre-trained VLMs to expand the open-world capabilities of robotic manipulation policies? Introducing MOO: Manipulation of Open-World Objects! 🐮🧵👇

2

20

78

The secret ingredient behind robot foundation models has been compositional generalization, which enables positive transfer across different axes of generalization. I'm excited to share our new project where we use compositional generalization to inform data collection! 🧵⬇️

How should we efficiently collect robot data for generalization? We propose data collection procedures guided by the abilities of policies to compose environmental factors in their data. Policies trained with data from our procedures can transfer to entirely new settings. (1/8)

1

10

75

@DrJimFan Agree 100%. Feels extremely lucky to be a witness and participant in this era of history.

Our grandchildren will look back at our timeline as a step function - a binary before and after of amazing technologies. But I’m so grateful to be part of this most magical time period where we are living through and defining history. Cheers to 2022 and an even better 2023! 🎊

3

4

75

Congrats to Ding Liren for becoming the World Chess Champion! 👑♟️ What an exhilarating series and an amazing Game 4 of the Rapid Tiebreakers, where Ding’s mental game shined through. Well deserved victory!

Ding Liren wins the 2023 FIDE World Championship 🏆. Congratulations to Ding on becoming the new FIDE World Champion, and cementing his place in chess history after a thrilling match! 👏

1

8

70

Wow, 4 years later, the MineRL Diamond challenge has been solved *without demonstrations* 🤯! In 2019, the best solutions used many priors and demos but still couldn’t solve the task. In 2022, VPT was the 1st method to collect diamonds, but with demos + IDM. Congrats DreamerV3!

Introducing DreamerV3: the first general algorithm to collect diamonds in Minecraft from scratch - solving an important challenge in AI. 💎 It learns to master many domains without tuning, making reinforcement learning broadly applicable. Find out more:

1

17

69

Amazing unveil from @DrJimFan and @yukez: a cross-embodiment *humanoid* foundation model project in just three months (!!). Exciting to see long-term seemingly unrelated bets by NVIDIA pay off: extensive sim2real, multimodal robot policies, and top-tier accelerators! 👏

Today is the beginning of our moonshot to solve embodied AGI in the physical world. I’m so excited to announce Project GR00T, our new initiative to create a general-purpose foundation model for humanoid robot learning. The GR00T model will enable a robot to understand multimodal

4

10

67

A major debate in robot learning is whether language is only a good modality for abstract high-level semantics but not low-level motion. Our recent work RT-Hierarchy shows that granular *language motions* can go surprisingly far! Thread below ⬇️

Is language capable of representing low-level *motions* of a robot? RT-Hierarchy learns an action hierarchy using motions described in language, like “move arm forward” or “close gripper” to improve policy learning. 📜: 🏠: (1/10)

1

10

64

Nice work from Berkeley showing how motion-centric information is useful for robot policies to exhibit cross-embodiment transfer! Brings together some ideas from coarse egocentric trajectories and dense point tracking flow 🌊

What state representation should robots have? 🤖 I’m thrilled to present an Any-point Trajectory Model (ATM), which models physical motions from videos without additional assumptions and shows significant positive transfer from cross-embodiment human and robot videos! 🧵👇

2

9

66

To scale robot data collection effectively 🚀, it's clear that we need to go beyond the 1 human : 1 robot ratio. Towards this, we introduce AutoRT: leveraging foundation model reasoning and planning for robot orchestration at scale! Check out the thread from Keerthana below 🧵

In the last two years, large foundation models have proven capable of perceiving and reasoning about the world around us, unlocking a key possibility for scaling robotics. We introduce AutoRT, a framework for orchestrating robotic agents in the wild using foundation models!

0

6

64

Scalable evaluation is a major bottleneck for generalist real-world robot policies. Projects like RT-1 and RT-2 required *thousands* of evaluation trials in the real world 😱. In our new work SIMPLER, we evaluate robot policies in simulation to predict real world performance! 👇

[1/14] Real robot rollouts are the gold standard for evaluating generalist manipulation policies, but is there a less painful way to get good signal for iterating on your design decisions? Let’s take a deep dive on SIMPLER 🧵👇 (or see quoted video)!

2

10

61

Molmo is a very exciting multimodal foundation model release, especially for robotics. The emphasis on pointing data makes it the first open VLM optimized for visual grounding — and you can see this clearly with impressive performance on RealworldQA or OOD robotics perception!

Try out Molmo on your application! This is a great example by @DJiafei! We have a few videos describing Molmo's different capabilities on our blog! This one is me trying it out on a bunch of tasks and images from RT-X:

0

9

60

In contrast, I’m grateful to be part of a growing all-star team at GDM Robotics pushing forwards on the frontier of Embodied AI 🙌. I’m very optimistic about real-world tokens being indispensable for AGI!

What do Google, Microsoft and OpenAI have in common? They all had robotics projects and gave up on them.

2

3

59

Can "teachability" be a core LLM capability that can be learned via finetuning? Introducing Language Model Predictive Control (LMPC): distilling entire in-context teaching sessions via in-weight finetuning improves the teachability of LLMs! Website: 🧵👇

We can teach LLMs to write better robot code through natural language feedback. But can LLMs remember what they were taught and improve their teachability over time? Introducing our latest work, Learning to Learn Faster from Human Feedback with Language Model Predictive Control

1

12

57

Emergent RL capabilities have never looked so cute before 🥹 Check out an amazing effort from colleagues on scaling RL + self-play to real world football!

Soccer players have to master a range of dynamic skills, from turning and kicking to chasing a ball. How could robots do the same? ⚽ We trained our AI agents to demonstrate a range of agile behaviors using reinforcement learning. Here’s how. 🧵

0

11

58

Q: What happens when you combine LLMs, general robot manipulation policies, and a live remote demo from halfway across the world? A: The Google DeepMind demo at #RSS2023 on Tuesday. Join us at the 2:30PM Demo Session on 7/11! w/ @andyzeng_ @hausman_k @brian_ichter and 🤖

3

7

58

VLMs can express their temporal and semantic knowledge of robotics via *predicting value functions of robot videos*! Check out our recent work on leveraging VLMs like Gemini for Generative Value Learning 🧵👇

Excited to finally share Generative Value Learning (GVL), my @GoogleDeepMind project on extracting universal value functions from long-context VLMs via in-context learning! We discovered a simple method to generate zero-shot and few-shot values for 300+ robot tasks and 50+

0

7

57

The major thing going for IL is that it turns open-ended research risks (RL tuning, GAIL instabilities, reward shaping) into a data engineering problem, which is much more tractable and easier to make consistent progress on. Intellectually disappointing but effective 🫠

Huge fraction of all written text as its training data and GPT-4 still makes basic reasoning mistakes; kinda makes you suspicious about imitation learning as a robust approach in other settings e.g. driving, robotics.

4

3

54

Had a great time today with @YevgenChebotar and @QuanVng visiting @USCViterbi to give a talk on “Robot Learning in the Era of Foundation Models”. Slides out soon, packed with works from *just the past 5 months* 🤯. Thanks to @daniel_t_seita for hosting!

1

3

57

@DrJimFan Nice detective work! Agree with architectural guesses (1), (3), (5). I think (2) and (4) are a bit less obvious; very high DoF + long horizon + high frequency control means that standard decisions in manipulation may not be enough. But video-level tokenization is hard.

1

2

56

Excited to showcase how generative models can be used for semantically relevant image augmentation for robotics. ROSIE uses a diffusion model to produce semantically relevant visual augmentations on existing datasets -- unlocking new skills and more robust policies 🖌️🎨

Text-to-image generative models, meet robotics! We present ROSIE: Scaling RObot Learning with Semantically Imagined Experience, where we augment real robotics data with semantically imagined scenarios for downstream manipulation learning. Website: 🧵👇

1

7

51

How can we connect advances in video generation foundation models to low-level robot actions? We propose a simple but powerful idea: condition robot policies directly on generated human videos! Video Generation 🤝 Robot Actions. Check out Homanga’s thread:

Gen2Act: Casting language-conditioned manipulation as *human video generation* followed by *closed-loop policy execution conditioned on the generated video* enables solving diverse real-world tasks unseen in the robot dataset!. 1/n

1

9

54

Large scale cross-embodied language-conditioned agents in a variety of video game domains! What’s particularly exciting is seeing positive transfer: generalist agents outperform specialist agents.

Introducing SIMA: the first generalist AI agent to follow natural-language instructions in a broad range of 3D virtual environments and video games. 🕹️ It can complete tasks similar to a human, and outperforms an agent trained in just one setting. 🧵

0

4

50

Announcing our recent work RT-Sketch! ❌ Goal images contain a lot of useful information, but perhaps too much. ❌ Language instructions may not provide enough information. ✅ Goal *sketches* focus on the important details, and are easy to specify! Check out the thread:

We can tell our robots what we want them to do, but language can be underspecified. Goal images are worth 1,000 words, but can be overspecified. Hand-drawn sketches are a happy medium for communicating goals to robots! 🤖✏️ Introducing RT-Sketch: 🧵1/11

2

7

51