Yuke Zhu

@yukez

Followers: 17K
Following: 1K
Media: 60
Statuses: 324

Assistant Professor @UTCompSci | Co-Leading GEAR @NVIDIAAI | CS PhD @Stanford | Building generalist robot autonomy in the wild | Opinions are my own

Austin, TX

Joined August 2008

Got a taste of @Tesla's FSD v12.3.4 last night. By no means flawless, but the human-like driving maneuvers (with no interventions) delivered a magical experience. Excited to see the recipe of scaling laws and the data flywheel for full autonomy showing signs of life in real products.

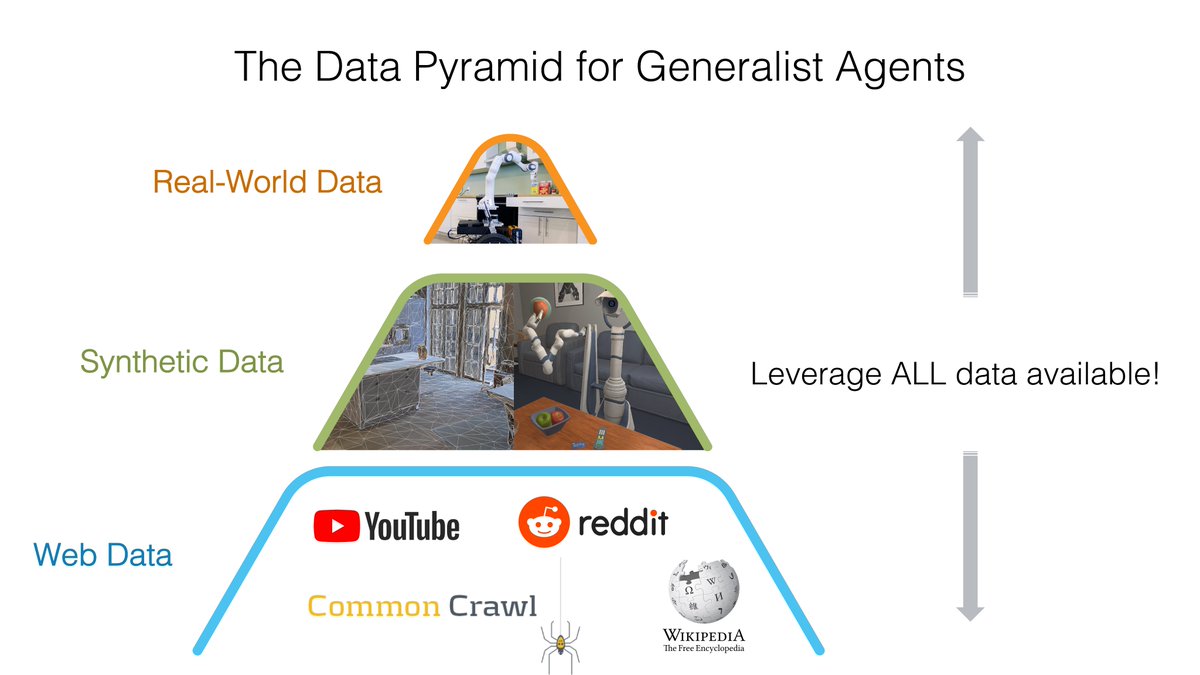

Proud to see our latest progress on Project GR00T featured in Jensen's #SIGGRAPH2024 keynote talk today! We integrated our RoboCasa and MimicGen works into NVIDIA Omniverse and Isaac, enabling model training across the Data Pyramid from real-robot data to large-scale simulations.

The million-dollar question in humanoid robotics is: can humanoids tap into Internet-scale training data, such as online videos, thanks to their human-like physique? Our #CoRL2024 oral paper showed the promise of humanoids learning new skills from single video demonstrations. (1/n)

My Robot Learning class @UTCompSci is updated with the latest advances and trends, such as implicit representations, attention architectures, offline RL, human-in-the-loop, and synthetic data for AI. All materials will be public. Enjoy! #RobotLearning

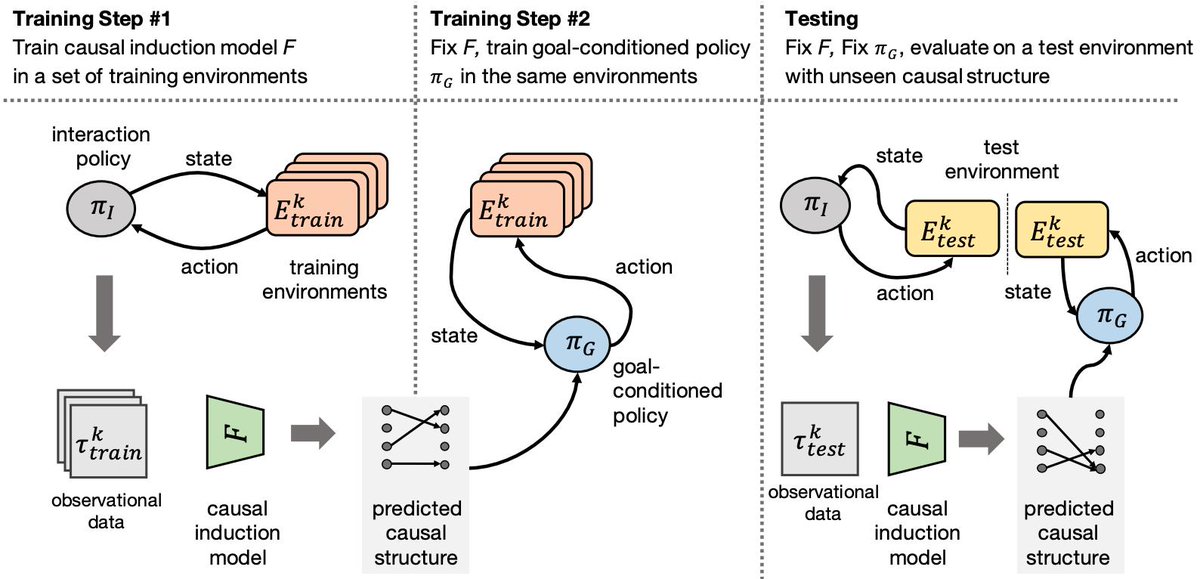

New work: we built a meta-learning algorithm for an agent to discover cause-and-effect relations from its visual observations and to use such causal knowledge to perform goal-directed tasks. Paper: Joint work w/ @SurajNair_1 @drfeifei @silviocinguetta

📢Update announced in today’s #GTC2024 Keynote📢. We are working on Project GR00T, a general-purpose foundation model for humanoid robots. GR00T will enable the robots to follow natural language instructions and learn new skills from human videos and demonstrations. Generalist

Thrilled to co-lead this new team with my long-time collaborator @DrJimFan. We are on a mission to build transformative breakthroughs in the landscape of Robotics and Embodied Agents. Come join us and shape the future together!

Career update: I am co-founding a new research group called "GEAR" at NVIDIA, with my long-time friend and collaborator Prof. @yukez. GEAR stands for Generalist Embodied Agent Research. We believe in a future where every machine that moves will be autonomous, and robots and

Life update: I will be joining @UTAustin as an Assistant Professor in @UTCompSci starting Fall 2020. I am thrilled to continue my research on robot learning and perception as a faculty member and look forward to collaborating with the exceptional faculty, researchers, and students at UT.

Excited to share our latest progress on legged manipulation with humanoids. We created a VR interface to remotely control the Draco-3 robot 🤖, which cooks ramen for hungry graduate students at night. We can't wait for the day it will help us at home in the real world! #humanoid

Releasing my Stanford Ph.D. dissertation and talk slides "Closing the Perception-Action Loop: Towards Building General-Purpose Robot Autonomy", a summary of my work on robot perception and control @StanfordSVL. Slides: Dissertation:

Congratulations to @snasiriany and @huihan_liu on winning the #ICRA2022 Outstanding Learning Paper award for their first paper @UTCompSci “Augmenting Reinforcement Learning with Behavior Primitives for Diverse Manipulation Tasks”!

Taught my first (online) class @UTCompSci. Super pumped to teach a grad-level Robot Learning seminar this fall. Great to see UT students from all kinds of backgrounds passionate about learning what’s going on at the forefront of AI + Robotics🤘Syllabus:

Very impressed by the new @Tesla_Optimus end2end skill learning video! Our TRILL work spills some secret sauce: 1. VR teleoperation, 2. deep imitation learning, 3. real-time whole-body control. It's all open-source! Dive in if you're into humanoids! 👾

Just learned that our MineDojo paper won the Outstanding Paper award at #NeurIPS2022. See you in New Orleans next week!

Introducing MineDojo for building open-ended generalist agents!
✅ Massive benchmark: 1000s of tasks in Minecraft.
✅ Open access to internet-scale knowledge base of 730K YouTube videos, 7K Wiki pages, 340K Reddit posts.
✅ First step towards a general agent. 🧵

Some of my proudest memories of my PhD are working with people from different countries and being advised by a stellar all-women thesis committee. I encourage students from diverse backgrounds to apply for my future lab @UTCompSci where diversity and inclusion will be valued.

Dear academics, check out our 6 pack!! 💪 Ok. I meant 6-PACK, our new 6DoF Pose Anchor-based Category-level Keypoint tracker, real-time tracking of novel objects without known 3D models!

We present 6-PACK, an RGB-D category-level 6D pose tracker that generalizes between instances of classes based on a set of anchors and keypoints. No 3D models required! Code+Paper: w/ Chen Wang @danfei_xu Jun Lv @cewu_lu @silviocinguetta @drfeifei @yukez

As much as I'd like to tweet positivity and focus on #AcademicChatter, I know how difficult this moment is for the Asian community, when my wife and I feel anxious about going out shopping and running errands after hearing recent news about hate crimes. Hatred is NOT a solution to a virus.

Thanks Fei-Fei @drfeifei for being such an amazing advisor, mentor, role model, and friend! Finishing a PhD is the end of the beginning. And greater things are yet to come!

Very proud of my PhD student @yukez for passing his PhD thesis defense with flying colors! His work is on perception, learning and robotics. Thank you thesis committee members @leto__jean @EmmaBrunskill @silviocinguetta & Dan Yamins!

First time attending @HumanoidsConf (on the @UTAustin campus!). Pumped to see the lightning-fast progress in this space. I expect this community to proliferate in the next few years: generalist robot intelligence can't be achieved without general-purpose hardware!

100% agreed! I also feel extremely lucky to have some of the kindest and smartest advisors @Stanford and colleagues @UTCompSci. "We're all smart. Distinguish yourself by being kind." This quote is one of the first principles I will teach to my students as a scholar.

All the technically strongest people I know are *kind* people. My advisors/profs at @WisconsinCS, my colleagues at @UTCompSci, they are all competent, caring, empathetic human beings. Sure, there are some jerks, but they are the minority -- there is no need to hire them.

Check out a new blog post of our work on long-horizon planning for robot manipulation. We also released RoboVat, our learning framework that unifies #BulletPhysics simulation and Sawyer robot control interfaces. Sim2real has never been easier.

How can a robot solve complex sequential problems? In our newest blog post, @KuanFang introduces CAVIN, an algorithm that hierarchically generates plans in learned latent spaces.

robosuite has been a true labor of love for the past seven years. Building this open-source simulation framework has required massive collaboration across institutions. Open-source software often goes underappreciated in academic culture. robosuite was.

📢New Release📢 We are excited to announce **robosuite v1.5**, supporting more robots, teleoperation interfaces, and controllers ➕ real-time ray tracing rendering! We continue our commitment to building open-source research software. Try it out at

Another key result from my lab in leveraging human-centered data sources for humanoid robots — this time, human motion captures. By training on large-scale mocap databases and remapping human motions to humanoids, Harmon enables the robots to generate motions from text commands.

Excited to share our #CoRL2024 paper on humanoid motion generation! Combining human motion priors with VLM feedback, Harmon generates natural, expressive, and text-aligned humanoid motion from freeform text descriptions. 👇(1/4)

Our robot can now make morning coffee for you… The secret recipe:
1⃣ Object-centric representation
2⃣ Transformer-based policy architecture
3⃣ Data-efficient imitation learning algorithm
4⃣ Robust impedance controller
Enjoy ☕️! #CoRL2022 #VIOLA

How can robot manipulators perform in-home tasks such as making coffee for us? We introduce VIOLA, an imitation learning model for end-to-end visuomotor policies that leverages object-centric priors to learn from only 50 demonstrations!

Visiting @Princeton today to speak at the Symposium on Safe Deployment of Foundation Models in Robotics. Fall is a beautiful season to see the Princeton campus! Event website:

Check out our new survey paper on Foundation Models in Robotics!

Foundation Models in Robotics: Applications, Challenges, and the Future. paper page: We survey applications of pretrained foundation models in robotics. Traditional deep learning models in robotics are trained on small datasets tailored for specific

2 years ago I was shopping for a coffee machine at Target. I found a perfect Keurig, not for me but for my robot:
- Round tray to insert a K-cup;
- Lid open/close w/ weak forces;
- Coffee out w/ one button click.
There's no magic. Human ingenuity is behind every robot's success.

If you want to learn more about how the task has motivated a line of research in manipulation, see the list:
- VIOLA:
- HYDRA:
- AWE:
- HITL-TAMP:
- MimicGen:

Our #ICRA2019 paper received the Best Conference Paper Award w/ @michellearning @leto__jean @animesh_garg @drfeifei @silviocinguetta

MimicGen source code is now publicly available! Our system generates automated robot trajectories from a handful of human demonstrations, enabling large-scale robot learning:

Want to generate large-scale robot demonstrations automatically? We have released the full MimicGen code. Excited to see what the community will do with this powerful data generation tool! Code: Docs:

Rewriting a classical robot controller as a physics-informed neural network, plugging it as a learnable module into a data-driven autonomy stack, and training it with large-scale GPU-accelerated simulation ➡️ Adaptivity & Robustness to the next level💡

How can we enable robot controllers to better adapt to changing dynamics? Idea: learn a data-driven controller implemented with physics-informed neural networks, and finetune on task-specific dynamics. Website: Paper:

Delighted to present our recent work on hierarchical Scene Graphs for neuro-symbolic manipulation planning. We use 3D Scene Graphs as an object-centric abstraction to reason about long-horizon tasks. w/ @yifengzhu_ut, Jonathan Tremblay, Stan Birchfield

Excited to share my recent talk at the Stanford Robotics Seminar on “Objects, Skills, and the Quest for Compositional Robot Autonomy” featuring projects from my first year @UTCompSci and our lab’s vision of building the next generation of autonomy stack.

We have just released our new work on 6D pose estimation from RGB-D data -- real-time inference with end-to-end deep models for real-world robot grasping and manipulation! Paper: Code: w/ @danfei_xu @drfeifei @silviocinguetta

Excited to share VIMA, our latest work on building generalist robot manipulation agents with multimodal prompts. Massive transformer model + unified task specification interface for the win!

We trained a transformer called VIMA that ingests *multimodal* prompts and outputs controls for a robot arm. A single agent is able to solve visual goal, one-shot imitation from video, novel concept grounding, visual constraint, etc. Strong scaling with model capacity and data!🧵

🎉Public release🎉 Thrilled to kickstart our new embodied AI moonshot: building general-purpose open-ended agents with Internet-scale knowledge!

Introducing MineDojo for building open-ended generalist agents!
✅ Massive benchmark: 1000s of tasks in Minecraft.
✅ Open access to internet-scale knowledge base of 730K YouTube videos, 7K Wiki pages, 340K Reddit posts.
✅ First step towards a general agent. 🧵

I feel fortunate to have attended all four past CoRL conferences and to have served as an AC for the first time. @corl_conf is hands down my favorite conference: a focused Robot Learning community, high-quality (<200) papers, YouTube live stream, inclusion events. I couldn't ask for more!

We've released an updated version of ACID, our #RSS2022 paper on volumetric deformable manipulation, with real-robot experiments. ACID predicts dynamics, 3D geometry, and point-wise correspondence from partial observations. It learns to maneuver a cute teddy bear into any pose.

All talk recordings of our #CVPR2023 3D Vision and Robotics Workshop are now available on the YouTube playlist: Check them out in case you missed the event!

Heading to @CVPR today! We are organizing a 3D Vision and Robotics workshop tomorrow with a great line-up of speakers: Also, I am recruiting a postdoc on vision + robotics for my group. Come chat with me if interested; DMs are open!

Spot-on! Top AI researchers and institutes have the magic power of pushing a research field years back, simply by publishing initial papers and inadvertently creating a vicious cycle of worthless publications. With great power comes great responsibility.

A lot of machine learning research has detached itself from solving real problems, and created their own "benchmark-islands". How does this happen? And why are researchers not escaping this pattern? A thread 🧵

Sharing the slides of my talk "Learning Keypoint Representations for Robot Manipulation" presented at the Workshop on Learning Representations for Planning and Control @IROS2019MACAU. Slides: Workshop:

I will attend ICML in Hawaii next week to present VIMA and meet friends. Our NVIDIA team is seeking new talent for AI Agents, LLMs, and Robotics. Reach out via DMs if interested!

I'm going to ICML in Hawaii! My team pushes the research frontier in AI agents, multimodal LLMs, game AI, and robotics. If you're interested in joining NVIDIA or collaborating with me, please reach out by email! My contact info is at If applicable,

Check out our new work, BUMBLE: vision-language models (VLMs) act as the "operating system" for robots, calling perceptual and motor skills through APIs. The stronger the core VLM's capabilities, the better the robot gets at mobile manipulation.

🤖 Want your robot to grab you a drink from the kitchen downstairs? 🚀 Introducing BUMBLE: a framework to solve building-wide mobile manipulation tasks by harnessing the power of Vision-Language Models (VLMs). 👇 (1/5) 🌐

Pleased to be invited by @SamsungUS to talk about my research on robot perception and learning. Covered our latest work on self-supervised sensorimotor learning, hierarchical planning, and cognitive learning and reasoning in the open world. Video:

We’re advancing automated runtime monitoring and fleet learning with visual world models, a pivotal step toward building a data flywheel for robot learning. Kudos to @huihan_liu for spearheading the Sirius projects in my lab. Very proud of her achievements!

With the recent progress in large-scale multi-task robot training, how can we advance the real-world deployment of multi-task robot fleets? Introducing Sirius-Fleet✨, a multi-task interactive robot fleet learning framework with 𝗩𝗶𝘀𝘂𝗮𝗹 𝗪𝗼𝗿𝗹𝗱 𝗠𝗼𝗱𝗲𝗹𝘀! 🌍 #CoRL2024

Our department @UTCompSci @UTAustin is recruiting new Robotics faculty this year. Come join us in the booming city of Austin!

Excited to introduce 𝚛𝚘𝚋𝚘𝚖𝚒𝚖𝚒𝚌, a new framework for Robot Learning from Demonstration. This open-source library is a sister project of 𝚛𝚘𝚋𝚘𝚜𝚞𝚒𝚝𝚎 in our ARISE Initiative. Try it out!

Robot learning from human demos is powerful yet difficult due to a lack of standardized, high-quality datasets. We present the robomimic framework: a suite of tasks, large human datasets, and policy learning algorithms. Website: 1/

A nice summary of our recent works on imitation learning from visual demonstration. Compositionality and abstraction are key to scaling up IL algorithms to long-horizon manipulation tasks.

What if we could teach robots to do a new task just by showing them one demonstration? In our newest blog post, @deanh_tw and @danfei_xu show us three approaches that leverage compositionality to solve long-horizon one-shot imitation learning problems.

Pleased to see our Sirius paper nominated for the Best Paper Award at #RSS2023. Join our presentation in Daegu, Korea on July 11th! Exciting times ahead as our lab explores the new frontier of 𝗥𝗟𝗢𝗽𝘀 (Robot Learning + Operations) in long-term deployment.

Like the best chess players are human-AI teams (centaurs), trustworthy deployment of robot learning models needs such a partnership! Sirius is our first milestone toward Continuous Integration and Continuous Deployment (CI/CD) for robot autonomy during long-term deployments👇

Looking forward to sharing our latest progress on GPU-accelerated robotics simulation in the Isaac Gym tutorial @RoboticsSciSys 2021 next Monday.

Join us on July 12th at #RSS. This workshop will introduce the end-to-end GPU accelerated training pipeline in #NVIDIA Isaac Gym, demonstrate #robotics applications, and answer questions in breakout sessions. Register here: #AI #robots #nvidiaisaac

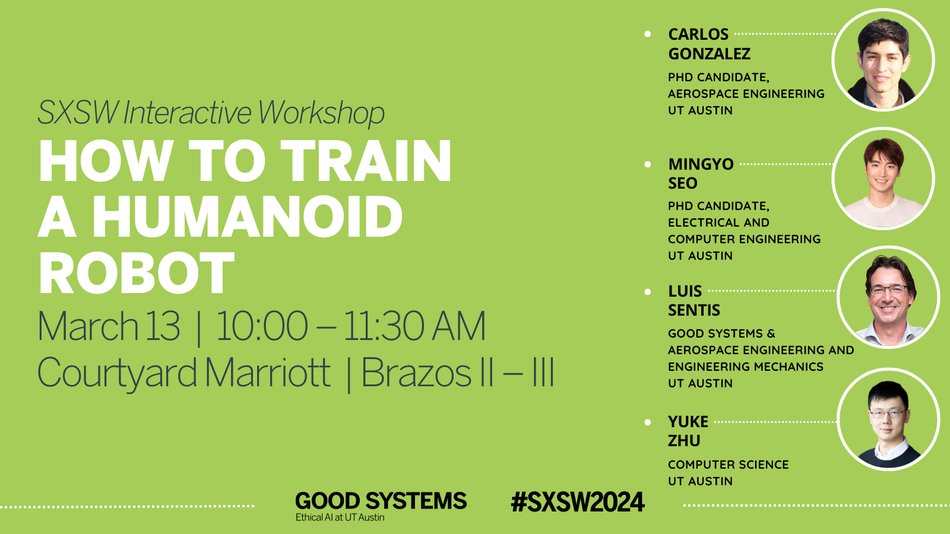

I will give a talk at #SXSW2024 on How to Train a Humanoid Robot tomorrow from 10 to 11:30 a.m. Come check out our ramen-cooking DRACO 3 robot developed @texas_robotics and learn the technical stories behind it!

Our Eureka follow-up work is out!

Introducing DrEureka🎓, our latest effort pushing the frontier of robot learning using LLMs! DrEureka uses LLMs to automatically design reward functions and tune physics parameters to enable sim-to-real robot learning. DrEureka can propose effective sim-to-real configurations

Check out our recent work on building agents that self-generate training tasks to facilitate the learning of harder tasks.

We introduce APT-Gen to procedurally generate tasks of rich variations as curricula for reinforcement learning in hard-exploration problems. Webpage: Paper: w/ @yukez @silviocinguetta @drfeifei

Revisiting @DavidEpstein's book Range: Why Generalists Triumph in a Specialized World as a roboticist reveals a compelling insight: elite athletes usually start broad and embrace diverse experiences as generalists prior to delayed specialization. Given this prevailing pathway.

This is a fantastic initiative and exciting collaboration between industry and academia toward unleashing the future of Robot Learning as a Big Science! We must join forces in the quest for the north-star goal of generalist robot autonomy.

Introducing 𝗥𝗧-𝗫: a generalist AI model to help advance how robots can learn new skills. 🤖. To train it, we partnered with 33 academic labs across the world to build a new dataset with experiences gained from 22 different robot types. Find out more:

Excited about coming back to Vancouver, the beautiful city where I attended college, for #NeurIPS2019. Looking forward to catching up with the latest research and hanging out with friends!

Like the best chess players are human-AI teams (centaurs), trustworthy deployment of robot learning models needs such a partnership! Sirius is our first milestone toward Continuous Integration and Continuous Deployment (CI/CD) for robot autonomy during long-term deployments👇

Deep learning for robotics is hard to perfect. How do we harness existing models for trustworthy deployment and make them continue to learn and adapt? Presenting Sirius🌟, a human-in-the-loop framework for continuous policy learning & deployment! 🌐:

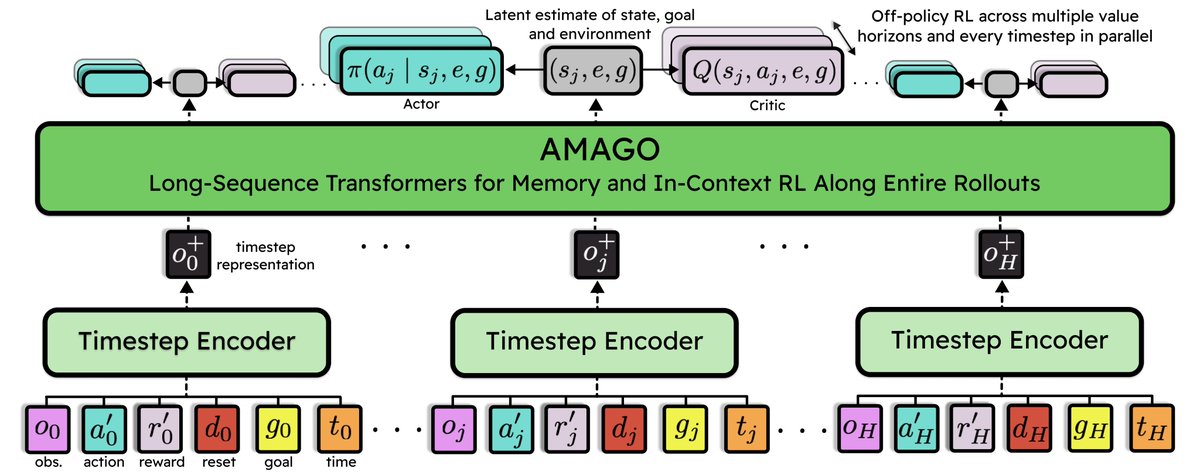

For the past two years, @__jakegrigsby__ and I have been exploring how to make Transformer-based RL scale the same way as its supervised learning counterparts. Our AMAGO line of work shows promise for building RL generalists in multi-task settings. Meet him and chat at #NeurIPS2024!

There’s an RL trick where we turn Q-learning into classification. Among other things, it’s a quick fix for multi-task RL’s most unnecessary problem: that the scale of each task’s training loss evolves unevenly over time. It’d be strange to let that happen in supervised learning,

MimicPlay is selected for an oral presentation next Thursday at #CoRL2023. Come check out this exciting work led by @chenwang_j.

What's the best way for humans to teach robots? I'm excited to announce MimicPlay, an imitation learning algorithm that extracts the most signal from unlabeled human motions. MimicPlay combines the best of 2 data sources: 1) Human "play data": a person uses their hands to

DexMimicGen is one of our core tools for building the data pyramid to train humanoid robots. Through automated data generation in simulation, it produces orders of magnitude more synthetic training data from a handful of real-robot trajectories.

How can we scale up humanoid data acquisition with minimal human effort? Introducing DexMimicGen, a large-scale automated data generation system that synthesizes trajectories from a few human demonstrations for humanoid robots with dexterous hands. (1/n)

Yet another manifestation of the power of hybrid Imitation + Reinforcement learning! Imitating high-level cognitive reasoning 🧠 from humans + reinforcing agile motor actions 🦿 in parallel simulation = quadrupedal locomotion in dynamic, human-centered environments #PRELUDE

Introducing PRELUDE, a hierarchical learning framework that allows a quadruped to traverse dynamically moving crowds. The robot learns gaits from trial and error in simulation and decision-making from human demonstration. Paper, Code, Videos:
