Danfei Xu

@danfei_xu

Followers

7K

Following

4K

Media

65

Statuses

806

Faculty at Georgia Tech @ICatGT, researcher at @NVIDIAAI | Ph.D. @StanfordAILab | Making robots smarter

Atlanta, GA

Joined August 2013

I’ll be joining GaTech @gtcomputing @ICatGT as an Assistant Professor in Fall 2022! Looking forward to continuing my work in Robot Learning as a faculty and collaborating with researchers & students at GTCS @GTrobotics @mlatgt. Reach out for collaborations / joining the lab!

47

12

512

The past decade has seen a troubling decline in Western perceptions of people of Chinese origin, largely fueled by political narratives and biases. For Chinese scholars and students in the US, this has created a constant sense of alienation. Academia has long served as a refuge.

Mitigating racial bias from LLMs is a lot easier than removing it from humans! Can’t believe this happened at the best AI conference @NeurIPSConf. We have ethical reviews for authors, but missed it for invited speakers? 😡

13

37

347

I defended!

Very proud of my student @danfei_xu (co-advised with @silviocinguetta ) for his wonderful PhD thesis defense today! Danfei’s work in computer vision and robotic learning pushes the field forward towards enabling robots to do long horizon tasks of the real world. 1/2

19

4

290

A bit more formally: I'm hiring Ph.D. students in Robot Learning this year! If you are excited about the future of data-driven approaches to robotics, apply through the School of Interactive Computing at @gtcomputing by Dec 15th.

I’ll be joining GaTech @gtcomputing @ICatGT as an Assistant Professor in Fall 2022! Looking forward to continuing my work in Robot Learning as a faculty and collaborating with researchers & students at GTCS @GTrobotics @mlatgt. Reach out for collaborations / joining the lab!

0

39

182

We started this moonshot project a year ago. Now we are excited to share our progress on robot learning from egocentric human data 🕶️🤲. Key idea: Egocentric human data is robot data in disguise. By bridging the kinematic, visual, and distributional gap, we can directly leverage.

Introducing EgoMimic - just wear a pair of Project Aria @meta_aria smart glasses 👓 to scale up your imitation learning datasets! Check out what our robot can do. A thread below👇

3

18

158

We figured out a way to solve long-horizon planning problems by composing a bunch of modular diffusion models in a factor graph! This allows us to reuse the diffusion models in unseen new tasks and achieve zero-shot generalization to multi-robot collaborative manipulation tasks.

How can robots compositionally generalize over multi-object multi-robot tasks for long-horizon planning? At #CoRL2024, we introduce Generative Factor Chaining (GFC), a diffusion-based approach that composes spatial-temporal factors into long-horizon skill plans. (1/7)

1

27

129

Just got to Munich! Looking forward to catching up with people at #CoRL2024. Also extremely honored, nervous, and excited about giving an Early Career Keynote on Thursday.

1

12

116

Excited to share my internship project @DeepMindAI with Misha Denil @notmisha! Positive-Unlabeled Reward Learning. arXiv:

3

17

104

@elonmusk No it's not a more complex game. It only requires optimizing immediate reward like health and money. Go requires longer horizon planning.

5

6

95

I often get this question: Is LLM all you need for robot planning? I'd go: "obviously not, because you need to consider physical constraints, dynamics, ...", which then turns into a non-stop rant. Now I'll just point them to this paper 😎

If you're interested in learning the SOTA of optimization-based task and motion planning, please give our recent survey paper a read; it ranges from classical to learning methods. @ZhaoZhigen @ShuoCheng94 @yding25 @ZiyiZhou2 @ShiqiZhang7 @danfei_xu

0

9

99

This is clearly going to benefit the privileged. Even the info that this conference/track existed probably will only circulate in a small group with direct tie to academia/tech (parents etc). How about we flip this into a track for creating accessible tutorials, lectures,.

This year, we invite high school students to submit research papers on the topic of machine learning for social impact! See our call for high school research project submissions below.

1

7

94

Excited to share Generalization Through Imitation (GTI)! GTI learns visuomotor control from human demos and generalizes to new long-horizon tasks by leveraging latent compositional structures. Joint w/ @AjayMandlekar @RobobertoMM @silviocinguetta @drfeifei

2

26

92

Can't believe that I just came across this insanely cool paper. 3D Gaussians seem to be such an intuitive representation to model large & dynamic scenes (Lagrangian vs. Eulerian). Expect it to drive a whole new wave of dense/obj-centric representation w/ self-supervision.

Dynamic 3D Gaussians: Tracking by Persistent Dynamic View Synthesis. We model the world as a set of 3D Gaussians that move & rotate over time. This extends Gaussian Splatting to dynamic scenes, with accurate novel-view synthesis and dense 3D trajectories.

3

10

86

160 H100s for GT Makerspace!

Putting the promise of AI directly in students’ hands: We’re powering up the Georgia Tech AI Makerspace - a student-focused AI supercomputer hub. Proud to work with @nvidia and @WeAre_Penguin to make this a reality on campus for our students.

4

5

83

Is teleoperation + BC our ultimate path to productizing Robot Learning? Well... Talk is cheap, show me your data & policy! We are thrilled to organize a teleoperation and imitation learning challenge at #ICRA2025, with a total prize pool of $200,000 (cash + robots)! General

0

16

75

Accepted to RSS 2020!

Excited to share Generalization Through Imitation (GTI)! GTI learns visuomotor control from human demos and generalizes to new long-horizon tasks by leveraging latent compositional structures. Joint w/ @AjayMandlekar @RobobertoMM @silviocinguetta @drfeifei

2

6

71

We present Regression Planning Network (RPN), a type of recursive network architecture that learns to perform high-level task planning from video demonstrations. #NeurIPS2019 (1/3)

1

21

67

🤖 Inspiring the Next Generation of Roboticists! 🎓 Our lab had an incredible opportunity to demo our robot learning systems to local K-12 students for the National Robotics Week program @GTrobotics. A big shout-out to @saxenavaibhav11 @simar_kareer @pranay_mathur17 for hosting

1

11

65

We also made a similar transition to ROS-free. The non-obvious thing is that modern NN models (BC policies, VLMs, LLMs) break the abstraction of ROS modules. Raw sensory stream instead of state estimation, actions instead of plans, etc. Need a new ROS for the next-gen modules.

Interesting (and sad) result here; I really had hoped more people would be able to just run with ROS2. But it seems like it's not quite there, if this is in any way worth doing for a small company/fast-moving startup that should be the target audience.

1

4

57

Presenting two papers at #NeurIPS2019! Come say hi if you are around. 1. Regression Planning Networks. We combine classic symbolic planning and recursive neural network to plan for long-horizon tasks end-to-end from image input. Paper & Code: 1/

1

9

59

One of the most impressive CV works I've seen recently. Also huge kudos to Meta AI for sticking to open sourcing despite the trend increasingly going towards the opposite direction.

Today we're releasing the Segment Anything Model (SAM) — a step toward the first foundation model for image segmentation. SAM is capable of one-click segmentation of any object from any photo or video + zero-shot transfer to other segmentation tasks ➡️

0

3

58

Can we teach a robot hundreds of tasks with only dozens of demos? This is only possible with a truly compositional system. Our new work, NOD-TAMP, learns generalizable skills with only a handful of demos and composes them to zero-shot solve long-horizon tasks, including loading.

Can we teach a robot hundreds of tasks with only dozens of demos? Introducing NOD-TAMP: A framework that chains together manipulation skills from as few as one demo per skill to compositionally generalize across long-horizon tasks with unseen objects and scenes. (1/N)

0

5

57

Our group headed by @MarcoPavoneSU at NVIDIA Research is hiring fulltime RS and interns! Tons of cool problems in planning, control, imitation, and RL. Job posting 👇. Intern: Full-time:

0

5

54

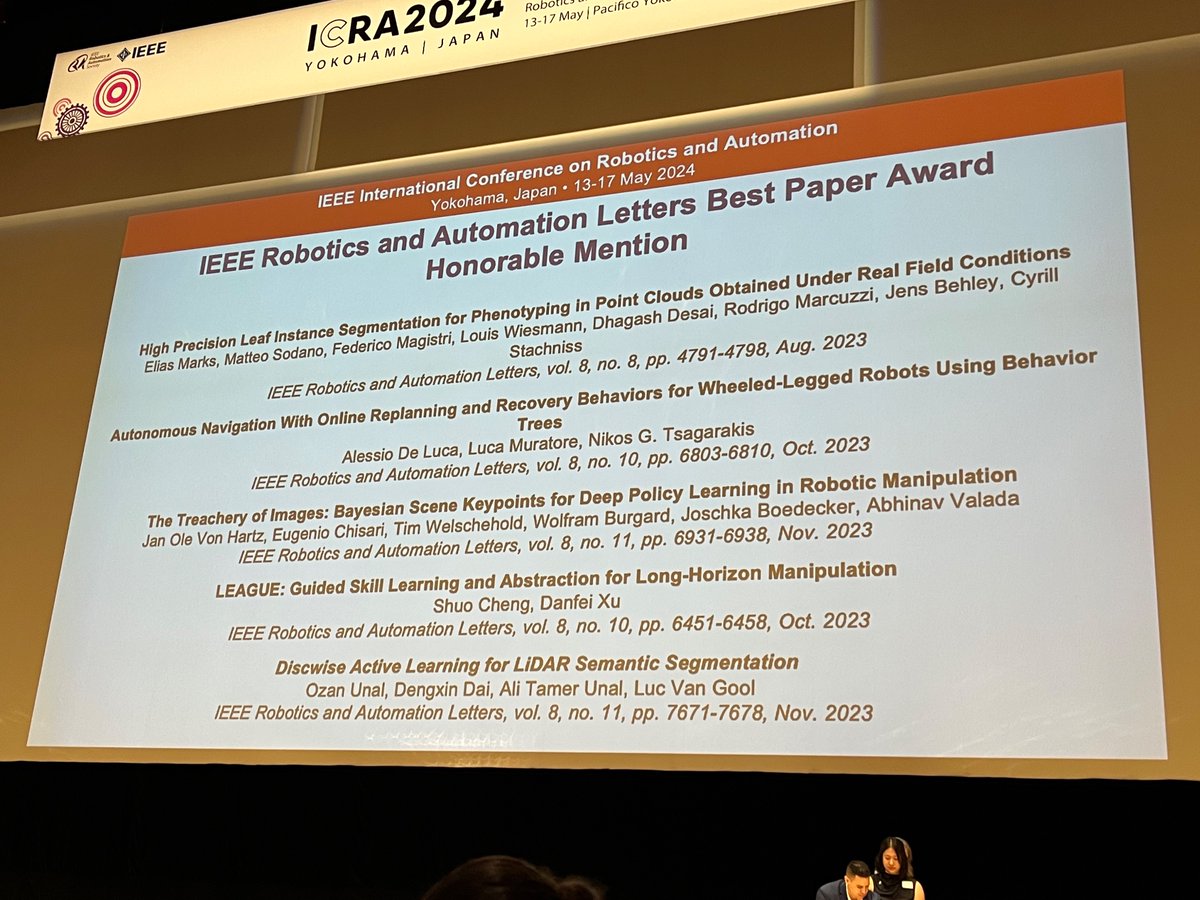

Congratulations to @ShuoCheng94 for leading LEAGUE, 1 of 5 papers out of 1200+ to receive an RA-L best paper award honorable mention at ICRA! As the sole student author on a two-person team in a field trending towards 10+ authors/paper, Shuo's vision and technical prowess shine.

3

1

50

Annnnd that's a wrap! First semester teaching at GT and it's been an absolute blast. Really happy to see the progression of the student projects and the final poster session joined by ~170 students. Couldn't have made it without my awesome TAs. Thanks @mlatgt for the sponsorship!

1

1

50

Detail: 10 Hz image -> 200 Hz EEF control. I'm guessing keep the same image token for 20 steps while updating proprio state? Also given how smooth the motion looks --- high-quality OSC implementation?

Finally, let's talk about the learned low-level bimanual manipulation. All behaviors are driven by neural network visuomotor transformer policies, mapping pixels directly to actions. These networks take in onboard images at 10hz, and generate 24-DOF actions (wrist poses and

2

2

48

A good day for @ICatGT @GTrobotics. Congratulations @sehoonha @naokiyokoyama0 @shahdhruv_ and @ShuoCheng94 !

3

7

48

First work coming out of my lab at GT! LEAGUE is a "virtuous cycle" system that combines the merits of Task and Motion Planning and RL. The result is continually-learning and generalizable agents that can carry their knowledge to new tasks and even environments.

Introducing LEAGUE - Learning and Abstraction with Guidance! LEAGUE is a new framework that uses symbolic skill operators to guide skill learning and state abstraction, allowing it to solve long-horizon tasks and generalize to new tasks and domains. Joint work with @danfei_xu 1/6

1

10

47

Super excited about this new #CoRL2023 work on compositional planning! We introduce a new generative planner (GSC) to compose skill-level diffusion models to solve long-horizon manipulation problems, without ever training on long-horizon tasks. @ICatGT @GTrobotics @mlatgt

How to enable robots to plan and compositionally generalize over long-horizon tasks? At #CoRL2023, we introduce Generative Skill Chaining (GSC), a diffusion-based, generalizable and scalable approach to compose skill-level transition models into a task-level plan generator. (1/7)

1

3

44

Among so many thoughtful & nuanced discussions on regulating AI, the EU chooses to "mitigate the risk of extinction from AI". This is some sort of joke, right?

Mitigating the risk of extinction from AI should be a global priority. And Europe should lead the way, building a new global AI framework built on three pillars: guardrails, governance and guiding innovation ↓

1

6

40

We are organizing the Deep Representation and Estimation of State tutorial at the virtual IROS2020! Fantastic speaker line-up: @leto__jean, Yunfei Bai, and @ChrisChoy208. Co-organized with @KuanFang and @deanh_tw. A short thread about each session👇

1

9

43

Agreed. Humanoid is a problem, not a solution.

Do you really need legs? We don't think so. As much as we love anthropomorphic humanoids (our co-founder built one in 9th grade), we believe virtually all menial tasks can be done with two robot arms, mounted on wheels. In our view, @1x_tech's Eve robot is the optimal form factor

2

3

41

Honored to be selected as a DARPA Riser and to give a talk about our robot learning work!

T minus 2 hours until we begin our next #DARPAForward event @GeorgiaTech. @DoDCTO Heidi Shyu will kick off a packed agenda featuring experts on pandemic preparedness, cybersecurity, and more. Visit our page for more on how you can join future events:

4

1

39

Our #CoRL2024 work on learning tactile control policies directly from human hand demonstrations. Check out Kelin's tweet for more details!

Introducing MimicTouch, our new paper accepted by #CoRL2024 (also the Best Paper Award at the #NIPS2024 TouchProcessing Workshop). MimicTouch learns tactile-only policies (no visual feedback) for contact-rich manipulation directly from human hand demonstrations. (1/6)

0

3

40

Robotics datasets are expanding at an unprecedented pace. How do we control the quality of the collected data? Our #CoRL2023 work presents an offline imitation learning method that learns to discern (L2D) expert data in a mixed-quality demonstration dataset. Code coming soon!

Introducing our #CoRL2023 work Learning to Discern (L2D)! As robotics datasets grow, quality control becomes ever more important. L2D is our solution for handling mixed-quality demo data for offline imitation learning. (1/6)

0

9

39

An open-source playground for training generative agents from real-world driving data! Work led by @Yuxiao_Chen_ @iamborisi @drmapavone and myself from our team at @NVIDIAAI, in close collaboration with @NVIDIADRIVE.

We are excited to announce the release of Traffic Behavior Simulation (TBSIM) developed by the Nvidia Autonomous Vehicle research group, which is our software infrastructure for closed-loop simulation with data-driven traffic agents. (1/7)

2

2

36

Used ACME for my summer internship project @DeepMind , can confirm it's an amazing framework.

Interested in playing around with RL? We’re happy to announce the release of Acme, a light-weight framework for building and running novel RL algorithms. We also include a range of pre-built, state-of-the-art agents to get you started. Enjoy!

0

2

38

Need a fully automated sim-to-real pipeline to train locomotion policies for an arbitrary robot (with URDF) in ~1hr.

@DisneyResearch introduces their new robot at #IROS2023! Trained in simulation with #reinforcementlearning! @ieeeiros

0

0

36

New work on Humanoid Sim2Real by @fukangliuu! Key takeaway: Trajectory Optimization (TO) can generate dynamics-aware reference trajectories (how much torque should I exert to pick up a heavy vs. a light box?). Policies trained to track these trajectories in simulation can be.

Opt2Skill combines TO and RL to enable real-world humanoid loco-manipulation tasks. Shoutout to all the collaborators! @gu_zy14 @Yilin_Cai98 @ZiyiZhou2 @HyunyoungJung5 @sehoonha @danfei_xu @GT_LIDAR

1

3

35

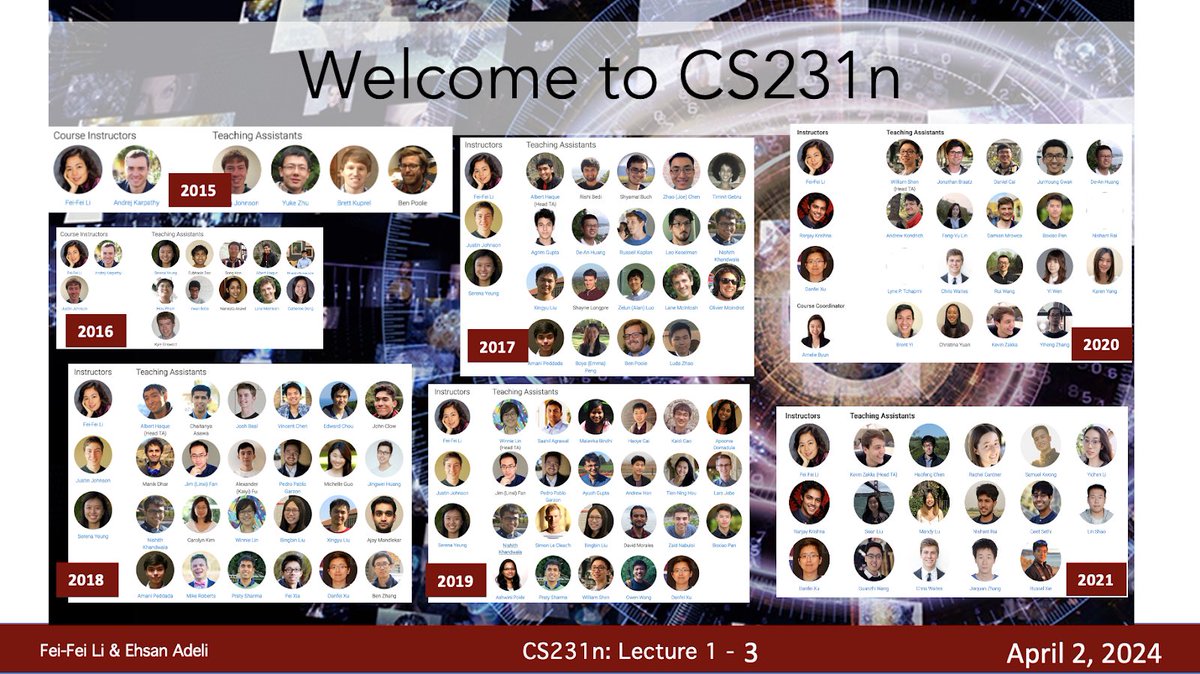

A thread by my awesome co-instructor @RanjayKrishna recapping @cs231n for the past quarter. It happens to be the *largest* class on campus for the quarter! Thanks to all the teaching staff, especially our head TA @kevin_zakka, for making this course possible!

Academic quarter recap: here's a staff photo after the last lecture of @cs231n. It's crazy that we were the largest course at Stanford this quarter. This year, we added new lectures and assignments (open sourced) on attention, transformers, and self-supervised learning.

1

1

34

Object representation is a fundamental problem for robotic manipulation. Our #CoRL2023 work found that a *density field* can efficiently represent the state and dynamics of non-rigid objects such as granular material. To be presented as a spotlight & poster on Thursday!

How to represent granular materials for robot manipulation? Introducing our #CoRL2023 project: Neural Field Dynamics Model for Granular Object Piles Manipulation, a field-based dynamics model for granular object piles manipulation. 🌐 👇 Thread

0

5

34

Accepted to ICRA 2020! Paper & code:

We present 6-PACK, an RGB-D category-level 6D pose tracker that generalizes between instances of classes based on a set of anchors and keypoints. No 3D models required! Code+Paper: w/ Chen Wang @danfei_xu Jun Lv @cewu_lu @silviocinguetta @drfeifei @yukez

2

7

33

Join us on Sunday at 9:00-1:30pm PT for the Advances & Challenges in Imitation Learning for Robotics #RSS2020 Workshop: with an exciting list of speakers! Live streaming at

1

3

32

It was an honor to have been part of this epic journey!

It’s that time of the year - first lecture of @cs231n !! It’s the 9th year since @karpathy and I started this journey in 2015, what an incredible decade of AI and computer vision! Am so excited to this new crop of students in CS231n! (Co-instructing with @eadeli this year 😍🤩)

0

1

31

Whoa this is huge! No more wrangling w/ python2 compatibility & root access issues.

Incredibly happy that our @RoboStack paper has been accepted to the @ieeeras Robotics & Automation Magazine 🥳. @RoboStack brings together #ROS @rosorg with @condaforge and @ProjectJupyter. Preprint: Find out some key benefits in this 🧵: 1/n

1

5

28

Check out our #ICCV2019 work on harnessing mid-level representations in training interactive agents.

We are releasing our #ICCV2019 work on goal-directed visual navigation. We introduced a method that harnesses different perception skills based on situational awareness. It makes a robot reach its goals more robustly and efficiently in new environments.

0

3

28

Check out our new work on imitation learning from human demos! We released a set of sim&real tasks, demo datasets, and a modular codebase & clean APIs to help you develop new algorithms!

Robot learning from human demos is powerful yet difficult due to a lack of standardized, high-quality datasets. We present the robomimic framework: a suite of tasks, large human datasets, and policy learning algorithms. Website: 1/

0

2

28

Blog post by @deanh_tw and me summarizing our line of work on generalizable imitation of long-horizon tasks: Neural Task Programming, Neural Task Graphs, and Continuous Relaxation of Symbolic Planner. Enjoy!

What if we can teach robots to do new tasks just by showing them one demonstration? In our newest blog post, @deanh_tw and @danfei_xu show us three approaches that leverage compositionality to solve long-horizon one-shot imitation learning problems.

1

4

27

@gtcomputing @ICatGT @GTrobotics @mlatgt In the meantime, I will spend my gap year at @nvidia Research. I couldn’t be more excited and I’m immensely grateful for my advisors @silviocinguetta @drfeifei and many collaborators and friends who helped me to get here.

0

1

27

Data fuels the progress in robotics, whether it's sim, real teleoperated, or auto-generated. Our workshop at #RSS2024 will bring together researchers from academia, industry, and startups around the world to share insights🧐 and hot takes 🔥.

Data is the key driving force behind success in robot learning. Our upcoming RSS 2024 workshop “Data Generation for Robotics” will feature exciting speakers, timely debates, and more! Submit by May 20th.

0

1

25

Robot auction sale by Intrinsic (Alphabet’s industrial automation/robotics startup). Sad to see this.

If you're a hardware biz or R&D lab in Silicon Valley, you should definitely be keeping your eye on the liquidation auctions, which are on fire right now. This one is auctioning off more than 100 new and used Kuka robot arms:

2

1

25

It's today! DeepRL workshop

@RobobertoMM @deanh_tw @yukez @silviocinguetta @drfeifei 2. Positive-Unlabeled Reward Learning. Deep Reinforcement Learning Workshop. Joint work with @notmisha. 3/

0

8

27

Our new work on training competent robot collaborators from human-human collaboration demonstrations! @corl_conf @stanfordsvl @StanfordAILab

0

4

27

Learning for high-precision manipulation is critical to bridge *intelligence* to repeatable *automation*. C3DM is a diffusion model that learns to remove noise from the input by "fixating" on the target object. To be presented at the Deployable Robot workshop at #CoRL2023 today!

Introducing C3DM 🤖 - a Constrained-Context Conditional Diffusion Model that solves robotic manipulation tasks with: ✅ high precision and ✅ robustness to distractions! 👇 Thread

0

2

27

Fantastic research led by Chen! Continuing our work on hierarchical imitation starting for real-world long-horizon manipulation. It turns out that we can train a high-level planner directly from *human video*. This greatly reduces the need for on-robot data and improves robustness 1/2

How to teach robots to perform long-horizon tasks efficiently and robustly 🦾? Introducing MimicPlay - an imitation learning algorithm that uses "cheap human play data". Our approach unlocks both real-time planning through raw perception and strong robustness to disturbances!🧵👇

1

1

24

To carry out long-horizon tasks, robots must plan far and wide into the future. What state space should the robot plan with, and how can they plan for objects & scenes that they have never seen before? See 👇 for our new work on Generalizable Task Planning (GenTP).

1/ Can we improve the generalization capability of a vision-based task planner with representation pretraining? Check out our RAL paper on learning to plan with pre-trained object-level representation. Website:

0

0

26

Active perception with NeRF! It’s quite rare to see a work that is both principled and empirically effective. Neural Visibility Field (NVF) led by @ShangjieXue is a delightful exception. NVF unifies both visibility and appearance uncertainty in a Bayes net framework and achieved.

How can robots efficiently explore and map unknown environments? 🤖📷. Introducing Neural Visibility Field (NVF), a principled framework to quantify uncertainty in NeRF for next-best-view planning. #CVPR2024 1/6. 🌐 👇 Thread

0

2

27

Excited to share our milestone in building generalizable long-horizon task solvers at #CoRL2023! As part of our long-term vision for a never-ending data engine for everyday tasks, HITL-TAMP combines the best of structured reasoning (TAMP) and end-to-end imitation learning.

How can humans help robots improve? Introducing Human-In-The-Loop Task and Motion Planning (HITL-TAMP), a perpetually-evolving TAMP system that learns visuomotor skills from human demos for contact-rich, long-horizon tasks. #CoRL2023. Website: 1/

0

2

26

Very nice post! Slightly different take: Scaling up should be the **question**, not the answer. Yes we need to scale up to more tasks, envs, robots, but there should be many possible answers to this question. Training on lots of data may be an answer but should not be the only one.

There was a lot of good and interesting debate on "is scaling all we need to solve robotics?" at #CoRL23. I spent some time writing up a blog post about all the points I heard on both sides:

1

1

24

Our paper on learning generalizable neural programs for complex robot tasks will appear in #icra2018! See you soon. Arxiv: Two minutes paper: Video:

0

7

22

We! are! hiring! 👏

Yo! Georgia Tech School of Interactive Computing @ICatGT is live! Come be part of the coolest computing science department in the world

0

1

20

Excited about hierarchy, abstraction, model learning, skill learning, planning with LLMs, and benchmarking long-horizon manipulation tasks? Submit a paper to our learning for Task and Motion Planning (L4TAMP) workshop at RSS'23!

We are organizing the RSS’23 Workshop on Learning for Task and Motion Planning. Contributions of short papers or Blue Sky papers are due May 19th, 2023.

1

2

18

A neat extension of our Regression Planning Networks to 3D scene graphs and more fine-grained skills!

Delighted to present our recent work on hierarchical Scene Graphs for neuro-symbolic manipulation planning. We use 3D Scene Graphs as an object-centric abstraction to reason about long-horizon tasks. w/ @yifengzhu_ut, Jonathan Tremblay, Stan Birchfield

0

1

18

As the Deep Learning course at GT draws to a close this semester, I'd like to extend a heartfelt thanks to @WilliamBarrHeld. His exceptional lecture and programming assignment on Transformers and LLMs were truly enlightening. Don't miss out on these incredible resources!

For @danfei_xu's Deep Learning course this semester, I made a homework for Transformers and gave a lecture on LLMs. I'm sharing resources I made for both in hopes they are useful for others! Lecture Slides: HW Colab:

1

0

17

Our new work on learning real-time 6DoF tracking from RGB-D data.

We present 6-PACK, an RGB-D category-level 6D pose tracker that generalizes between instances of classes based on a set of anchors and keypoints. No 3D models required! Code+Paper: w/ Chen Wang @danfei_xu Jun Lv @cewu_lu @silviocinguetta @drfeifei @yukez

0

1

17

OPT is practically the only pathway for international students like me to legally work in the U.S. after graduation. This is beyond short-sighted.

The OPT program is crucial for retaining talented international students in the US. I relied on the OPT myself for summer internships during college and for full-time work after graduation.

1

1

15

Make sure to check out our workshop on NeRF + robotics happening tomorrow at #ICCV2023, Paris and virtually!

#ICCV2023 Join us for the “Neural Fields for Autonomous Driving and Robotics” workshop 8:55-17:00 on 10/3 at S03! We have a great lineup of speakers @vincesitzmann @jon_barron @AjdDavison @LingjieLiu1 @jiajunwu_cs @lucacarlone1 @Jamie_Shotton

1

0

16

This is a great effort to collect a large robot dataset on a standardized hardware setup! Also happy to see that Robomimic is adopted as the core policy learning infrastructure.

After two years, it is my pleasure to introduce “DROID: A Large-Scale In-the-Wild Robot Manipulation Dataset”. DROID is the most diverse robotic interaction dataset ever released, including 385 hours of data collected across 564 diverse scenes in real-world households and offices

0

2

14

Cool idea: learning feature embeddings via multi-view contrastive loss, similar to DenseObjectNet by Florence et al., 2018.

Our #ECCV2020 paper is now on arXiv. We show that 3D object tracking emerges automatically when you train for multi-view correspondence. No object labels necessary! Video: results from KITTI. Bottom right shows a bird's eye view of the learned 3D features.

0

0

15