Jeannette Bohg

@leto__jean

Followers: 6,960

Following: 506

Media: 193

Statuses: 1,541

Assistant Professor @StanfordAILab @StanfordIPRL . Perception, learning and control for autonomous robotic manipulation #BlackLivesMatter she/her 🌈

Stanford, CA

Joined May 2017

Pinned Tweet

We dramatically sped up Diffusion policies through consistency distillation.

With the resulting single step policy, we can run fast inference on laptop GPUs and robot on-board compute.

👇

Diffusion Policies are powerful and widely used. We made them much faster.

Consistency Policy bridges consistency distillation techniques to the robotics domain and enables 10-100x faster policy inference with comparable performance.

Accepted at #RSS2024

10

62

293

1

14

104

Dear Academic colleagues, please VOTE in November!

Your international colleagues and students cannot.

New @ICEgov policy regarding F-1 visa international students is horrible & will hurt the US, students, and universities. Pushes universities to offer in-person classes even if unsafe or no pedagogical benefit, or students to leave US amidst pandemic and risk inability to return.

49

833

3K

0

24

208

We took first place at the NuScenes Tracking Challenge (AI Driving Olympics @NeurIPSConf)

Spoiler alert: It's a Kalman filter!

We beat the AB3DMOT baseline by a large margin.

arXiv

1

36

192

Don't we all want our robots to do many different tasks?

We learned a single policy for 78 manipulation tasks.

We don't use goal images. We use natural language instructions to index the task.

Chat with us tomorrow 8am PDT

#RSS2020

1/4

1

29

156

How do you safely navigate a complex world with only an RGB camera?

Turns out, the density represented by NeRFs can serve as a collision metric.

👇

3

23

141

Seeing TidyBot come together in my lab has been fun! And yes, our lab has been exceptionally clean 🧹

Many of you commented on the mobile platform, and I'm so pleased to see it move!

Let me tell you the story behind these platforms!

🧵

2

19

117

I am very grateful to the Sloan Foundation for recognizing and supporting the research done at my lab @StanfordIPRL!

This wouldn't have been possible without the hard work of my students, postdocs and the support by my mentors!

We are delighted to announce the winners of this year’s Sloan Research Fellowship! These outstanding researchers are shining examples of innovation and impact—and we are thrilled to support them. Meet the winners here: 🎉

#SloanFellow

#STEM

#ScienceTwitter

13

61

409

11

4

105

Thank you so much for all the congratulations on the 2020 RSS Early Career Award!

I'm honored to receive it and astounded and happy to hear that I serve as an inspiration.

I want to mention who inspires me

Congratulations @leto__jean on your 2020 RSS Early Career Award! You are an inspiration to many.

0

0

2

5

3

101

Proud of my amazing students who worked incredibly hard for this 🙌

Members of @StanfordIPRL submitted a total of 11 papers for ICRA.

We have 11 acceptances.

Congratulations everyone! 🎊🎉🗼

6

3

139

2

1

87

At #CVPR2023, we present CARTO - a model that reconstructs articulated objects from a single stereo image.

This includes the object's 3D shape, 6D pose, size, joint type, and joint state. All this in a category agnostic fashion.

2

11

73

I was invited to the #IROS2020 Tutorial on Deep Representation and Estimation of State for Robotics by @deanh_tw @danfei_xu @KuanFang:

I created a mini-course on Representations and their interplay with decision-making & control:

1

10

70

We are presenting our new work on learning user-preferred mappings for assistive teleop #IROS2020!

Making assistive teleoperation more intuitive by learning user-preferred mappings for latent actions w/ Mengxi Li, @loseydp and @leto__jean

1

14

93

1

9

71

So happy to see @Stanford joining our peer institutions in an amicus brief to support Harvard and MIT in a lawsuit against the #StudentBan

The letter of MTL to the acting secretary of the Department of Homeland Security

1

6

69

Are there more Roboticists out there right now, on a deadline grind, wondering: How is this even possible?

Such an incredible achievement. So many possible points of failure. So many years of work.

JUST, WOW.😍

Grab the popcorn because the @NASAPersevere rover has sent us a one-of-a-kind video of her Mars landing. For the first time in history, we can see multiple angles of what it looks like to touch down on the Red Planet. #CountdownToMars

451

7K

23K

2

3

69

New Grasping Data Set Alert 🚨

We trained a model called UniGrasp to generate stable grasps for multi-fingered hands.

Code + Data now available including not only a variety of objects but also hands:

0

10

62

Understanding dynamic 3D environments is crucial for robotic agents.

We propose MeteorNet for learning representations of dynamic 3D point cloud sequences (Oral @ICCV19).

Project Page:

Arxiv:

1

8

59

Learning of manipulation skills often considers point-to-point motion only. Many manipulation tasks are however periodic and repeated indefinitely:

🧵🥣🛠️🪚🧼🪠🧹🪡✂️ 🪛🪥

We learn and represent periodic policies with complex deformable objects or granular material from vision

0

11

60

Ever worked on a real robot in a not so controlled environment?

Then you may have experienced changing lighting conditions, sensor drift or just a broken sensor.

We propose a way to deal with these unexpected measurements through crossmodal compensation

🧵

0

8

59

We want our robots to extrapolate from a few examples of a manipulation task to many variations.

Embedding equivariance in both our object representation and policy architecture allows our 🤖 to do just that.

0

5

58

✋

My PhD advisor is the power roboticist and woman @DanicaKragic

Where would I be without her? I aspire to be to my students what Dani has been to me!

2

1

57

Happy to announce that @StanfordIPRL is part of the challenge and has qualified for Phase 2!

We are so excited to remotely work on a real robot

3

7

54

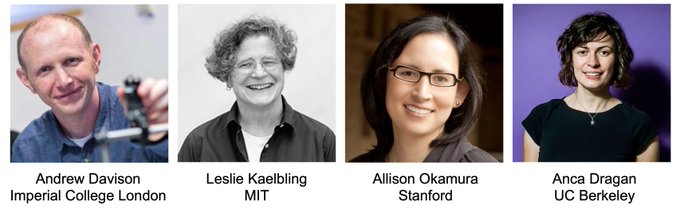

Mark your calendars for this new public Robotics Seminar Series starting Friday May 15th 1PM EST with @AjdDavison!

Upcoming amazing speakers: @AjdDavison, Leslie Kaelbling, Allison Okamura, @ancadianadragan

Hope to see you there and participate in the Q&As!

We are launching a new #virtual #robotics seminar “Robotics Today--A Series of Technical Talks”. Andrew Davison @AjdDavison will give the first seminar “From SLAM to Spatial AI” on Friday May 15th 1PM EST.

Watch and learn more about the seminars here:

2

70

224

1

9

55

We embedded equivariance in Diffusion policies to let them generalize to scenarios that would otherwise be out of distribution.

👇 Check Jingyun's thread for all the details!

0

10

54

Thank you Scott Kuindersma @BostonDynamics, for this very exciting and entertaining talk! On top of all the controller details, I loved hearing about how you integrate perception.

Re-watch talk and Q&A here!

0

9

53

Proud of my former grad student Toki Migimatsu!

I love the self-corrections of mistakes at the end of the video

1

0

51

Working with robots? Sometimes having trouble with coordinate transforms and rotations? Here are STL files for printing these extremely useful 3D coordinate systems with magnets, so you can attach them to your whiteboard. Kudos to Felix from @MPI_IS

3

14

49

Looking forward to presenting our line of work on enabling robots to do long-horizon task planning by leveraging large-scale human activity datasets!

#CVPR2021

Thanks for the invite!

Sun 20/6 10:00 EDT (full day)

EPIC Workshop with 5 challenges announcement, and live talks with Q&A by winners. Keynotes by L Torresani, D Krandall @leto__jean @davsca1 and K Grauman. Program:

2/6

1

1

8

0

5

50

We have previously shown how multimodal representation learning enables generalization in contact-rich manipulation tasks.

I'm excited about this new #CVPR2022 work that is object-centric and includes vision, touch and audio.

Excited to share our #CVPR2022 paper ObjectFolder 2.0, a multisensory object dataset with visual, acoustic, and tactile data for Sim2Real transfer.

Paper:

Project page:

Dataset/Code:

Details with narration👇

4

23

144

0

3

49

Tune in! Because @siddhss5 wants to share some important open research problems with you so that he can retire :)

1

3

48

Fusing multiple modalities for state estimation requires dynamics and measurement models.

Differentiable filters enable learning these models end-to-end while retaining the algorithmic structure of recursive filters.

Project Page:

#IROS2020

2

12

47

How do you autonomously learn a library of composable, visuomotor skills?

At #IROS2023 we present an approach that can create novel yet feasible tasks to gradually train the skill policies on harder and harder tasks.

Monday morning Poster Session: MoAIP-19.9

0

8

47

I'll be presenting EquiBot today (Friday) at #RSS2024 in the Workshop on Geometric and Algebraic Structure in Robot learning.

Room: ME B - Newton

Poster Session: 3-4pm

1

5

47

How do you sequence learned skills for a manipulation task? Use STAP to plan with learned skills and maximize the expected success of each skill in the plan where success is encoded in Q-functions.

Today 3-4pm

#ICRA2023

at Pod 42 WePO2S-21.1

@agiachris

4

5

47

This afternoon at #ICRA2024, we are presenting RoboFuME - a method that implements the pre-train + fine-tuning paradigm for multi-task robot policies.

4:30-6pm at "Big Data in Robotics and Automation"

Room: CC-313

1

6

44

It is this time of the year: We are teaching rotations in CS336.

Check out these awesome 3D printed coordinate frames for visualizing rotations and making your life easier.

Working with robots? Sometimes having trouble with coordinate transforms and rotations? Here are STL files for printing these extremely useful 3D coordinate systems with magnets, so you can attach them to your whiteboard. Kudos to Felix from @MPI_IS

3

14

49

2

3

43

Really cool, out-of-the-box hand-design allowing for new ways to think about in-hand manipulation!

My student @linshaonju also contributed with his wisdom on policy learning - in this case through imitation

Manipulating objects using a robot grasper with steerable rolling fingertips. Our Roller Grasper V2 project is being presented at #IROS2020

Details at:

3

7

56

1

4

41

Large Language Models promise to replace task planners in robotics.

But how do we verify that these plans are correct - especially for tasks that require long-horizon reasoning?

👇Check out Kevin's 🧵 on text2motion!

0

9

43

Looking forward to discussing this work with all of you tomorrow at our poster #ICRA2022

Check out our paper “Vision-Only Robot Navigation in a Neural Radiance World” at #ICRA2022! Come learn how to use NeRFs for trajectory optimization, state estimations, and MPC.

We’ll be presenting at 4:15pm on Thursday in Room 124!

More info:

1

28

203

1

7

42

A lot of us @StanfordEng spend a lot of time at Bytes and Coupa. The employees there are hourly, and with Stanford’s effective shutdown, their hours are being cut or people are being let go.

This fundraiser is to help them with financial assistance:

1

8

42

Thanks so much @siddhss5 for this wonderful talk on motion planning! You can re-watch it below.

Some of the audience questions were on "What should I work on?"

Sidd's advice: Be fearless!

To unwrap this, check out his talk on this topic here:

1

4

41

How do you autonomously learn a library of composable, visuomotor skills?

We propose an approach that can create novel yet feasible tasks to gradually train the skill policies on harder and harder tasks.

1

6

39

Tying a knot is a topological task that has infinitely many geometric solutions. How can a robot translate a topological plan into a motion plan?

We learn a library of topological motion primitives for creating various knots.

#iros2020

Project:

1

3

38

Thanks @StanfordEng for this profile!

Also thanks to @ai4allorg for everything you do and specifically for organizing the fireside chat described in this story!

#IAmAnEngineer

: I'm a first-generation university graduate who grew up in communist East Germany. I'm passionate about mentoring young women in science and engineering. -Jeannette Bohg, Assistant Professor of Computer Science

0

13

55

2

4

38

+ The six lessons for computer vision.

At least 4 of which robotics has acknowledged for decades.

1

2

38

We want robots that can manipulate any articulated object 🚪🪟📪🗃️🗄️📒📦

Turns out: this is still harder than you think!

AO-Grasp proposes robot grasps on articulated objects and generalizes 0⃣ shot from sim2real.

Talk to us at #BARS2023

1

5

37

The Open-X Embodiment project will be presented today, Wed at #ICRA2024.

Award Session on Robot Manipulation 🎉

Room: CC-Main Hall

Time: 10:30-12:00

0

10

36

Today at #RSS2024 we are presenting SpringGrasp at the evening grasping session.

Come to our poster at 6pm with all your comments and questions!

1

4

37

Come to our NeRF-Shop today at #ICRA22!

We may have some live demos 😊

We're excited to announce our @ieee_ras_icra workshop "Motion Planning with Implicit Neural Representations of Geometry!"

We'll discuss the future of INRs -- like DeepSDFs, NeRFs, and more -- in robotics.

Submissions due April 15.

1

24

136

1

6

35

Yes people, we are aiming for in-person 🥳🎆🎊

Learning for Dynamics and Control 2022 will take place @Stanford. More information can be found at

@CSSIEEE @NeurIPSConf

1

29

131

0

0

34

This is such a great opportunity!

We are happy to announce the organized by @MPI_IS, @MILAMontreal and @NYU. Each team passing the simulation stage will have more than 100 real-robot-hours. Coding in Python, simulator in PyBullet and tutorials are provided. Start 3rd Aug.

2

80

232

0

4

35

Dedicated to giving girls the opportunity to explore robotics. Age doesn't matter 🤖 Featuring Naos, Athena & Apollo @MPI_IS, @FRANKAEMIKA & Ocean1 @Stanford

0

2

34

So excited to see this news! Matt is the best. Congratulations to CMU and to Matt.

He was a PostDoc at KTH in Stockholm when I was doing my PhD. Working with Matt gave me such a boost of energy! Truly enjoyed it!

Can't wait to see him leading RI

0

1

33

Super excited about co-organizing this virtual Robotics Retrospectives workshop @rssconf!

The good thing about making it virtual? We don't have to worry about visas! 😊

Check out the webpage for submission info and our virtual formats

Very excited about the program of our virtual Robotics Retrospectives workshop! Want to participate? Submit your reflections on your own past work or a subfield of robotics.

with @leto__jean, Arunkumar Byravan and Akshara Rai.

0

0

13

1

6

33

We want our robots to extrapolate from a few examples of a manipulation task to many variations.

Embedding equivariance in both our object representation and policy architecture allows our 🤖 to do just that.

Today Wed

#ICRA2024

Room: CC-418

Time: 1:30-3pm

Poster: 4:30-6pm

0

9

33

My first time at #CVPR2019. I'll be giving a talk at the Vision meets Cognition ws in 103c along with other great speakers! Come by if you'd like to hear about the challenges and opportunities of bringing together vision and robotics

3

0

32

Extremely happy to have @contactrika join @StanfordIPRL as a PostDoc in January through the CIFellows 2020 program!

Can't wait to work together :)

3

2

32

Tune in again on Friday! This week we have @ancadianadragan talking about how to finally get rid of this annoying thing called reward engineering :)

Watch live: 1 PM Friday, June 12: @Berkeley_EECS’s Anca Dragan @ancadianadragan #humanrobot interaction "Optimizing Intended Reward Functions: Extracting all the right information from all the right places"

1

7

16

2

1

31

@black_in_ai launches the 2020/21 Graduate Application Program for those of you who self-identify as Black and/or African and intend to apply for grad school this cycle.

More info here:

Paging @BlackInRobotics for signal boosting 🤖

1

24

31

Proud that our lab has contributed to this long-term data collection effort called Droid - an in-the-wild robot manipulation dataset of unprecedented diversity.

Amazing leadership by @SashaKhazatsky, @KarlPertsch and @chelseabfinn

🧵👇

0

2

31

Love this!

A lecture that gives insights into how research directions come about, how much effort and time they take to follow through and about the context of the research community in which they blossom.

Last semester, a guest lecture fell through last minute. So improvised a lecture on the backstories behind some research papers -- i.e. not about the research results, but about how we ended up doing research in that direction.

#metaresearch

Online now:

1

90

504

0

1

30

Love the idea of this retrospective NeurIPS WS!

The overarching goal of retrospectives is to do better science, increase the openness and accessibility of the machine learning field, and to show that it’s okay to make mistakes.

One of those at #ICRA2020?

4

2

30

Hi #ICRA2023 folks, we are presenting text2motion that can verify LLM plans in the 'Pretraining for Robotics' Workshop this morning.

Location: ICC Capital Suite 7

Spotlights 10am, Poster session 10:20am. CU there with @agiachris

0

3

30

How can we adopt the successful pre-training + finetuning paradigm in Robotics?

Presenting RoboFuME 🤖that can learn new manipulation tasks by finetuning a multi-task policy.

And all of that with minimal human supervision!

👇

0

4

30

Key Insight of TidyBot:

Summarization with LLMs is an effective way to achieve generalization in robotics from just a few example preferences.

Jimmy presents TidyBot on Monday afternoon at #IROS2023: MoBIP-16.5

Come by to chat!

0

4

30

@linshaonju @StanfordIPRL @krishpopdesu

Thanks for all the congrats 😊

Maybe some perspective on the 100% success rate:

4 of those papers were rejected before. Although it can be frustrating to be rejected, we worked hard on improving them based on the reviews.

So I am extra happy for those 4! And never give up 🙌

1

2

30

A blog post on our work on fusing vision and touch in robotics!

And as a bonus, check out code & data here:

While humans can seamlessly combine our sensory inputs, can we teach robots to do the same? @michellearning writes about how we can use self-supervision to learn a representation that combines vision and touch.

2

14

60

0

5

29

We worked on a new, more convenient way to goal-condition robot policies: user sketches! 👨‍🎨

Sketches are less ambiguous than language and not too specific (like goal images).

1

5

29