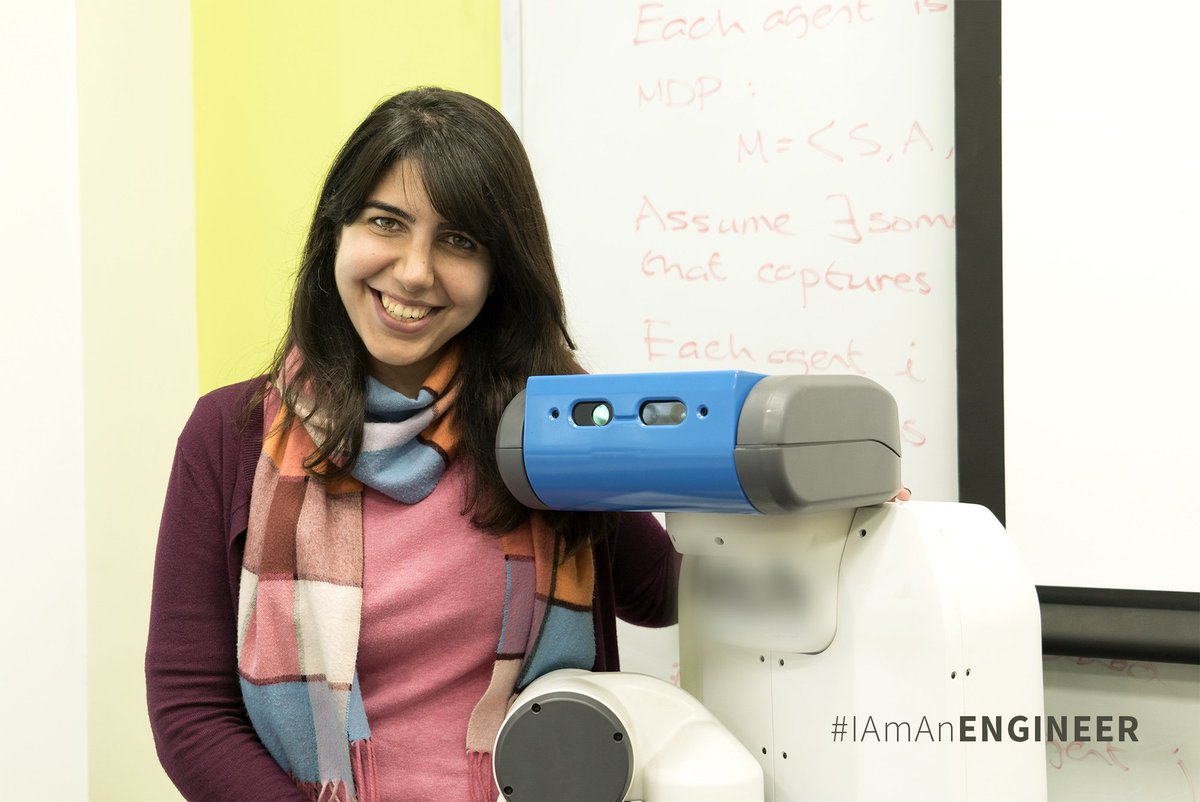

Anca Dragan

@ancadianadragan

Followers: 11K · Following: 334 · Media: 17 · Statuses: 279

director of AI safety & alignment at Google DeepMind • associate professor at UC Berkeley EECS • proud mom of an amazing 2yr old

San Francisco, CA

Joined March 2018

Ok @demishassabis, I guess "I got a Nobel prize" is an ok reason to cancel our meeting. :) In all seriousness though, huge congratulations to you and the entire AlphaFold team!!! Inspiring progress and so happy to see it recognized.

1

10

685

So excited and so very humbled to be stepping in to head AI Safety and Alignment at @GoogleDeepMind. Lots of work ahead, both for present-day issues and for extreme risks in anticipation of capabilities advancing.

We're excited to welcome Professor @AncaDianaDragan from @UCBerkeley as our Head of AI Safety and Alignment to guide how we develop and deploy advanced AI systems responsibly. She explains what her role involves. ↓

31

38

588

When I joined @GoogleDeepMind last year, I came across this incredible group of people working on deliberative alignment, and managed to convince them to join my team in a quest to account for viewpoint and value pluralism in AI. Their Science paper is on AI-assisted deliberation.

4

12

223

Imagine asking an LLM to explain RL to you, or to book a trip for you. Should the LLM just go for it, or should it first ask you clarifying questions to make sure it understands your goal and background? We think the latter: (w Joey Hong and @svlevine).

4

29

203

Gemini 1.5 Pro is the safest model on the Scale Adversarial Robustness Leaderboard! We’ve made a number of innovations -- which importantly also led to improved helpfulness -- but the key is making safety a core priority for the entire team, not an afterthought. Read more about.

1/ Scale is announcing our latest SEAL Leaderboard on Adversarial Robustness! 🛡️ Red team-generated prompts. 🎯 Focused on universal harm scenarios. 🔍 Transparent eval methods. SEAL evals are private (not overfit), expert evals that refresh periodically.

28

25

167

Proud to share one of the first projects I've worked on since joining @GoogleDeepMind earlier this year: our Frontier Safety Framework. Let’s proactively assess the potential for future risks to arise from frontier models, and get ahead of them!

5

21

135

I had a fun time talking to @FryRsquared about AI safety at Google DeepMind -- what is alignment / amplified oversight / frontier safety / robustness / present day safety / & even a little bit on assistance games :)

Join host @FryRSquared as she speaks with @AncaDianaDragan, who leads safety research at Google DeepMind. 🌐 They explore the challenges of aligning AI with human preferences, oversight at scale, and the importance of mitigating both near and long-term risks. ↓

10

12

126

Congrats safety&alignment team for an honorable mention for the outstanding paper award at ICLR this year, for "Robust Agents Learn Causal World Models" @tom4everitt.

2

13

113

On the research side of @Waymo, we've been experimenting with what it takes to learn a good driving model from only a dataset of expert examples. Synthesizing perturbations and auxiliary losses helped tremendously, and the model actually drove a real car!

0

32

109

Thanks @pabbeel for inviting me to your podcast, it was very fun to have an interview with a colleague and close friend! :)

On Ep15, I sit down with the amazing @ancadianadragan, Prof at Berkeley and Staff Research Scientist at Waymo. She explains why Asimov's 3 laws of robotics need updating, how to instill human values in AI and make driverless cars naturally reason about other cars and humans.

1

8

92

Proud of my team for building safety into these models and watching out for future risks. More on this soon with our Gemini technical report, and prep ahead of the AI Seoul Summit!!

Making great progress on the Gemini Era. At #GoogleIO we shared 2M long context breakthrough with 1.5 Pro and announced Gemini 1.5 Flash, a lighter-weight multimodal model with long context designed to be fast and cost-efficient to serve at scale. More:

1

9

84

My first attempt at a talk for a public audience, explaining some of the intricacies of human-robot coordination. Also a non-technical overview of work with @DorsaSadigh, Jaime Fisac, @andreaBajcsy, and collaborators from Claire Tomlin's group:

0

7

76

Alignment becomes even harder to figure out when you start accounting for changing values. And how AI actions might influence that change.

Excited to share a unifying formalism for the main problem I’ve tackled since starting my PhD! 🎉 Current AI Alignment techniques ignore the fact that human preferences/values can change. What would it take to account for this? 🤔 A thread 🧵⬇️

3

7

78

I think this is a pretty big deal. It's all deterministic, but even so that's where the deep RL big results started. TL;DR: whether or not you can just be greedy(ish) on the random policy's value function predicts PPO performance.

Excited to present our new paper on bridging the theory-practice gap in RL! For the first time, we give *provable* sample complexity bounds that closely align with *real deep RL algorithms'* performance in complex environments like Atari and Procgen.

3

3

62

Super proud of and happy for @DorsaSadigh, so well deserved!!!

Congratulations to @StanfordAILab faculty Dorsa Sadigh on receiving an MIT Tech Review TR-35 award for her work on teaching robots to be better collaborators with people.

1

2

62

congrats @andreea7b for another HRI best paper nomination, this time for getting human input that is designed to focus explicitly on what the robot is still missing

1

4

61

thank you @demishassabis, it's been a great week for Gemini all around, go team!

Great to see Gemini 1.5 Pro top the new @scale_ai leaderboard for adversarial robustness! Congrats to the entire Gemini team, and special thanks to @ancadianadragan & the AI safety team for leading the charge on building in robustness to our models as a core capability.

2

2

59

@GaryMarcus Dudes. Is this really constructive scientific debate or are you two just sh***ing on each other at this point? We could ask for clarification instead of accusing inconsistency. I for one would like to learn from you both, not have my BP rise every time I go on twitter.

1

0

60

Hard at work on supervised/imitation learning. Fei-Fei, you'll like this ;) @ai4allorg @berkeley_ai @drfeifei

3

12

56

Assistance via empowerment: agents can assist humans without inferring their goals or limiting their autonomy by increasing the human’s controllability of their environment, i.e. their ability to affect the environment through actions (also @NeurIPSConf)

1

10

50

assistive typing: map neural activity (ECoG)/gaze to text by learning from the user "pressing" backspace to undo; most exciting: tested by UCSF w. patient with quadriplegia! @interact_ucb + @svlevine + @KaruneshGanguly's labs, led by @sidgreddy and Jensen Gao

1

10

50

We're running the second edition of the @berkeley_ai @ai4allorg camp this year, starting in just 24hours. We're excited to teach talented high-school students from low-income communities about human-centered AI!

0

13

52

It was wonderful to be on NPR Marketplace (I love @NPR!!) talking about how game theory applies to human-robot interaction :)

2

8

48

I usually worry about aligning capable models, but... a weak model can do bad by tapping into a perfectly aligned capable model multiple times, with benign requests. Hard tasks are sometimes decomposable into benign hard components + not-so-benign easy components; not to mention.

Model developers try to train “safe” models that refuse to help with malicious tasks like hacking, but in new work with @JacobSteinhardt and @ancadianadragan, we show that such models still enable misuse: adversaries can combine multiple safe models to bypass safeguards 1/n

1

5

49

Offline RL figures out to block you from reaching the tomatoes so you change to onions if that's better, or put a plate next to you to get you to start plating. AI can guide us to overcome our suboptimalities and biases if it knows what we value, but... will it?

Offline RL can analyze data of human interaction & figure out how to *influence* humans. If we play a game, RL can examine how we play together & figure out how to play with us to get us to do better! We study this in our new paper, led by Joey Hong: 🧵👇

0

7

46

Let's think of language utterances from a user as helping the agent better predict the world!

How can agents understand the world from diverse language? 🌎 Excited to introduce Dynalang, an agent that learns to understand language by *making predictions about the future* with a multimodal world model!

1

7

43

So happy to have @noahdgoodman onboard -- he's going to be invaluable in a number of alignment areas, from group/deliberative alignment, to better understanding human feedback, to helping us better evaluate our pretraining, and increase alignment-related reasoning capabilities.

This seems like a good time to mention that I've taken a part-time role at @GoogleDeepMind working on AI Safety and Alignment!

0

1

42

I think this might be the most fun thing @sidgreddy did in his PhD -- learning interfaces when it is not obvious how to design a natural one, by observing that an interface is more intuitive if the person's input has lower entropy when using it; no supervision required.

We've come up with a completely unsupervised human-in-the-loop RL algorithm for translating user commands into robot/computer actions. Below: an interface that maps hand gesture commands to Lunar Lander thruster actions, learned from scratch.

1

5

42

A single state leaks information about the reward function. We can learn from it by simulating what might have happened in the past that led to that state (previously in small toy environments, now the scaled-up version in slightly less-toy environments :) @interact_ucb.

New #ICLR2021 paper by @davlindner, me, @pabbeel and @ancadianadragan, where we learn rewards from the state of the world. This HalfCheetah was trained from a single state sampled from a balancing policy! 💡 Blog: 📑 Paper: (1/5)

0

9

42

Congrats @NeelNanda5 and team on this release! Here are Neel's open problems we hope the community can solve with GemmaScope. (and thanks Neel for all you've taught me about mech interp in the past half year).

And there's a *lot* of open problems that we hope Gemma Scope can help solve. As a starting point, here's a list I made - though we're also excited to see however else the community applies it! See the full list here:

2

0

41

Ion Stoica got me to speak at this -- somewhat different from my typical audiences, but will be fun to share a bit about the challenges of ML for interaction with people.

#RaySummit is happening in 1 week! If you want to learn how companies like @OpenAI, @Uber, @Cruise, @Shopify, @lyft, @Spotify, and @Instacart are building their next generation ML infrastructure, join us!

1

3

37

Very proud of @DorsaSadigh!!

#IAmAnEngineer: I didn't fully appreciate the value of role models until I met Anca Dragan. Before meeting her I had male advisors who were terrific but I couldn't see myself in them the way I could see myself in Anca. - @DorsaSadigh

0

0

34

After a few months of work, CoRL is finally happening! Excited about the program we lined up, including this great tutorial by @beenwrekt. Thanks to all authors for their submissions, to our keynote and tutorial speakers for making the trip to Zurich, and to the local organizers.

0

1

29

Safe and steady wins the race :)

We’re excited to announce that we’ve closed an oversubscribed investment round of $5.6B, led by Alphabet, with continued participation from @a16z, @Fidelity, Perry Creek, @silverlake_news, Tiger Global, and @TRowePrice. More:

1

3

31

Excited to welcome @daniel_s_brown to InterACT! :)

I successfully defended my PhD titled "Safe and Efficient Inverse Reinforcement Learning!". Special thanks to my wonderful committee: @scottniekum, Peter Stone, Ufuk Topcu, and @ancadianadragan. Very excited to start a postdoc in Sept with @ancadianadragan and @ken_goldberg.

0

1

30

Open SAEs everywhere all at once!

Sparse Autoencoders act like a microscope for AI internals. They're a powerful tool for interpretability, but training costs limit research. Announcing Gemma Scope: An open suite of SAEs on every layer & sublayer of Gemma 2 2B & 9B! We hope to enable even more ambitious work

0

0

30

Sophia, one of our participants in the @berkeley_ai @ai4allorg camp for high school students, wrote about her experience (out of her own initiative!) -- including her project using @MicahCarroll's Overcooked-inspired human-AI collaboration environment <3

0

2

29

A nice improvement on training SAEs (even on top of Gated SAEs), and we have big plans in this space re: Gemma 2! Wonderful to see the progress from @NeelNanda5 and team!

New GDM mech interp paper led by @sen_r: JumpReLU SAEs, a new SOTA SAE method! We replace standard ReLUs with discontinuous JumpReLUs & train directly for L0 with straight-through estimators. We'll soon release hundreds of open JumpReLU SAEs on Gemma 2, apply now for early access!

0

2

28

Our NeurIPS workshop on autonomous driving and transportation was quite well attended. Thanks to the great speakers from industry and academia alike! @aurora_inno @Waymo @zoox @oxbotica @PonyAI_tech @DorsaSadigh

3

3

28

So proud of @andreea7b!!!

Very excited to announce that I'll be joining @MIT's AeroAstro department as a Boeing Assistant Professor in Fall 2024. I'm thankful to my mentors and collaborators who have supported me during my PhD, and I look forward to working with students and colleagues at @MITEngineering.

0

0

26

very nice to see progress in the SAE space by the team -- getting us just a little bit closer to determining what "concepts" LLMs use!

Fantastic work from @sen_r and @ArthurConmy - done in an impressive 2 week paper sprint! Gated SAEs are a new sparse autoencoder architecture that seems to be a major Pareto improvement. This is now my team's preferred way to train SAEs, and I hope it'll accelerate the community's work!

0

0

27

Empower the human as an alternative to inferring the reward -- with @vivek_myers @svlevine. cc: @d_yuqing @RichardMCNgo

Human behaviors often don't correspond to maximizing a scalar reward. How can we create aligned AI agents without inferring and maximizing a reward? I'll have a poster at @mhf_icml2024 at 11:30 on a scalable contrastive objective for empowering humans to achieve different goals

4

3

25

Check out Andreea's work on aligning the representation used for reward functions with what people internally care about. Idea: ask similarity queries. Seems advantageous over getting at the representation via meta-reward-learning.

How can we learn one foundation model for HRI that generalizes across different human rewards as the task, preference, or context changes? Come see at #HRI2023 in the Thursday 13:30 session! Paper: w/ Yi Liu, @rohinmshah, @daniel_s_brown, @ancadianadragan

0

3

23

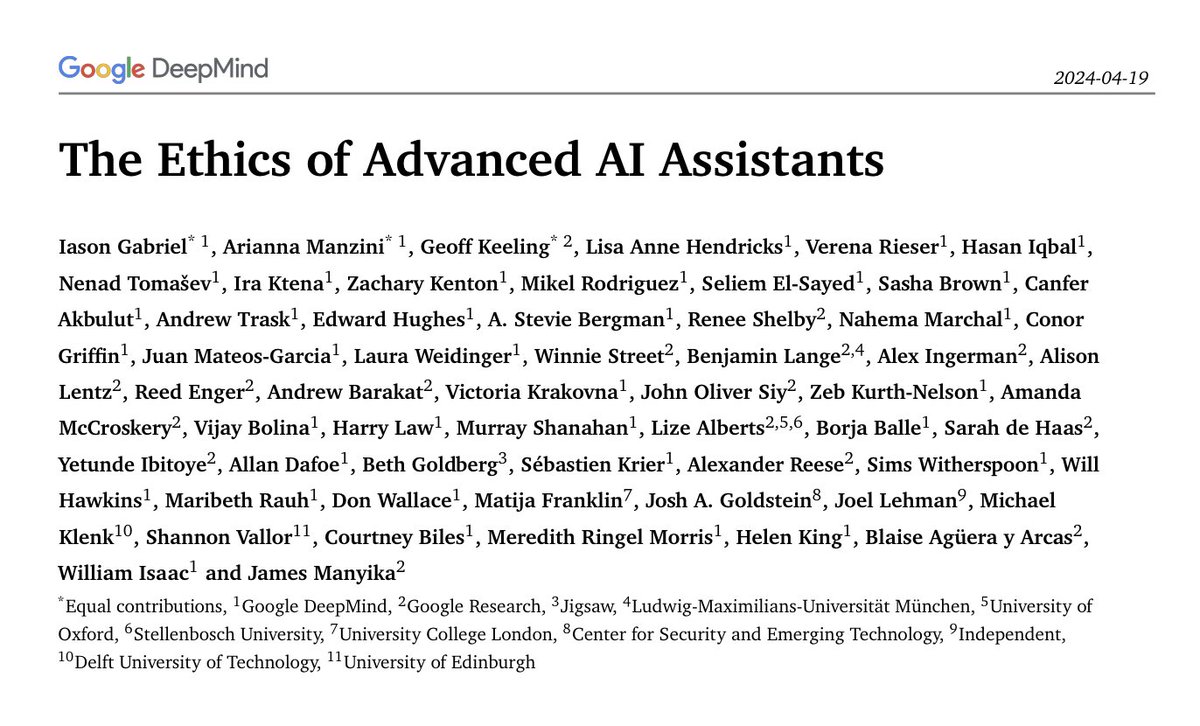

Really impressive work by Iason and colleagues.

1. What are the ethical and societal implications of advanced AI assistants? What might change in a world with more agentic AI? Our new paper explores these questions. It’s the result of a one year research collaboration involving 50+ researchers… a 🧵

0

1

24

@janleike I think you inspired some of this! I then ended up with @bakkermichiel at the same workshop on social choice in alignment and realized that what I was pitching there to do, he was already working on :)

0

0

22

We've been looking into additional sources of information about reward functions. We found a lot in the current state of the world, before the robot observes any demonstrated actions: humans have been acting already, and only some preferences explain the current state as a result.

New post/paper: learning human preferences from a single snapshot of the world — by thinking about what must have been the preferences to have ended up in this state. Eg robot shouldn’t knock vases off the table b/c being on tables is signal people have avoided knocking them off.

0

1

21

A few folks from AI Safety and Alignment at @GoogleDeepMind are speaking in this summer school!

Join us in Prague on July 17-20, 2024 for the 4th Human-aligned AI Summer School! We'll have researchers, students, and practitioners for four intensive days focused on the latest approaches to aligning AI systems with human values. You can apply now at

0

1

21

@chelseabfinn Makes a lot of sense, and this was the original way people did RLHF (it was called preference-based RL back then)

1

1

19

Learning from prefs and demos is more popular than ever, but we have to be careful about the rationality level we assume in human responses. Overestimating it is bad. Also, while demos are typically more informative, with very suboptimal humans we should stick to comparisons.

We are excited to announce that our paper “The Effect of Modeling Human Rationality Level on Learning Rewards from Multiple Feedback Types” will be presented at AAAI’23 on Sunday, February 12th, 2023. [1/8].

0

1

18

Thanks for organizing this!!

Watch live: 1 PM Friday, June 12: @Berkeley_EECS’s Anca Dragan @ancadianadragan #humanrobot interaction "Optimizing Intended Reward Functions: Extracting all the right information from all the right places"

1

0

15

A little write up from Berkeley Engineering on ICML work with @MicahCarroll and @dhadfieldmenell about evaluating and penalizing preference manipulation/shift by recommender systems

0

3

16

Sophie on some of our scalable oversight work <3

"We want to create a situation where we're empowering … human raters [of AI fact checkers] to be making better decisions than they would on their own." – Sophie Bridgers discussing scalable oversight and improving human-AI collaboration at the Vienna Alignment Workshop.

0

1

16

please consider joining @IasonGabriel, he's doing amazing work with his team.

Are you interested in exploring questions at the ethical frontier of AI research? If so, then take a look at this new opening in the humanity, ethics and alignment research team. HEART conducts interdisciplinary research to advance safe & beneficial AI.

0

2

16

Congratulations @dhadfieldmenell and Aditi, so proud of and happy for you!!

Today, Schmidt Futures is excited to announce the first cohort of AI2050 Early Career Fellows who will work on the hard problems we must solve in order for AI to benefit society. To learn more, visit:

1

0

15

"pragmatic" compression: instead of showing an image that's visually similar, learn to show an image that leads to the user doing same thing as they would have done on the original image; w @sidgreddy and @svlevine.


0

0

12

Our work on explaining deep RL performance continues at ICLR!

Last year we showed that deep RL performance in many *deterministic* environments can be explained by a property we call the effective horizon. In a new paper to be presented at @iclr_conf we show that the same property explains deep RL in *stochastic* environments as well! 🧵

0

0

14