Aidan Clark

@_aidan_clark_

Followers

5,212

Following

215

Media

36

Statuses

1,037

Research @OpenAI. Ex: @DeepMind, @BerkeleyDAGRS. Hae sententiae verbaque mihi soli sunt (These opinions and words are mine alone)

Joined November 2020


I am not convinced this paper was not written by ChatGPT. But I’m so confused. Was this reviewed by anyone? Can anyone be a Senior IEEE member? Where do I sign up?

24

19

216

To those in this sitch; my advice:

1) Do research. Grab a friend & write a workshop paper. Beg a prof for 1hr/month. However you do it, find a way.

2) SWE at a top lab is a better stepping stone than (e.g.) ML-Eng at a project company.

3) You'll need skill & luck. Prep for that.

3

0

143

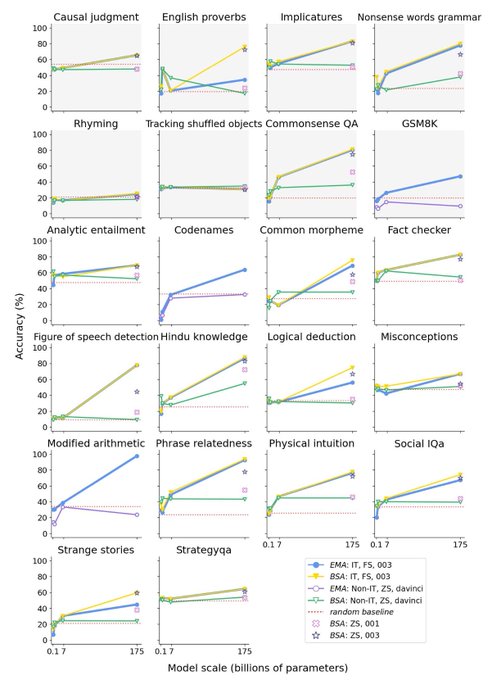

I'm extremely proud to share this work we've done over the last 18 months, and give infinite thanks to the awesome collaborators who made it possible. I firmly believe conditionality is the future of neural networks! Some quick thoughts.... 1/7

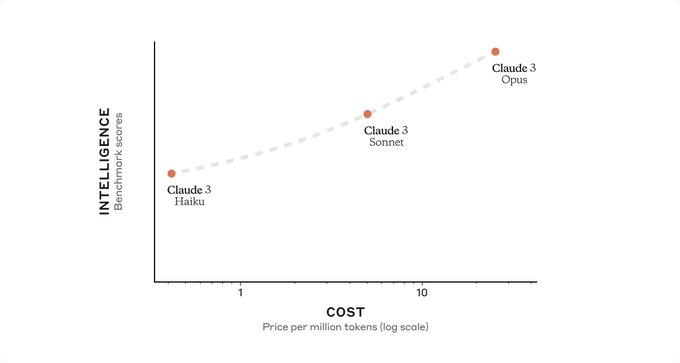

How do language MoEs scale?

New work introduces scaling laws describing MoE-like models, quantifying the benefits of these techniques and discussing their implications:

Work by @_aidan_clark_, @diegolascasas, @liaguy77, @arthurmensch and others!

10

32

164

4

22

136

As an AI researcher with a degree in classical languages I feel relatively qualified to comment on Ithaca.

Firstly, this is such cool work: huge congrats to @iassael + the team (a Nature cover no less!). So excited to see what comes of increasing classics + ML overlap.

1/8 🧵

Featured on the cover of Nature: our work on restoring, locating and dating ancient texts using deep neural networks! Big thanks to @TSommerschield @BrendanShilling @itpavlopoulos @ionandrou @jonprag @NandoDF and all of our colleagues and collaborators.

3

95

417

2

14

94

There is absolutely no way that 500M unique humans, let alone 1/100th of that number, have downloaded GPT-2. I bet that less than half of the OAI technical staff has.

So it’s all bots… but what I don’t understand is why so many downloads from said bots?

Over 1 BILLION LLM downloads from @huggingface in the last 2 years. (guess which LLM has 500M downloads alone)

Nearly 2 million downloads daily 🌞

Kudos to @Thom_Wolf @ClementDelangue + team

More insights soon w/ @WilliamGao1729!

9

34

206

12

0

77

This is a bad take.

LLMs are definitely architecturally capable of abductive reasoning. Whether they learn to do so is a different question.

Also, an LLM can create a new truth. Whether it can create a new truth that isn’t an interpolation of known truths is less clear.

6

2

63

I try not to comment on OAI drama (hard to not be labelled a shill) but I have a strong +1 to @jachiam0. Request #4 betrays a deeply naive worldview, and perpetuates my prior that safety people are generally the highest probability leak vector.

1

3

57

@davidmbudden

Proof of membership is agreeing it still makes sense to set aside money for kids’ college funds.

4

0

49

100%: that’s why I’m so grateful for my Time Machine that lets me correct my own actions. Without it I’d never do anything right.

3

0

47

@aidangomezzz

would that my response to a sudden drop in loss were so reverent, not "ah fuck what did I screw up"

3

0

46

Unbelievably proud to work with @barret_zoph: his great leadership was truly on display the last few days

Big shoutout also to the camaraderie created discovering Barret’s home speaker system refused to do anything but play @icespicee_ on repeat

1

0

44

I *remain* extremely proud that USLRLM got accepted to ICML with a long presentation!

Reviewer 2 always hated DVD-GAN, so this becomes my first 1st author paper to be published at a conference! This was a great project, and I'm excited to present it to more people in Baltimore!

5

2

41

If you're groggily waking up at #ICML2022 and trying to figure out what to go see after the invited talk, check out the Deep Learning session, where we'll be presenting Unified Scaling Laws for Routed Language Models at 11!

2

4

37

@drjwrae

Right? It's one thing to point out the (obvious) limitations with current approaches -- it's another to claim the field is hitting dead ends in the face of such progress.

0

0

30

Hard not to write off everyone saying “Google *wants* Gemini to return pictures of black confederate soldiers” as accounts not to take seriously.

2

1

29

@hiddenchoir

About half of the people I work with (myself included) don’t have any postgraduate degrees. It requires luck and skill but it’s doable — don’t over index on the PhD!

2

0

28

lots of great infra at goog but TF ain’t it

@_timharley once said “TF was a great solution to a problem that turned out not to matter”

I think that’s too kind. Lots of benefits to a graph->compile framework, but none which ever really matured in time

3

1

26

Playing with an LLM (Gopher in my case) made me believe real AI might be closer than I'd thought.

#dalle has similarly floored me. To the extent that art is about stimulating emotion in the human viewer, I have been convinced AI is capable of its production.

1

2

26

Very happy to see that we’re taking this issue seriously. This bubble of AI people on Twitter might have good priors on what is and isn’t AI but the wider world doesn’t. Elections are too infrequent to deal with the problems after the fact!

0

0

22

It's been such a privilege to watch this work develop and I'm so excited Gopher is now shared with the rest of the world!

Casually interacting with a large LM really changed my view of the near-term capabilities of AI, and I'd encourage everyone to play with one themselves.

1

2

20

A wild take — ambitious researchers want to work at the cutting edge. There are lots of edges — many in academia! — but many in industry too.

@sherjilozair

corporate AI labs have become more secretive and proprietary - academia doesn't have compute at the moment, but they have advantage of open science - no truly ambitious researcher wants to work for a product manager under an NDA

1

0

10

2

0

18

+1000 to this great thread. To add one: my biggest misconception (that I still need to get rid of) is the importance of theoretical novelty.

The most important thing is solving a problem no one else can solve. How you do it (and the novelty needed) isn't important.

2

0

18

@jxmnop

LLM intelligence doesn't map cleanly to human intelligence: GPT-4 is way smarter than any human in many ways and way dumber in lots of others. It's unclear, for the types of highly-agentic-ASI-y actions we imagine, how much solving the still-dumber-than-us part matters.

2

0

17

Generally have crazy faith in JAX/the JAX team to do the right thing so baseline excited but I get a little worried whenever someone sells automatically deriving a distributed program from annotations because there are a ton of frameworks that do that and most are terrible.

2

1

17

@tomgoldsteincs

A charitable (but valid) take I’ve heard is:

TF solved the problem of fully general cross-device differentiable programs better than anything else. It just turns out this problem isn’t so important.

0

0

16

Very cool news and excited for the future of all my friends/ex-coworkers! ... but boy is this naming scheme hilarious. Pity I showed up too late for the OG Google DeepMind swag.

We’re proud to announce that DeepMind and the Brain team from @Google Research will become a new unit: 𝗚𝗼𝗼𝗴𝗹𝗲 𝗗𝗲𝗲𝗽𝗠𝗶𝗻𝗱.

Together, we'll accelerate progress towards a world where AI can help solve the biggest challenges facing humanity.

116

519

2K

2

0

16

@kohjingyu

Big problem in industry is that lots of good work doesn't really fit into the paper format, but the incentive structure doesn't really know how to reward alternative presentation strategies.

0

0

16

I'm not saying I have a real moral high-ground here but I stopped working on video generation when the implications of solving the problem scared me a bit and Google's first example is literally "this video, but everything is on fire"

1

1

16

@sherjilozair

I think this misses the entire point of the last 20 years of NN research! Details matter :) good luck training a Transformer with 2010-era DL knowledge!

0

0

16

There were a lot of people who very confidently tweeted that browsing was gone forever and I hope each and every one of their followers learns to trust them a bit less.

Huge props to the browsing folks for producing an amazing thing!

0

1

16

@aidan_mclau

Many🚩

> KANs are 10x slower than MLPs

10x is nothing! You can learn to solve nontrivial tasks in a day & waiting 10 days for a paper is easy. Begs the Q: why aren't there higher dim results?

MLPs are magic not because they work well in R^10 but because they work well in R^(10^10)

1

4

15

And now we’re about to present our poster! Stand 304, right by the entrance! Can’t miss us, any and all questions welcome :)

If you're groggily waking up at #ICML2022 and trying to figure out what to go see after the invited talk, check out the Deep Learning session, where we'll be presenting Unified Scaling Laws for Routed Language Models at 11!

2

4

37

0

0

15