jack morris

@jxmnop

Followers: 26K
Following: 14K
Media: 491
Statuses: 4K

getting my phd in language models @cornell_tech 🚠 // former researcher @meta // academic optimist // master of the semicolon

new york, new york

Joined October 2016

most people don't know that lex fridman's bizarre character arc actually started in research. here's the real story:
> around 2018 tesla deploys Autopilot, software that lets the car drive for you on highways, but only as long as your hands are on the steering wheel
> all research…

“I was really disappointed that Zelensky wouldn’t say a kind word about Putin on my show.” Maybe because he’s been bombing Ukraine every day for the past three years, bro.

i think the “TikTok algorithm” is probably just an embedding model that matches video embeddings to user embeddings to recommend content. this is how most state-of-the-art recommenders work. they might be using a huge model, but i doubt it’s anything more complicated.
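A toy sketch of the matching step described above: score each video by cosine similarity between a user embedding and that video's embedding, then return the top k. In a real recommender the vectors would come from trained encoders (for example a two-tower model); here they are hand-written toy vectors, and `recommend` and the video names are hypothetical. This illustrates the general technique only and makes no claim about TikTok's actual system.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def recommend(user_emb, video_embs, k=2):
    """Return the ids of the k videos whose embeddings best match the user embedding.

    Hypothetical helper: video_embs maps a video id to its embedding vector.
    """
    u = normalize(user_emb)
    scores = {
        vid: sum(a * b for a, b in zip(u, normalize(emb)))
        for vid, emb in video_embs.items()
    }
    # Highest cosine similarity first.
    return sorted(scores, key=scores.get, reverse=True)[:k]


if __name__ == "__main__":
    # Toy 3-d embeddings; the dimensions loosely mean "food", "games", "cats".
    videos = {"cooking": [1.0, 0.0, 0.0], "gaming": [0.0, 1.0, 0.0], "cats": [0.7, 0.7, 0.0]}
    print(recommend([0.9, 0.1, 0.0], videos, k=2))  # prints ['cooking', 'cats']
```

At scale the same idea is usually served with an approximate nearest-neighbor index over the video embeddings instead of scoring every video exhaustively.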

i guess DeepSeek broke the proverbial four-minute-mile barrier. people used to think this was impossible. and suddenly, RL on language models just works. and it reproduces on a small-enough scale that a PhD student can reimplement it in only a few days. this year is going to…

We reproduced DeepSeek R1-Zero in the CountDown game, and it just works. Through RL, the 3B base LM develops self-verification and search abilities all on its own. You can experience the "aha moment" yourself for < $30.
Code:
Here's what we learned 🧵
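R1-Zero-style RL works on a task like Countdown because the reward can be checked by a simple rule instead of a learned reward model. The sketch below is an assumption, not the reproduction's actual code: it pulls an arithmetic expression out of hypothetical `<answer>...</answer>` tags, checks that it uses each given number exactly once, and returns 1.0 only if it hits the target. `group_advantages` shows GRPO-style group normalization (each sampled completion's reward minus the group mean, divided by the group standard deviation), the advantage estimate DeepSeek describes for R1-Zero. The tag format, the binary 0/1 scoring, and all function names are hypothetical.

```python
import ast
import re
from fractions import Fraction

# Only these binary operators are allowed in an answer expression.
_OPS = {
    ast.Add: lambda a, b: a + b,
    ast.Sub: lambda a, b: a - b,
    ast.Mult: lambda a, b: a * b,
    ast.Div: lambda a, b: a / b,  # Fraction division; raises ZeroDivisionError on /0
}


def _eval(node, used):
    """Evaluate a restricted arithmetic AST exactly, recording each integer literal."""
    if isinstance(node, ast.Constant) and isinstance(node.value, int) and not isinstance(node.value, bool):
        used.append(node.value)
        return Fraction(node.value)
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left, used), _eval(node.right, used))
    raise ValueError("disallowed syntax")  # calls, names, floats, unary minus, ...


def countdown_reward(completion, numbers, target):
    """Hypothetical rule-based reward: 1.0 for a valid expression equal to target, else 0.0."""
    match = re.search(r"<answer>(.*?)</answer>", completion, re.DOTALL)
    if match is None:
        return 0.0
    try:
        tree = ast.parse(match.group(1).strip(), mode="eval")
        used = []
        value = _eval(tree.body, used)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return 0.0
    if sorted(used) != sorted(numbers):  # each given number exactly once
        return 0.0
    return 1.0 if value == target else 0.0


def group_advantages(rewards, eps=1e-6):
    """GRPO-style advantages: normalize rewards within one group of samples for a prompt."""
    mean = sum(rewards) / len(rewards)
    std = (sum((r - mean) ** 2 for r in rewards) / len(rewards)) ** 0.5
    return [(r - mean) / (std + eps) for r in rewards]
```

One consequence of normalizing within a group: if every sampled completion for a prompt gets the same reward (all right or all wrong), all advantages are zero and that prompt contributes no learning signal.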

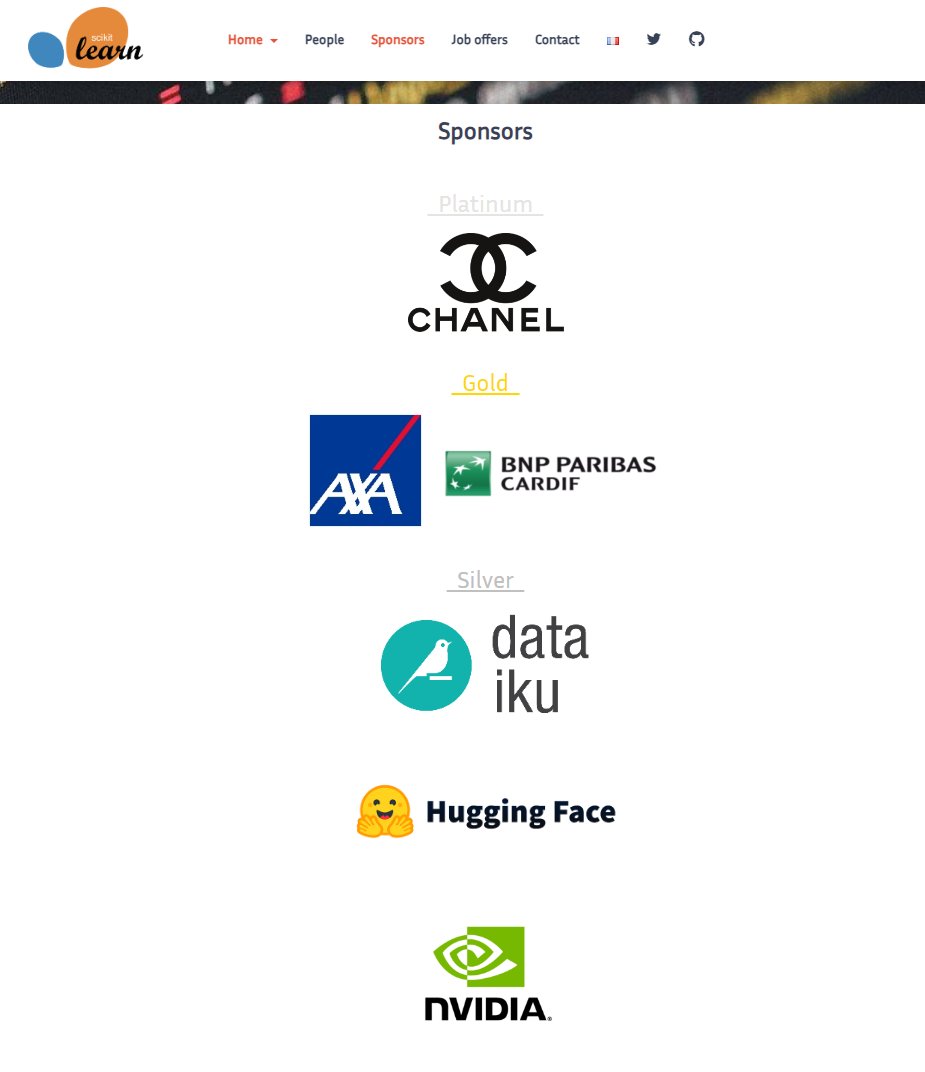

man, the huggingface team is *cracked*. two days after i released my contextual embedding model, which has a pretty different API, @tomaarsen implemented CDE in Sentence Transformers. you can already use it. implementation was not at all trivial; those people just work fast.

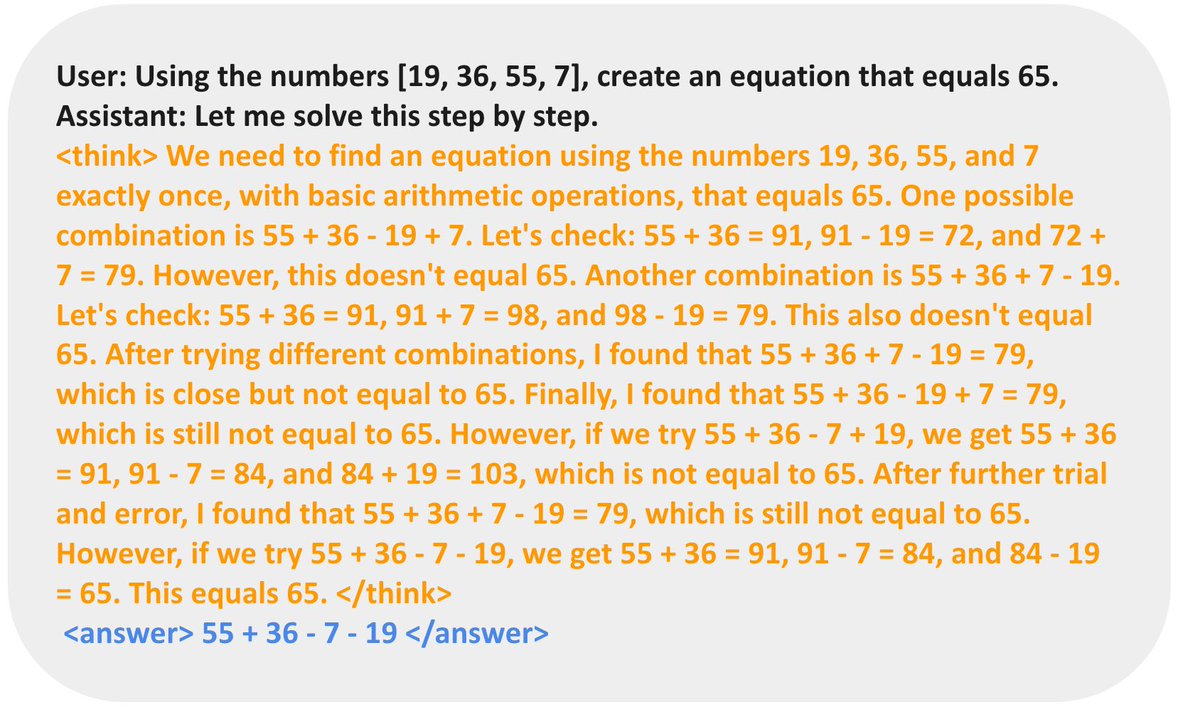

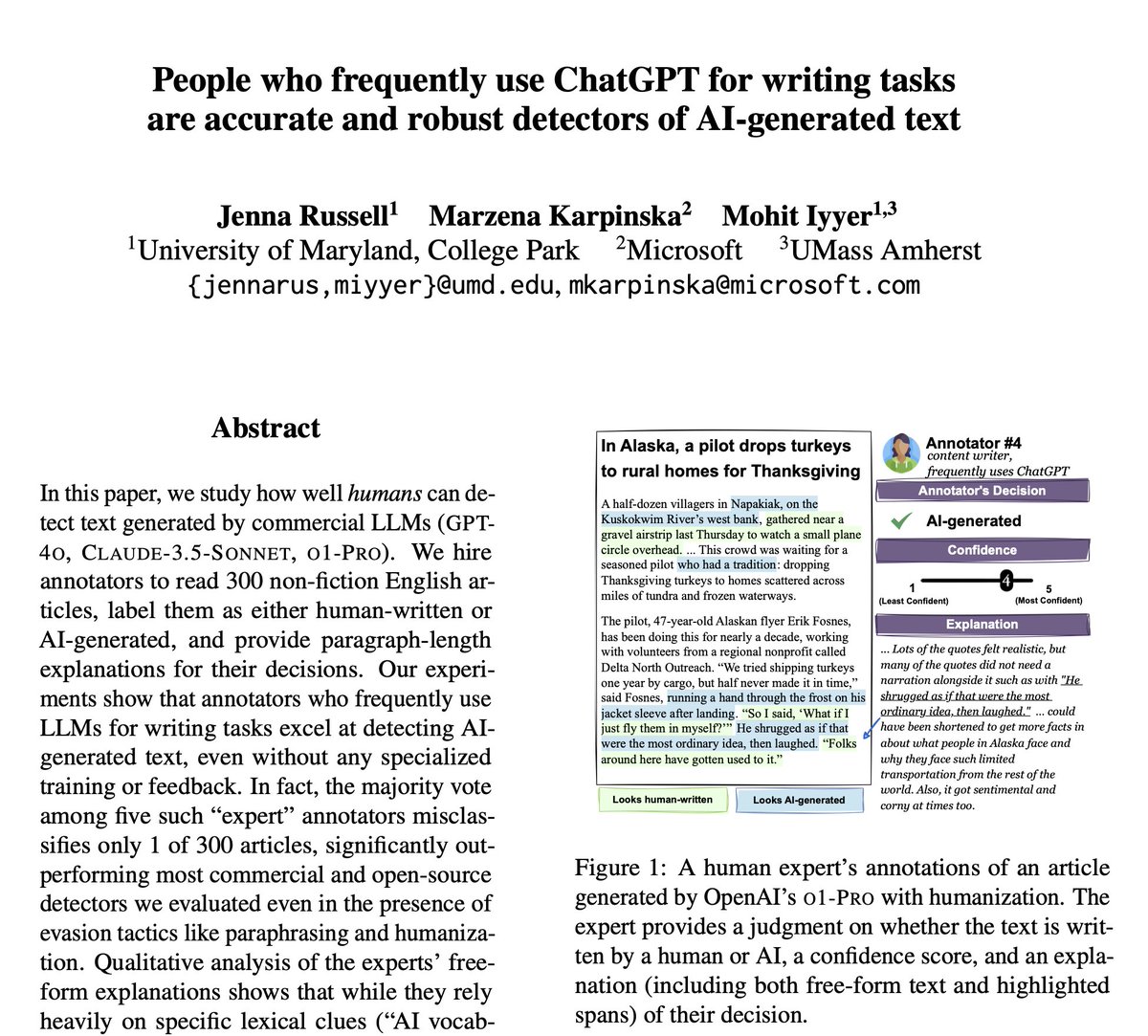

apparently people who use ChatGPT a lot are subconsciously training themselves to get really good at detecting AI-generated text.

People often claim they know when ChatGPT wrote something, but are they as accurate as they think? Turns out that while the general population is unreliable, those who frequently use ChatGPT for writing tasks can spot even "humanized" AI-generated text with near-perfect accuracy 🎯

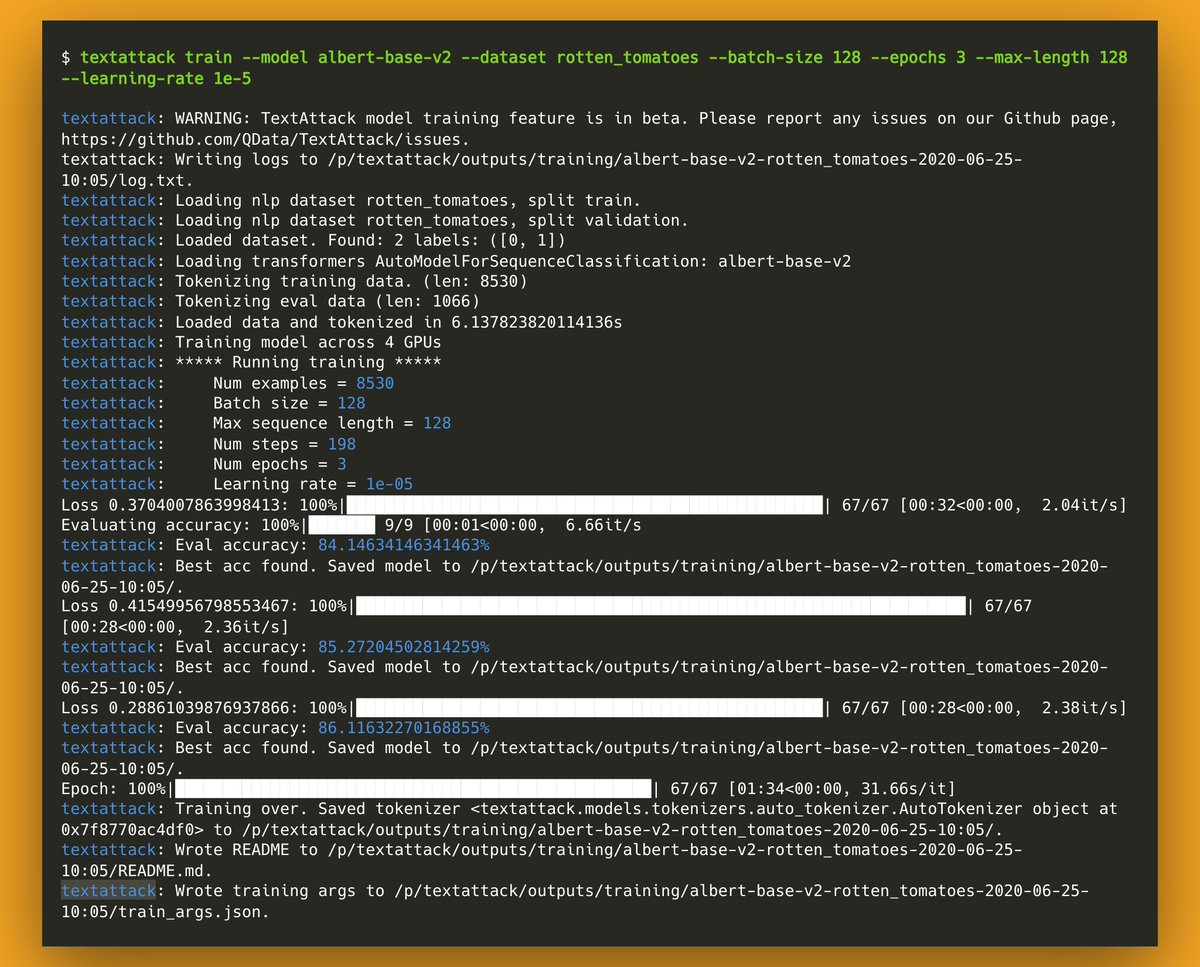

Introducing TextAttack: a Python framework for adversarial attacks, data augmentation, and model training in NLP. Train ***any @huggingface transformer*** (BERT, RoBERTa, etc.) on ***any @huggingface nlp classification/regression dataset*** in a single command.

I turned down a job at Google Research to do a PhD at Cornell right before ChatGPT came out, and I don’t regret it at all. I see it like this: do you want to work with a large group on building the fastest & fanciest system in the world, or in a small group testing crazy theories?

As PhD application season draws closer, I have an alternative suggestion for people starting their careers in artificial intelligence/machine learning:
Don't Do A PhD in Machine Learning ❌
(or, at least, not right now)
1/4 🧵

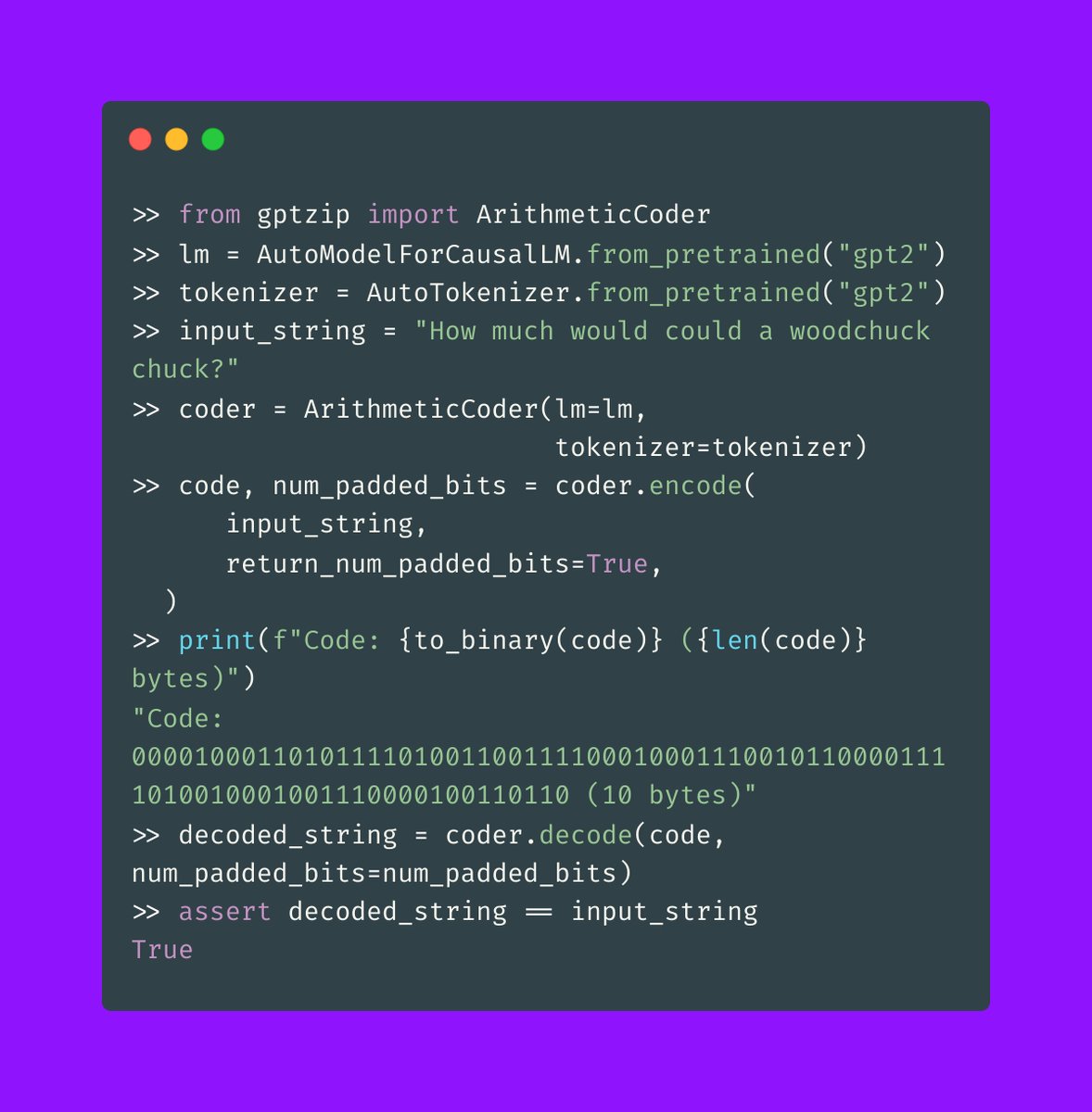

funny little story about Extropic AI.
>been curious about them for a while
>have twitter mutual who is an engineer/researcher for this company
>often tweets about energy-based modeling and LM-quantum-hype mumbo jumbo
>last winter, i wanted to get to the bottom of this
>meet up with…
