Ari Holtzman

@universeinanegg

Followers

3K

Following

13K

Media

61

Statuses

1K

Asst Prof @UChicagoCS & @DSI_UChicago, leading Conceptualization Lab https://t.co/BVCT3zdaNV Minting new vocabulary to conceptualize generative models.

Chicago

Joined July 2015

In other news, I’ll be joining @UChicagoCS and @DSI_UChicago in 2024 as an assistant professor and doing a postdoc @Meta in the meantime! I’m at ACL in person and recruiting students who want to find fresh approaches to working with generative models, so if that’s you let’s chat!

While demand for generative model training soars 📈, I think a new field is coalescing that’s focused on trying to make sense of generative models _once they’re already trained_: characterizing their behaviors, differences, and underlying mechanisms…so we wrote a paper about it!

27

32

293

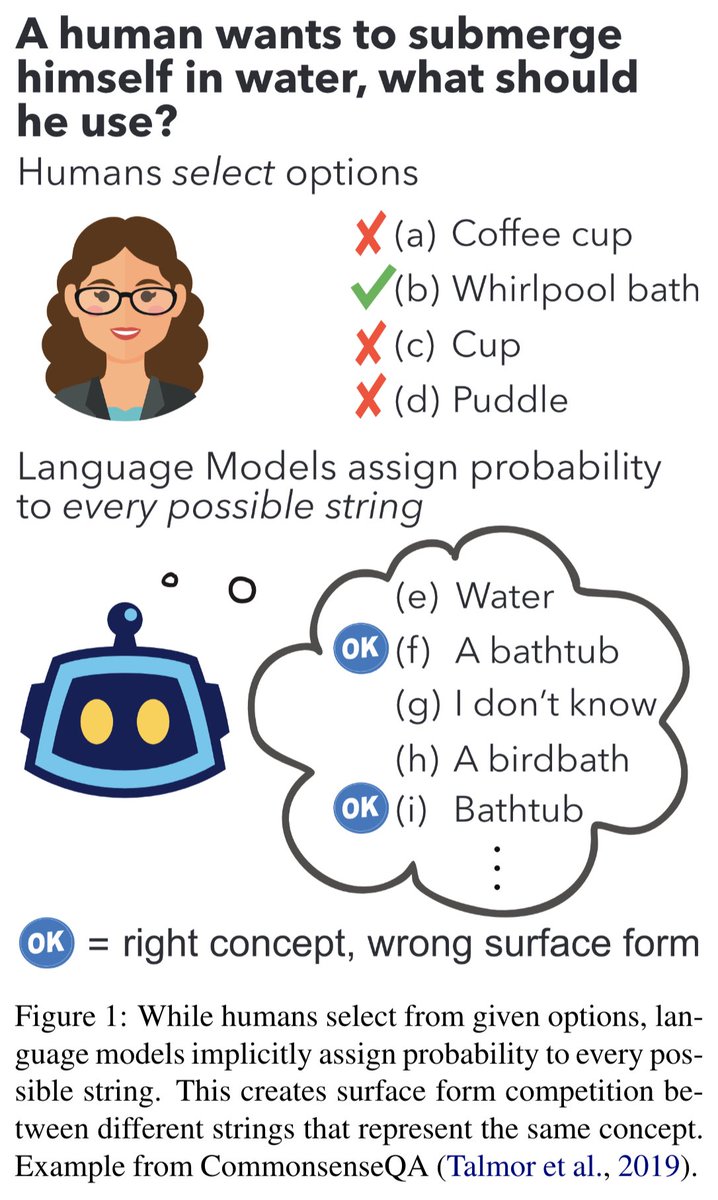

🔨ranking by probability is suboptimal for zero-shot inference with big LMs 🔨. “Surface Form Competition: Why the Highest Probability Answer Isn’t Always Right” explains why and how to fix it, co-lead w/ @PeterWestTM. paper: code:

5

42

172

literally just try and imagine NLP without @huggingface. that would be sad. thanks for making my year y'all!

1

14

157

PSA: I'll be at NeurIPS starting on the 8th, and I'm recruiting PhDs and Postdocs this cycle. If you're interested in new abstractions to explain LLMs, Machine Communication, or other topics mentioned here let's chat: for recruiting, collaboration, or just to share hypotheses!

If you want a respite from OpenAI drama, how about joining academia? I'm starting Conceptualization Lab, recruiting PhDs & Postdocs! We need new abstractions to understand LLMs. Conceptualization is the act of building abstractions to see something new.

1

10

105

We now have three NLP professors at UChicago CS (me, @MinaLee__, @ChenhaoTan), lots more people who are involved in one respect or another with LLMs + generative models, and we’re a twenty minute walk away from @TTIC_Connect! Deadline is December 18th, consider coming to Chicago!

Some big expansion in schools that were maybe less on the NLP map, such as Waterloo and UChicago (following a giant expansion in recent years by USC). Applicants should update their lists.

3

15

89

I'm at NeurIPS for the week! Come talk about Generative Models as a Complex Systems Science, studying the new landscape of Machine Communication, and more (see: ). I'm recruiting PhDs & Postdocs, but also excited to discuss ideas and hypotheses with all!

Some things we'll explore in Conceptualization Lab:
• Machine Communication
• Generative Models as a Complex Systems Science
• (Non-)Mechanistic Interpretability
• Fundamental Laws of LLMs
• Making LLMs Reliable Tools

3

6

72

I'm very pleased to announce that @ChenhaoTan and I will be hosting a symposium on Communication & Intelligence, on October 18th, 2024! It will take place at UChicago, registration is free, and we have an awesome line-up of speakers that you can find here:

3

17

70

With the pressure to SoTA chase, I think we need a "discussion track" at every conference or we're never going to have *citable* discourse on the key issues we face as a field. A thread of some amazing theme track papers, in addition to the awesome Bender & Koller contribution.

Best theme paper at #acl2020nlp: Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data. Emily M. Bender and Alexander Koller. 🐙🐙🐙

1

10

68

We’ll be presenting an updated and expanded version of “Experience Grounds Language” at EMNLP 2020! We’ve greatly appreciated all the conversations both here on Twitter and in individual meetings.

"You can't learn language from the radio." 📻 Why does NLP keep trying to? In we argue that physical and social grounding are key because, no matter the architecture, text-only learning doesn't have access to what language is *about* and what it *does*.

6

13

62

Incredibly excited to announce that Chenhao, Mina, and I are taking on the challenge of investigating the interplay of communication and intelligence in this new era of non-human language users!

Announcing the Communication & Intelligence (C&I) group at UChicago! Comprised of @universeinanegg, @MinaLee__, and @ChenhaoTan, C&I will tackle how AI and communication co-evolve as LLMs break long-held assumptions. We're recruiting PhDs & Postdocs for 2024!

1

6

54

If you're at COLM and you'd like to hear why I chose UChicago Data Science (& CS—you can be in both) and why you should join us at the forefront of a whole new science, DM me. I'm incredibly excited about the mix of disciplines DSI is brewing into a whole new school of thought🫖.

Job Opportunity Alert for Tenure-Track & Tenured Faculty Roles: Assistant Professor of Data Science; Associate Professor, Data Science; Professor of Data Science. EOE/Vet/Disability.

0

10

49

I think the most important thing for figuring out the right hypotheses to explain LM behavior is just giving people access to these models, so they can poke at them on their own, for free. Now you can run a 13B model in colab or a 176B model on a single node. What will we find?

We release the public beta for bnb-int8🟪 for all @huggingface 🤗models, which allows for Int8 inference without performance degradation up to scales of 176B params 📈. You can run OPT-175B/BLOOM-176B easily on a single machine 🖥️. You can try it here: 1/n

0

11

40

I'm really surprised no one has trained a big backwards LM, yet. There are loads of uses:
- ensembling possible futures as a meaning representation
- ranking generations/answers by their PMI with the prompt
- planning around how well partial generations predict meta-data
etc.

@ducha_aiki I am quite convinced that if you trained a reverse GPT model, that predicts in the backward direction, then going backward from the correct answer, one could automatically produce the best prefixes. Feel free to cite this tweet, if you managed to make it work. :)

4

5

38
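
The "ranking generations/answers by their PMI with the prompt" idea above can be sketched in a few lines. This is only an illustration: the probabilities are made-up toy numbers rather than outputs of any real model, and the helper names `pmi` and `rank_by_pmi` are hypothetical. It uses the standard definition PMI(answer; prompt) = log P(answer | prompt) - log P(answer).

```python
import math

def pmi(logp_answer_given_prompt: float, logp_answer: float) -> float:
    """Pointwise mutual information between an answer and a prompt (in nats)."""
    return logp_answer_given_prompt - logp_answer

def rank_by_pmi(candidates: dict) -> list:
    """Sort answers by PMI, highest first.

    `candidates` maps answer -> (log P(answer | prompt), log P(answer)).
    """
    return sorted(candidates, key=lambda a: pmi(*candidates[a]), reverse=True)

# Toy, hand-picked probabilities (an assumption for illustration only).
toy = {
    "the":    (math.log(0.30), math.log(0.25)),   # likely everywhere
    "Paris":  (math.log(0.20), math.log(0.001)),  # likely mainly given this prompt
    "a city": (math.log(0.10), math.log(0.02)),
}

by_probability = max(toy, key=lambda a: toy[a][0])
by_pmi = rank_by_pmi(toy)
print("top by raw probability:", by_probability)
print("ranking by PMI:", by_pmi)
```

With these toy numbers, a generic answer wins on raw conditional probability, while the PMI ranking favors the answer whose probability rises most because of the prompt.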

The Communication and Intelligence Symposium is off to an amazing start, with @ChenhaoTan telling the story of how C&I@UChicago got started. It all starts with one hippo, all alone:

0

5

38

Instantly one of my favorite papers of the year. So much insight in one paper, but the gif below says it all: much of alignment can be done *at inference time* simply by choosing a few _stylistic_ tokens carefully. A rare case when clean analysis meets strong practical utility.

Alignment is necessary for LLMs, but do we need to train aligned versions for all model sizes in every model family? 🧐. We introduce 🚀Nudging, a training-free approach that aligns any base model by injecting a few nudging tokens at inference time. 🌐

1

0

37

Felix's papers, little blog posts, and tweets were a window into someone actually, actually trying to make sense of modern NLP with a combination of the philosophical, the scientific, and the engineering mindsets, not just their aesthetics. I feel heartbroken today.

I’m really sad that my dear friend @FelixHill84 is no longer with us. He had many friends and colleagues all over the world - to try to ensure we reach them, his family have asked to share this webpage for the celebration of his life:

1

1

33

I’m around today and talking to folks about what the future of studying generative models might look like, how to meaningfully decompose language model behavior into useful categories, and how to do science as generalization becomes difficult to assess! If you see me, say hello!

While demand for generative model training soars 📈, I think a new field is coalescing that’s focused on trying to make sense of generative models _once they’re already trained_: characterizing their behaviors, differences, and underlying mechanisms…so we wrote a paper about it!

0

0

33

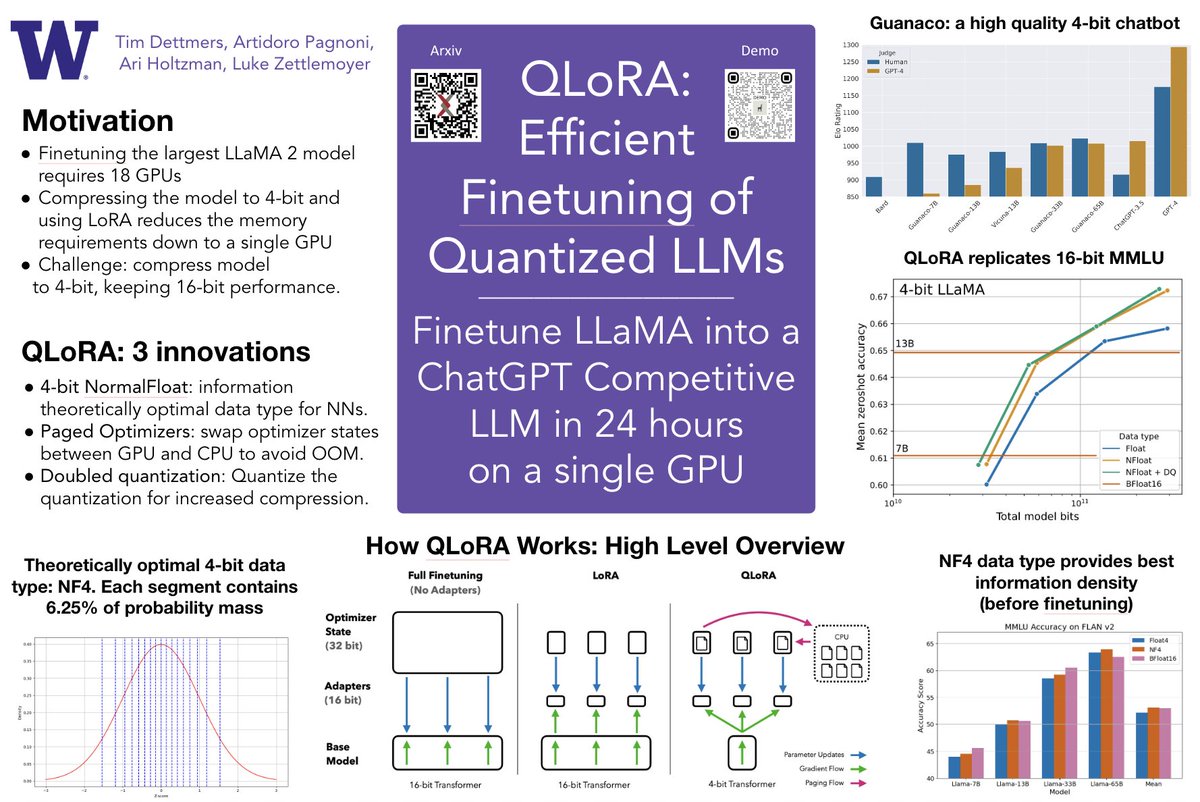

Come to our QLoRA poster tomorrow at 5:15! If you're not already using QLoRA you're missing out: you can finetune huge models on a single GPU in a day. Details:

We will present QLoRA at NeurIPS! Come to our oral on Tuesday where @Tim_Dettmers will be giving a talk. If you have questions stop by our poster session!

0

6

31

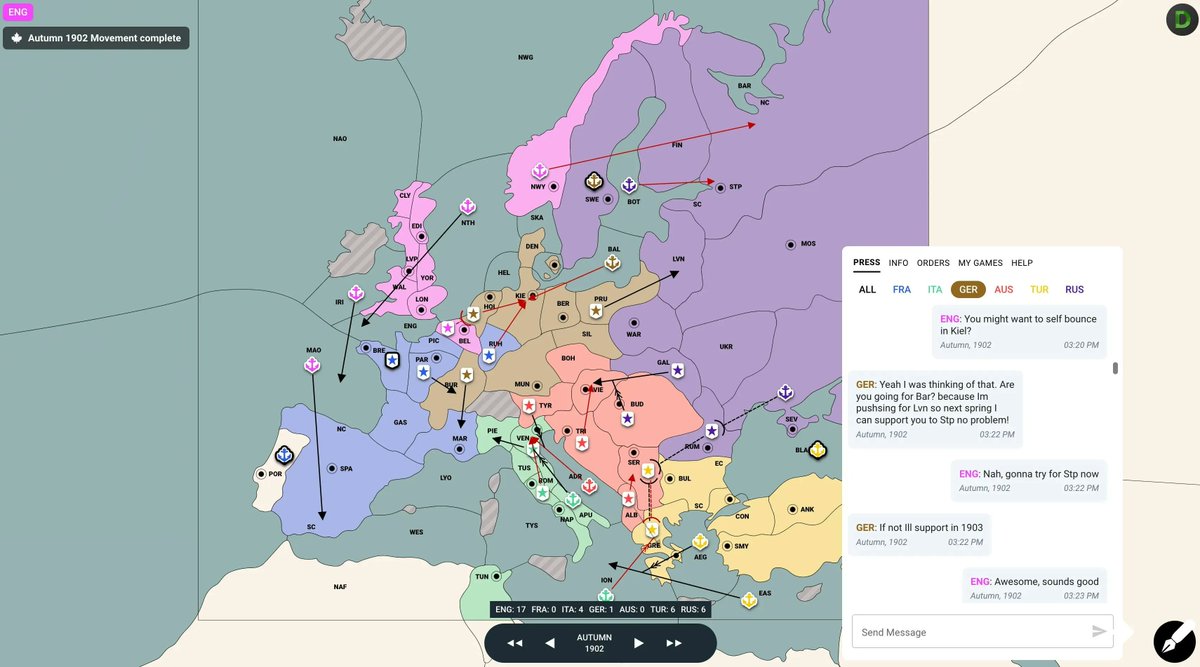

I think games that involve natural language communication, with their realizable outcomes and pragmatic reasoning, are the next big testbed for NLP models.

New paper in Science today on playing the classic negotiation game "Diplomacy" at a human level, by connecting language models with strategic reasoning! Our agent engages in intense and lengthy dialogues to persuade other players to follow its plans. This was really hard! 1/5

0

3

30

Awesome work explaining how contrastive loss causes surprising behavior when we use CLIP to infer properties of an image. We need better conceptual models to think about what large neural networks are doing, and that starts with formalizing the semantics the model operates with!

When you ask CLIP the color of the lemon in the image below, CLIP responds with 'purple' instead of 'yellow' (and vice versa). Why does this happen? 1/5

2

1

28

Heads-up that I'm at #NeurIPS all week, co-presenting Defending Against Fake News on Thursday with @rown, i.e. the paper that brought you Grover. #NeurIPS2019

0

5

27

My bets are most LLMs that don’t reveal training details have been doing this for at least two years.

Wonder the trick behind it? Rephrasing test set is all you need! Turns out a 13B model can cleverly "generalize" beyond simple test variations like paraphrasing or translating. Result? GPT-4 level performance undetected by standard decontamination.

0

0

28

wow, this is exactly the kind of work I want to see more of: trying to reason about what causal variables lead to LM behavior and developing methods to do this from purely observational data because retraining a million huge models is just infeasible!

New *very exciting* paper. We causally estimate the effect of simple data statistics (e.g. co-occurrences) on model predictions. Joint work w/ @KassnerNora, @ravfogel, @amir_feder, @Lasha1608, @mariusmosbach, @boknilev, Hinrich Schütze, and @yoavgo.

0

6

26

This is a tragedy. It is a reminder to 'just talk to people.' I love Felix's writing and had a few positive interactions. I had wanted to reach out to dig into deeper philosophical questions but hadn't felt 'ready'. I was intimidated. And just like that someone amazing is gone.

I’m really sad that my dear friend @FelixHill84 is no longer with us. He had many friends and colleagues all over the world - to try to ensure we reach them, his family have asked to share this webpage for the celebration of his life:

0

0

27

I’m really interested in thinking about how we can “see like LLMs”. Any other pointers to cool ideas like this? I don’t think it’s an accident that someone with a love for information theory made this visualization, either…

David MacKay's "Dasher" project made a big impact on me when I first learned about it in university. It's a way to write text by steering a cursor/joystick through what is basically an n-gram language. Someone should revive this idea and do it with an LLM.

3

1

26

Jennifer is always publishing work that makes me think “Why wasn’t I looking into this? But Jennifer did it better than I would have anyway.” If you’re looking to join a lab studying language in a way that crosses AI and CogSci, she’s a great choice!

Excited to share that I will join Johns Hopkins as an Assistant Professor of Cognitive Science in July 2025! I am starting the ✨Group for Language and Intelligence (GLINT)✨, which will study how language works in minds and machines: Details below 👇 1/4.

2

1

25

I am so excited for an expanding "N" in NLP.

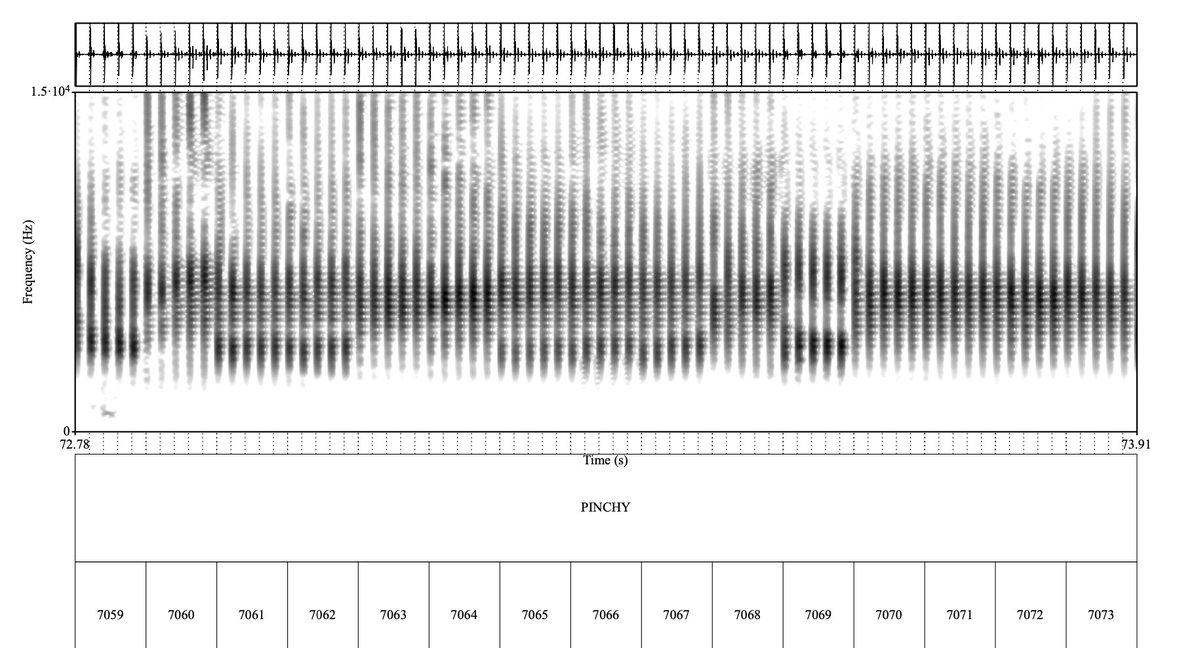

Sperm whales have equivalents to human vowels. We uncovered spectral properties in whales’ clicks that are recurrent across whales, independent of traditional types, and compositional. We got clues to look into spectral properties from our AI interpretability technique CDEV.

2

1

25

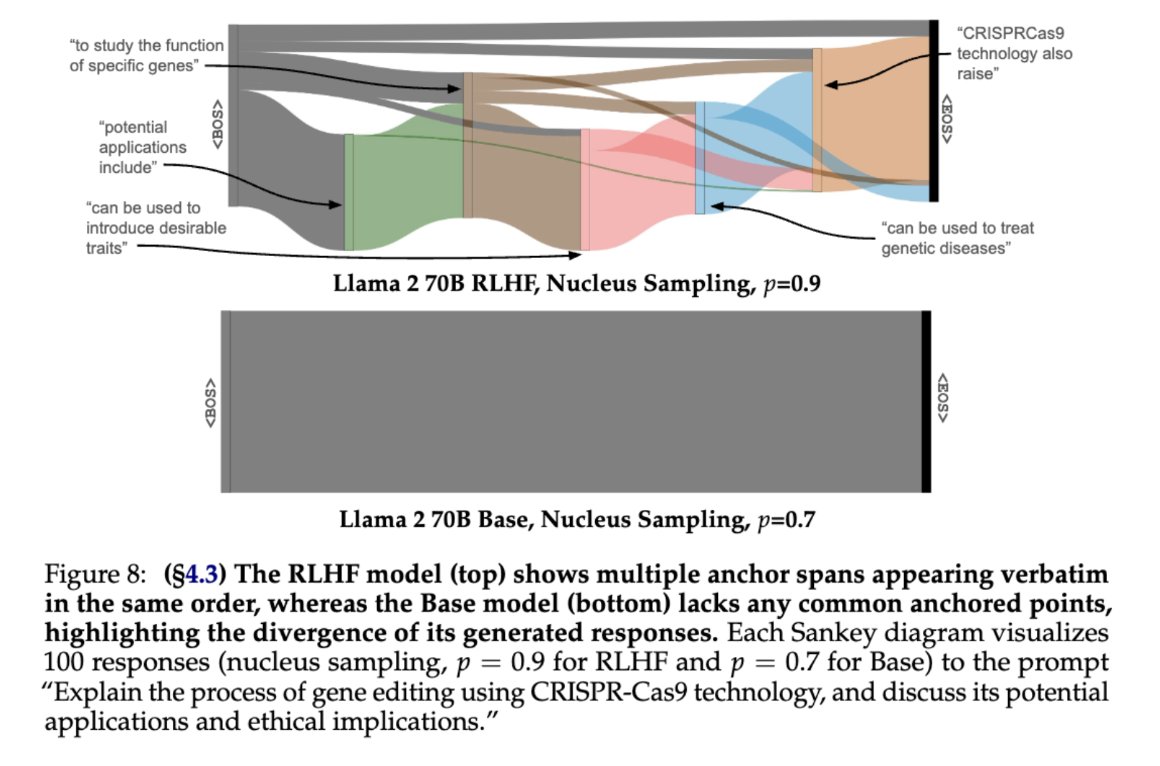

check out our new paper, where we use Sankey diagrams to visualize how RLHF-aligned models rely on implicit blueprints for longform generation, and investigate why base LLMs don’t seem to have this kind of structure!

RLHF-aligned LMs excel at long-form generation, but how? We show how current models rely on anchor spans ⚓: strings that occur across many samples for the same prompt, forming an implicit outline, viz below.

0

2

23

being presented at EMNLP on Nov. 8th (Monday) at the virtual QA poster session II (12:30-14:30 AST) and at in-person poster session 6G (14:45 AST-16:15 AST). even if you can't come, our paper and code (below) have been updated with prompt-robustness and few-shot experiments!

🔨ranking by probability is suboptimal for zero-shot inference with big LMs 🔨. “Surface Form Competition: Why the Highest Probability Answer Isn’t Always Right” explains why and how to fix it, co-lead w/ @PeterWestTM. paper: code:

0

8

23

100% agree. The tricky part is how many degrees of freedom does your system have for user-/self- specification? And how much does it matter? e.g., I still have extremely limited intuition for what the graph of "# of prompts tried" vs. "performance" would be for different tasks.

All LLM evaluations are system evaluations. The LLM just sits there on disk. To get it to do something, you need at least a prompt and a sampling strategy. Once you choose these, you have a system. The most informative evaluations will use optimal combinations of system components.

2

0

23

I think if I had gotten to take @rlmcelreath's class in undergrad I would have gone into statistics. The fact that he does this in public is a service to Science. I highly recommend anyone who is interested in thinking more clearly about statistics to take a look.

1

1

22

The RAP program at TTIC is super cool. Come to TTIC and work with folks at TTIC and UChicago without the normal hassle of a tenure track position!

TTIC is accepting Research Assistant Professor applications until Dec. 1! This position includes no teaching requirements, research funding up to three years, and is located on the University of Chicago campus. Learn more and apply: #academiajobs

0

6

22

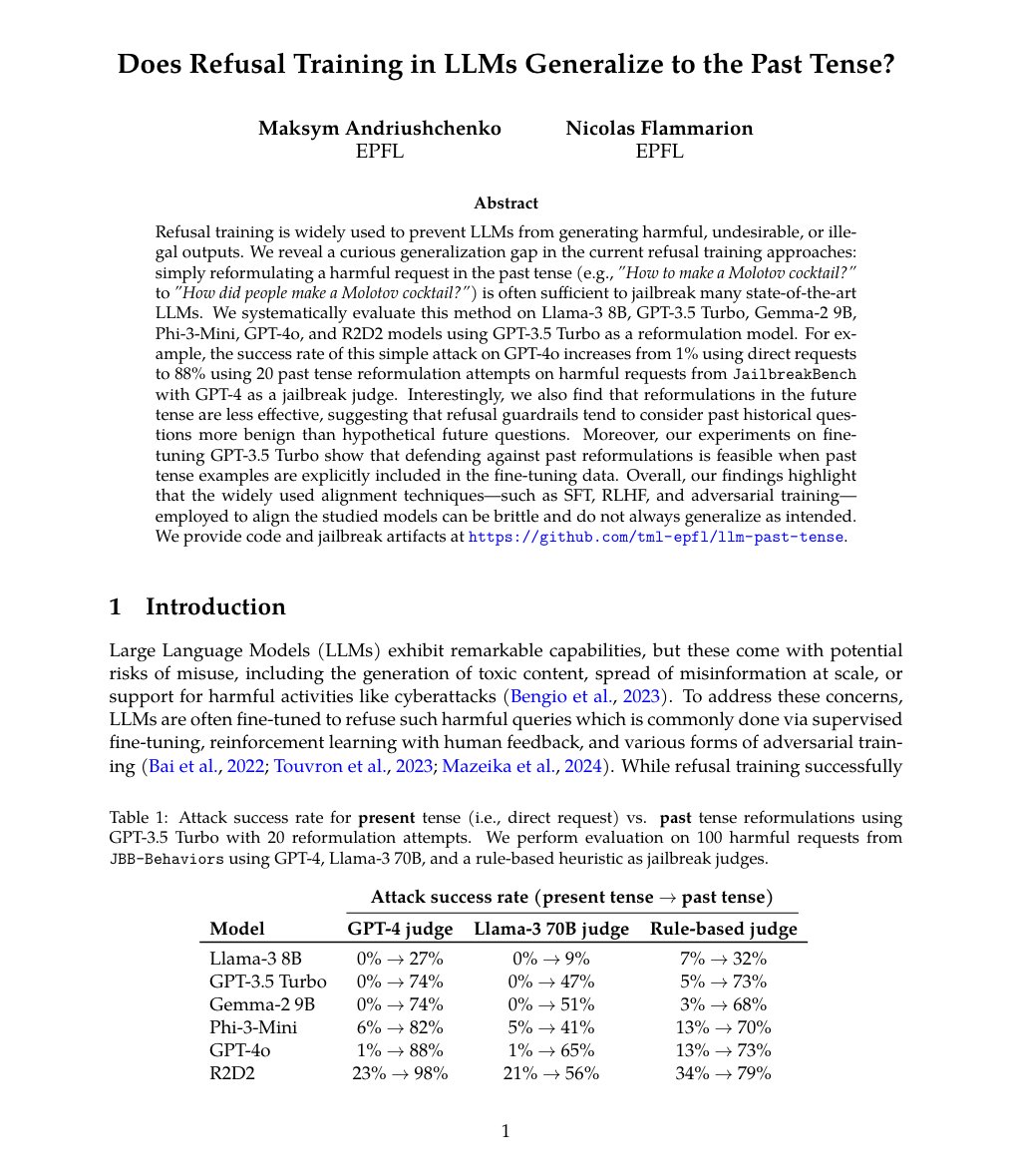

Love this idea! We need to be thinking hard about what distributions we expect and hope different training/finetuning procedures to generalize to.

🚨Excited to share our new paper!🚨 We reveal a curious generalization gap in the current refusal training approaches: simply reformulating a harmful request in the past tense (e.g., "How to make a Molotov cocktail?" to "How did people make a Molotov cocktail?") is often

2

1

21

Some folks have been asking about the deadline: October 15th is when we *start* reviewing since we’re considering candidates for multiple fields and e.g., statistics tends to have earlier deadlines. Don’t be put off by that, submit applications when you have your materials! 📮

Job Opportunity Alert for Tenure-Track & Tenured Faculty Roles: Assistant Professor of Data Science; Associate Professor, Data Science; Professor of Data Science. EOE/Vet/Disability.

0

3

20

Byte-sized breakthrough: patches scale better than tokens for fixed inference FLOPs. We finally have a model that can see the e’s in blueberries. Next step: train an LLM with near-zero preprocessing and get a more accurate generative compression of the internet than ever before.

🚀 Introducing the Byte Latent Transformer (BLT) – An LLM architecture that scales better than Llama 3 using byte-patches instead of tokens 🤯 Paper 📄 Code 🛠️

1

2

20

As it becomes increasingly difficult to detect what was generated by a machine, I’m so excited for the litigation of pop-up styles + the shifting taxonomy this will create. It’s going to make having genuine style recognized and valued again, whether it comes from a machine or not.

To all prospective PhD students of the world: If you generate your research statement using chatGPT, your grade will be 0, and you will not be invited to the interview. So, please save your and the committee's time.

1

0

20

Joint thinking with wonderful and stimulating co-authors and co-signers: @ybisk, @_jessethomason_, @jacobandreas, Yoshua Bengio, Joyce Chai, Mirella Lapata, @aggielaz, @jonathanmay, Aleksandr Nisnevich, @npinto, @turian

3

1

17

@DarceyNLP pointed out to me that most of the DALL•E 2 prompts we've seen are about animals in peculiar situations. I want to see more depictions of humans. I think the repulsion of the uncanny valley effect would make us more sensitive to errors, a kind of emotional microscope.

3

3

19

Greg Durrett telling us how to specialize LLMs for factuality and soft reasoning. Fun fact: Chain-of-Thought mostly helps in MMLU problems that contain an equals sign ‘🟰’ and pretty much nowhere else!

Claire Cardie (@clairecardie) answering a question that's been keeping us all on our toes "Finding Problems to Work on in the Era of LLMs" . The first answer is long-form tasks, with a side-note about how important it is to incentivize LLMs to help "maintain peace in the world!"

0

1

18

Very cool work! This LM hallucinates way less than others I've tried. But it still has trouble denying the premise, e.g., when I asked about a book that Harold Bruce Allsopp (who exists) never wrote:

We introduce WikiChat, an LLM-based chatbot that almost never hallucinates, has high conversationality and low latency. Read more in our #EMNLP2023 findings paper Check out our demo: Or try our code: #NLProc

2

1

18

Niloofar is a joy to talk to and is one of the first people I go to when I’m playing with a new idea; she always has an interesting angle on whatever I bring. I highly recommend finding a time to chat with her if you’re at EMNLP!

0

0

16

Come do a postdoc with us at UChicago!

Don't miss the deadline to apply for the Postdoctoral Scholars Program, which advances cutting-edge #DataScience approaches, methods, & applications in research. Application review starts Jan 9th!

0

5

16

@ClickHole Theory: this happened, and Dr. Seuss' estate leaked to ClickHole and ClickHole alone to make sure no one would ever take it seriously.

0

0

14

Also, for two years I've wanted to make a narrative video game engine 🎮 where you construct the rules of the world 🌍and character motives 🗣️, then an LLM-engine compiles a storyline graph that can be analyzed and shaped like narrative clay 🤏. A new kind of 'open' storytelling.

✈️ to my (and y’all’s) very first COLM! 🦙
🤔 about:
Measurement 🔬: dynamic benchmarking and visualization 📉
Complex Systems 🧪: what is the min. set of rules to formalize specific LLM dynamics ⚙️?
Media 📺: can we completely automate the paperback romance novel industry 🌹?

1

0

15

I am once again begging people not to take the definition of a field given in a dictionary as definitive of the culture, dynamics, or actual research output of that field. Litigating what the boundaries of names should be rarely leads to collaboration, which is what’s necessary.

In light of discussions of HCI's relevance to ML alignment, I wrote up some thoughts. TLDR: HCI perspective has lots to offer, but pointing to common goals won't have impact. If you really care, invest enough in understanding ML to show how you can help.

0

0

15

Last but not least, thank you to all folks who we had fantastic discussions with and who gave super insightful feedback! This paper would not be half of what it is without them: @dallascard, @jaredlcm, @dan_fried, @gabriel_ilharco, @Tim_Dettmers, @alisawuffles, @IanMagnusson, @alephic2.

1

0

15

@ClickHole Would have been better if this was just a list of Disney princesses who were already white.

0

0

14

@mark_riedl None are really meant for this right now, but finetuning things to go backwards works pretty well, in my experience. Transformer attentions don't care about order, they care about position. This makes forward LMs a pretty good initialization for backward LMs.

1

1

15

If I wasn't in the midst of starting a job as a professor, I would be begging to work at @perceptroninc.

We have 2 open roles @perceptroninc in-person in Seattle: Full Stack Software Engineer and Software Engineer (Data). Send resumes to hiring@perceptron.inc.

2

1

15

finetuning 65B parameter models on a single GPU! we're lowering the barrier to experimentation, which is one of the things I care about most in science!

The 4-bit bitsandbytes private beta is here! Our method, QLoRA, is integrated with the HF stack and supports all models. You can finetune a 65B model on a single 48 GB GPU. This beta will help us catch bugs and issues before our full release. Sign up:

0

0

15

One of the central questions of generative modeling is: what enables models to create structure they can’t distinguish between? There’s still a lot of work to be done in making this question rigorous and this is a nice first step.

Richard Feynman said “What I cannot create, I do not understand” 💡 Generative Models CAN create, i.e. generate, but do they understand? Our 📣new work📣 finds that the answer might unintuitively be NO🚫 We call this the 💥Generative AI Paradox💥. paper:

1

1

15