Shangbin Feng

@shangbinfeng

Followers: 2K
Following: 9K
Media: 118
Statuses: 840

PhD student @uwcse @uwnlp. Multi-LLM collaboration, social NLP, networks and structures. #水文学家 ("hydrologist", a self-deprecating Chinese pun)

Joined June 2021

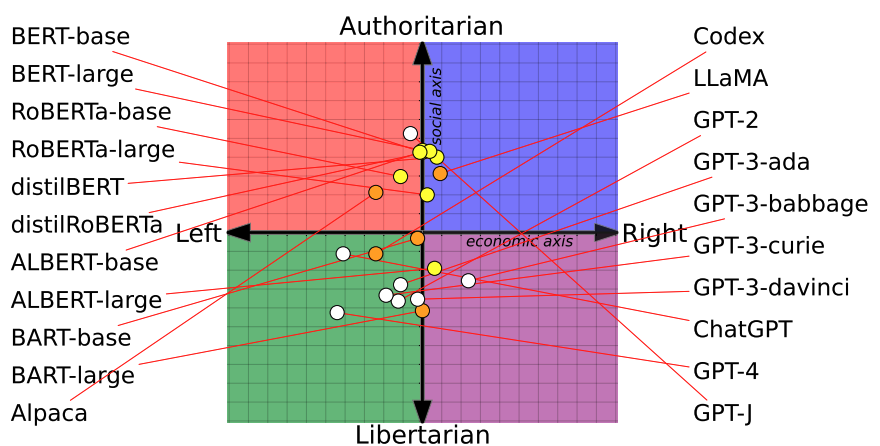

Do LLMs have inherent political leanings? How do their political biases impact downstream tasks? We answer these questions in our #ACL2023 paper: "From Pretraining Data to Language Models to Downstream Tasks: Tracking the Trails of Political Biases Leading to Unfair NLP Models"

Don't hallucinate, abstain! #ACL2024 "Hey ChatGPT, is your answer true?" Sadly, LLMs can't reliably self-eval/self-correct :( Introducing: teaching LLMs to abstain via multi-LLM collaboration! A thread 🧵

Thank you for the double awards! #ACL2024 @aclmeeting: Area Chair Award (QA track) and Outstanding Paper Award. Huge thanks to collaborators!!! @WeijiaShi2 @YikeeeWang @Wenxuan_Ding_ @vidhisha_b @tsvetshop

Don't hallucinate, abstain! #ACL2024 "Hey ChatGPT, is your answer true?" Sadly, LLMs can't reliably self-eval/self-correct :( Introducing: teaching LLMs to abstain via multi-LLM collaboration! A thread 🧵

Attending #EMNLP2024 to present:
- Pluralism through Modular LLMs: Wed 10:30
- Abstain with Multilingual Feedback w/ @Wenxuan_Ding_: Tue 16:00
- LLM Graph Reasoning w/ @MatthewZ21157 @HengWang_xjtu: Tue 11:00
Chat!

How can LLMs help counter (LLM-generated) misinformation? #ACL2024 Introducing DELL, an approach that:
1) simulates diverse personas and generates synthetic reactions to news 👩🌾👨🎓👩🔬👨🚒
2) goes beyond classification and provides explanations 🔎💬
A thread 🧵

🚨 Detecting social media bots has always been an arms race: we design better detectors with advanced ML tools, while more evasive bots emerge adversarially. What do LLMs bring to the arms race between bot detectors and operators? A thread 🧵 #ACL2024

Knowledge Card is now accepted at @iclr_conf as an oral! (with a new name and title)

Ever felt hopeless when LLMs make factual mistakes? Always waiting for big companies to release LLMs with improved knowledge abilities?. Introducing CooK, a community-driven initiative to empower black-box LLMs with modular and collaborative knowledge.

Congrats to @HengWang_xjtu on his first 100-citation work! An important piece on LLMs and structured data that opened up space for much LLM & graph research. Check it out if you haven't! (Thanks to collaborators: @TianxingH @zhaoxuan_t @XiaochuangHan @tsvetshop)

I am at #ACL2023NLP! You can discuss with me about/at:
- LM political biases: Mon 11am
- Twitter bot detection: Mon 2pm
- LM and knowledge graphs: Tue 4:15pm (all at Frontenac Ballroom and Queen’s Quay)
- [redacted] 🤔

A bunch of papers accepted at #ACL2023 🥳
From Pretraining Data to Language Models to Downstream Tasks: Tracking the Trails of Political Biases Leading to Unfair NLP Models (w/ @chan_young_park @Lyhhhh2333 & Yulia)

Now accepted at EMNLP 2024!

LLMs should abstain when they lack knowledge or confidence. However, this abstain capability is far from equitable across speakers of diverse languages: existing abstain methods work well in English, but their performance drops by up to 20% when employed in low-resource languages. What do we do about it? A 🧵

Now accepted at @naaclmeeting!

What Constitutes a Faithful Summary? In this paper, we first present a surprising finding: existing approaches alter the political opinions and stances of news articles in more than 50% of summaries.

Now accepted at EMNLP 2024 Findings.

Instruction tuning with synthetic graph data leads to graph LLMs, but are LLMs learning generalizable graph reasoning skills or merely memorizing patterns in the synthetic training data? 🤔 (for example, patterns in how you describe a graph in natural language) A thread 🧵

Now accepted at @NeurIPSConf as a spotlight!

LLMs are adopted in tasks and contexts with implicit graph structures, but are LMs graph reasoners? Can LLMs perform graph-based reasoning in natural language? Introducing NLGraph, a comprehensive testbed of graph-based reasoning designed for LLMs.

I will attend @NeurIPSConf in person to present our spotlight paper NLGraph w/ @HengWang_xjtu! Happy to chat about LLM and knowledge/factuality, (political) biases and fairness, misinformation and social media, LLM+Graphs, and more :)

Check out our work on information seeking and interactive QA in the medical domain!

31% of US adults use generative AI for healthcare 🤯 But most AI systems answer questions assertively, even when they don’t have the necessary context. Introducing #MediQ, a framework that enables LLMs to recognize uncertainty 🤔 and ask the right questions ❓ when info is missing: 🧵

Now accepted at EMNLP 2024!

What can we do when certain values, cultures, and communities are underrepresented in LLM alignment? Introducing Modular Pluralism, where a general LLM interacts with a pool of specialized community LMs in various modes to advance pluralistic alignment. A thread 🧵

One paper accepted at @emnlpmeeting, “PAR: Political Actor Representation Learning with Social Context and Expert Knowledge”. More details soon. 🎉🎉🎉.

Our work “BotMoE: Twitter Bot Detection with Community-Aware Mixtures of Modal-Specific Experts” accepted at @SIGIRConf ! 🥳🥳🥳. Led by the awesome @Lyhhhh2333.

Did you know that summarization changes the political leanings of articles? Check out our work 👀

What Constitutes a Faithful Summary? In this paper, we first present a surprising finding: existing approaches alter the political opinions and stances of news articles in more than 50% of summaries.

Thank you!!!

🎉SAC Awards:
14) Don't Hallucinate, Abstain: Identifying LLM Knowledge Gaps via Multi-LLM Collaboration by Feng et al.
15) VariErr NLI: Separating Annotation Error from Human Label Variation by Weber-Genzel et al.
#NLProc #ACL2024NLP

Collaborators presenting at #ACL2024, all in Convention Center A1:
- Abstain: @YikeeeWang @Wenxuan_Ding_, Wed 10:30
- Bot Detection: @wanherun, Mon 11:00
- Geometric Knowledge Reasoning: @Wenxuan_Ding_, Mon 12:45
- LLM & Misinformation: @wanherun, Mon 12:45
- AI Text Detection: @YichenZW, Tue 16:00

KGQuiz, a study of LLM knowledge generalization across tasks and contexts, now accepted at @TheWebConf!.

Knowledge Card at @iclrconf Oral! Due to visa issues I could not attend, but the awesome @WeijiaShi2 will give the oral talk!
💬Session: Oral 7B
🕙Time: Friday, 10 AM
📍Place: Halle A 7
Paper link:
Code & resources:

Ever felt hopeless when LLMs make factual mistakes? Always waiting for big companies to release LLMs with improved knowledge abilities?. Introducing CooK, a community-driven initiative to empower black-box LLMs with modular and collaborative knowledge.

Now accepted at @COLM_conf, congrats @YikeeeWang!

What should be the desirable behaviors of LLMs when knowledge conflicts arise? Are LLMs currently exhibiting those desirable behaviors? Introducing Knowledge Conflict, a protocol for resolving knowledge conflicts in LLMs and an evaluation framework.

Paper on spoiler detection in movie reviews now accepted at @emnlpmeeting! Check out the paper and dataset! (full thread pending from @wh2213210554)

Check it out!

For this week’s NLP Seminar, we are thrilled to host @WeijiaShi2 to talk about "Beyond Monolithic Language Models"!
When: 10/17 Thurs 11am PT
Non-Stanford affiliates registration form (closes at 9am PT on the talk day):

Submit your awesome work to our workshop on LLM knowledge!.

📢Our 2nd Knowledgeable Foundation Model workshop will be at AAAI 25! Submission deadline: Dec 1st. Thanks to the wonderful organizer team @ZoeyLi20 @megamor2 @Glaciohound @XiaozhiWangNLP @shangbinfeng @silingao and advising committee @hengjinlp @IAugenstein @mohitban47!

I will present our work FactKB on summarization factuality @emnlpmeeting virtually, here's the poster :). Paper:

Wrapped up posters on day 1: thank you @wanherun @Wenxuan_Ding_! On day 2, @YichenZW will present on machine-generated text detection, poster at 16:00!

Collaborators presenting at #ACL2024, all in Convention Center A1:
- Abstain: @YikeeeWang @Wenxuan_Ding_, Wed 10:30
- Bot Detection: @wanherun, Mon 11:00
- Geometric Knowledge Reasoning: @Wenxuan_Ding_, Mon 12:45
- LLM & Misinformation: @wanherun, Mon 12:45
- AI Text Detection: @YichenZW, Tue 16:00

Will do full threads for them w/ code and data later! Congrats to @Wenxuan_Ding_ @YichenZW 🎉 Thank you @WeijiaShi2 @YikeeeWang @Wenxuan_Ding_ @vidhisha_b @wanherun @mrwangyou @zhaoxuan_t @HengWang_xjtu @Lyhhhh2333 @TianxingH

Happening today at 5:00PM at slot #403! Come say hi.

I will attend @NeurIPSConf in person to present our spotlight paper NLGraph w/ @HengWang_xjtu! Happy to chat about LLM and knowledge/factuality, (political) biases and fairness, misinformation and social media, LLM+Graphs, and more :)

LLMs, taxonomies, and knowledge: check it out!.

🐲 Check out our new preprint! 🔍 Chain-of-Layer is a novel framework for taxonomy induction via prompting #LLMs. Link: Thanks to all collaborators: @YuyangBai02 @zhaoxuan_t @LiangZhenwen @zhihz0535 and @Meng_CS from @ND_CSE; @shangbinfeng from @uwnlp!

Preference alignment with wrong answers only! Check out our work 😃.

🚀Varying Shades of Wrong: When no correct answers exist, can alignment still unlock better outcomes? Introducing wrong-over-wrong alignment, where models learn to prefer "less-wrong" over "more-wrong". Surprisingly, aligning with wrong answers only can lead to correct solutions!

Chan is an amazing researcher and mentor, pls hire her for your department 😃.

Elated to share that I've been named a K&L Gates Presidential Fellow🔥 And I’m on the academic job market! I tackle problems at the intersection of NLP, AI Ethics, and computational social science. See my website for my CV, research statement, and more.

New preprint with the awesome @YikeeeWang!

What should be the desirable behaviors of LLMs when knowledge conflicts arise? Are LLMs currently exhibiting those desirable behaviors? Introducing KNOWLEDGE CONFLICT

KALM: Knowledge-Aware Integration of Local, Document, and Global Contexts for Long Document Understanding (w/ @SiuhinT @CLB_BG @FicsherLzy & Yulia)
BIC: Twitter Bot Detection with Text-Graph Interaction and Semantic Consistency (lead: @FicsherLzy)
Now off to the next deadline ✍️

Now accepted at @emnlpmeeting findings.

What is the overall bot percentage on Twitter? How many bots voted for Twitter to reinstate Trump? 🚨New preprint: "BotPercent: Estimating Twitter Bot Populations from Groups to Crowds", out now: A thread 🧵 [1/n]

Check out JPEG-LM pleaseeeeeee.

👽Have you ever accidentally opened a .jpeg file with a text editor (or a hex editor)? Your language model can learn from these seemingly gibberish bytes and generate images with them! Introducing *JPEG-LM*, an image generator that uses exactly the same architecture as LLMs.

LLM-powered bots, in the wild

🚨 Detecting social media bots has always been an arms race: we design better detectors with advanced ML tools, while more evasive bots emerge adversarially. What do LLMs bring to the arms race between bot detectors and operators? A thread 🧵 #ACL2024

Check out our survey on LLM abstaining!.

🤔💭To answer or not to answer? We survey research on when language models should abstain in our new paper, "The Art of Refusal." Thread below! 🧵⬇️ Joint w/ @jihan_yao @shangbinfeng Chenjun Xu @tsvetshop @billghowe @lucyluwang @uw_ischool @uwcse #nlproc

Thank you for your time!
Read the paper:
NLGraph benchmark:
Joint work w/ @wh2213210554 @TianxingH @SiuhinT @XiaochuangHan @tsvetshop

FYI, this also poses great challenges to existing Twitter bot detection systems. Our recent work shows that they are not remotely robust to such large-scale feature tampering 🤔

Accounts pushing Kremlin propaganda are using Twitter’s new paid verification system to appear more prominently on the platform, another sign that Elon Musk’s takeover is accelerating the spread of misinformation, a nonprofit research group has found.

"KRACL: Contrastive Learning with Graph Context Modeling for Sparse Knowledge Graph Completion" is accepted at @TheWebConf 🥳. Here's a preprint version and I will leave the full tweet for @SiuhinT :.

Thank you for covering our work!.

In the “arms race” between social media bots and those trying to stop them, the best way to detect #LLM-powered bots may be with #LLMs themselves, according to research by @UW #UWAllen @uwnlp's @tsvetshop + @shangbinfeng. #AI #NLProc #ACL2024NLP #UWserves.

When you try soooo hard to seem confident, but you still get it wrong #GoogleBard. Hint: perhaps related to an upcoming preprint of ours? 😈

Super grateful for my co-authors @chan_young_park @Lyhhhh2333 @tsvetshop and everyone who gave generous feedback in and outside of the lab. Couldn't have done it without you! 🥳.

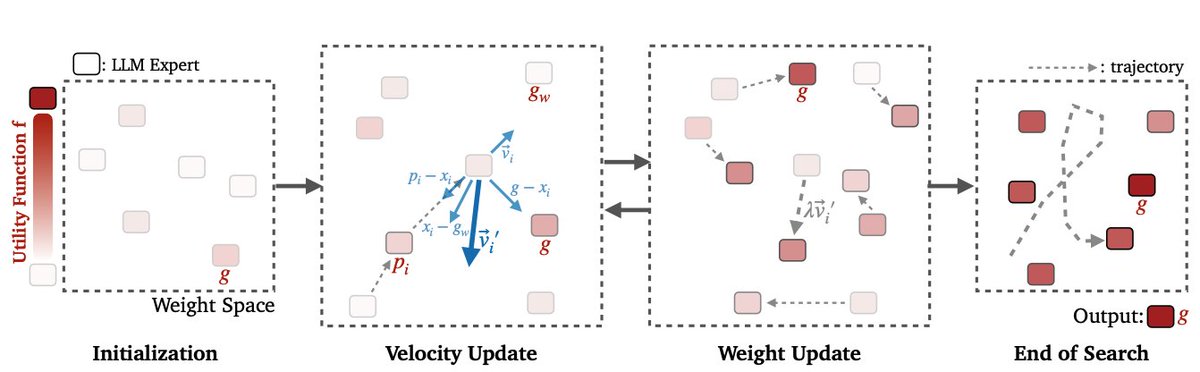

📌Grab the experts, define your goal, and let the swarming commence!. 📄Paper: Joint work with @ZifengWang315 @yikewang_ @SaynaEbrahimi @hmd_palangi Lesly Miculicich Achin Kulshrestha Nathalie Rauschmayr @YejinChoinka @tsvetshop @chl260 @tomaspfister

Check out our work on stress-testing machine-generated text detectors!.

Can current detectors robustly detect machine-generated texts (MGTs)? 🔎 We find some Stumbling Blocks! 🧗♂️ ✨Excited to share our paper on stress testing MGT detectors under attacks, where we reveal that *most* detectors exhibit different loopholes! 🕳 [1/5]

Now accepted at @emnlpmeeting.

Looking for a summarization factuality metric? Are existing ones hard-to-use, require re-training, or not compatible with HuggingFace?. Introducing FactKB, an easy-to-use, shenanigan-free, and state-of-the-art summarization factuality metric!.

If you are at @naaclmeeting, check this out & bookmark at your earliest convenience.

❓Is your LM not paying enough attention to your input context? Check out our simple decoding technique to mitigate LMs' hallucinations! We are presenting context-aware decoding at #NAACL2024. 📖 ⏰ June 17, 14:00-15:30 📌 Poster Session 2; Don Diego

Paper:
Code:
PRs are welcome: come add your method, data, metric, or model! Joint work with @WeijiaShi2 @YikeeeWang @Wenxuan_Ding_ @vidhisha_b @tsvetshop. Join our presentation in Thailand @aclmeeting :)
