Yuji Zhang

@Yuji_Zhang_NLP

Followers

285

Following

137

Media

2

Statuses

40

Postdoc@UIUC. Robust and trustworthy LLMs.

Urbana, IL

Joined August 2020

Deep appreciation to my co-authors for their amazing support and great suggestions! 🤩😊 @ZoeyLi20 @JiatengLiu PengfeiYu @YiFung10 @ManlingLi_ @hengjinlp

1

0

8

Big thanks to my co-authors for their awesome support and great suggestions! @ZoeyLi20 @JiatengLiu @YiFung10 @ManlingLi_ @hengjinlp

0

0

5

@zoewangai Thanks! Imbalanced word patterns (and knowledge) are truly ubiquitous in training data, so it would be both challenging and meaningful for us to dive deeper into better training distributions, strategies, and architectures :)

0

0

3

@flesheatingemu The "actual AI hallucination" may indicate higher-level intelligence in the future. Looking forward to exploring it!

1

0

2

@hbouammar Thanks! Indeed, knowledge overshadowing exists universally across domains, including the group-bias domain. To mitigate the prior bias introduced by training data, we use the inference-time SCD method for broader applications, and we believe RL methods could also help in.

0

0

2

@TonyCheng990417 Thanks! 🤓 We experimented with 160M-7B models on fine-tuning tasks and 1B-13B models on inference-time ones (Tables 4 and 5). Interestingly, we observed a reverse scaling tendency with model size, showing that knowledge overshadowing worsens with the increasing model.

1

0

2

@flesheatingemu Thanks for this interesting question! That would be a challenging but very significant direction to explore, considering the existing fallacies of current architectures. If we want to achieve that, we should resort to human-like architectures and strategies that encourage more.

1

0

2

@KevinGYager Thanks for raising this insightful question! Sometimes hallucination brings more creativity. However, creativity brought by hallucination is less controllable. Although it has the potential to produce exciting content, currently we still have a long way to go to expect good.

0

0

1

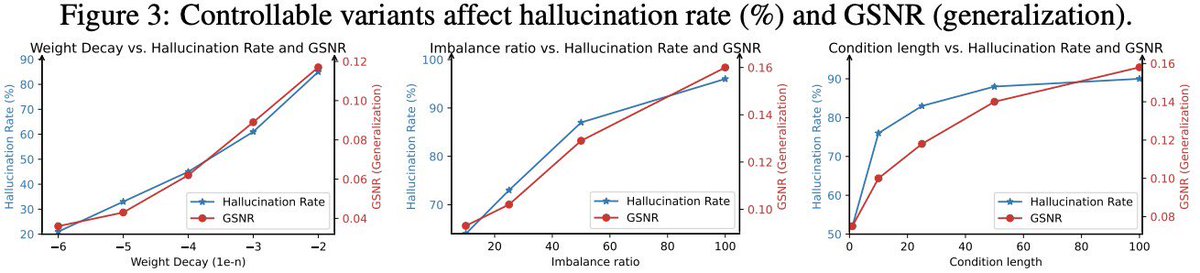

@gerardsans Thanks! This opinion aligns well with what we find in our paper: hallucination is generalization. 😆 That means all outputs that differ from training data points are results of generalization, which is why hallucination worsens with generalization. The difference.

0

0

1