Paul Liang

@pliang279

Followers: 6K

Following: 4K

Media: 236

Statuses: 4K

Assistant Professor MIT @medialab @MITEECS || PhD from CMU @mldcmu @LTIatCMU || Foundations of multisensory AI to enhance the human experience.

Joined July 2012

Despite its successes, contrastive learning (e.g., CLIP) has a fundamental limitation: it can only capture *shared* info between modalities and ignores *unique* info. To fix it, a thread for our #NeurIPS2023 paper w Zihao Martin @james_y_zou @lpmorency @rsalakhu:

2

82

418

If recent models like DALL·E, Imagen, CLIP, and Flamingo have you excited, check out our upcoming #CVPR2022 tutorial on Multimodal Machine Learning - next Monday 6/20, 9am-12:30pm. Slides, videos & a new survey paper will be posted soon after the tutorial!

6

68

331

I'm on the faculty market in fall 2023! I work on foundations of multimodal ML applied to NLP, socially intelligent AI & health. See my research & teaching. If I could be a good fit for your department, I'd love to chat at #ACL2023NLP & #ICML2023. DM/email me!

2

61

292

Multimodal AI studies the info in each modality & how it relates to or combines with other modalities. This past year, we've been working towards a **foundation** for multimodal AI. I'm excited to share our progress at #NeurIPS2023 & #ICMI2023: see long 🧵:

2

78

266

This semester, @lpmorency and I are teaching 2 new graduate seminars @LTIatCMU @mldcmu. The first, 11-877 Advanced Topics in Multimodal Machine Learning, focuses on open research questions and recent theoretical & empirical advances in multimodal ML:

3

40

237

Follow our course 11-777 Multimodal Machine Learning, Fall 2020 @ CMU @LTIatCMU, with new content on multimodal RL, bias and fairness, and generative models. All lectures will be recorded and uploaded to YouTube.

3

56

200

Multimodal models like ViLBERT, CLIP & transformers are taking the world by storm! But do we understand what they learn? At #ICLR2023 we're presenting MultiViz, an analysis framework for model understanding, error analysis & debugging.

4

28

192

Excited to present MultiBench, a large-scale benchmark for multimodal representation learning across affective computing, healthcare, robotics, finance, HCI, and multimedia domains at the #NeurIPS2021 benchmarks track! 🎉 paper: code:

3

33

153

Extremely honored to have received the teaching award - check out our publicly available CMU courses and resources on multimodal ML (MML) and artificial social intelligence (ASI). MML: Advanced MML: ASI:

Paul Liang (@pliang279) received the 2023 Graduate Student Teaching Award "for incredible work in designing and teaching several new courses in Multimodal Machine Learning and Artificial Social Intelligence, general excellence in teaching, and excellence in student mentorship."

11

11

150

Are you working on federated learning over heterogeneous data? Use Vision Transformers as a backbone! In our upcoming #CVPR2022 paper, we perform extensive experiments demonstrating the effectiveness of ViTs for FL. paper: code:

2

33

145

Extremely honored to have received a Facebook PhD Fellowship to support my research in socially intelligent AI! I am indebted to my advisors @lpmorency @rsalakhu at @mldcmu and my collaborators @brandondamos @_rockt @egrefen at @facebookai.

10

8

134

Excited that HighMMT - our attempt at a single multimodal transformer model with shared parameters for many modalities including images, videos, sensors, sets, tables & more - was accepted at TMLR. paper: code:

High-Modality Multimodal Transformer: Quantifying Modality & Interaction Heterogeneity for High-M. Paul Pu Liang, Yiwei Lyu, Xiang Fan et al. Action editor: Brian Kingsbury. #multimodal #modality #gestures.

3

29

113

heading to #emnlp2024! would love to chat with those interested in joining our Multisensory Intelligence research group at MIT @medialab @MITEECS . Our group studies the foundations of multisensory AI to create human-AI symbiosis across scales and sensory

3

15

116

dressed up as our PhD advisor @rsalakhu for (belated) halloween! cos there’s nothing scarier than your advisor 👻🥳😝 jk Russ is the best 🎉🍾❤️

1

5

113

icymi @ #ICML2023, the latest multimodal ML tutorial slides are posted here, along with a reading list of important work covered in the tutorial, as well as slides and videos for previous versions (more application focused).

If you're attending #ICML2023 don't miss our tutorial on multimodal ML (w @lpmorency). Content:
1. Three key principles of modality heterogeneity, connections & interactions
2. Six technical challenges
3. Open research questions
Monday July 24, 9:30 am Hawaii time, Exhibit Hall 2

3

25

96

I'll be at #NeurIPS next week and would love to chat about multimodal AI research, opportunities in my Multisensory Intelligence group, and faculty openings at MIT. Please DM if you'd like to meet! Full application links to all MIT opportunities: Media Lab faculty hiring:

2

9

94

If @OpenAI's CLIP & DALL·E have gotten you interested in multimodal learning, check out a reading list (w code) here, covering various modalities (language, vision, speech, video, touch) & applications (QA, dialog, reasoning, grounding, healthcare, robotics).

1

22

90

Excited to share our new work on measuring and mitigating social biases in pretrained language models, to appear at #ICML2021! with Chiyu Wu, @lpmorency, @rsalakhu @mldcmu @LTIatCMU. check it out here: paper: code:

1

25

87

If you're coming to #NAACL2022 drop by our workshop on Multimodal AI, now in its 4th edition! We have invited speakers covering multimodal learning for embodied AI, virtual reality, robotics, HCI, healthcare, & education! July 15, 9am-4pm.

3

20

86

Excited to attend #NeurIPS2023 this week! Find me to chat about the foundations of multimodal machine learning, multisensory foundation models, interactive multimodal agents, and their applications. I'm also on the academic job market, you can find my statements on my website:

1

11

87

check out our multimodal ML course @LTIatCMU - all lecture videos and course content available online!

1

10

86

found this gem of a reading list for NLP: focuses on biases, fairness, robustness, and understanding of NLP models. collected by @kaiwei_chang @UCLA #NLProc

2

17

81

If you're attending #ICML2023 don't miss our tutorial on multimodal ML (w @lpmorency). Content:
1. Three key principles of modality heterogeneity, connections & interactions
2. Six technical challenges
3. Open research questions
Monday July 24, 9:30 am Hawaii time, Exhibit Hall 2

1

10

82

Excited to present Deep Gamblers: Learning to Abstain with Portfolio Theory at #NeurIPS2019! Strong results for uncertainty estimation, learning from noisy data and labels. with Ziyin, Zhikang, @rsalakhu, LP, Masahito. paper: code:

1

14

79

If you're at #NeurIPS2024 this week, check out HEMM (Holistic Evaluation of Multimodal Foundation Models), the largest and most comprehensive evaluation for multimodal models like Gemini, GPT-4V, BLIP-2, OpenFlamingo, and more. Fri 13 Dec 11am - 2pm @ West Ballroom A-D #6807

1

14

72

A few weeks ago @lpmorency and I wrapped up this semester's offerings of 2 new graduate seminars @LTIatCMU @mldcmu. We're releasing all course content, discussion questions, and readings here for the public to enjoy:

2

12

63

I gave a talk about some of our recent work on multimodal representation learning and its applications in healthcare last week at @MedaiStanford. check out the video recording here: links to papers and code:

This week, @pliang279 from CMU will be joining us to talk about fundamentals of multimodal representation learning. Catch it at 1-2pm PT this Thursday on Zoom! Subscribe to #ML #AI #medicine #healthcare

0

7

60

friends interested in multimodal learning: I've updated my reading list with the latest papers (+code) and workshops at #NeurIPS2019. cool new papers spanning multimodal RL, few-shot video generation, multimodal pretraining, and emergent communication!

1

12

56

Excited that our paper on efficient sparse embeddings for large vocabulary sizes was accepted at #ICLR2021! strong results on text classification, language modeling, recommender systems with up to 44M items and 15M users! w Manzil, Yuan, Amr @GoogleAI.

Anchor & Transform: efficiently learn embeddings using a set of dense anchors and sparse transformations! statistical interpretation as a Bayesian nonparametric prior which further learns an optimal number of anchors. w awesome collaborators @GoogleAI.

3

9

55

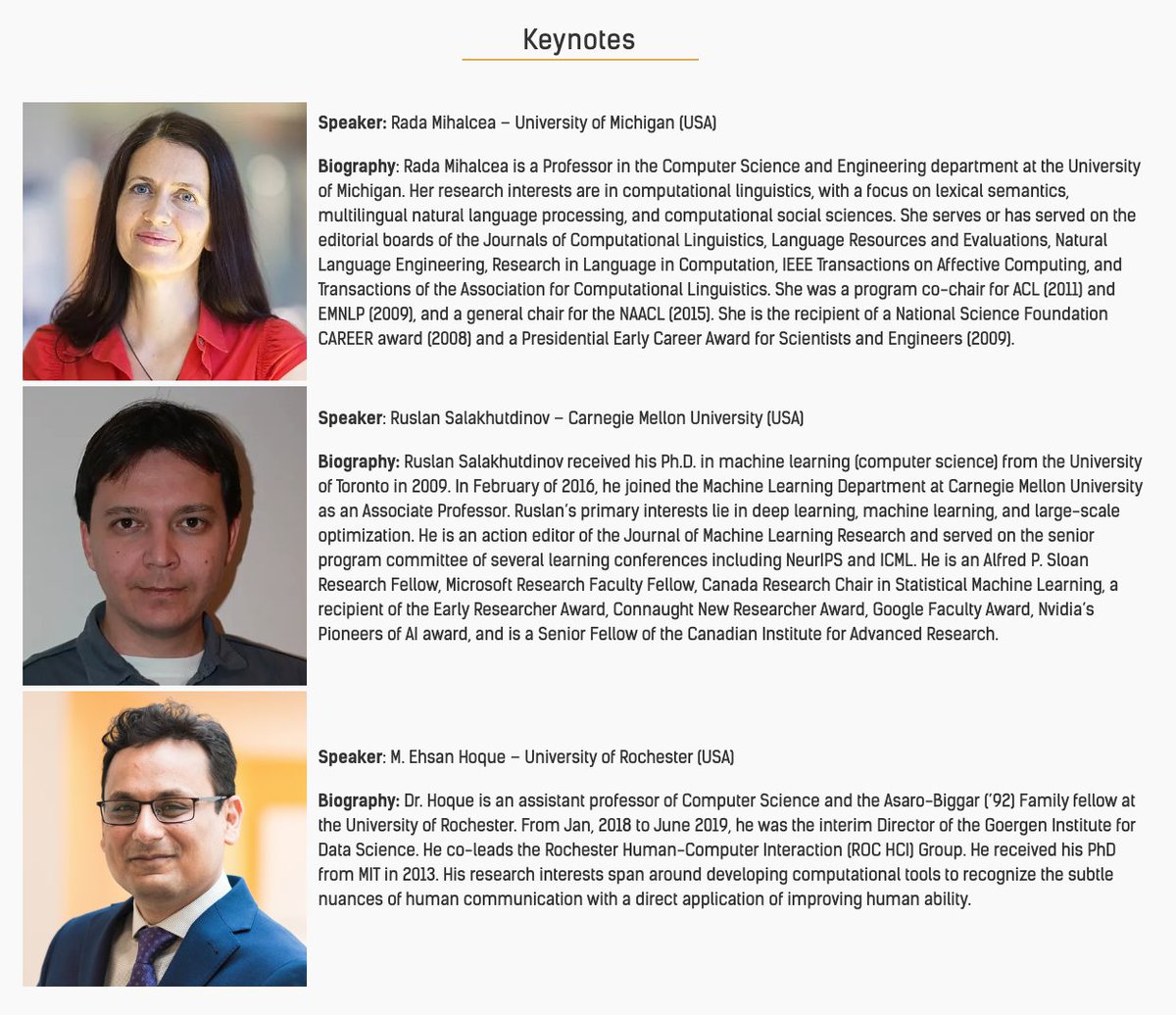

Excited to announce the 2nd workshop on multimodal language @ #ACL2020! We welcome submissions in all areas of human language, multimodal ML, multimedia, affective computing, and applications! w/ fantastic speakers: @radamihalcea @rsalakhu @ehsan_hoque

2

14

52

Giving a talk tmr (friday) morning 8:20am at the #ACL2024NLP Advances in Language and Vision Research (ALVR) workshop, on high-modality multimodal learning. Building multimodal AI over many diverse input modalities, given only partially observed subsets of data or model

1

8

54

With many grad student visit days happening this month, @andrewkuznet has written an educational post on the ML@CMU blog on questions to ask prospective Ph.D. advisors! Please share with your friends who are attending visit days all around the world!

0

12

52

We (@lpmorency, Amir, and I) are organizing a new seminar course on advanced topics in multimodal ML. It will primarily be reading and discussion-based. We've come up with a list of open research questions and will post discussion highlights every Friday!

2

5

52

If you weren't able to join us for #CVPR2022, we'll be giving an updated tutorial on multimodal machine learning at #NAACL2022 in Seattle this Sunday, July 10, 2:00–5:30pm. slides and videos are already posted here:

If recent models like DALL·E, Imagen, CLIP, and Flamingo have you excited, check out our upcoming #CVPR2022 tutorial on Multimodal Machine Learning - next Monday 6/20, 9am-12:30pm. Slides, videos & a new survey paper will be posted soon after the tutorial!

0

9

46

My advisor @lpmorency is finally on twitter! Follow him to stay up to date with awesome work in multimodal ML, NLP, human-centric ML, human behavior analysis, and applications in healthcare and education coming out of the MultiComp Lab @LTIatCMU @mldcmu.

4

4

48

Join us next week for a discussion on different careers in NLP!

📢 Join us for the next ACL Mentorship session: "Exploring career in NLP (e.g., grad school applications, job market tips, industry vs. academia)". ⌚️Sept 10th 8-9:00pm EST / Sept 11th 12-1:00am GMT. 🔗Zoom: Panelists: @pliang279 & @MagicaRobotics. Hosts:

1

4

46

Check out our #NAACL2021 paper on StylePTB: a compositional benchmark for fine-grained controllable text style transfer! with Yiwei, @hai_t_pham, Ed Hovy, Barnabas, @rsalakhu @lpmorency @mldcmu @LTIatCMU. paper: code: a thread:

3

15

43

With many grad student visit days happening this month, it's time to pull up this blog post on @mlcmublog: *Questions to ask prospective Ph.D. advisors*. Please share with your friends who are attending visit days all around the world! by @andrewkuznet.

1

8

35

I was lucky to have the most supportive PhD advisors @lpmorency & @rsalakhu, and the chance to learn from @BlumLenore Manuel Blum @trevordarrell @manzilzaheer & @AI4Pathology. Becoming a professor has been a dream come true for me, and I'm excited to nurture the next generation

1

0

33

If your downstream task data is quite different from your pretraining data, make sure you check out our new approach *Difference-Masking* at #EMNLP2023 findings. Excellent results on classifying citation networks, chemistry text, social videos, TV shows etc. see thread below:

In continued pretraining, how can we choose what to mask when the pretraining domain differs from the target domain? In our #EMNLP2023 paper, we propose Difference-Masking to address this problem and boost downstream task performance! Paper:

0

6

32

As prospective PhD student visit days are happening around the world, I would like to share a valuable resource @andrewkuznet has written on the @mlcmublog: **Questions to Ask a Prospective Ph.D. Advisor on Visit Day, With Thorough and Forthright Explanations**

1

3

31

Heading to #ACL2024NLP late - check out poster session 6 on wed morning 10:30am, where Alex and I will be presenting "Think Twice: Perspective-Taking Improves Large Language Models’ Theory-of-Mind Capabilities". A new way of simulating LLMs inspired by Simulation Theory’s notion

1

1

30

Happening in ~2 hours at #ICML2023, 9:30am @ exhibit hall 2. Also happy to chat about:
- understanding multimodal interactions and modeling them
- models for many diverse modalities esp beyond image+text
- applications in health, robots, education, social intelligence & more
DM me!

If you're attending #ICML2023 don't miss our tutorial on multimodal ML (w @lpmorency). Content:
1. Three key principles of modality heterogeneity, connections & interactions
2. Six technical challenges
3. Open research questions
Monday July 24, 9:30 am Hawaii time, Exhibit Hall 2

1

1

30

Congrats to @roboVisionCMU @CMU_Robotics for winning the #CVPR2019 best paper award! For the second year in a row, they won the best paper/student paper with a paper **not** primarily about neural net architectures! #CVPR2018:

0

1

26

excited to present our paper on studying biases in sentence encoders at #acl2020nlp. web: code: also happy to take questions during the live Q&A sessions: July 7 (14:00-15:00, 17:00-18:00 EDT). w Irene, Emily, YC, @rsalakhu, LP

0

5

27

Do AI models know if an object can be easily broken💔? or melts at high heat🔥? Check out PACS: a new audiovisual question-answering dataset for physical commonsense reasoning and new models at #ECCV2022 this week. paper: video:

3

5

27

Follow ML@CMU blog @mlcmublog for your weekly dose of ML research, conference highlights, broad surveys of research areas, and tutorials! For starters, check out our recent post on best practices for real-world data analysis! @mldcmu @LTIatCMU @SCSatCMU

0

3

27

friends at CMU, come check out the poster presentations for 10-708 Probabilistic Graphical Models, Tuesday 4/30 3-5pm at NSH atrium! projects cover theories and applications of pgms in nlp, rl, vision, graphs, healthcare, and more! @rl_agent @alshedivat @_xzheng @mldcmu.

1

3

26

Anchor & Transform: efficiently learn embeddings using a set of dense anchors and sparse transformations! statistical interpretation as a Bayesian nonparametric prior which further learns an optimal number of anchors. w awesome collaborators @GoogleAI.

1

2

24

Heading to #NeurIPS2022 - message me if you wanna watch the world cup or chat about multimodal machine learning, socially intelligent AI, and their applications in healthcare and education (in that order ⚽,🤖). My collaborators and I will be presenting the following papers:

1

3

27

check out tutorial slides and reading resources here: recorded tutorial videos will be uploaded soon #CVPR2022

If recent models like DALL·E, Imagen, CLIP, and Flamingo have you excited, check out our upcoming #CVPR2022 tutorial on Multimodal Machine Learning - next Monday 6/20, 9am-12:30pm. Slides, videos & a new survey paper will be posted soon after the tutorial!

1

6

27

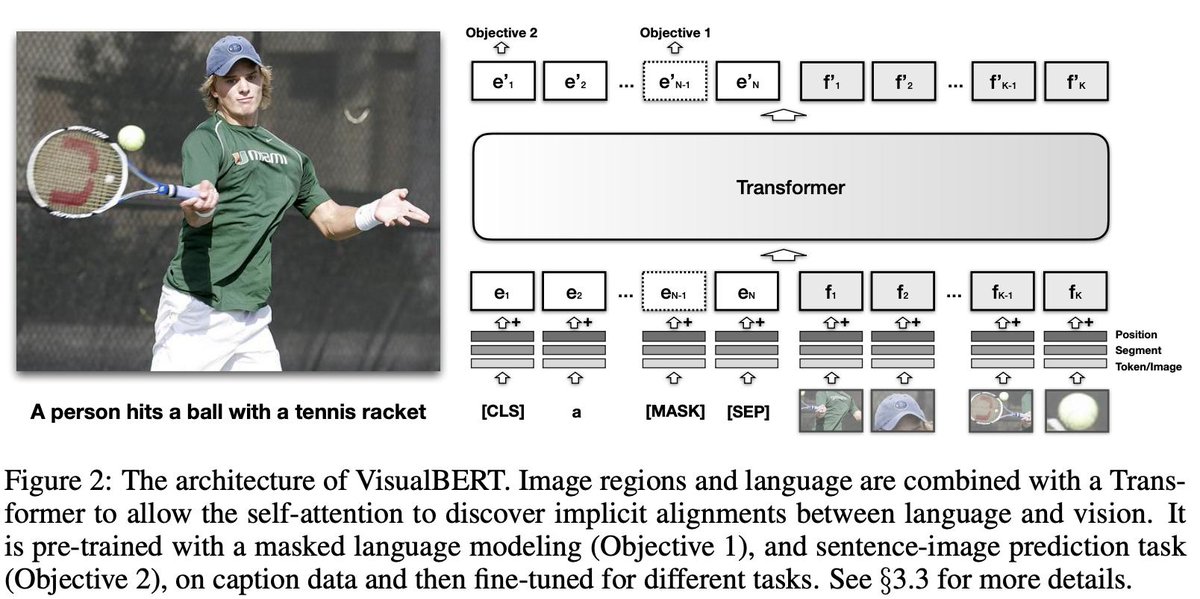

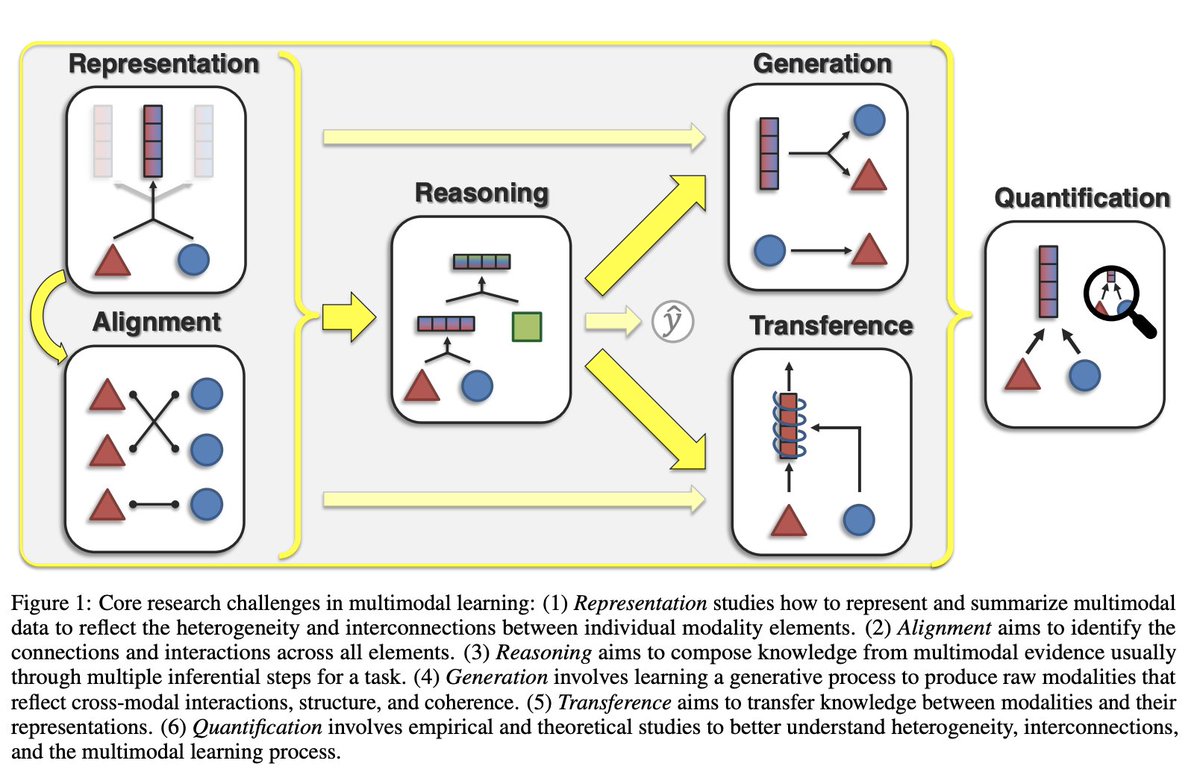

@lpmorency @LTIatCMU @mldcmu @SCSatCMU This tutorial will cover 6 core challenges in multimodal ML: representation, alignment, reasoning, transference, generation, and quantification. Recent advances will be presented through the lens of this revamped taxonomy, along with future perspectives.

0

3

25

@medialab @MITEECS @MIT_CSAIL @MIT Our group is young, but we're starting strong. I'm lucky to work with the best students in AI, and students with diverse backgrounds integrating music, art, cultures, smell, taste, and real-world sensors into the AI toolkit. My group is united in our vision to enhance AI.

1

1

25

We'll be presenting the following at #ICLR2023. Check out MultiViz, which answers:
1. what we should be interpreting in multimodal models,
2. how we can interpret them accurately,
3. how we can evaluate interpretability through real-world user studies.

Multimodal models like ViLBERT, CLIP & transformers are taking the world by storm! But do we understand what they learn? At #ICLR2023 we're presenting MultiViz, an analysis framework for model understanding, error analysis & debugging.

1

4

24

found a cute @Pittsburgh corner on r/place! ft @CarnegieMellon @steelers @PittTweet. also ft some teams involved in a basketball game or something? not too sure. 😝

1

1

23

friends at #AAAI19 come check out our spotlight talks and posters! 1. Found in Translation: Learning Robust Joint Representations by Cyclic Translations Between Modalities, 2pm, Coral 1. with @hai_t_pham, @Tom_Manzini, LP Morency, Barnabás Póczos @mldcmu @LTIatCMU @SCSatCMU

1

4

24

starting soon at 9am in great hall B! #CVPR2022

If recent models like DALL·E, Imagen, CLIP, and Flamingo have you excited, check out our upcoming #CVPR2022 tutorial on Multimodal Machine Learning - next Monday 6/20, 9am-12:30pm. Slides, videos & a new survey paper will be posted soon after the tutorial!

0

5

22

A reminder to submit your work in multimodal ML, language, vision, speech, multimedia, affective computing, and applications to the workshop on multimodal language @ #ACL2020! with fantastic speakers: @radamihalcea @rsalakhu @ehsan_hoque @YejinChoinka

1

3

23

If you're at #ICCV2023, check out our new resource of lecture slides with speaker audio & videos. A step towards training and evaluating AI-based educational tutors that can answer and retrieve lecture content based on student questions! @ Friday 2:30-4:30pm Room Nord - 011

2

3

21

Join us this Friday for the first workshop on tensor networks at #NeurIPS2020, with a fantastic lineup of speakers: @AmnonShashua @AnimaAnandkumar @oseledetsivan @yuqirose @jenseisert @fverstraete @giactorlai and 30 accepted papers!

Amazing speakers in our Workshop on Quantum Tensor Networks in Machine Learning at NeurIPS 2020. Please join us this Fri. #tensornetwors #quantum #machinelearning #neurisp2020 @XiaoYangLiu10 @JacobBiamonte @pliang279 @nadavcohen @sleichen

0

8

21

@medialab @MITEECS @MIT_CSAIL @MIT To find out more about our group's research on multisensory AI, please see my website.

0

1

20

During my 10 years @CarnegieMellon @mldcmu @LTIatCMU, I’ve met an amazing group of collaborators and had the strongest encouragement from my family and friends ❤️❤️❤️

1

0

22

2. Relational Attention Networks via Fully-Connected CRFs @ Bayesian Deep Learning workshop #NeurIPS2018. with Ziyin Liu, Junxiang Chen, Masahito Ueda. @mldcmu @LTIatCMU @SCSatCMU

1

5

20

Our work on investigating competitive influences on emergent communication in multi-agent teams, to appear at #aamas2020. paper: code: @rsalakhu @SatwikKottur @mldcmu @LTIatCMU.

On Emergent Communication in Competitive Multi-Agent Teams: External competitive influence leads to faster emergence of communicative languages that are more informative and compositional: #aamas2020. w/t @pliang279, J. Chen, LP Morency, S. Kottur

0

4

21