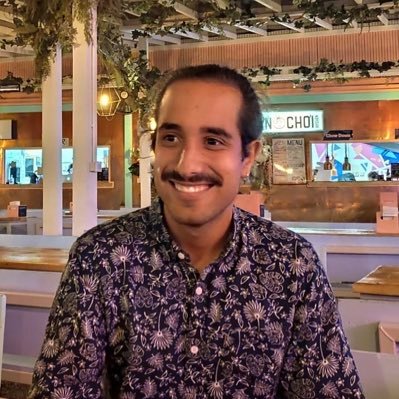

Cong Lu

@cong_ml

Followers: 1,171

Following: 978

Media: 32

Statuses: 241

Postdoctoral Research Fellow @UBC_CS, in open-ended RL, and AI for Scientific Discovery. Prev: PhD @UniofOxford, RS Intern @Waymo, @MSFTResearch!

Vancouver, British Columbia

Joined October 2019

Pinned Tweet

It’s been a dream of mine since I started in ML to see autonomous agents conduct research independently and discover novel ideas! 💡 Today we take a large step towards making this a reality.

We introduce *The AI Scientist*, led together with

@_chris_lu_

and

@RobertTLange

. [1/N]

8

10

79

RL agents🤖need a lot of data, which they usually need to gather themselves. But does that data need to be real? Enter *Synthetic Experience Replay*, leveraging recent advances in

#GenerativeAI

in order to vastly upsample⬆️ an agent’s training data!

[1/N]

5

37

184

🚨 Model-based methods for offline RL aren’t working for the reasons you think! 🚨

In our new work, led by

@anyaasims

, we uncover a hidden “edge-of-reach” pathology which we show is the actual reason why offline MBRL methods work or fail!

Let's dive in! 🧵

[1/N]

3

17

107

I am extremely excited to share Intelligent Go-Explore, presenting a robust exploration framework for foundation model agents! 🤖

It was a delight to work with

@shengranhu

and

@jeffclune

on this!

📜 Paper:

🌐 Website and Code:

Excited to introduce Intelligent Go-Explore: Foundation model (FM) agents have the potential to be invaluable, but struggle to learn hard-exploration tasks!

Our new algorithm drastically improves their exploration abilities via Go-Explore + FM intelligence. Led by

@cong_ml

🧵1/

5

47

253

1

17

73

Super excited to share that I'll be starting as a postdoc

@UBC_CS

with

@jeffclune

this January working on advancing open-endedness with large language/multimodal models and deep RL! 🤩

I'll be at NeurIPS next week and would love to discuss any of those topics. I'll also be...

5

4

53

Delighted that our paper won the *Outstanding Paper Award* at

#LDOD

at

#RSS2022

!🥳 Thanks to the organizers for an amazing event!

Paper + Code + Data:

Joint with my amazing collaborators🥰:

@philipjohnball

@timrudner

@jparkerholder

@maosbot

@yeewhye

2

11

47

We've now released code for this project at ! We think the potential of synthetic data for sample efficiency and robustness is huge and can't wait to see what people do with it!

In other news, we've extended the paper with pixel-based experiments... [1/2]

RL agents🤖need a lot of data, which they usually need to gather themselves. But does that data need to be real? Enter *Synthetic Experience Replay*, leveraging recent advances in

#GenerativeAI

in order to vastly upsample⬆️ an agent’s training data!

[1/N]

5

37

184

1

8

39

I love this visualisation of where we were at the start of the project (no latex, only markdown, only a few experiments). Our current version of The AI Scientist is the worst it will ever be. 🚀🚀🚀

0

2

33

Thank you so much - I was so incredibly fortunate to have spent these years in Oxford under your and

@maosbot

's supervision, and will always treasure the fun discussions and lessons learned throughout!

7

2

31

Delighted that this piece of work was accepted to

#NeurIPS2023

! Excited to chat about it in New Orleans ✈️✈️

RL agents🤖need a lot of data, which they usually need to gather themselves. But does that data need to be real? Enter *Synthetic Experience Replay*, leveraging recent advances in

#GenerativeAI

in order to vastly upsample⬆️ an agent’s training data!

[1/N]

5

37

184

1

1

30

Will be presenting our spotlight at Reincarnating RL

@iclr_conf

on generating synthetic data for RL with diffusion models at 10:40AM tomorrow!

If you can't make it, here's the pre-recorded talk:

Paper:

#ICLR2023

#GenerativeAI

0

6

27

Super excited to share our new work led by

@JacksonMattT

showing that policy guidance + trajectory diffusion models produce extremely strong RL training data! 💥💥

As an added bonus, our code comes with JAX implementations of offline RL algorithms and diffusion upsampling! 🚀

0

2

22

No better time to start on offline RL from pixels! V-D4RL is now on

@huggingface

at

💥 New D4RL-style visual datasets!

💥 Competitive baselines based on Dreamer and DrQ!

💥 A set of exciting open problems!

Thanks

@Thom_Wolf

for the idea 😻

Delighted that our paper won the *Outstanding Paper Award* at

#LDOD

at

#RSS2022

!🥳 Thanks to the organizers for an amazing event!

Paper + Code + Data:

Joint with my amazing collaborators🥰:

@philipjohnball

@timrudner

@jparkerholder

@maosbot

@yeewhye

2

11

47

0

6

21

Come chat to us at the

#NeurIPS2023

Robot Learning Workshop in Hall B2 about policy-guided diffusion!

Super exciting work showing that guided diffusion enables long-sequence on-policy synthetic data for training agents! 🚀🚀

0

3

18

So cool to see people building agents with Intelligent Go-Explore already!! 🚀🚀🚀

Magency: my project for the

@craft_ventures

agents hackathon 🤖👇

There is no good way to control AI agents on a mobile phone. There should be an app for that!

Magency is a mobile app that lets you "make a wish", aka just say what you want into one text box and see a feed of

1

9

29

0

2

18

Delighted that research from our lab was featured in Science News! Great read about harnessing large language models to create open-ended learning systems!

OMNI-EPIC & Intelligent Go-Explore in Science News! "Both works are significant advancements towards creating open-ended learning systems," - Tim Rocktäschel. Led by

@jennyzhangzt

@maxencefaldor

&

@conglu

Quotes

@j_foerst

&

@togelius

too. Thx

@SilverJacket

!

2

14

54

0

1

18

Really excited about this recent work to feature in

#ICML2021

on meta-learning task exploration in agent belief space!

Exploration in Approximate Hyper-State Space for Meta Reinforcement Learning

-

@luisa_zintgraf

,

@lylbfeng

,

@cong_ml

,

@MaxiIgl

,

@kristianhartika

,

@katjahofmann

,

@shimon8282

1

2

24

0

1

15

In many realistic imitation learning settings, we often have differences in observations between experts and imitators. E.g. when experts have privileged information.

Excited to share our new work towards a principled Bayesian solution to resolving such imitation gaps! 😍

0

2

15

Excited to share our ICLR spotlight on revisiting🤔 design choices in offline MBRL: later today!

In the meantime, check out our light-hearted video introducing the paper: 😀

@philipjohnball

@jparkerholder

@maosbot

, Steve Roberts

0

4

12

Extremely excited to share our new work led by

@GunshiGupta

and

@KarmeshYadav

showing that pretrained diffusion models provide powerful vision-language representations for control tasks that drive efficiency and generalization!

All code open-sourced at:

0

3

12

Come chat to us

@jparkerholder

@philipjohnball

at the

#ICLR

SSL-RL Workshop about generalisation to environments with changed dynamics from offline data on a single environment with Augmented World Models!

Gathertown Link:

Paper:

0

2

12

We are excited about scaling this work to more settings! This is joint work with awesome co-authors:

@philipjohnball

,

@jparkerholder

🥰. Come chat with us at the Reincarnating RL Workshop at

@iclr_conf

or get in touch!

Paper:

[7/N]

0

3

12

Super excited by our recent work massively expanding the scope of PBT methods in RL!

💥 Joint adaptation of architecture and hyperparameters

💥 Treating the *whole* RL hyperparameter space with trust-region BO

💥 Massive improvements on the prior PBT baselines, all code online!

0

2

10

Really excited to share our recent work with

@philipjohnball

,

@jparkerholder

and Steve Roberts on dynamics generalisation from data from a single offline RL environment! To appear as a spotlight in the

#ICLR2021

SSL-RL Workshop 😃

0

0

12

Come chat to us at C0 about generalisation to new tasks from offline data on a single environment with AugWM!

#ICML2021

Spotlight presentation in Reinforcement Learning 5, Wed 21 Jul 02:00 BST - 03:00 BST (Tues 6 p.m. PDT)

Poster Session 2: Wed 21 Jul 04:00 BST - 07:00 BST (Tues 8 p.m. - 11 p.m. PDT)

@philipjohnball

,

@cong_ml

,

@jparkerholder

, Stephen Roberts

#ICML2021

1

1

4

0

2

11

Excited to present some recent work with

@timrudner

,

@maosbot

and

@yaringal

at the

#NeurIPS2020

BDL Meetup today at 12 & 5pm GMT! Join us at !

0

1

11

Come see us tomorrow at the contributed orals at the

#GenAI4DM

workshop, talking about leveraging text-to-image diffusion models as vision-language representation learners for control!

#ICLR2024

Also looking forward to the Contributed Oral Talks at

#GenAI4DM

workshop at

#ICLR2024

:

Do Transformer World Models Give Better Policy Gradients?

Authors:

@michel_ma_

@twni2016

Clement Gehring

@proceduralia

@pierrelux

Pretrained Text-to-Image Diffusion

0

4

13

0

1

9

Come chat to us now @ D5, RL4RL workshop: about our new work revisiting uncertainty quantification in offline MBRL and showcasing new SOTA results on D4RL MuJoCo 😻 Paper:

@philipjohnball

@jparkerholder

@maosbot

, Stephen Roberts

0

4

9

Thanks for having me! Super fun discussion on The AI Scientist! ❤️

WE ARE STARTING IN 15 MIN

We have a great list of papers and guests!

The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery - first author

@cong_ml

will be presenting!

Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model

1

3

32

0

0

9

Check out our recent work revisiting design choices in offline model-based reinforcement learning! 🤔 arXiv: With fantastic collaborators:

@philipjohnball

@jparkerholder

@maosbot

Stephen Roberts!

1

0

8

Agreed, truly a phenomenal team to envision the future of scientific discovery with!!! 🥰🥰🥰

Time to retire

@SchmidhuberAI

?

📹:

Jokes aside - this project has been soo much fun!

@_chris_lu_

and

@cong_ml

made this one of the best collabs I had so far 🥰

1

1

39

0

0

7

We are excited about what this unified perspective of offline RL might mean for future work!

To find out more, please check out our paper: ,

And code: ,

Thanks again to my amazing co-authors

@anyaasims

@yeewhye

! 🥰🥰

[N/N]

0

0

6

Blog:

Paper:

Open-Source Code:

It was an absolute joy to work together with

@_chris_lu_

,

@RobertTLange

,

@j_foerst

,

@jeffclune

,

@hardmaru

on this 🥰, and can’t wait to see what the community does with this!

0

0

5

We hope this work can springboard progress in this very nascent field! Work done with some awesome collaborators:

@philipjohnball

@timrudner

@jparkerholder

@maosbot

@yeewhye

. 🥳 [8/N]

1

0

5

... presenting our new work on efficiently training RL agents with synthetic generative data () at Poster Session 2 on Tuesday. Do come say hi! 👋

RL agents🤖need a lot of data, which they usually need to gather themselves. But does that data need to be real? Enter *Synthetic Experience Replay*, leveraging recent advances in

#GenerativeAI

in order to vastly upsample⬆️ an agent’s training data!

[1/N]

5

37

184

0

1

2

@jsuarez5341

Agreed! Some way of selectively deferring to the FM for the “harder” parts of the env would drastically increase throughput!

1

0

4

You can find the paper here:

Code here:

Thanks again to my amazing co-authors

@philipjohnball

@timrudner

@jparkerholder

@maosbot

@yeewhye

😻

[N/N]

0

1

4

To kick off progress on new methods, we include strong baselines derived from the SoTA DreamerV2 and DrQ-v2 algorithms! Concretely, we adapt DreamerV2 (

@danijarh

) to the offline setting by introducing a penalty based on mean disagreement, resulting in Offline DV2. [2/N]

1

0

4
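A minimal sketch of what a disagreement-based reward penalty could look like, assuming an ensemble of dynamics models that each predict a next-state (or latent) mean. The function names, the use of variance across ensemble members as the disagreement measure, and the `penalty_weight` parameter are illustrative assumptions, not the Offline DV2 implementation.

```python
import numpy as np


def mean_disagreement_penalty(ensemble_means: np.ndarray) -> np.ndarray:
    """Disagreement between ensemble members' predicted means.

    ensemble_means has shape (K, B, D): K ensemble members, a batch of B
    transitions, and D predicted dimensions. Returns one penalty per
    transition, shape (B,). Hypothetical helper for illustration only.
    """
    # Variance across the ensemble axis, averaged over predicted dimensions.
    return ensemble_means.var(axis=0).mean(axis=-1)


def penalized_reward(
    reward: np.ndarray,
    ensemble_means: np.ndarray,
    penalty_weight: float = 1.0,  # assumed trade-off coefficient
) -> np.ndarray:
    """Subtract the disagreement penalty from the model-predicted reward."""
    return reward - penalty_weight * mean_disagreement_penalty(ensemble_means)
```

When all ensemble members agree, the penalty is zero and the reward is left unchanged; transitions where the models diverge are treated as less trustworthy and rewarded less.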

This work was done during an awesome MAX internship over the summer hosted jointly by

@MSFTResearchCam

and

@XboxStudio

in the very lovely Cambridge. I’m super grateful to my hosts

@ralgeorgescu

and

@rookboom

, and all the friends made along the way! 🥰🥰

0

0

4

💥 ML Research Opportunity for under-represented undergrads at Oxford! 💥

Would appreciate help sharing this widely! UNIQ+ is an awesome way to spend two months getting stuck into ML at great groups

@oxcsml

@CompSciOxford

See proposed projects here:

0

1

3

@Hoper_Tom

@jeffclune

Thank you for kindly sharing these works! We will discuss these in the updated version of the paper, and also look forward to integrating the insights from your paper into our work!

0

0

3

We adapt the algorithm DrQ-v2 (

@denisyarats

) by adding an adaptive behavioral cloning term similar to TD3+BC, resulting in DrQ+BC. We also include a CQL and BC implementation in the same codebase. [3/N]

1

0

3
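For readers unfamiliar with the TD3+BC-style term mentioned here, a rough sketch of the actor objective follows. It shows only the loss value in NumPy, with the Q-term weight scaled by the average Q magnitude as in TD3+BC. The function name and argument names are hypothetical, and this is not the DrQ+BC code. In a real training loop the weight `lam` would be treated as a constant when differentiating.

```python
import numpy as np


def td3_bc_style_actor_loss(
    q_pi: np.ndarray,             # Q(s, pi(s)) for a batch, shape (B,)
    policy_actions: np.ndarray,   # pi(s), shape (B, A)
    dataset_actions: np.ndarray,  # actions stored in the offline dataset, shape (B, A)
    alpha: float = 2.5,           # default value from the TD3+BC paper
) -> float:
    """Actor loss: maximise Q while staying close to dataset actions."""
    # Adaptive weight: normalise the Q term by its average magnitude so the
    # balance between the RL and BC terms does not depend on reward scale.
    lam = alpha / (np.abs(q_pi).mean() + 1e-8)
    # Behavioral cloning term: mean squared error to the dataset actions.
    bc_term = np.mean((policy_actions - dataset_actions) ** 2)
    return float(-lam * q_pi.mean() + bc_term)
```

Larger `alpha` puts more weight on maximising Q, while smaller values keep the policy closer to the behavior in the dataset.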

@jsuarez5341

@arankomatsuzaki

We found this as well in a project that sounds v close to both these efforts! See Table 3 of where we show diffusion synthetic data can help scale the network sizes in TD3 :)

1

0

2

Efficient Online Reinforcement Learning with Offline Data ()

@philipjohnball

@ikostrikov

@smithlaura1028

showing a remarkably simple method for accelerating online pixel-based training with V-D4RL datasets!

[5/N]

1

0

2

@Stone_Tao

Thanks! At the moment, it's roughly 50/50 for diffusion vs. RL training. Big potential for speed-ups there though. DMC is on proprioceptive, visual transitions incoming! :)

1

0

2

Agent-Controller Representations: Principled Offline RL with Rich Exogenous Information ()

@riashatislam

@manan_tomar

learning how to handle rich amounts of irrelevant information commonly found in pixel-based datasets!

[2/N]

1

0

2

@vladkurenkov

@shaneguML

@ML_is_overhyped

Super cool work!! We also found deeper networks to help in TD3+BC, esp. with synthetic data ;) (Table 3 of )

0

0

2

@mejia_petit

@jeffclune

Hey! We released all outputs of our agent at the Google Drive link in

Link:

1

0

2

@kchonyc

We investigate this for offline MBRL; we show that using BO and a small number of online evals we can get vastly improved performance:

0

1

2

Revisiting the Minimalist Approach to Offline Reinforcement Learning ()

@ML_is_overhyped

@vladkurenkov

integrating many simple recent algorithmic advances into the DrQ+BC baseline provided by V-D4RL!

[4/N]

1

0

1

@TesfayZemuy

Yes, they were certainly slower. No reason not to use diffusion models instead as the generative model :)

0

0

1