Stone Tao

@Stone_Tao

Followers

4K

Following

11K

Media

212

Statuses

3K

PhDing @UCSanDiego @HaoSuLabUCSD @hillbot_ai on scalable robot learning and embodied AI. Co-founded @LuxAIChallenge to build AI competitions. @NSF GRFP fellow

San Diego, CA

Joined January 2013

so far Genesis is one of the most feature rich simulators out there, combining many many things, and makes for a great general data gen platform. Unfortunately it's still some distance away from supporting fast & stable simulation for most things, including basic manipulation

Everything you love about generative models — now powered by real physics! Announcing the Genesis project — after a 24-month large-scale research collaboration involving over 20 research labs — a generative physics engine able to generate 4D dynamical worlds powered by a physics

11

27

388

@MarioBalukcic automation is a lot of what engineers do, and this game is all about that. It’s like crack for engineers but as a game. It’s also one of the first of its genre (or the first to be well made at least), and has spawned a ton of other games in the automation genre.

2

2

319

2 million+ people just got an email from @kaggle with me looking awkwardly at another screen with an overdue haircut 🥲 but also RL is cool and u should compete and beat the humans on the leaderboard, right now humans are winning

3

19

228

@Alexwashere @tszzl i can’t imagine the amd nvidia GPUs ever beefing with each other, my culture and probably taiwanese culture is very against fighting relatives.

6

2

215

speedrunning some rl right now. Using cudagraphs from @VincentMoens PPO, finally broke the 1 minute barrier to solve a cube picking task in ManiSkill. reproducible code+script with google colab support:

6

15

205

a world first? PPO from pixels solving sim locomotion (with @anybotics Anymal robot) in 30 minutes! Left video shows 4 parallel envs with their 128x128 pixel observations on the right. Right video is the beautified render of the same task

11

19

190

maybe hot take i kind of don’t like how rendering robotics projects in blender is becoming more of a norm. I’m forced to render all my projects with ray tracing now and tune lighting to make it look nicer even though the render quality has 0 to do with the project.

9

8

193

Super excited to announce that I will be joining @UCSanDiego as a PhD student @ucsd_cse and will be advised by professor Hao Su @haosu_twitr 😊.

20

3

175

26k demos over 8 months by 35 tele-operators for an overall 75% success rate (with task specific models). A tiny step forward in supervised robot learning but unfortunately brings massive concerns about pure real world imitation learning. Some intuition 1/n.

🔥 Hot release: Aloha unleashed. World first demonstration of a robot able to tie shoelaces or hang t-shirts autonomously! They trained a diffusion policy at scale: 26,000 demonstrations over 5 tasks on Aloha 2 robot. Retweet if you'd like them to open-source 😝 (video x4) 1/🧵

2

12

166

🎉 Our new work tackling long horizon low-level manipulation in apartments is out! ~500GB of demonstration data in sim (you can generate more) and RL/IL baselines all provided. ManiSkill helped make this project scalable and faster to run via GPU sim compared to alternatives.

📢 Introducing ManiSkill-HAB: A benchmark for low-level manipulation in home rearrangement tasks!

- GPU-accelerated simulation
- Extensive RL/IL baselines
- Vision-based, whole-body control robot dataset

All open-sourced: 🧵(1/5)

4

19

152

two papers accepted at the #NeurIPS2022 deep RL workshop! Also my first ever first author works! Threads coming soon.

12

5

124

it seems like either YC doesn’t have enough / strong reviewers in robotics or they care more about the founders and less about the idea. This new YC startup simply added an exoskeleton to an existing open source project. Nothing special.

"We design and manufacture low-cost and easy-to-use devices for collecting human demonstration data" 🤔 they forgot to mention git clone

8

1

127

on a streak of adding new tasks to maniskill for RL/IL benchmarking and nice demos: The @UnitreeRobotics humanoid G1 robot learns to place an apple in a bowl (reward function is surprisingly hard! this took me a whole day to write). RL takes < 1 hour to train with GPU sim

3

7

122

🤯 and yet people still aren’t buying that simulation and RL will be cornerstones of future embodied AI training.

Unitree G1 mass production version, leap into the future! Over the past few months, Unitree G1 robot has been upgraded into a mass production version, with stronger performance, ultimate appearance, and being more in line with mass production requirements. We hope you like it.🥳

5

2

111

🚨 Introducing Reverse Forward Curriculum Learning (RFCL). A learning from demos algo that gets SOTA sample/demo efficiency on robotics tasks via state reset+curricula, solving many MetaWorld/ManiSkill/Adroit tasks from just 1 demo! At #ICLR2024 poster 283, Thurs 10:45AM 🧵(1/n)

1

18

104

just ran PPO with RGB inputs. Small CNN+MLP gets 5k to 10k FPS and this task is solved from vision+robot proprioception in about 1.2 hours using 20 million samples. Video below shows exact image observation given (128x128 RGB data)

A community member / student at UCSD contributed a GPU parallelized PushT task to ManiSkill. Very impressive and probably the only GPU simulated PushT task out there. Trains in like 10 minutes on a 4090 with PPO!

2

6

92

all the robotics related companies highlighted by Jensen at #CES2025. notably a very high proportion of 4/6 robot brain/foundation model focused companies are started by current professors (including my own advisor!). Covariant, Hillbot, Physical Intelligence, and Skild AI

6

12

94

Another robotics company that leverages simulation. Exciting to see!

Thrilled to announce @SkildAI! Over the past year, @gupta_abhinav_ and I have been working with our top-tier team to build an AI foundation model grounded in the physical world. Today, we’re taking Skild AI out of stealth with $300M in Series A funding, led by @lightspeedvp,.

4

4

90

robocasa partially imported into ManiSkill shown below (GPU sim and closeups). Didn't realize how crazy the engineering efforts behind robocasa were until I tried to adapt it to our simulator. They generate cabinets on the fly! objects to pick/place to be added next

@Stone_Tao It would be great if you had robocasa assets in maniskill. It generates urdfs, it should be easy right?

3

8

91

officially graduated from @ucsd_cse 🎉 (just to come back for another half decade) Looking forward to a lifetime of learning, research, and innovation

5

0

85

multiple people confused/asking about simulation tech stacks. Sharing this figure by @tongzhou_mu which sums it up quite cleanly! Detailing exactly each benchmark/sim framework's choice of renderer, physics engine, etc

3

15

85

NeurIPS didn’t categorize the papers at poster sessions even when they had banners for topics. So channeling my inner @_akhaliq here’s a short thread of some of the interesting ones in RL I saw today that may have gone under the radar.

1

8

74

15 minutes of training on the GPU and it is now mostly solved on every partnet mobility cabinet (note it's made easier with state input, and very little noise). this task used to take hours on old maniskill versions

maniskill3 sneak peek 2: Diverse articulated simulation. Each of the parallel envs has a different cabinet with different dofs. The green ball highlights the drawer to open and the link/joint info is accessible by a single object that "views" selected links across envs

2

9

75

sorry I lied. This is almost possible. Hacked together a GPU parallelized robotics drawing simulator 🖌️🎨 Left: 4 out of 256 parallel envs with rendering (8500 FPS), Right: teleoperation

don’t honestly think this is remotely easy to do but it’s cool people want to use our simulator for chinese calligraphy 🖌️

4

8

74

a ton of people in the lux competition are using RL this year and a huge part of it is thanks to @vwxyzjn's excellent blog post on action masking which makes RL much more feasible in complex action spaces, just saw like 6 people reference it in my discord when they discussed RL!

2

4

71

@jsuarez5341 a mindset i’ll probably adopt once im done with PhD. Paper submissions are really not worth it. True value is in whether someone uses the work or not.

2

0

70

two recent papers pushing this direction of using sim states for fast robot learning: RFCL (my paper) and DemoStart (deepmind). the training efficiency gains are crazy high, both sample and wall time efficiency. Use state reset!

Question for people working on policy-gradients: if you assume access to a simulator that spits out trillions of samples, shouldn't you also assume (and build in) oracle access to it? It seems strange to me (and maybe only explainable from history) to have one but not the other?

3

12

67

Thank you for posting an extremely comprehensive benchmark. I have not had time to verify the results but the numbers make more sense now in context and it's great to see the impressive scalability of Genesis compared to existing platforms. The analysis here is quite in-depth!

We’re excited to share some updates on Genesis since its release: 1. We made a detailed report on benchmarking Genesis's speed and its comparison with other simulators. 2. We’ve launched a Discord channel and a WeChat group to foster communications.

3

3

66

🎉 Lux AI Season 3 has been accepted to #NeurIPS2024. One reviewer even gave a 9 for our proposal and said they themselves might even participate! Super excited to work with @kaggle again to launch large scale and exciting AI competitions around RL 😃

2

5

62

Just accepted at #ICML2023 😃, looking forward to seeing old and new friends in Hawaii 🌴.

Preprint alert! ⛏️🧱 Abstract-to-Executable Trajectory Translation for One-Shot Task Generalization: We achieve strong one-shot task generalization on diverse tasks through RL with low-level control, like stacking a 28-block Minecraft castle IRL! 🧵(1/n)

2

3

59

Don’t have a real robot/setup but want to evaluate policies trained on real world datasets? Check out SIMPLER, fast, safe, and reliable evaluation of real robot policies in sim via ManiSkill 2. The ManiSkill 3 beta will port SIMPLER over soon so stay tuned!

Scalable, reproducible, and reliable robotic evaluation remains an open challenge, especially in the age of generalist robot foundation models. Can *simulation* effectively predict *real-world* robot policy performance & behavior? Presenting SIMPLER!👇

2

6

59

seems some sim locomotion task rewards are pretty easy (there is a 1-line difference between this task and the one quoted). First try and it works, spin as fast as possible without falling. Perhaps an easy new control task for RL benchmarking that also looks somewhat realistic

a world first? PPO from pixels solving sim locomotion (with @anybotics Anymal robot) in 30 minutes! Left video shows 4 parallel envs with their 128x128 pixel observations on the right. Right video is the beautified render of the same task

2

5

56

None of the humanoid companies have shown reliability demos so it seems the manipulation AI is not yet at the hyped expectations. Really waiting for the first company to livestream their robot in a room responding to public user commands. That’s truly a good first demo.

Yes, it’s a robot. A cool one! But seeing hyperbolic takes on product-readiness based on just a video. The emphasis on physical safety is great (soft body=safer hugs), but these demos are similar to other bots in 2024. Is it walking? Teleoperated? As always, is it *reliable*?

1

3

57

community is building on ManiSkill/SAPIEN! A community member also helped set up this repo collecting ManiSkill related papers/projects, submit yours as well!

A Vision Pro teleoperation demo in ManiSkill. It’s still naive and hard to operate precisely. Maybe we can fully utilize the AR capability of Vision Pro to revolutionize the interaction mode in the future. ManiSkill is an impressive simulation environment—big thanks to

1

8

55

couldn’t wait / was working on docs/tutorials to build simulated tasks so went ahead and did the first step, add the assets in. next up is adding that reorienting fixture, then motion planning solution maybe to generate demos

This is a great task, exciting dataset for people to play with! I like that it contains

- precision placement and grasping
- re-grasping in order to re-orient a piece
- long horizon tasks

4

2

55

#ICLR2024 submission accepted! better yet, this was also the first ever research paper for my two interns/co-authors @arth_shukla @tsekaichan, was a great experience learning how to mentor them! See you all in vienna 🇦🇹

2

3

54

You all can look forward to an **even faster** ManiSkill 3 (official v3 coming out hopefully in may/june). Many many things are GPU parallelized, far more than Isaac. Also, ManiSkill 3 is still a single pip install + runnable on Colab 😁

I like this advice. If I was getting started as a robotics student these days, I think I would install ManiSkill2 and try to get some learning stuff working - I like that it has great simulations of "real" robot sensors, is fast, and easy to start with.

3

7

49

just rewrote this in pytorch and added support for GPU parallelized environments, our simulator is about to have the fastest learning from demos baseline (in sim), will share a cool demo of how fast you can go from built task to autonomous policy without dense rewards.

🚨 Introducing Reverse Forward Curriculum Learning (RFCL). A learning from demos algo that gets SOTA sample/demo efficiency on robotics tasks via state reset+curricula, solving many MetaWorld/ManiSkill/Adroit tasks from just 1 demo! At #ICLR2024 poster 283, Thurs 10:45AM 🧵(1/n)

0

9

48

an incredible part of ManiSkill is that on one GPU you can do generalizable manipulation by parallel simulating 1000s of objects/articulations and solve all at once + render 100x faster than eg Isaac 🤯 If you work on manipulation this is a strong reason to switch to ManiSkill!

We support diverse parallel simulation, where each env may have a different scene with different objects and articulations (hence everything everywhere all at once). You can simulate **every** cabinet in PartnetMobility and **every** YCB object at once on one GPU! (2/6)

0

6

47

unreal engine simulation 👀, more and more companies investing / exploring simulation for robotics!

We've trained a voxel occupancy neural network to build live 3D maps using regular cameras. It runs at 3ms on nvidia A40 & 30ms on jetson nano orin. Training takes 5 days on 0.7M images from our @UnrealEngine simulation. @maticrobots @mehul @navneetdalal vis in @rerundotio 🔥

1

1

45

generative sim + motion planning/RL for compute scalable diverse data generation, followed by imitation learning from point clouds with sim2real transfer. nice work!

How to address the pain points of creating and solving simulation tasks and reducing sim-to-real semantic gaps in robotics? Super excited to release GenSim2 in #CoRL2024. This work addresses several challenges in extending GenSim to articulated, 6-dof robotic tasks.

0

2

43

Sometimes feel a little fomo for not pursuing more LLM centered work as there’s a lot of tools, money, hype, and low-hanging fruit. But i’ve always been up for challenges and look for ideas with unforeseen potential; exploring new directions often requires strong fundamentals.

I honestly think the undergrads should spend more time on understanding the fundamentals of math and science instead of chasing all these AI hypes. Knowing how Vicuna differs from Alpaca probably won't matter much in a year, but knowing how to do SVD will always help you.

2

0

43

We now have an initial ManiSkill3 paper out on arXiv which you can cite, just in time for the ICLR submission deadline 😁.

for anyone using ManiSkill 3 in their research / upcoming conference deadlines, we will have a v1 preprint out some time this week so you can cite it! A v2 version with more experiments (mostly just running baselines + data collection atm) will come out later.

2

5

43

100% success rate is a very bold claim. I very much want to see these evaluation videos, how much generalization is being tested?

It continues to blow my mind how well our robot neural networks perform. Today we had a complicated task, too hard to script with code, eval at 100% off a small training set.

4

1

41

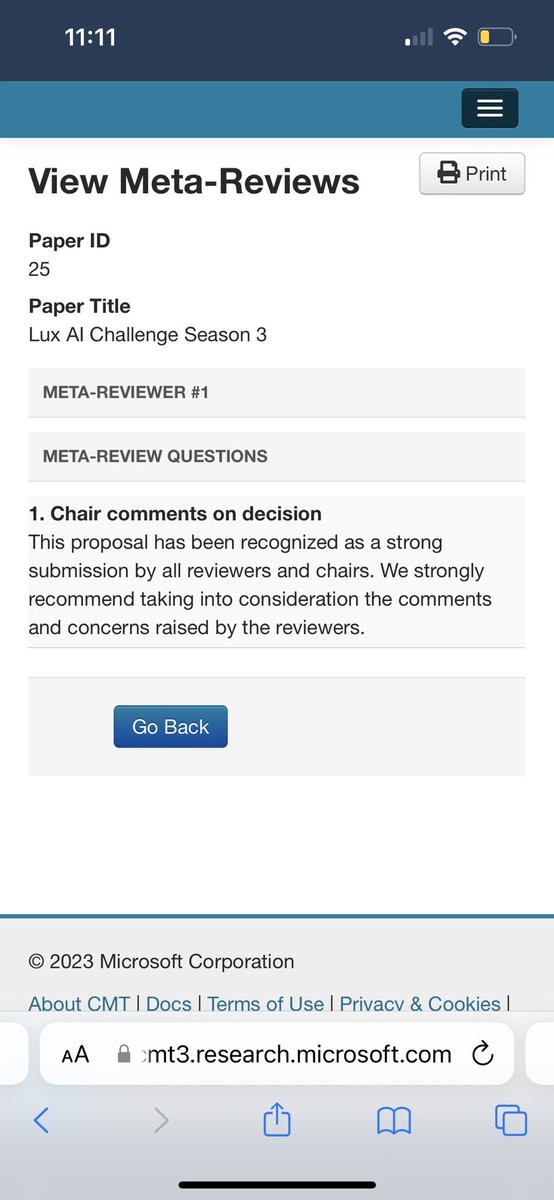

the @LuxAIChallenge has been accepted as a competition by #NeurIPS2023! (and seen as a strong submission by all reviewers). More details to follow 🎉

1

1

41

@ronawang according to some friends, this is partly why china did worse in the IMO for a few years. Before IMO used to nearly guarantee acceptance to top universities in China (maybe they could skip the 高考), they stopped that for a bit and suddenly China did worse in the IMO lmao.

4

0

39