Huaxiu Yao

@HuaxiuYaoML

Followers: 2,926 · Following: 541 · Media: 78 · Statuses: 336

Assistant Professor of Computer Science @UNC @unccs @uncsdss | Postdoc @StanfordAILab | Ph.D. @PennState | #foundationmodels , #AISafety , #AIforScience | he/him

Palo Alto, CA

Joined May 2013


🚨 Unveiling GPT-4V(ision)'s mind! We're breaking down how even the brightest Visual Language Models get it wrong!

With our new 'Bingo' benchmark, we shed light on the two common types of hallucinations in GPT-4V(ision): bias and interference.

Led by @cuichenhang @AiYiyangZ


Excited to announce the Workshop on Reliable and Responsible Foundation Models at @iclr_conf 2024 (hybrid workshop).

We welcome submissions! Please consider submitting your work here: (deadline: Feb 3, 2024, AOE)

Hope to see you in Vienna or


🚀 Can we directly rectify hallucination in Large Vision-Language Models (LVLMs)?

🛠 We introduce a hallucination revisor named LURE that mitigates hallucination in LVLMs, achieving over a 23% improvement!

Nice work, Yiyang Zhou & @cuichenhang


Excited to announce Wild-Time, a benchmark of in-the-wild distribution shifts over time with 5 datasets spanning diverse real-world applications and data modalities in #NeurIPS2022.

A nice collab. w/ @carolineschoi, @AsukaTelevision, @yoonholeee, @PangWeiKoh, @chelseabfinn


We are actively seeking highly motivated students for Ph.D. positions (Fall 2024) at UNC NLP @uncnlp, including in my group. The deadline is 12/12.

🚨🎓 We have several PhD (and postdoc) openings in NLP+CV+ML+AI in beautiful Chapel Hill 👇

Please RT+apply & ping our faculty for any questions (application-fee waivers & no GRE requirement)!

@mohitban47 @gberta227 @snigdhac25 @TianlongChen4 @shsriva @HuaxiuYaoML + others 🧵


In regression, neural nets (1) are prone to overfitting and (2) are brittle under distribution shift.

In #NeurIPS2022, we introduce a simple & scalable mixup method that improves generalization in regression.

w/ @yipingw52742502, @zlj11112222, @james_y_zou, @chelseabfinn

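To make the regression-mixup idea concrete, here is a minimal NumPy sketch of label-distance-aware mixup, in the spirit of the C-Mixup method named later in this feed. It is not the authors' released code: it assumes inputs `X` of shape (n, d) with n ≥ 2, scalar targets `y`, and a Gaussian kernel on label distance for choosing mixing partners. The function name `c_mixup_batch` and the `bandwidth`/`alpha` defaults are illustrative assumptions.

```python
import numpy as np


def c_mixup_batch(X, y, bandwidth=1.0, alpha=2.0, rng=None):
    """Label-distance-aware mixup for regression (illustrative sketch, not official code).

    Assumes X has shape (n, d) with n >= 2 and y has shape (n,).
    Each example i is mixed with a partner j != i drawn with probability
    proportional to exp(-(y_i - y_j)^2 / (2 * bandwidth^2)), so examples
    with similar targets are paired more often than dissimilar ones.
    """
    rng = np.random.default_rng() if rng is None else rng
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)

    # Gaussian kernel on pairwise label distances; zero the diagonal so i never mixes with itself.
    w = np.exp(-((y[:, None] - y[None, :]) ** 2) / (2.0 * bandwidth**2))
    np.fill_diagonal(w, 0.0)
    # If a row underflows to all zeros, fall back to uniform sampling over j != i.
    w = np.where(w.sum(axis=1, keepdims=True) > 0, w, 1.0 - np.eye(n))
    p = w / w.sum(axis=1, keepdims=True)

    partners = np.array([rng.choice(n, p=p[i]) for i in range(n)])
    lam = rng.beta(alpha, alpha, size=n)  # one mixing weight per example

    X_mix = lam[:, None] * X + (1.0 - lam[:, None]) * X[partners]
    y_mix = lam * y + (1.0 - lam) * y[partners]
    return X_mix, y_mix, partners
```

For example, with `rng = np.random.default_rng(0)`, calling `c_mixup_batch(rng.normal(size=(8, 3)), np.linspace(0, 1, 8), bandwidth=0.2, rng=rng)` returns mixed inputs of shape (8, 3), mixed targets of shape (8,), and partner indices that never equal their own position. A smaller `bandwidth` concentrates pairing on examples with nearly identical targets.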

🚨Excited to share our work on the seamlessness between Policy Models (PM) and Reward Models (RM) in RLHF🌟!

Motivation: Improving PM and RM separately doesn't translate to better RLHF outcomes.

Solution: We introduce SEAM, a concept that quantifies the distribution shift

#RLHF is taking the spotlight. We usually focus on boosting reward and policy models to enhance RLHF outcomes. Our paper examines the interactions between PM and RM from a data-centric perspective, revealing that their seamlessness is crucial to RLHF outcomes.

2

19

71

1

15

80

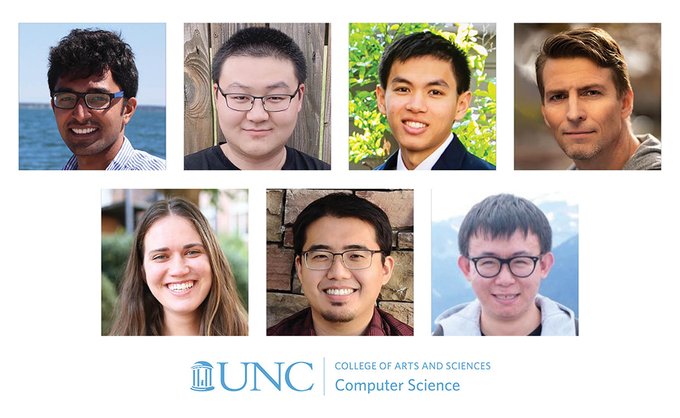

It’s my great pleasure to join UNC CS @unccs!

🎉Please join us in welcoming this year's new faculty cohort! From algorithms, security, machine learning, to graphics and computational optics, these faculty will maintain the standard of excellence at @UNCCS!

➡️ @UNC @unccollege @UNCResearch @UNCSDSS


📢The reasoning ability of multimodal LLMs has been widely evaluated on single, static images, which is far from enough. Introducing '🎞️ Mementos', our new benchmark that pushes multimodal LLMs to understand and infer behavior over image sequences.

Findings: #GPT4V and #Gemini

🎬 Just like Nolan's 'Memento' rewrote storytelling, we're reshaping AI! Introducing '🎞️ Mementos': our benchmark pushing AI to understand sequences of images, not just stills. A real game-changer in AI's narrative.

#AIStorytelling #Multimodal #LLMs #GenAI


We need more reviewers for the Workshop on Reliable and Responsible Foundation Models at @iclr_conf. If you are interested, please fill out the nomination form.


I'll be at #CVPR2024 from June 16th to 22nd, looking forward to catching up with old friends and making new ones. In addition, I have 2-3 PhD openings next year.

Feel free to DM me to grab a ☕️ and chat about research and PhD opportunities if you're around!


📢The Workshop on Reliable and Responsible Foundation Models will happen today (8:50am - 5:00pm). Join us at #ICLR2024, room Halle A 3, for a wonderful lineup of speakers, along with 63 amazing posters and 4 contributed talks! Schedule:


Thanks a lot, Mohit! If you are interested in joining my lab, kindly complete the application form and send an email to huaxiu.recruiting@gmail.com.

- Ph.D. student and intern application form:

- Postdoc application form:

🎉🥳 Excited to have @HuaxiuYaoML joining us (from @StanfordAILab) very soon this August 2023! Welcome to the @unc @unccs @uncnlp family, Prof. Huaxiu!! Looking forward to many awesome collaborations😀

PS. Students applying for spring/fall2024 PhD admissions, take note below 👇


👇LURE has been accepted to @iclr_conf. If you encounter object hallucination in your vision LLM, give it a shot to minimize it.


Super interesting and fruitful panel discussion at #ICML2022 @PTMs_Workshop. It was my honor to moderate the panel.

Thanks to all panelists: @james_y_zou, @OriolVinyalsML, @maithra_raghu, @jasondeanlee, @endernewton, and Zhangyang Wang.


🚀 New Paper Alert!

#AIChallenge: How do we prevent "hallucination snowballing" in Large Language Models (LLMs)? And how can we use verification results to enhance the trustworthiness of AI-generated text? These are critical issues in #LLMs.

🧠 Introducing EVER (Real-time


We have extended the deadline by one week; the new deadline is Feb 10, 2024, AOE! Looking forward to your submissions!


I'll be at #ICLR2024 in Vienna🇦🇹 next week (from May 7th to 12th), looking forward to catching up with old friends and making new ones. Feel free to DM me to grab a ☕️ and chat if you're around!


I will be at #NeurIPS22 next week. I am on the academic job market and work on building machine learning models that are unbiased, widely generalizable, and easily adaptable to distribution shifts.

DM me to grab a ☕️ and chat if you are around!


Meta-learning typically samples meta-training tasks uniformly at random, treating all tasks as equally important.

However, some tasks may be detrimental, e.g., when they are noisy or imbalanced.

In #NeurIPS2021, we propose a neural task scheduler (ATS) to adaptively select training tasks

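The contrast between uniform and adaptive task sampling can be sketched as follows. This is a simplified, hypothetical illustration: the per-task scores are plain inputs (for instance, something derived from each task's recent loss), whereas ATS learns them with a neural scheduler that this sketch does not implement. With equal scores it reduces to the uniform sampling described above; `sample_tasks` and `temperature` are assumed names.

```python
import numpy as np


def sample_tasks(task_scores, batch_size, temperature=1.0, rng=None):
    """Sample distinct task indices with probability softmax(task_scores / temperature).

    Hypothetical helper: in ATS the scores come from a learned scheduler; here they
    are simply given. Equal scores give uniform sampling over tasks.
    """
    rng = np.random.default_rng() if rng is None else rng
    s = np.asarray(task_scores, dtype=float) / temperature
    s = s - s.max()  # subtract the max for numerical stability before exponentiating
    p = np.exp(s)
    p = p / p.sum()
    idx = rng.choice(len(p), size=batch_size, replace=False, p=p)
    return idx, p
```

Lowering `temperature` makes sampling greedier toward high-scoring tasks; raising it moves the distribution back toward uniform.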

🎉Our paper “Meta-learning with Fewer Tasks through Task Interpolation” has been accepted to @iclr_conf. It is a simple way to improve the generalization of meta-learning by densifying the task distribution, and it works particularly well when you do not have a large number of training tasks.

Meta-learning methods need a large set of training tasks. We introduce a simple regularizer that helps, especially when you don’t have a lot of tasks.

Meta-Learning with Fewer Tasks through Task Interpolation

Paper:

with @HuaxiuYaoML, @zlj11112222

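As a rough illustration of "densifying" the task distribution, the sketch below creates a new task by mixing two sampled tasks in a label-sharing setting (both tasks use the same label space). It is a minimal input-level sketch under stated assumptions: equal-sized tasks paired by position, one-hot labels, and a single Beta-distributed weight per new task. The name `interpolate_tasks` is hypothetical, and the method in the paper may differ in its details.

```python
import numpy as np


def interpolate_tasks(task_a, task_b, alpha=0.5, rng=None):
    """Create one synthetic task by mixing two tasks (hypothetical sketch, label-sharing case).

    Each task is a tuple (X, Y) with X of shape (k, d) and one-hot Y of shape (k, c);
    both tasks must have the same k, d, and c. Examples are paired by position.
    """
    rng = np.random.default_rng() if rng is None else rng
    Xa, Ya = (np.asarray(a, dtype=float) for a in task_a)
    Xb, Yb = (np.asarray(b, dtype=float) for b in task_b)
    if Xa.shape != Xb.shape or Ya.shape != Yb.shape:
        raise ValueError("tasks must have matching shapes")
    lam = rng.beta(alpha, alpha)  # a single mixing weight shared by the whole task
    X_new = lam * Xa + (1.0 - lam) * Xb
    Y_new = lam * Ya + (1.0 - lam) * Yb  # soft labels; each row still sums to 1
    return X_new, Y_new, lam
```

The synthetic task would then be used alongside the original ones during meta-training, which is how interpolation adds tasks when few are available.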

I will attend #KDD2023 from next Mon (Aug 7) to Wed (Aug 9).

📢My group at @unccs will have multiple Ph.D. (Fall 2024) and remote intern positions.

☕️DM me if you are interested in discussing #foundationmodels, #AISafety, #MedicalAI, or Ph.D. applications.


Excited to share our new work on Healthcare AI: can we help doctors recommend newly approved medications to patients in a personalized way?

Nice work led by Zhenbang Wu, in collaboration with the amazing @james_y_zou @chelseabfinn @jimeng and others.


🚀 Preference fine-tuning has shown immense power in boosting the factuality of LLMs! Our straightforward strategy slashes factual errors by a whopping ~50% in Llama 1 & 2.


If you're at #ICML2022 and interested in out-of-distribution generalization, please come to our talk (Wed 20 July 1:15 pm ET, Room 318 - 320) and poster (Wed 20 July 6:30pm, Hall E #321)!

Neural nets are brittle under domain shift & subpopulation shift.

We introduce a simple mixup-based method that selectively interpolates data points to encourage domain invariance

ICML 22 paper:

w/ @HuaxiuYaoML Yu Wang @zlj11112222 @liang_weixin @james_y_zou

(1/3)

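The selective interpolation idea in the LISA thread above can be sketched in NumPy as follows. This is a simplified illustration, not the released LISA code. It assumes integer class labels `y`, integer domain labels `d`, and one-hot targets `Y`. For each example it picks a partner either with the same label from a different domain (intra-label) or from the same domain with a different label (intra-domain), then applies ordinary mixup. The names `lisa_partners`/`lisa_mix`, the default 50/50 strategy split, and the fallback of pairing an example with itself when no valid partner exists are assumptions made for this sketch.

```python
import numpy as np


def lisa_partners(y, d, p_intra_label=0.5, rng=None):
    """Pick a mixing partner for every example using one of two selective rules."""
    rng = np.random.default_rng() if rng is None else rng
    y, d = np.asarray(y), np.asarray(d)
    partners = np.arange(len(y))  # default: pair with itself if no valid partner exists
    for i in range(len(y)):
        if rng.random() < p_intra_label:
            # Intra-label: same class, different domain.
            cand = np.flatnonzero((y == y[i]) & (d != d[i]))
        else:
            # Intra-domain: same domain, different class.
            cand = np.flatnonzero((d == d[i]) & (y != y[i]))
        if cand.size:
            partners[i] = rng.choice(cand)
    return partners


def lisa_mix(X, Y, y, d, alpha=2.0, p_intra_label=0.5, rng=None):
    """Mix inputs X (n, ...) and one-hot targets Y (n, c) with selectively chosen partners."""
    rng = np.random.default_rng() if rng is None else rng
    X, Y = np.asarray(X, dtype=float), np.asarray(Y, dtype=float)
    j = lisa_partners(y, d, p_intra_label, rng)
    lam = rng.beta(alpha, alpha, size=len(j))
    # Reshape lam so it broadcasts over all non-batch dimensions of X.
    lam_x = lam.reshape(-1, *([1] * (X.ndim - 1)))
    X_mix = lam_x * X + (1.0 - lam_x) * X[j]
    Y_mix = lam[:, None] * Y + (1.0 - lam[:, None]) * Y[j]
    return X_mix, Y_mix, j
```

Setting `p_intra_label=1.0` uses only intra-label pairs, which mixes across domains while keeping the class fixed; `0.0` uses only intra-domain pairs.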

👇Our #EMNLP2023 work suggests that LLMs fine-tuned with RLHF verbalize probabilities that are significantly better calibrated than the model's conditional probabilities, which makes well-calibrated predictions possible.

LLMs fine-tuned with RLHF are known to be poorly calibrated.

We found that they can actually be quite good at *verbalizing* their confidence.

Led by @kattian_ and @ericmitchellai, at #EMNLP2023 this week.

Paper:

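Calibration claims like this are commonly measured with expected calibration error (ECE): bin predictions by stated confidence, then average the gap between each bin's accuracy and mean confidence, weighted by bin size. The sketch below is a generic equal-width-bin ECE, not necessarily the exact evaluation protocol of the paper. Here `confidences` would hold the verbalized probabilities parsed from the model's answers and `correct` whether each answer was right; both names are assumptions.

```python
import numpy as np


def expected_calibration_error(confidences, correct, n_bins=10):
    """Equal-width-bin ECE: sum_b (|B_b| / n) * |acc(B_b) - conf(B_b)|."""
    conf = np.asarray(confidences, dtype=float)
    hits = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    # Bin b covers (edges[b], edges[b + 1]]; a confidence of exactly 0 goes to bin 0.
    bins = np.clip(np.digitize(conf, edges[1:-1], right=True), 0, n_bins - 1)
    ece = 0.0
    for b in range(n_bins):
        in_bin = bins == b
        if in_bin.any():
            gap = abs(hits[in_bin].mean() - conf[in_bin].mean())
            ece += in_bin.mean() * gap  # weight by the fraction of predictions in this bin
    return ece
```

For instance, `expected_calibration_error([0.9, 0.9, 0.6, 0.6], [1, 1, 1, 0])` is about 0.1: the 0.9 bin is 10 points underconfident and the 0.6 bin is 10 points overconfident, and each covers half of the predictions.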

📢Excited to announce that our "Sixth Workshop on Meta-Learning" has been accepted at #NeurIPS2022.

w/ co-organizers @FrankRHutter, @joavanschoren, @Qi_Lei_, @Eleni30fillou, @artificialfabio.

Hope to see you in person in New Orleans; stay tuned for more info.


Thanks @james_y_zou for the unreserved support! I work on building #MachineLearning models that are unbiased, widely generalizable, and easily adaptable to in-the-wild shifts. DM me if you think I would be a good fit for your department!

@HuaxiuYaoML is a super postdoc at @StanfordAILab. He has done a lot of interesting work on meta-learning, data augmentation and OOD learning to make #ML more reliable.


🔥Though I've seen bad reviewers and ACs on my other papers, LISA was luckily accepted to @icmlconf: an extremely simple method with super-cool results for tackling distribution shifts. Nice collab w/ @__YuWang__, @chelseabfinn, and others🎉.

ArXiv👇 and code:


A nice collab. w/ @Xinyu2ML @ShirleyYXWu @linjunz_stat @james_y_zou

Paper link:

Benchmark link (coming this week):


🌟Our code, model, and data are available. Visit the project page for more details.🌟


We systematically evaluate the safety and robustness of Vision LLMs, including adversarial attacks and OOD generalization.

👇See detailed takeaways in Haoqin’s thread.

Nice collaboration with @cihangxie’s team!


We are hiring! Come and join us! Feel free to ping me if you are in the job market and have any questions about UNC SDSS.

We are excited to announce an open-rank faculty hiring initiative for several positions to bolster research and innovation in the emerging field of #DataScience.

🎓100% SDSS position:

🎓All faculty positions:


Big congrats @james_y_zou, well deserved!


#EMNLP2021 Today (Nov 7th), I will present KGML for few-shot text classification, which uses a knowledge graph to bridge the gap between training and test tasks, in Oral Session 4A (12:45 - 2:15 pm PST) and the Poster Session (3:00 - 5:00 pm PST).


If you are working on meta-learning and struggling with overfitting, try MetaMix, presented at #ICML2021. It is a simple task augmentation method to improve generalization in meta-learning.

Paper:

Code:

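As a rough sketch of within-task augmentation, the function below mixes a task's support and query examples with a Beta-distributed weight to produce extra training pairs for the outer loop. It follows the general idea of interpolating support and query data but omits other details of the published MetaMix method. The name `support_query_mix` and the random pairing of each query example with a support example (sampled with replacement) are assumptions for illustration.

```python
import numpy as np


def support_query_mix(Xs, Ys, Xq, Yq, alpha=0.5, rng=None):
    """Mix support and query examples of one task (illustrative sketch, not official MetaMix).

    Xs: (ks, d), Ys: (ks, c) one-hot; Xq: (kq, d), Yq: (kq, c) one-hot.
    Returns kq mixed examples: each query example is paired with a random support example.
    """
    rng = np.random.default_rng() if rng is None else rng
    Xs, Ys, Xq, Yq = (np.asarray(a, dtype=float) for a in (Xs, Ys, Xq, Yq))
    pick = rng.integers(0, len(Xs), size=len(Xq))  # random support partner per query example
    lam = rng.beta(alpha, alpha, size=(len(Xq), 1))
    X_mix = lam * Xs[pick] + (1.0 - lam) * Xq
    Y_mix = lam * Ys[pick] + (1.0 - lam) * Yq
    return X_mix, Y_mix
```

The mixed pairs act as additional query data when computing the outer-loop loss, which is where the extra regularization would come from.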

We welcome submissions to our ICML 2022 Pre-training Workshop!

Excited to announce the 1st Pre-training Workshop at @icmlconf (hybrid workshop).

We welcome submissions! Please consider submitting your work here: (deadline: May 22, 2022, AOE)

Hope to see you in person in July, stay tuned for more info.


(8/8) In linear or monotonic non-linear models, our theory further shows that C-Mixup improves generalization in regression.

#NeurIPS2022

Paper:

Code: (coming soon)


Welcome, Jaehong!

😍I'm super excited to announce my next journey! After a great time at KAIST, I'll be working as a Postdoctoral Research Associate at UNC Chapel Hill (@UNC) this fall, working with Prof. Mohit Bansal (@mohitban47) and faculty+students in the awesome @uncnlp and @unccs groups!

1/3


If you don't have sufficient tasks in meta-learning, come and check out our #ICLR2022 oral "Meta-learning with Fewer Tasks through Task Interpolation" for an extremely simple solution. Nice collab with @chelseabfinn, @zlj11112222

Oral: Wed 27, 9:30am PT.

Poster: Tue 26, 10:30am PT


I will be at #ICML next week, really looking forward to my first in-person conference since NeurIPS 2019.

DM me to grab a ☕️ and chat if you are around!


Thanks to all speakers: @andrewgwils, @lilianweng, @denny_zhou, @weijie444, @_beenkim, @NicolasPapernot, @megamor2, @james_y_zou


If you are interested in joining my lab, kindly complete the application form and send an email to huaxiu.recruiting@gmail.com.

- Ph.D. student and intern application form:

- Postdoc application form:


Benchmark & Code (release soon):

Paper:

Nice collaboration w/ @MarcNiethammer, @yunliunc, @hongtuzhu1, @jimeng, @james_y_zou, and others.


Very interesting!

⚡️Excited to share our new @NatureMedicine paper where we used Twitter to build a vision-language foundation #AI for #pathology

We curated >100K public Twitter threads w/ medical images+text to create PLIP for semantic search and 0-shot pred.

All our


📢 Have great papers that were rejected by ICML, or that you plan to submit to NeurIPS? You can also submit this interesting work to our Pre-training Workshop at @icmlconf. 7 days left. More details 👇


Project page:

Code:

Huggingface:

MJ-Bench dataset:

Leaderboard:

Nice collaboration w/ @rm_rafailov, @chelseabfinn


I would like to express my sincere appreciation to my advisors (@chelseabfinn, @JessieLzh), collaborators (@james_y_zou, @ericxing, @jimeng, etc.), friends, and family for their invaluable support throughout this journey. I eagerly look forward to embarking on the next chapter🎉


We are hiring! Come and join us! Feel free to ping me if you are in the job market and have any questions.


Please join us tomorrow!

The Pre-training Workshop will happen tomorrow. Join us this Saturday at #ICML2022, room Hall F, for a wonderful lineup of speakers and panelists, along with 48 amazing posters and 3 contributed talks!

Schedule:


Join and submit your work to our MetaLearn workshop at #NeurIPS2021.

Our #metalearning workshop at #NeurIPS2021 got accepted! Amazing speakers: Carlo Ciliberto, @rosemary_ke, @Luke_Metz, @MihaelaVDS, @Eleni30fillou, Ying Wei! Tentative submission deadline: Sept 17. Co-organizers: @FerreiraFabioDE, @ermgrant, @schwarzjn_, @joavanschoren, @HuaxiuYaoML


Excited to share our new work on improving the generalization of meta-learning by densifying the task distribution with a simple regularizer.


Please consider submitting up-to-8-page papers to our Meta-Learning Workshop (#MetaLearn2021) @ #NeurIPS2021 by Sep 17!

The 5th edition of the Meta-Learning Workshop (#MetaLearn2021) is taking place on Workshop Monday (13th Dec.) @ #NeurIPS2021, and the CfP is now out! Please submit your up-to-8-page research papers by Sept. 17th; more details at:


🧵[4/4] 🌟 We've conducted a human evaluation of concepts flagged as extrinsic hallucinations by ChatGPT. These flags are mostly accurate, enhancing transparency and trustworthiness in text generation. A step forward for #TrustworthyAI! 🤖🔍


We have a fantastic lineup of speakers: @mengyer, @chelseabfinn, @KEggensperger, @aggielaz, @percyliang, @TheGregYang, @giffmana.
