Cihang Xie

@cihangxie

Followers

2,327

Following

797

Media

83

Statuses

609

Assistant Professor, @BaskinEng ; PhD, @JHUCompSci ; @Facebook Fellowship Recipient; 🐱

Santa Cruz, CA

Joined July 2014

Don't wanna be here?

Send us removal request.

Explore trending content on Musk Viewer

Georgia

• 349198 Tweets

Texas

• 283706 Tweets

Yankees

• 240299 Tweets

Never Trump

• 161662 Tweets

Arnold Palmer

• 137238 Tweets

Presiden

• 100902 Tweets

World Series

• 91818 Tweets

WANG YIBO IN THE GT FINALS

• 67199 Tweets

Juan Soto

• 61803 Tweets

期日前投票

• 61213 Tweets

CHENLE VICTORIOUS FIRST THROW

• 54629 Tweets

Stanton

• 44933 Tweets

GALA EN EL AUDITORIO

• 40178 Tweets

#DOMELIVE_Atlantis

• 33464 Tweets

Kirby

• 29215 Tweets

Rayados

• 25885 Tweets

#菊花賞

• 23703 Tweets

ZETA

• 23658 Tweets

#刀剣乱舞ONLINEもうすぐ十周年

• 22068 Tweets

#かぼちゃ大作戦シルエットクイズ

• 22021 Tweets

Ewers

• 19277 Tweets

Tigres

• 18822 Tweets

gerard

• 17564 Tweets

ダノンデサイル

• 16872 Tweets

アーバンシック

• 13840 Tweets

ねこあつめ2

• 11378 Tweets

コスモキュランダ

• 10216 Tweets

Last Seen Profiles

Pinned Tweet

Big thanks to

@_akhaliq

for the retweet! 🚀

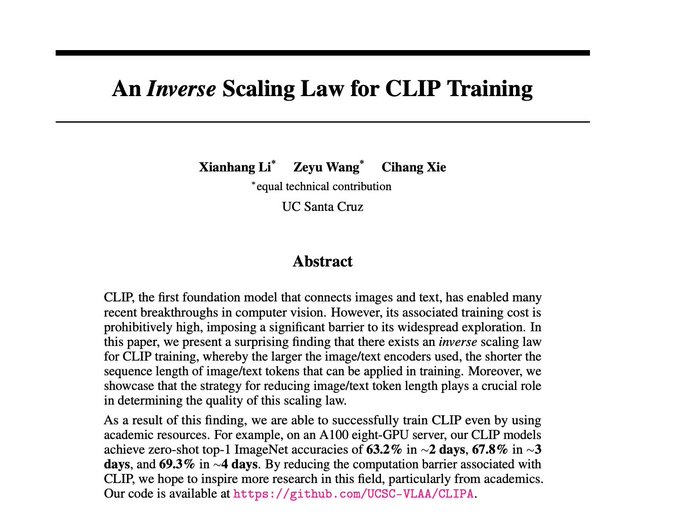

We are very excited about presenting 𝑹𝒆𝒄𝒂𝒑-𝑫𝒂𝒕𝒂𝑪𝒐𝒎𝒑-1𝑩, where we use a 𝐋𝐋𝐚𝐌𝐀-𝟑-powered LLaVA model to recaption the entire 𝟏.𝟑 𝐛𝐢𝐥𝐥𝐢𝐨𝐧 images from DataComp-1B. Compared to the original textual descriptions,

2

19

89

Thanks for tweeting,

@ak92501

! Regarding robustness, we surprisingly find that

1) ViTs are NO MORE robust than CNNs on adversarial examples; training recipes matter

2) ViTs LARGELY outperform CNNs on out-of-distribution samples; self-attention-like architectures matter

4

116

562

📢 Introducing CLIPA-v2, our latest update to the efficient CLIP training framework! 🚀✨

🏆 Our top-performing model, H/14

@336x336

on DataComp-1B, achieves an impressive zero-shot ImageNet accuracy of 81.8!

⚡️ Plus, its estimated training cost is <$15k!

1

26

107

If you plan to "attend"

@CVPR

, check out our Tutorial on AdvML in Computer Vision (). Our amazing speakers will cover both the basic backgrounds & their most recent research in AdvML.

Save the date (09:55 am - 5:30 pm ET, June 19), and we will see you soon

0

31

96

Last year, we showed ViTs are inherently more robust than CNNs on OOD.

While our latest work challenges this point---we actually can build pure CNNs with stronger robustness than ViTs. The secrets are:

1) patchifying inputs

2) enlarging kernel size

3) reducing norm & act layers

Thanks for tweeting,

@ak92501

! Regarding robustness, we surprisingly find that

1) ViTs are NO MORE robust than CNNs on adversarial examples; training recipes matter

2) ViTs LARGELY outperform CNNs on out-of-distribution samples; self-attention-like architectures matter

4

116

562

2

14

88

If you decide to apply for Ph.D. programs this year and are interested in adversarial machine learning, computer vision & deep learning, please consider my group

@ucsc

@UCSC_BSOE

. We will have multiple openings for Fall 2021.

No GRE required & DDL is Jan 11, 2021

RT Appreciated

👩🏾💻

@ucsc

’s Computer Science and Engineering department is accepting applicants to its Ph.D. and M.S. programs for Fall 2021! Applications due Jan. 11, 2021. No GRE required.

#BaskinEngineering

Learn more & apply:

0

9

23

2

25

71

Disappointed to see nearly 𝐢𝐝𝐞𝐧𝐭𝐢𝐜𝐚𝐥 reviews for two 𝐯𝐞𝐫𝐲 𝐝𝐢𝐟𝐟𝐞𝐫𝐞𝐧𝐭 submissions to

#NeurIPS

😮💨😮💨

5

1

61

📢

#CallForPaper

AROW Workshop is back

@eccvconf

! This year we have a great lineup of 9 speakers, and set 3 best paper prizes ($10k each, sponsored by

@ftxfuturefund

).

For more information, please check

2

16

57

Our best model, G/14 with 83.0% zero-shot ImageNet top-1 accuracy, is released at both OpenCLIP () and CLIPA ()

v2.23.0 of OpenCLIP was pushed out the door! Biggest update in a while, focused on supporting SigLIP and CLIPA-v2 models and weights. Thanks

@gabriel_ilharco

@gpuccetti92

@rom1504

for help on the release, and

@bryant1410

for catching issues. There's a leaderboard csv now!

2

18

147

0

11

56

🔥 Introducing HQ-Edit, a dataset with high-res images & detailed and aligned image editing instructions. Our fine-tuned InstructPix2Pix delivers superior editing performance.

Dataset:

Demo:

Project:

0

11

53

We are hosting a workshop on “Neural Architectures: Past, Present and Future” at

@ICCV_2021

, with 7 amazing speakers.

We invite submissions on any aspects of neural architectures. Both long paper and extended abstract are welcomed. Visit for more details

1

11

48

Check out our latest work on studying the effects of activation functions in adversarial training.

We found that making activation functions be SMOOTH is critical for obtaining much better robustness.

Joint work with

@tanmingxing

,

@BoqingGo

,

@YuilleAlan

and

@quocleix

.

1

10

36

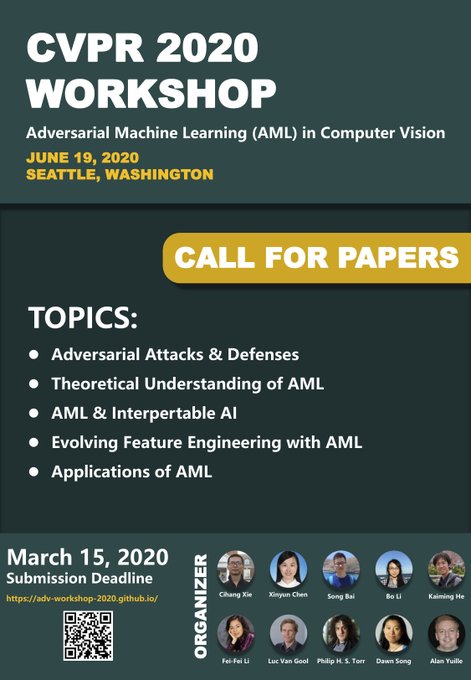

Our Adversarial Machine Learning Workshop

#CVPR2020

is tomorrow, with 9 amazing speakers:

@YuilleAlan

,

@aleks_madry

,

@EarlenceF

,

@MatthiasBethge

, Laurens van der Maaten,

@pinyuchenTW

, Cho-Jui Hsieh,

@BoqingGo

and

@tdietterich

.

Come and join us via

4

12

36

An incredible experience at

#ICML23

--- meeting over 50 Email/Twitter friends in person for the first time was truly amazing. Building real connections with real people (plus beautiful Hawaii) makes ICML unforgettable! 🥳🥳

0

0

32

I am happy to share that I will be joining

@UCSC_BSOE

as an Assistant Professor in the Computer Science and Engineering Department in Spring 2021! Thanks everyone for the great support along my Ph.D. journey 😀

2

0

32

Just arrived

#ICML2023

Looking forward to catching up with old friends and making new friends

2

0

31

The afternoon session of our

@CVPR

Workshop on The Art of Robustness starts now in Room 203-205!! Our first speaker is Nicholas Carlini

1

2

31

Happy to share that our D-iGPT is accepted by

#ICML2024

. By only using public datasets for training, our best model strongly attains 𝟗𝟎.𝟎% ImageNet accuracy with a vanilla ViT-H.

Code & Model:

Huge congrats to

@RenSucheng

🥳

1

10

32

Interested in benchmarking your NAS algorithm? Check out our

@CVPR

1st lightweight NAS challenge () with 3 competition tracks: Supernet Track, Performance Prediction Track, Unseen Data Track.

🏆The prize pool is $2,500 for each competition track

1

9

26

Our Adversarial Machine Learning in Computer Vision Workshop (co-organized with

@xinyun_chen_

,

@SongBai_

, Bo Li, Kaiming He,

@drfeifei

, Luc Van Gool, Philip Torr(

@OxfordTVG

),

@dawnsongtweets

and Alan Yuille) is now accepting submissions, with a BEST PAPER PRIZE!

DDL: Mar 15 AoE

1

8

25

I will co-advise 1-2 students with

@yuyinzhou_cs

. If you are interested, please submit your application via

I am seeking to hire 3-5 Ph.D. students

@ucsc

@BaskinEng

. If you are interested in computer vision, machine learning, or AI for Healthcare, feel free to reach out and to let me know to look for your application!

Application deadline: Jan 10 (GRE not required)

RTs appreciated

42

423

736

0

3

25

My fiancée

@yuyinzhou_cs

and I do PhD together with

@YuilleAlan

from 2016-2020. I think both of us do good jobs :)

1

0

25

Checking out our TMLR paper on how to scale down CLIP models

0

2

25

Our ICCV Workshop on Neural Architectures: Past, Present and Future will start soon (8:55 am EDT, Oct 11), with 7 amazing speakers.

Join us via Zoom from

@ICCV_2021

platform! We will also be streaming live on Youtube!

For more info, please visit

0

3

21

Thank you

@trustworthy_ml

for featuring me!

1/ 📢 In this week’s TrustML-highlight, we are delighted to feature Cihang Xie

@cihangxie

🎊🎉

Dr. Xie is an Assistant Professor of Computer Science and Engineering at the University of California, Santa Cruz.

1

4

18

0

1

22

We released CLIPA ~1 year ago, and it now has 𝟏𝟔.𝟖𝐤 𝐌𝐨𝐧𝐭𝐡𝐥𝐲 𝐃𝐨𝐰𝐧𝐥𝐨𝐚𝐝𝐬! Huge congratulations to the leading authors Xianhang and Zeyu 🥳

1

0

20

Just note that our 𝑹𝒆𝒄𝒂𝒑-𝑫𝒂𝒕𝒂𝑪𝒐𝒎𝒑-1𝑩 is now trending on Huggingface Dataset! 🌟 Check out the thread below for an introductory overview of our work. 👇

HF link:

Big thanks to

@_akhaliq

for the retweet! 🚀

We are very excited about presenting 𝑹𝒆𝒄𝒂𝒑-𝑫𝒂𝒕𝒂𝑪𝒐𝒎𝒑-1𝑩, where we use a 𝐋𝐋𝐚𝐌𝐀-𝟑-powered LLaVA model to recaption the entire 𝟏.𝟑 𝐛𝐢𝐥𝐥𝐢𝐨𝐧 images from DataComp-1B. Compared to the original textual descriptions,

2

19

89

0

4

19

We see another significant boost in the Monthly Downloads of our CLIPA models, from 𝟏𝟔.𝟖𝐤 to 𝟒𝟖.𝟐𝐤. (weights are available at )

We also plan to release stronger CLIP models (especially in Zero-Shot Cross-Modal Retrieval) in the coming weeks. Stay

1

5

17

Excited to share our newest project: developing a comprehensive safety evaluation benchmark for Vision-LLMs.

Also, a shout-out to our awesome project leader,

@HaoqinT

, who will apply for PhD this year. He is strong and always energetic about research. Go get him!

2

4

18

Thank

@_akhaliq

for highlighting our work.

We show autoregressive pretraining can create powerful vision backbones. E.g., our ViT-L attains an impressive 89.5% ImageNet accuracy. Larger models are coming soon.

Big shoutout to

@RenSucheng

for leading this incredible project! 🌟

0

1

18

Our unicorn benchmark is accepted by

#ECCV2024

. Kudos to our incoming PhD students

@HaoqinT

and Zijun.

Excited to share our newest project: developing a comprehensive safety evaluation benchmark for Vision-LLMs.

Also, a shout-out to our awesome project leader,

@HaoqinT

, who will apply for PhD this year. He is strong and always energetic about research. Go get him!

2

4

18

0

6

18

Our

@ICCVConference

Workshop on Adversarial Robustness is now accepting submissions!

📢 [Call For Papers] We invite participants to submit their work to the 4th Workshop on Adversarial Robustness In the Real World, ICCV 2023, France!

📷 Workshop Website:

#AROW

#ICCV2023

#AdversarialRobustness

#DeepLearning

#ComputerVision

#Paris

1

5

12

0

4

18

proud to see my students

@HaoqinT

and

@BingchenZhao

contribute to this interesting open-source project

0

3

17

D-iGPT is accepted as an Oral presentation 😎 Congratulations again to

@RenSucheng

on completing this exciting project.

Happy to share that our D-iGPT is accepted by

#ICML2024

. By only using public datasets for training, our best model strongly attains 𝟗𝟎.𝟎% ImageNet accuracy with a vanilla ViT-H.

Code & Model:

Huge congrats to

@RenSucheng

🥳

1

10

32

0

2

17

Lastly, huge kudo to the first author, Feng Wang from

@JHUCompSci

, for leading this interesting project.

arxiv:

project page:

1

4

17

@mcgenergy

@quocleix

@DigantaMisra1

Besides the functions we listed in the paper, we actually have tried a lot of other smooth activation functions (including Mish). The general conclusion is as long as your function is a good smooth approximation of ReLU, it will work.

1

1

16

I will be giving a talk entitled “Not All Networks Are Born Equal for Robustness” at this

@NeurIPSConf

competition. Check it out!

Which

#AI

#ComputerVision

models generalize and adapt best to new domains with different viewpoints, style, or missing/novel categories? Find out on Tue Dec 7 20:00 GMT at our

@NeurIPSConf

ViSDA'21 competition! Hear from winners and speakers; more at

0

8

36

0

0

16

Thanks

@arankomatsuzaki

for retweeting our work.

A detailed introduction of this project can be found at

0

3

16

Cannot wait to join

Happy first day of classes!!!

This year,

@ucsc

Baskin Engineering welcomes nine new faculty with specialties in statistical computing, computer security, human-robot interaction, wireless communications, serious games, AI, and more. Meet them here:

1

5

24

0

0

15

🚀 Excited about releasing MedTrinity-25M, a large-scale medical multimodal dataset with over 25 million images across 10 modalities, with multigranular annotations for more than 65 diseases

Try it at

0

2

14

Exciting to share our

@NeurIPSConf

paper on defending against score-based query attacks 🥳

The leading author,

@MrSizheChen

, will apply for Ph.D. programs this year. He is a strong researcher and awesome collaborator; try to get him 😉

Excited that our work with Prof.

@cihangxie

has been accepted by

#NeurIPS2022

after an unforgettable rebuttal (2 4 4 5 -> 7 7 4 7). Looking forward to seeing you!

3

0

10

1

5

13

an interesting work from our incoming PhD

@xiaoke_shawn_h

1

1

13

We offer a best paper prize and several travel grants!! The submission deadline is Feb 26, 2021 AoE.

Our Security and Safety in Machine Learning Systems Workshop at

@iclr_conf

(co-organized with Cihang Xie (

@cihangxie

), Ali Shafahi, Bo Li, Ding Zhao (

@zhao__ding

), Tom Goldstein, and Dawn Song (

@dawnsongtweets

)) is now accepting submissions, with a best paper prize! DDL: Feb 26.

1

6

26

0

6

13

Our

@CVPR

adversarial machine learning workshop starts now at EAST 3 meeting room. The first speaker is

@aleks_madry

, on talking about “Preventing Data-driven Manipulation”

2

2

11

👇This is exactly how I get my FAIR internship in 2018 summer

0

0

12

Proud Advisor Moment - Huge congratulations to my student, Xianhang Li, for winning the esteemed 2024-25 Jack Baskin & Peggy Downes-Baskin Fellowship. Your hard work and dedication truly shine through! 🥳🥳

@BaskinEng

1

1

12

I will be at CLIPA poster tomorrow morning from 10:45 am - 12:45 pm

Also I will be at

#NeurIPS2023

until this Saturday (Dec 16). Happy to chat with old friends and make new connections

0

0

11

Tomorrow (June 23) afternoon, we will be presenting our

@CVPR

work 𝐀 𝐒𝐢𝐦𝐩𝐥𝐞 𝐃𝐚𝐭𝐚 𝐌𝐢𝐱𝐢𝐧𝐠 𝐏𝐫𝐢𝐨𝐫 𝐟𝐨𝐫 𝐈𝐦𝐩𝐫𝐨𝐯𝐢𝐧𝐠 𝐒𝐞𝐥𝐟-𝐒𝐮𝐩𝐞𝐫𝐯𝐢𝐬𝐞𝐝 𝐋𝐞𝐚𝐫𝐧𝐢𝐧𝐠 at 126b.

Come visit! Both

@HuiyuWang3

and Xianhang Li will be there!

1

1

11

I will be (in-person) talking about this work at CVPR'23 Adversarial Machine Learning Workshop () tomorrow, from 10:40 am - 11:10am.

📢 Introducing CLIPA-v2, our latest update to the efficient CLIP training framework! 🚀✨

🏆 Our top-performing model, H/14

@336x336

on DataComp-1B, achieves an impressive zero-shot ImageNet accuracy of 81.8!

⚡️ Plus, its estimated training cost is <$15k!

1

26

107

0

2

11

Want to make your networks get better performance? Try AdvProp

#CVPR2020

! By using adversarial examples, AdvProp achieves 85.5% accuracy on ImageNet. Join me via at 10AM PT TODAY!

Joint work with

@tanmingxing

,

@BoqingGo

,JiangWang,

@YuilleAlan

and

@quocleix

0

2

11

Our Workshop on Security and Safety in Machine Learning Systems is tomorrow, starting at 8:45 am PT

Join us at

Our SecML Workshop at

@iclr_conf

is tomorrow, with 11 amazing speakers/panelists:

@zicokolter

,

@AdamKortylewski

,

@aleks_madry

,

@catherineols

,

@AlinaMOprea

, Aditi Raghunathan, Christopher Ré,

@RaquelUrtasun

, David Wagner,

@YuilleAlan

,

@ravenben

.

Link:

1

12

49

0

3

11

Just found my covid test is positive. If you talked to me at

#CVPR

, maybe should closely monitor your health situation in the next few days….. sorry

2

2

11

Today is the submission DDL of our

@eccvconf

AROW Workshop!!

𝐴𝑠 𝑎 𝑟𝑒𝑚𝑖𝑛𝑑𝑒𝑟, 𝑤𝑒 𝑤𝑖𝑙𝑙 𝑠𝑒𝑙𝑒𝑐𝑡 3 𝑏𝑒𝑠𝑡 𝑝𝑎𝑝𝑒𝑟𝑠, 𝑎𝑤𝑎𝑟𝑑𝑖𝑛𝑔 𝑒𝑎𝑐ℎ 𝑤𝑖𝑡ℎ 𝑎 $10,000 𝑝𝑟𝑖𝑧𝑒.

📢

#CallForPaper

AROW Workshop is back

@eccvconf

! This year we have a great lineup of 9 speakers, and set 3 best paper prizes ($10k each, sponsored by

@ftxfuturefund

).

For more information, please check

2

16

57

0

2

9

Attending

#CVPR2020

?

Please check out our work on fooling object detectors in the physical world!

Q&A Time: 10:00–12:00 and 22:00–00:00 PT on June 16

Link:

Code:

Paper:

We also have a very cool demo👇

1

2

9

Missed our

#CVPR2020

Adversarial Machine Learning Workshop? No worries, the full recording is available at

0

1

9

We will be presenting CLIPA at

@NeurIPSConf

on Tuesday, Dec 12.

This work shows that larger models are better at dealing with inputs at reduced token length, enabling fast training speed.

The code is released at , including G/14 at 83.0 zero-shot acc.

UCSC AI Group will be at

#NeurIPS23

and

#EMNLP23

, presenting research covering LLMs, T2I Generation/Editing/Evaluation, VLM Efficiency, Embodied Agents, Explainable AI, Machine Unlearning, Fairness ML, etc.

Check out the blogpost for detailed schedule: .

2

5

38

1

0

8

Anyway, I am very disappointed with

#neurips2020

--- reject your works without peer-reviews AND do not allow you to do a rebuttal.

If my works cannot be properly reviewed in a conference, then what is the value of submitting a paper there?

1

2

8

Please consider submitting your works to this workshop! We will also offer two Titan RTX GPUs for prize winners!

We have a great lineup of speakers at our upcoming workshop on the importance of Adversarial Robustness in the Real World () at

#ECCV2020

with

@MatthiasBethge

@YuilleAlan

@honglaklee

@pappasg69

@RaquelUrtasun

@judyfhoffman

. Consider submitting a paper!

1

16

43

1

0

8

Our "Shape-Texture Debiased Neural Network Training" paper is just accepted by ICLR. Congrats

@yingwei_li

github:

0

0

8

Meet Sucheng, a remarkable first-year PhD student at

@JHUCompSci

! 🌟 Sucheng has an impressive 16 publications, covering multimodal learning, self-supervised learning, and knowledge distillation. Check his work at with CV at (5/n)

1

0

8

Say hello to Junfei Xiao from

@JHUCompSci

, a second-year PhD student with expertise in segmentation, multimodal learning, and large vision-language model, boasting 8 publications. 🚀 Discover his profile at and access CV at (4/n)

2

0

7

If you are working on robustness, please consider submitting your work(s) to our

@eccvconf

AROW workshop. 𝐖𝐞 𝐰𝐢𝐥𝐥 𝐬𝐞𝐥𝐞𝐜𝐭 𝐓𝐇𝐑𝐄𝐄 𝐛𝐞𝐬𝐭 𝐩𝐚𝐩𝐞𝐫 𝐚𝐰𝐚𝐫𝐝𝐬, 𝐞𝐚𝐜𝐡 𝐰𝐢𝐭𝐡 𝐚 $𝟏𝟎,𝟎𝟎𝟎 𝐩𝐫𝐢𝐳𝐞!

CMT site:

DDL: Aug 1, 2022

📢

#CallForPaper

AROW Workshop is back

@eccvconf

! This year we have a great lineup of 9 speakers, and set 3 best paper prizes ($10k each, sponsored by

@ftxfuturefund

).

For more information, please check

2

16

57

0

5

7

The devil is in the details

Implementation details affect the robust accuracy of adversarially trained models more than expected. For example, a slightly different value of weight decay can send TRADES back to

#1

in the AutoAttack benchmark. A unified training setting is necessary.

3

6

30

0

0

7

The submission deadline is August 1, 2021 Anywhere on Earth (AoE). Both long paper (8-page) and extended abstract (4-page) are welcomed!!

CMT site:

We are hosting a workshop on “Neural Architectures: Past, Present and Future” at

@ICCV_2021

, with 7 amazing speakers.

We invite submissions on any aspects of neural architectures. Both long paper and extended abstract are welcomed. Visit for more details

1

11

48

0

1

7

Our SecML workshop starts now!

After

@xinyun_chen_

's opening remark,

@AlinaMOprea

will talk about "Machine Learning Integrity in Adversarial Environments" at 9am PT.

The link is here 👇

0

3

6

arxiv links are and

Both works are led/co-led by the amazing intern

@llleizhang

. He is applying for PhD positions 📢📢 Hiring him 😉

1

1

4

@pinyuchenTW

@ak92501

Hi Pin-Yu, thanks for sharing this interesting work. We will definitely take a look and discuss it in the next version :)

0

0

5

Same here, with two very vague comments: (1) not supported with sufficient empirical evidence, or important baselines are missing; (2) not sufficiently positioned with respect to prior work, either in terms of the presentation or the empirical validation.

1

0

5