Weijie Su

@weijie444

Followers: 5K · Following: 4K · Media: 49 · Statuses: 794

Associate Professor @Wharton & CS Penn. Co-director @Penn Research in #MachineLearning. PhD @Stanford. #DeepLearning #Statistics #Privacy #Optimization.

Philadelphia, PA

Joined September 2011

New research (w/ the amazing @hangfeng_he): "A Law of Next-Token Prediction in Large Language Models". LLMs rely on next-token prediction (NTP), but their internal mechanisms seem chaotic, and it's difficult to discern how each layer processes data for NTP. Surprisingly, we discover a physics-like law governing NTP:

How does training time in #deeplearning depend on the learning rate? A new paper uncovers a fundamental *distinction* between #nonconvex and convex problems from this viewpoint, showing why learning rate decay is *more* powerful in nonconvex settings.

It feels so good to write single-author papers! In this paper, we introduce a phenomenological model toward understanding why #deeplearning is so effective. It is called *neurashed* because it was inspired by watersheds. 8 pages and a ~15-minute read!

A postdoc position is available. Pls shoot me an email if you're interested in any of the following three topics: the theoretical foundation of #deeplearning, #privacy-preserving machine learning, and game-theoretic approaches to estimation. I'd really appreciate RTs🙏 1/4.

Good news while attending #ICML2023: Our deep learning "theory" paper *A Law of Data Separation in Deep Learning* got accepted by PNAS! w/ the amazing @hangfeng_he.

Very honored to receive a 2020 Sloan Research Fellowship #sloanfellow. Many thanks to collaborators, colleagues @Wharton @Penn and students for help & support along the way. Excited to use the funding from @SloanFoundation to further my research in #MachineLearning and #DataScience.

How does #deeplearning separate data throughout *all* layers? With @hangfeng_he, we discovered a precise LAW emerging in AlexNet, VGG, and ResNet: the law of equi-separation. What is this law about? Can it guide design, training, and model interpretation? 1/n

Happy to share that this paper is accepted to AOS. Excited not just for the acceptance, but (more) for the recognition of watermarking for LLMs as a topic in statistics. Watermarking is (basically) a pure stats problem—so many ideas in stats will light the path forward! 🚀📊 #AI.

New Research: Watermarking large language models is a principled approach to combatting misinformation, but how do we evaluate the statistical efficiency of watermarks and design even more powerful detection methods? 🤔 Our new paper addresses these challenges using a new framework. 1/n

I'm very humbled to receive the 2022 Peter Gavin Hall IMS Early Career Prize. Thanks to my advisor, mentors, colleagues, and students who have helped me make it possible.

Weijie Su @weijie444 Wins Peter Gavin Hall IMS Early Career Prize: "for fundamental contributions to the development of privacy-preserving data analysis methodologies; for groundbreaking theoretical advancements in understanding gradient-based optimization methods; 1/5

Is it worth spending 4 hours making a poster for #NeurIPS2021? Perhaps NOT for my paper "You Are the Best Reviewer of Your Own Papers: An Owner-Assisted Scoring Mechanism" cos from my experience there'll be ~5 ppl each spending 2mins skimming my poster in Gather Town on Dec 7 evening EST.

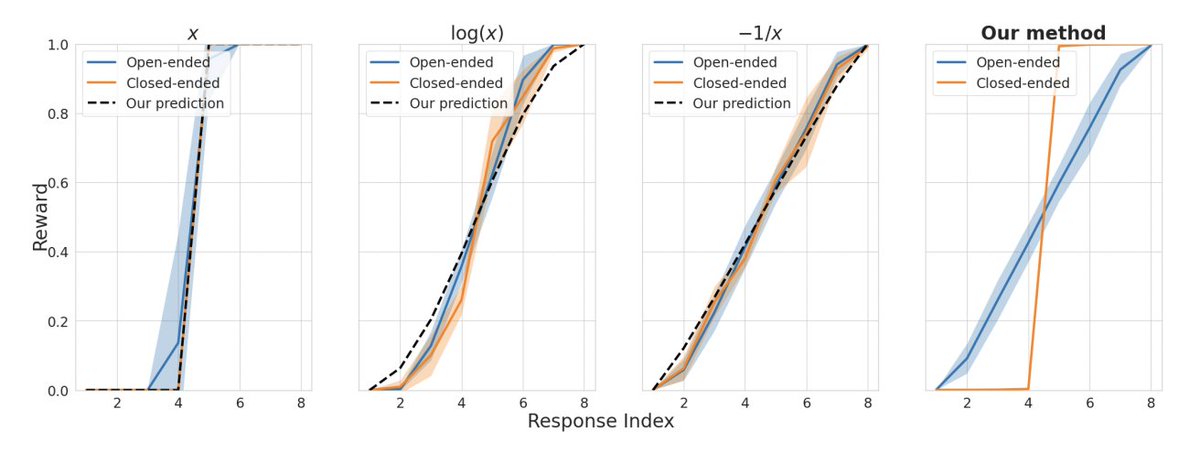

In a #NeurIPS2021 paper, we introduced a *phenomenological* model toward understanding deep learning using SDEs, showing training is *successful* iff local elasticity exists: backpropagation has a larger impact on same-class examples.

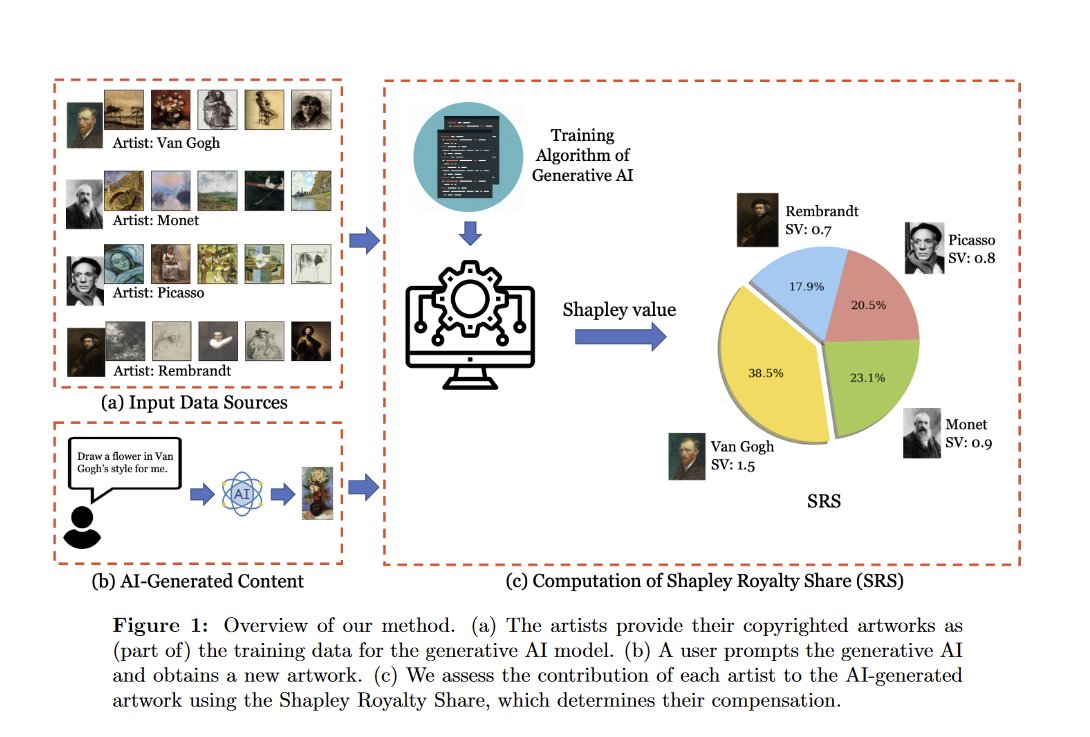

Ongoing lawsuits against GenAI firms over possible use of #copyrighted data for training raise vital questions for our society. 🤖⚖️ How can we address the copyright challenges? New research proposes a solution: "An Economic Solution to Copyright Challenges of Generative AI"

Heading to the @TheSIAMNews Conference on Mathematics of Data Science #SIAMMDS22. Looking forward to seeing many colleagues and attending great talks.

Very excited to give a short course on large language models at #JSM2024 in Portland, w/ Emily Getzen and @linjunz_stat! AI for Stat and Stat for AI! @AmstatNews

📢 #ICML2024 authors! Help improve ML peer review! 🔬📝 Check your inbox for an email titled "[ICML 2024] Author Survey" and rank your submissions. 🏆📈 Your confidential input is crucial, and won't affect decisions. 🔒✅ Survey link in email or "Author Tasks" on OpenReview.

How to maintain the power of #DeepLearning while preserving #privacy? We recently applied Gaussian differential privacy to training neural nets, obtaining improved utility. Check out the paper, and happy Thanksgiving!

One-year postdoc position available in my group. Ideal for someone on the job market who wishes to defer the tenure clock. Pls email me if you are interested in #privacy, #deeplearning theory, #optimization, high-dimensional statistics, and other areas of mutual interest.

@HighFreqAsuka Agreed. I would emphasize 'mathematical thinking' rather than math formulae, equations, and inequalities.

#NeurIPS reviews just came out. They confirmed that "I am the best reviewer of my own papers."☹️

I’ll be giving a talk “When Will You Become the Best Reviewer of Your Own Papers?” at @iccopt2022 tomorrow starting at 4:05 in Rauch 137. #optimization #iccopt2022.

Our paper "Understanding the Acceleration Phenomenon via High-Resolution Differential Equations" was accepted by Mathematical Programming. Thanks to my wonderful coauthors Bin, @SimonShaoleiDu, and Mike!

Heading to Vancouver tomorrow for #NeurIPS2024, Dec 10-14! Excited to reconnect with colleagues and enjoy Vancouver's seafood! 🦐.

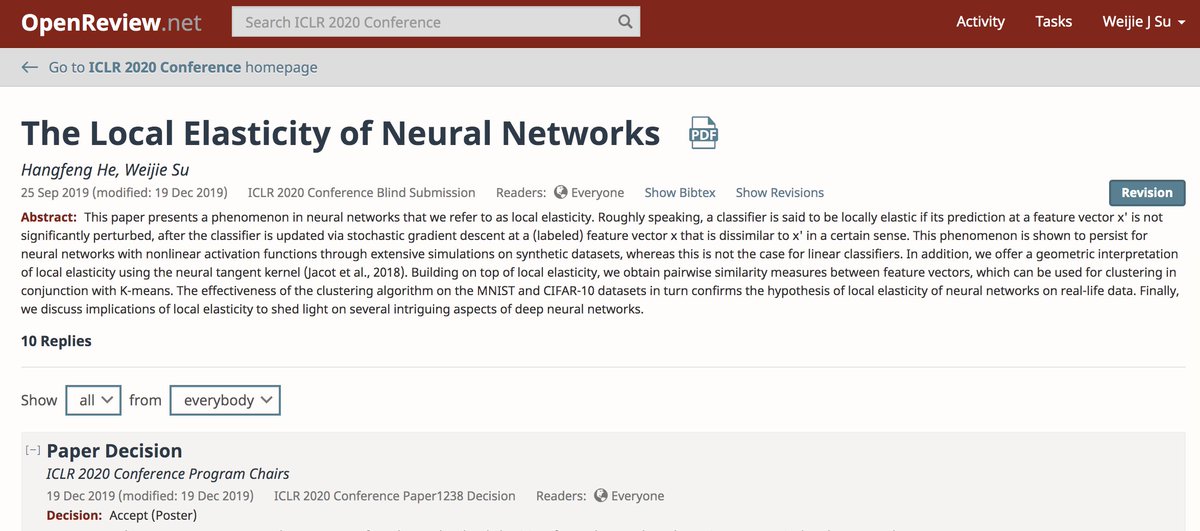

Our *local elasticity* paper was accepted to #ICLR2020! Takeaway: DNNs learn locally and understand elastically (slides available too). My first #DeepLearning paper, and my first paper w/o theorems. Excited to visit the land of the Queen of Sheba in 2020!

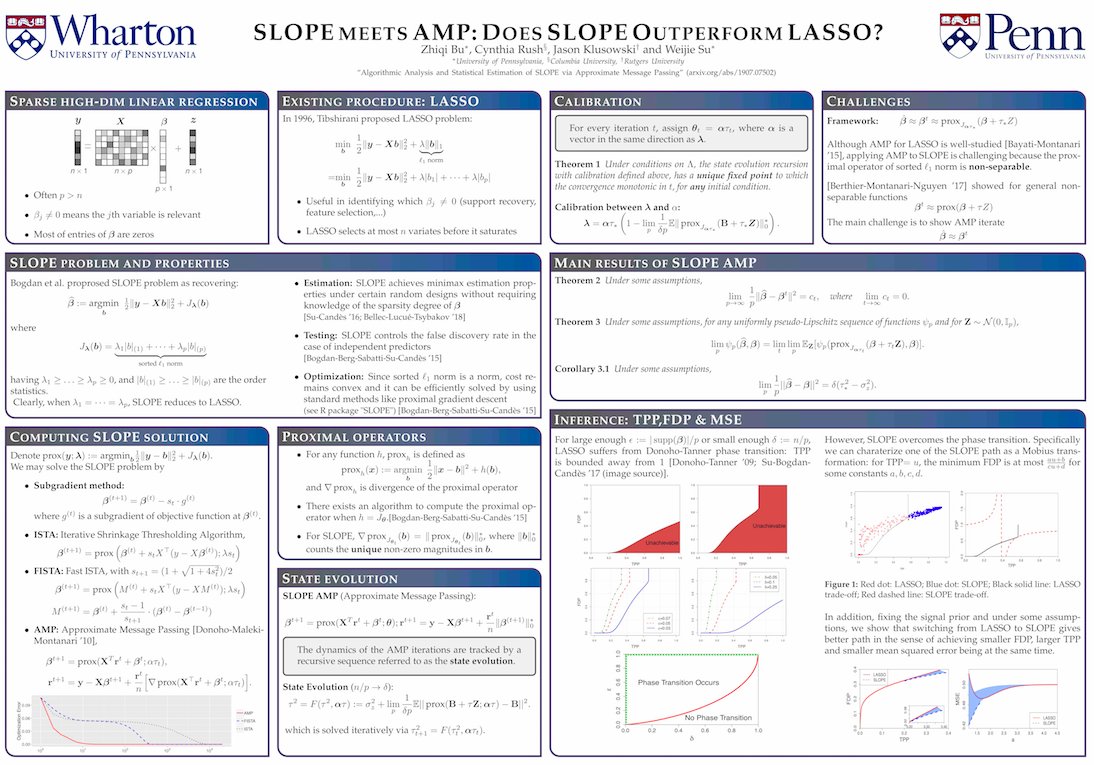

A new paper with Zhiqi, @JKlusowski, and @CindyRush on the tradeoff between type I and type II errors of SLOPE, asking: is there any fundamental difference between l1 and sorted l1 regularization? Our analysis leverages approximate message passing (AMP), developed by Donoho, Maleki, and @Andrea__M.
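
For readers new to SLOPE: its penalty is the sorted l1 norm, which pairs the largest coefficient magnitude with the largest weight in a non-increasing sequence lam_1 >= lam_2 >= ... >= lam_p >= 0; with all weights equal, it reduces to the usual l1 (lasso) penalty. Below is a minimal Python sketch of the penalty alone, based on the standard definition rather than on the paper's code; the function name `sorted_l1_norm` is hypothetical.

```python
def sorted_l1_norm(beta, lam):
    """Sorted l1 (SLOPE) penalty: sum_i lam[i] * |beta|_(i).

    |beta|_(1) >= |beta|_(2) >= ... are the coefficient magnitudes sorted in
    decreasing order; lam must be non-negative and non-increasing.
    """
    if len(beta) != len(lam):
        raise ValueError("beta and lam must have the same length")
    if any(w < 0 for w in lam) or any(a < b for a, b in zip(lam, lam[1:])):
        raise ValueError("lam must be non-negative and non-increasing")
    # The largest weight multiplies the largest magnitude, and so on down.
    mags = sorted((abs(b) for b in beta), reverse=True)
    return sum(w * m for w, m in zip(lam, mags))


beta = [0.5, -3.0, 1.0]
print(sorted_l1_norm(beta, [2.0, 1.0, 0.5]))  # 2*3 + 1*1 + 0.5*0.5 = 7.25
print(sorted_l1_norm(beta, [1.0, 1.0, 1.0]))  # equal weights give the plain l1 norm: 4.5
```

The equal-weights call is the lasso special case, which is why the l1 vs. sorted l1 comparison is a natural question.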

Too much competition leads to inefficiency, as in the #gaokao exam that 10M students will take next week. That's the price we pay for involution. Surprisingly, our paper shows that this price of competition also appears in high-dimensional linear models. 1/n

@qixing_huang Exactly! If everyone were at the Einstein level, doing mediocre research would win you a Nobel prize!

I will be giving a talk at @Yale @yaledatascience this Monday introducing a new notion of differential privacy. This was inspired by the hypothesis testing interpretation of privacy, joint work with Jinshuo and @Aaroth.
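
The hypothesis testing view behind Gaussian differential privacy (GDP) measures privacy by how hard it is to tell two neighboring datasets apart, benchmarked against telling N(0, 1) from N(mu, 1). A mechanism is mu-GDP if this is at least as hard as the Gaussian benchmark, whose trade-off function is G_mu(alpha) = Phi(Phi^{-1}(1 - alpha) - mu), and composing mu_i-GDP mechanisms gives sqrt(sum mu_i^2)-GDP. A minimal sketch using only the Python standard library, based on the published GDP definitions; the function names are hypothetical and this is not the authors' code.

```python
from math import sqrt
from statistics import NormalDist

_STD = NormalDist()  # standard normal N(0, 1)


def gdp_tradeoff(alpha, mu):
    """G_mu(alpha): smallest type II error of a level-alpha test of N(0,1) vs N(mu,1)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if alpha == 0.0:  # a test that never rejects always misses N(mu, 1)
        return 1.0
    if alpha == 1.0:  # a test that always rejects never misses
        return 0.0
    return _STD.cdf(_STD.inv_cdf(1.0 - alpha) - mu)


def compose_gdp(mus):
    """Composition of mu_i-GDP mechanisms is sqrt(sum of mu_i^2)-GDP."""
    return sqrt(sum(m * m for m in mus))


print(gdp_tradeoff(0.05, 0.0))  # mu = 0 is perfect privacy: 1 - alpha
print(gdp_tradeoff(0.05, 1.0))  # about 0.74: harder to hide, smaller type II error
print(compose_gdp([3.0, 4.0]))  # 5.0
```

Larger mu means the two hypotheses are easier to distinguish, so less privacy; mu = 0 recovers the trivial trade-off 1 - alpha.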

Attending #NeurIPS2023 and finding it more exciting than in past years. But each individual work/poster/oral talk seems less interesting. Does anyone else have the same feeling?

I’ll be giving a talk “When Will You Become the Best Reviewer of Your Own Papers?” at @iccopt2022 tomorrow starting at 4:05 in Rauch 137. #optimization #iccopt2022.

I'll be attending #neurips2022 from Nov 29-Dec 1. Looking forward to seeing old and new friends out there, as well as my former adviser Emmanuel Candes' plenary talk on conformal prediction!

ICML 2024 authors: Please participate in our study on improving peer review in ML! Rank your submissions confidentially under "Author Tasks" on OpenReview. More details at Thank you! @ENAR_ibs.

You have received an email titled "[ICML 2024] Author Survey" with a link to confidentially rank your submissions based on their relative quality. The survey can also be accessed under "Author Tasks" on OpenReview.

Again, my favorite papers got rejected. Really hope that I can "review" my own papers myself:

Verdict from @icmlconf: 3 out of 3 rejected. If I go by tweet statistics, ICML has rejected every single paper this year 🤣

The Isotonic Mechanism was tested in an experiment at @icmlconf 2023, which required authors to provide rankings of their submissions in order to compute rank-calibrated review scores. A challenge is how to deal with multiple coauthors. A (not perfect but performant) solution:
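
In the single-author case, the Isotonic Mechanism from "You Are the Best Reviewer of Your Own Papers" can be read as least-squares isotonic regression: the raw review scores are projected onto the set of score vectors that respect the author's ranking. A minimal sketch under that reading, using the pool-adjacent-violators algorithm; the helper names are hypothetical, and the multi-coauthor extension mentioned in the tweet is not shown.

```python
def pav_nonincreasing(y):
    """Least-squares fit of y by a non-increasing sequence (pool adjacent violators)."""
    blocks = []  # each block is [sum of values, count]
    for v in y:
        blocks.append([v, 1])
        # Merge while the previous block's mean is below the newest block's mean,
        # i.e. while the non-increasing constraint is violated.
        while len(blocks) > 1 and blocks[-2][0] / blocks[-2][1] < blocks[-1][0] / blocks[-1][1]:
            s, c = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += c
    fit = []
    for s, c in blocks:
        fit.extend([s / c] * c)  # every entry in a block gets the block mean
    return fit


def isotonic_adjust(scores, ranking):
    """ranking[k] is the index of the paper the author ranks (k+1)-th best."""
    if sorted(ranking) != list(range(len(scores))):
        raise ValueError("ranking must be a permutation of paper indices")
    ordered = [scores[i] for i in ranking]  # scores listed best-ranked first
    fitted = pav_nonincreasing(ordered)
    adjusted = [0.0] * len(scores)
    for pos, idx in enumerate(ranking):
        adjusted[idx] = fitted[pos]
    return adjusted


# Raw scores 5, 7, 4; the author ranks paper 0 above paper 1 above paper 2.
print(isotonic_adjust([5.0, 7.0, 4.0], [0, 1, 2]))  # [6.0, 6.0, 4.0]
```

When the raw scores already agree with the ranking, nothing changes; a violation (paper 1 scored above paper 0 here) is resolved by averaging the conflicting scores.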

Very pleasant visit to Yale; will give the same talk tomorrow @Wharton. BTW, I got the best advice yesterday: putting deep learning in the title would double attendance. So the title is now Gaussian differential #privacy, w/ application to deep learning.

I will be giving a talk at @Yale @yaledatascience this Monday introducing a new notion of differential privacy. This was inspired by the hypothesis testing interpretation of privacy, joint work with Jinshuo and @Aaroth.

As at ICML 2023, we will again collect rankings of submissions from authors at ICML 2024. Rankings will be used to assess the modified ratings from the Isotonic Mechanism. It won't affect decision making; pls provide rankings to help improve peer review in the future!

Now that NeurIPS is almost upon us, it's time to start planning for ICML😀! Thrilled to serve with @kat_heller @adrian_weller @nuriaoliver as PCs, and @rsalakhu as general chair. Call for papers is here: Intro blog post:

Happening soon. Our poster (#40) at #neurips19 will be presented starting at 5pm: how can we efficiently do variable selection with confidence? It's a marriage between a #statistical method and a signal processing algorithm. Paper with @CindyRush, Jason, and Zhiqi.

Surprised that it led to confusion, which was certainly not what I meant. As a native speaker of a non-Indo-European language, I know how difficult it is to speak and write in English. I speak English with an accent, and I also speak Mandarin with a Wu accent. 1/2.

I receive large volumes of emails from Chinese students asking for summer internships. The English was often broken, but it has recently become native-sounding😅.

Will be attending #ICML starting tomorrow. Looking forward to seeing many friends for the first time in 3 years!

Our Penn Institute for Foundations of Data Science received an NSF award. Thanks to the amazing PI Shivani and co-PIs Hamed, Sanjeev, and @Aaroth. Looking forward to advancing data science at @Penn by integrating strengths from @PennEngineers, @Wharton, and beyond!

In 2021, the Abel went to Wigderson (TCS); in 2024, the Wolf went to Shamir (crypto). I won't be surprised if someday it becomes the norm that the Fields often goes to applied mathematicians.

BREAKING NEWS: The Royal Swedish Academy of Sciences has decided to award the 2024 #NobelPrize in Physics to John J. Hopfield and Geoffrey E. Hinton "for foundational discoveries and inventions that enable machine learning with artificial neural networks."

How to accurately track the overall #privacy cost under composition of many private algorithms? Our new paper offers a new approach using the Edgeworth expansion in the f-#differentialprivacy framework. w/ Qinqing Zheng, @JinshuoD, and Qi Long.

Many models can explain phenomena in deep learning. OK, but have you seen one predict a *new*, surprising phenomenon? Super excited to share our paper "Layer-Peeled Model: Toward Understanding Well-Trained Deep Neural Networks", w/ Fang, @HangfengH, and Long.

Have lived in the States for 9 years. My first interview in English, however, reveals that I still maintain a Confucian way of thinking.

Three Penn professors have received the Sloan Fellowship, which recognizes young scholars for their "unique potential to make substantial contributions to their field."

Thanks, @emollick! Yes, it's fine to train your model on my data, but please pay me accordingly!

There has been a lot of debate on how to deal with copyright issues for AI art, but this interesting paper is one of the first to offer a solution as to how compensation of copyright holders could technically work, given the explainability problem in AI.

Jinshuo will present our work Gaussian Differential #Privacy at #NeurIPS2019 at 9:05am in East Meeting Rooms 8+15. Please stop by!

Submissions are open for the NeurIPS 2019 Workshop on Privacy in Machine Learning (PriML 2019)! Anything at the intersection of privacy and machine learning is welcome! #privacy #NeurIPS2019.

New paper: In *Federated f-Differential Privacy*, we proposed a new privacy notion tailored to the setting where clients may collude in an attack. This privacy concept is adapted from f-differential privacy. w/ Qinqing Zheng, @ShuxiaoC, and Qi Long.

Glad that our papers on using AMP to study SLOPE and on accelerated optimization methods were accepted to NeurIPS 2019! @CindyRush @SimonShaoleiDu

@a11enhw Yes, the ultimate goal is to understand nature. But we cannot expect any individual to have such a high standard. Being pragmatic is what an average person cares about.

Will attend #NeurIPS2019 in Vancouver 12/10-12/14. Very much looking forward to meeting old and new friends!

Bravo @MuratAErdogdu!

Congratulations to Monica Alexander (@monjalexander), @MuratAErdogdu & Stanislav Volgushev for being among this year’s winners of the @UofT Connaught New Researcher Award. 👏👏👏

Congrats, Dr. Dong!

Congratulations to Dr. Dong @JinshuoD for defending his PhD "Gaussian Differential Privacy and Related Techniques" earlier today!

The poem of privacy.

Our discussant @XiaoLiMeng1 with a poem to conclude the session on Differential Privacy (hosted by @weijie444). He gives a shoutout to @TheHDSR, featuring some nice articles on DP, by @DanielOberski and @fraukolos, and by @mbhawes.

Around #JSM2020? Want to know more about #privacy research from ML and stats perspectives? Want to enjoy the humor of @XiaoLiMeng1? Please join the session tmrw at 1pm EST. Speakers: @thegautamkamath, Jordan Awan, Feng Ruan, and @JinshuoD.

The @Wharton Stats Dept is seeking applicants for tenure-track professor positions. Future colleagues, please apply!

Nowadays it's so common to reinvent the wheel, even for the best minds of our time. The amazing result #eigenvectorsfromeigenvalues appeared long ago in "Principal submatrices II: The upper and lower quadratic inequalities" by Thompson and McEnteggert.

The most fundamental recipe in #privacy research is perhaps to understand “how private are private algorithms.” Here comes a nice read: BTW, the author @JinshuoD is on the job market!.

My very first tweet about my very first blog post: How Private Are Private Algorithms? In fact, this question was answered 87 years ago. #OldiesButGoodies #differentialprivacy

Fairness @ #JSM2022

How to make classical fair learning algorithms work for deep learning? How to deal with severe class imbalance and subgroup imbalance? Excited to talk about our new work at JSM on Aug. 8th.

Congrats to Lin Xiao on the Test of Time award at #NeurIPS2019! Very well deserved. I still miss the summer of 2013 working with Lin in Redmond.

Congratulations to our colleague Lin Xiao @MSFTResearch for the #NeurIPS2019 test of time award!!! Online convex optimization and mirror descent for the win!! (As always? :-))