Maithra Raghu

@maithra_raghu

Followers

17,379

Following

481

Media

76

Statuses

417

Cofounder and CEO @Samaya_AI . Formerly Research Scientist Google Brain ( @GoogleAI ), PhD in ML @Cornell .

Joined July 2017

Pinned Tweet

Does One Large Model Rule Them All?

New post with

@matei_zaharia

and

@ericschmidt

on the future of the AI ecosystem.

Our key question: does the rise of large, general AI models mean the future AI ecosystem is dominated by a single general model? ⬇️

27

85

292

A Survey of Deep Learning for Scientific Discovery

To help facilitate using DL in science, we survey a broad range of deep learning methods, new research results, implementation tips & many links to code/tutorials

Paper

Work with

@ericschmidt

Thread⬇️

15

432

1K

A few months ago I left Google Brain to pursue my next adventure: building

@samaya_AI

! We're excited to bring the latest AI advances to the Knowledge Discovery process!

17

21

464

Many of these trends don't hold. Last week we celebrated

@geoffreyhinton

's retirement, and a few weeks earlier saw

@kkariko

receive the Nobel Prize. Their research took decades to come together, and had enormous impact at a world scale. We'd be much worse off if they'd pivoted!

12

35

423

Our paper on Understanding Transfer Learning for Medical Imaging has been accepted to

#NeurIPS2019

!!

Preprint:

As a positive datapoint: we had a good reviewing experience, with detailed feedback and mostly useful comments. Thanks to the Program Chairs!

8

57

281

Delighted our new paper "Anatomy of Catastrophic Forgetting: Hidden Representations and Task Semantics" just won Best Paper at the Continual Learning Workshop at

#ICML2020

!!

Paper:

Oral *tomorrow*, details at:

⬇️ Paper thread

3

40

262

NeurIPS poster presentation happening tomorrow, 8:30am - 10am PT. Hope to see you there!

#NeurIPS2021

@NeurIPSConf

6

26

242

Happy to share our paper on ViTs and CNNs was accepted to

#NeurIPS2021

!

Our other two submissions this year were rejected. I still think they have some great results and am looking forward to improving the papers with the received feedback.

3

19

219

Do Wide and Deep neural networks Learn the Same Things?

Paper:

We study representational properties of neural networks with different depths and widths on CIFAR/ImageNet, with insights on model capacity effects, feature similarity & characteristic errors

Do wide and deep neural networks learn the same thing? In a new paper () with

@maithra_raghu

and

@skornblith

we study how width and depth affect learned representations within and across models trained on CIFAR and ImageNet. 1/6

2

33

148

4

18

202

Excited to share the

@icmlconf

2022 Workshop on Knowledge Retrieval and Language Models

Please consider submitting!

We welcome work across topics including LM grounding, open-domain Q&A, bias in retrieval, analyses of scale, transfer and LM phenomena.

3

26

189

New blogpost on citation trends in

@NeurIPSConf

and

@icmlconf

: I scraped paper citations and studied topic trends, citation distributions and academia/industry splits. Releasing scraper, data and a tutorial!

Post:

Code/Data:

5

33

167

ICLR Town: Pokemon-esque environment to wander around and bump into people, which syncs almost seamlessly with video-chatting capabilities.

What a fun idea for virtual (research) conferences! Thanks

@iclr_conf

organizers!!

#ICLR2020

#iclr

(Uses )

1

27

157

Rapid Learning or Feature Reuse?

New paper:

We analyze MAML (and meta-learning more broadly), finding that feature reuse is the critical component in the efficient learning of new tasks -- leading to some algorithmic simplifications!

Rapid Learning or Feature Reuse? Meta-learning algorithms on standard benchmarks have much more feature reuse than rapid learning! This also gives us a way to simplify MAML -- (Almost) No Inner Loop (A)NIL. With Aniruddh Raghu

@maithra_raghu

Samy Bengio.

9

163

602

2

26

152

Excited to see this article by

@QuantaMagazine

overviewing the development of the Vision Transformer, insights on how it works, and promising new applications!

0

36

132

Excited to attend

#NeurIPS2020

!

My amazing collaborator

@thao_nguyen26

**who is applying to PhD programs this year** will be presenting Do Wide and Deep Neural Nets Learn the Same Things? at

@WiMLworkshop

posters *today* & in Inductive Biases Workshop

2

18

125

Lost in the Middle: How Language Models Use Long Contexts

Exciting work exploring the effectiveness of long context, led by

@nelsonfliu

and with Kevin Lin, Ashwin Paranjape, John Hewitt,

@percyliang

@Fabio_Petroni

@MicheleBevila20

4

24

125

Very excited about our latest preprint: , joint work with

@arimorcos

and Samy Bengio. We apply Canonical Correlation (CCA) to study the representational similarity between memorizing and generalizing networks, and also examine the training dynamics of RNNs.

Do different networks learn similar representations to solve the same tasks? How do RNN representations evolve over training? What can representational similarity tell us about generalization? Using CCA,

@arimorcos

and

@maithra_raghu

try to find out!

5

97

299

0

41

117

My entry to

#MachineLearning

(from another field) wouldn't have happened without

#NIPS2014

. But the reason I went, and found a welcoming community was due to

#WiML2014

. Now

#WiML2018

's organizer call is open . Apply by 25/03! The impact can't be overstated.

0

40

111

How do representations evolve as they go through the transformer? How does the Masked Language Model objective affect these compared to Language Models? How much do different tokens change and influence other tokens?

Answers in the paper by

@lena_voita

: !

0

22

103

Deep Learning: Bridging Theory and Practice happening tomorrow at

#NIPS2017

! Final Schedule:

@lschmidt3

@OriolVinyalsML

@rsalakhu

We have an exciting program with talks by Yoshua Bengio

@goodfellow_ian

Peter Bartlett Doina Precup Percy Liang Sham Kakade!

2

25

95

How does transfer learning for medical imaging affect performance, representations and convergence? Check out the blogpost below and our

#NeurIPS2019

paper for some of the surprising conclusions, new approaches and open questions!

How does transfer learning for medical imaging affect performance, representations and convergence? In a new

#NeurIPS2019

paper, we investigate this across different architectures and datasets, finding some surprising conclusions! Learn more below:

3

174

473

2

27

87

Presenting this at

@iclr_conf

*today*!

Talk and Slides:

Poster Sessions: (i) 10am - 12 Pacific Time, (ii) 1pm - 3pm Pacific Time

Thanks to the organizers for a *fantastic* virtual conference, hope to see you there!

#iclr

#ICLR2020

Rapid Learning or Feature Reuse? Meta-learning algorithms on standard benchmarks have much more feature reuse than rapid learning! This also gives us a way to simplify MAML -- (Almost) No Inner Loop (A)NIL. With Aniruddh Raghu

@maithra_raghu

Samy Bengio.

9

163

602

1

15

87

Motivating the Rules of the Game for Adversarial Example Research:

Fantastic and nuanced position paper by

@jmgilmer

@ryan_p_adams

@goodfellow_ian

on better bridging the gap between research on adversarial examples and realistic ML security challenges.

0

46

88

First foray into Deep RL. We test on a game with continuously tuneable difficulty and *known* optimal policy. We study different RL algorithms, supervised learning, and multiagent play.

@jacobandreas

0

29

84

Our paper on using Machine Learning (Direct Uncertainty Prediction) for predicting doctor disagreements and medical second opinions will be at

@icmlconf

next week!

Blog:

Paper:

#icml2019

#DeepLearning

1

18

82

Really enjoyed this discussion with

@jaygshah22

on our work on exploring neural network hidden representations, our recent paper on ViTs and CNNs, and PhD experiences + the ML research landscape!

Video:

In a chat with

@maithra_raghu

, Sr. Research Scientist at

@GoogleAI

about analyzing internal representations of

#DeepLearning

models, comparing vision transformers and CNNs, how she developed her interest in ML, and useful tips for researchers/PhD students!

0

0

10

1

7

79

NIPS workshop on theory and practice in Deep Learning

#nips2017

@NipsConference

@OriolVinyalsML

@lschmidt3

@rsalakhu

2

19

79

Had a fantastic week learning about exciting research directions and meeting old and new friends at

#NeurIPS2019

. Thanks to the organizers, volunteers and participants for a wonderful conference!

My talk at

#ML4H

is at (~44 mins), and posters below!

1

8

70

Looking forward to attending

#ICLR2021

next week! We're presenting three papers on questions exploring neural network representations, properties of training and algorithms for helping the learning process.

1

7

69

Excited to announce our

@icmlconf

workshop on understanding phenomena in deep neural networks! With fantastic speakers including

@orussakovsky

@ChrSzegedy

@KordingLab

@beenwrekt

@AudeOliva

Submission deadline May 5!

#DeepLearning

#AI

#MachineLearning

1

12

67

Delighted to be named one of this year's

#STATWunderkinds

for our work on machine learning in medicine:

Grateful to my collaborators and mentors for their advice and support throughout!

@statnews

4

9

55

On AGI and Self-Improvement

With

@ericschmidt

Questions on AGI are at heart of debate on AI capabilities & risks. To get there AI must learn "on the fly". We outline definitions of AGI, explore this gap, and examine the crucial role of *self-improvement*

5

6

54

A blogpost I wrote on our paper SVCCA, at

#nips2017

! With Justin Gilmer,

@jasonyo

@jaschasd

-- hoping many people will try it out on their networks with the open source code:

0

16

46

Looking forward to speaking about Artificial and Human Intelligence in Healthcare at the

#OReillyAI

conference ! Will discuss developing better AI systems and human expert interactions:

1

10

44

Fantastic workshop on the theory of deep learning at Bellairs in Barbados! Five days of incredible talks from

@ShamKakade6

@HazanPrinceton

@ylecun

@suriyagnskr

@prfsanjeevarora

and many others! Huge thanks to the organizers (

@prfsanjeevarora

and Denis Therien)!

Bellairs. Day 5

@HazanPrinceton

and myself: double feature on controls+RL. +spotlights:

@maithra_raghu

: meta-learning as rapid feature learning. Raman Arora: dropout, capacity control, and matrix sensing .

@HanieSedghi

: module criticality and generalization! And that is a wrap!🙂

0

3

33

1

5

38

Exploring the AI Landscape:

New blog by

@bclyang

and me! We'll be covering topics in AI from fundamental research to considerations for deployment.

Our first post: is on Digital Health and AI for Health, a longstanding interest!

0

5

39

Another

#AI

startup acquisition, this time the conversational AI startup Semantic Machines, acquired by Microsoft:

1

8

38

Excited to be speaking at REWORK's Deep Learning in Healthcare summit!

#reworkHEALTH

I'll be speaking about our work on Direct Uncertainty Prediction for Medical Second Opinions:

2

7

38

Excellent page by

@Worldometers

:

has detailed statistics on the coronavirus --- number of cases, severity, breakdown by country, and many others.

1

17

36

@geoffreyhinton

often wrote quick matlab code and even computed gradients by hand! (I was always inspired that even at that level of seniority, he could quickly prototype his own ideas!)

2

0

35

Heading to

#NeurIPS2018

this week! Looking forward to meeting old friends and new! Let me know if you'll be around and want to chat.

@arimorcos

and I will be presenting our paper on the Wednesday poster session, hope to see you there!

0

4

37

It's a delight and privilege to work with such an amazing team at

🎉🌐 Big news from

@samaya_AI

. We have two shiny new offices in

#London

&

#MountainView

🏢, staffed with an incredible team of brilliant minds💡🚀. Check out our freshly launched website at 🌟

3

10

97

1

0

33

Website of our (

@OriolVinyalsML

@lschmidt3

@prfsanjeevarora

@rsalakhu

)

#nips2017

workshop ! Speaking:

@goodfellow_ian

2

16

35

I'm deeply saddened to hear about the passing of

@SusanWojcicki

We met just a couple months back, and she offered sage advice on running a company, even giving feedback on our new product features. I was struck by her insight, her groundedness and her warmth. Sending her family

0

0

34

AI winning IMO gold would be impressive, but an AI coming up with IMO *questions* would be even more impressive to me.

Can it understand and use different theorems intelligently to come up with hard, creative and truly new questions? Can it do this consistently?

6

1

29

Excited to be speaking at

@reworkdl

deep learning summit today , and Stanford's HealthAI

@ai4healthcare

hackathon tomorrow!

What with the ICML deadline just wrapping up, it's been a busy week 😅

1

2

31

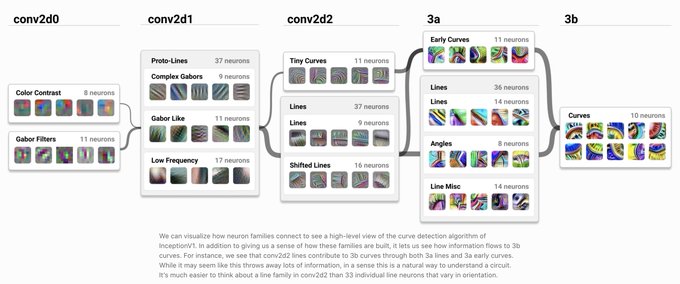

Very interesting work on identifying, understanding and reconstructing the representations learned by neural networks!

(I've also enjoyed

@distillpub

's "Building Blocks of Interpretability" and "Zoom In" which this work builds on)

0

3

32

Very exciting work by

@matei_zaharia

@alighodsi

and quite literally all of

@databricks

(who created the dataset!)

Lots of interesting followup questions from this --- how well can we use this to bootstrap synthetic data, etc.

2

2

31

I've been enjoying reading

@beenwrekt

's posts on

#ReinforcementLearning

: (new post today!), and it's great to see these insights come together in paper format!

"Simple random search provides a competitive approach to reinforcement learning", by Mania, Guy and

@beenwrekt

Paper:

Code:

Blog:

6

128

447

0

12

30

1) Do Wide and Deep Neural Networks Learn the Same Things?

(led by

@thao_nguyen26

& with

@skornblith

)

2) Teaching with Commentaries

(led by

@RaghuAniruddh

& with

@skornblith

@DavidDuvenaud

@geoffreyhinton

)

1

5

30

Thrilled to be working with my amazing co-founder

@Fabio_Petroni

, and a growing team of incredible researchers & engineers!

1

0

30

Transformer representations can generalize across data modalities! Very interesting result, lots of promise for more progress in multi-modal learning!

What are the limits to the generalization of large pretrained transformer models?

We find minimal fine-tuning (~0.1% of params) performs as well as training from scratch on a completely new modality!

with

@_kevinlu

,

@adityagrover_

,

@pabbeel

paper:

1/8

4

71

353

0

5

29

Another research update: Final version of our

#nips2017

@NipsConference

paper SVCCA: with accompanying code:(!!) We look at deep learning dynamics and interpret the latent representations. With Justin Gilmer,

@jasonyo

,

@jaschasd

1

7

28

Looking forward to speaking at

@RAAISorg

this Friday! Many exciting ML research areas, from health to privacy to bioengineering. Details on the talks, research and speakers at:

T-10 days

@RAAISorg

! Secure your spot: . Ft:

- AI chips w/

@CerebrasSystems

- Health w/

@saraheeberry

@pearsekeane

@maithra_raghu

- Private ML w/

@DeepMind

@zama_crypto

- Ethics w/

@SandraWachter5

@b_mittelstadt

@c_russl

- AVs w/

@sarnoud

- Bioeng w/

@DoctorJosh

0

5

8

0

10

27

It was awesome having

@samaya_AI

as part of the first batch of AI Grant companies! Grateful to

@natfriedman

and

@danielgross

for creating an energizing community for AI-native products. Consider applying!

0

2

20

Looking forward to heading to

#NeurIPS2023

next week! This year marks a decade(!) of attending NeurIPS!

It's remarkable to see how much the field has advanced in 10 years!

These past 2 years of building

@samaya_AI

have been incredible, and we are continuing to grow!

1

1

28

Thanks to

@atJustinChen

and

@statnews

for an in-depth followup discussion on our research work and motivations.

We talk about neural networks, techniques to better understand them, and ways this can inform their design and usage as assistive tools.

0

2

27

Sending best wishes to friends, former colleagues and the team at

@OpenAI

. You've made incredible, world changing contributions to AI, and it was sad to see the developments of the past few days. Wishing you the best in navigating these transitions.

0

0

21

Thanks so much to the organizers and

@MITEECS

for hosting the EECS Rising Stars 2018! Entertaining, insightful and inspiring discussion by panelists and speakers on research and academia, and a truly unique opportunity to meet my fantastic peers across all of EECS!

0

0

22

Totally agree. Public criticism disproportionally impacts the graduate student leading the project, and ML publishing is already very high pressure. Twitter also isn't the right place for a nuanced scientific discussion.

I realize this is seemingly an unpopular opinion, but I can't get onboard with these Twitter criticisms of some of the recent

#ICML2022

best paper awardees. I've been thinking about this all day. A thread... 🧵 1/N

20

86

911

0

0

23

So excited to be working together!!

Today is my first day as a CTO (and co-founder) of

@samaya_AI

.

The last 4 years at FAIR have been incredible.

Now I'm looking forward to bringing the latest knowledge discovery technologies to market!

17

10

202

0

0

23

New paper Teaching with Commentaries

We introduce commentaries, metalearned information to help neural net training & give insights on learning process, dataset & model representations

Led by

@RaghuAniruddh

& w/

@skornblith

@DavidDuvenaud

@geoffreyhinton

Teaching with Commentaries:

We study the use of commentaries, metalearned auxiliary information, to improve neural network training and provide insights.

With

@maithra_raghu

,

@skornblith

,

@DavidDuvenaud

,

@geoffreyhinton

Thread⬇️

2

6

52

0

3

22

Intriguing invited talk at

#DeepPhenomena

from Chiyuan Zhang on the effect of resetting different layers: Are all layers created equal?

#ICML2019

@icmlconf

1

3

22

Enjoyed speaking at

@RealAAAI

workshop on Learning Network Architectures During Training:

I overviewed our work on techniques to gain insights from neural representations for model & algorithm design.

All talk videos are on the workshop page above! ⬆️

0

3

21

@karpathy

I usually mute all notifications and put on do not disturb. Sometimes takes me a little longer to respond to things, but the mental space is worth it :)

2

0

22