Denny Zhou

@denny_zhou

Followers: 17K · Following: 3K · Media: 68 · Statuses: 742

Founded & lead the Reasoning Team in Google Brain (now part of Google DeepMind). Build LLMs to reason. Opinions my own.

Joined August 2013

My talk slides on the key ideas and limitations of LLM reasoning, largely based on work at GDM. Thank you so much for the invitation, Dawn!

Really excited about the enthusiasm for our LLM Agents MOOC: 4000+ people already joined within 2.5 days of the announcement! 🎉🎉🎉 Join us online today for the 1st lecture, on LLM reasoning, by @denny_zhou @GoogleDeepMind, 3:10pm PT!

Our new model is still experimental, and we’re eager to hear your feedback! One thing I particularly want to highlight is that our model transparently shows its thought process.

Introducing Gemini 2.0 Flash Thinking, an experimental model that explicitly shows its thoughts. Built on 2.0 Flash’s speed and performance, this model is trained to use thoughts to strengthen its reasoning. And we see promising results when we increase inference time.

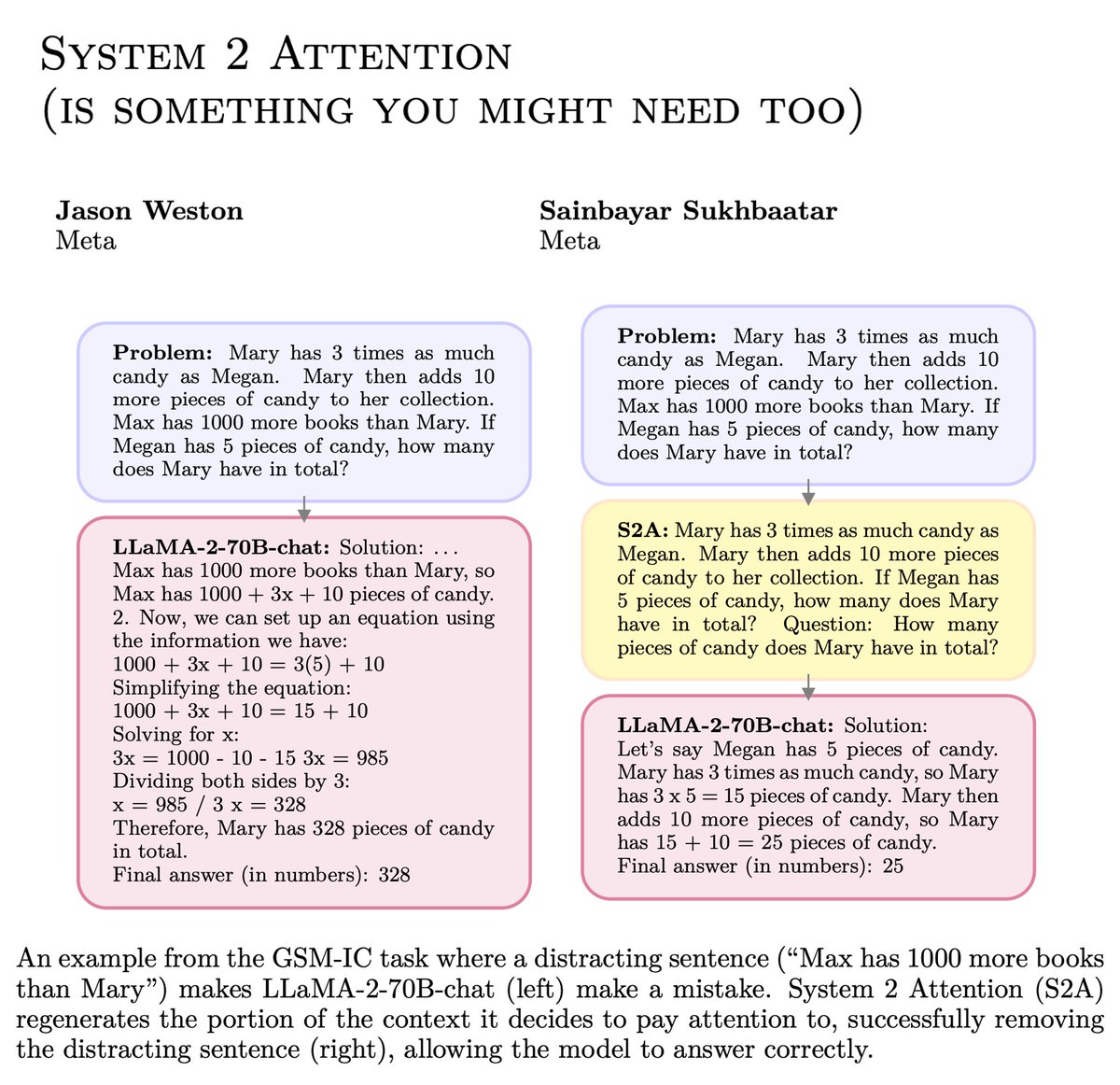

A key finding in this work, that adding irrelevant context to GSM8K problems causes LLMs to fail at solving them, is one we demonstrated in our ICML 2023 paper, "Large Language Models Can Be Easily Distracted by Irrelevant Context". The differences in prompt

1/ Can Large Language Models (LLMs) truly reason? Or are they just sophisticated pattern matchers? In our latest preprint, we explore this key question through a large-scale study of both open-source models like Llama, Phi, Gemma, and Mistral and leading closed models, including the
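
A minimal sketch of the manipulation described above, assuming a GSM8K-style word problem: a distractor sentence is inserted before the question, and an instruction to ignore irrelevant information can optionally be prepended (the ICML 2023 paper's mitigation was to ask the model to ignore irrelevant context). The problem, distractor, and instruction wording are illustrative assumptions, not taken from either paper.

```python
# Illustrative problem and distractor (hypothetical, not drawn from GSM8K).
PROBLEM = (
    "Lucy has 5 apples. She buys 3 more apples. "
    "How many apples does Lucy have now?"
)
DISTRACTOR = "Lucy's brother is 12 years old."

# Illustrative wording of an "ignore irrelevant context" instruction (assumption).
IGNORE_INSTRUCTION = "Feel free to ignore irrelevant information in the problem."


def add_distractor(problem: str, distractor: str) -> str:
    """Insert the distractor sentence right before the final (question) sentence."""
    sentences = problem.split(". ")
    # The question stays last, so the correct answer does not change.
    return ". ".join(sentences[:-1] + [distractor.rstrip(".")] + sentences[-1:])


def build_prompt(problem: str, ignore_irrelevant: bool = False) -> str:
    """Zero-shot prompt, optionally prefixed with the ignore-irrelevant instruction."""
    lines = [IGNORE_INSTRUCTION] if ignore_irrelevant else []
    lines += [f"Q: {problem}", "A:"]
    return "\n".join(lines)


noisy = add_distractor(PROBLEM, DISTRACTOR)
print(build_prompt(noisy, ignore_irrelevant=True))
```

Keeping the question sentence last means the distractor changes only the context, not the task, which is what makes the accuracy drop attributable to distraction.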

In our ICML 2023 paper "Large Language Models Can Be Easily Distracted by Irrelevant Context", we simply asked LLMs to ignore the irrelevant context, and they then worked well. E.g., the example shown in your paper can be solved as follows:

🚨 New paper! 🚨 We introduce System 2 Attention (S2A). - Soft attention in Transformers is susceptible to irrelevant/biased info. - S2A uses LLM reasoning to generate what to attend to. Improves factuality & objectivity, decreases sycophancy. 🧵(1/5)
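
System 2 Attention, as summarized in the quoted thread, has the LLM itself generate what to attend to. A minimal two-pass sketch, assuming a generic text-in/text-out `llm` callable (hypothetical, not a specific API): the first call regenerates the context keeping only the relevant, non-opinionated parts, and the second call answers from that regenerated context alone. The prompt wording is illustrative.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, completion out


def system2_attention(llm: LLM, context: str, question: str) -> str:
    # Pass 1: have the model regenerate the context, dropping irrelevant or
    # opinionated material (illustrative prompt wording).
    rewrite_prompt = (
        "Rewrite the text below, keeping only the information that is relevant "
        "to the question and removing opinions.\n"
        f"Text: {context}\nQuestion: {question}\nRelevant text:"
    )
    filtered = llm(rewrite_prompt)
    # Pass 2: answer from the regenerated context only; the original
    # context is not shown to the model again.
    return llm(f"Text: {filtered}\nQuestion: {question}\nAnswer:")


def fake_llm(prompt: str) -> str:
    """Stand-in model for demonstration only."""
    if prompt.endswith("Relevant text:"):
        return "Lucy has 5 apples and buys 3 more."
    return "8"


print(system2_attention(
    fake_llm,
    "Lucy has 5 apples. I think Lucy is 10. She buys 3 more.",
    "How many apples does Lucy have?",
))  # 8
```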

To learn more about this paradigm shift, a good starting point is the STaR paper by Eric (@ericzelikman), Yuhuai (@Yuhu_ai_), and Noah. If I had to guess what the "star" in Q-star means, it has to be this STaR, the most undervalued work on fine-tuning.
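
STaR (Self-Taught Reasoner) improves a model by fine-tuning it on its own rationales that lead to correct answers, then repeating. A rough sketch of that outer loop, assuming hypothetical `generate` and `finetune` callables that stand in for real sampling and training code; it omits the paper's "rationalization" step, in which the model is given the correct answer as a hint for problems it failed.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]       # (question, gold answer)
Sample = Tuple[str, str]        # (rationale, predicted answer)
Record = Tuple[str, str, str]   # (question, rationale, answer) to train on


def star(
    base_model: object,
    dataset: List[Example],
    generate: Callable[[object, str], Sample],           # hypothetical sampler
    finetune: Callable[[object, List[Record]], object],  # hypothetical trainer
    iterations: int = 3,
) -> object:
    model = base_model
    for _ in range(iterations):
        kept: List[Record] = []
        for question, gold in dataset:
            rationale, predicted = generate(model, question)
            # Keep only self-generated rationales that reach the gold answer.
            if predicted == gold:
                kept.append((question, rationale, gold))
        # Fine-tune on the filtered rationales (this sketch restarts from the
        # base model each round) and sample with the new model next round.
        model = finetune(base_model, kept)
    return model
```

The filter is the whole supervision signal: no human-written rationales are needed, only final-answer labels.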

Instead of using the terms “system 1/2” for LLM reasoning, it is more appropriate to view reasoning as a continuous spectrum defined by inference time.

system 1 vs system 2 thought is bullshit imo, and i honestly think it's a neuroscience old wives tale clung onto by researchers who couldn't make their inference algorithms scale beyond math problems. the goal is trading model scale for extended thought 1:1.

Self-debug has worked quite well on the raw GPT-3.5 model, code-davinci-002 (not instruction-tuned).

GPT-4 has one emergent ability that is extremely useful and stronger than any other models: self-debug. Even the most expert human programmer cannot always get a program correct at the first try. We look at execution results, reason about what's wrong, apply fixes, rinse and
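
A minimal sketch of the loop described above (execute, look at the result, fix, repeat), assuming a hypothetical `llm` callable that returns Python source and unit tests written as `assert` statements. It runs code with the built-in `exec`, which is unsafe for untrusted code; a real system would sandbox execution. The prompts are illustrative, and the Self-Debugging paper's step of having the model explain its own code is folded into a single fix request here.

```python
import traceback
from typing import Callable, List, Optional

LLM = Callable[[str], str]  # hypothetical: prompt in, Python source out


def run_tests(code: str, tests: List[str]) -> Optional[str]:
    """Run the program, then its assert-based tests; return the error text or None."""
    namespace: dict = {}
    try:
        exec(code, namespace)  # NOT sandboxed: for demonstration only
        for test in tests:
            exec(test, namespace)
    except Exception:
        return traceback.format_exc(limit=1)
    return None


def self_debug(llm: LLM, task: str, tests: List[str], max_rounds: int = 3) -> str:
    code = llm(f"Write a Python function for this task:\n{task}")
    for _ in range(max_rounds):
        error = run_tests(code, tests)
        if error is None:
            break  # every test passed
        # Feed the execution result back and ask for a corrected program.
        code = llm(
            f"Task:\n{task}\nCode:\n{code}\nExecution result:\n{error}\n"
            "Fix the code. Reply with only the corrected program."
        )
    return code
```

With a real model, the reply would usually need any Markdown fences stripped before it is passed to `exec`.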

Fantastic comments by @jkronand and @enjoyingthewind connect our LLM reasoning work to Polya. I then checked the book "How to Solve It". Page 75: "decomposing and recombining", which maps to Least-to-Most Prompting. Page 98: "do you know a related problem".
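
Least-to-most prompting runs in two stages that mirror "decomposing and recombining": decompose the problem into simpler subquestions, then solve them in order, feeding each answer into the next prompt. A sketch assuming a hypothetical `llm` callable; the prompt wording is illustrative, and a real implementation would typically use few-shot exemplars for both stages.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # hypothetical: prompt in, completion out


def least_to_most(llm: LLM, question: str) -> str:
    # Stage 1: decomposition into simpler subquestions, one per line,
    # ordered from easiest to hardest (illustrative prompt).
    plan = llm(
        "Break this problem into simpler subquestions, one per line, "
        f"easiest first:\n{question}"
    )
    subquestions: List[str] = [s.strip() for s in plan.splitlines() if s.strip()]
    # Stage 2: solve sequentially, finishing with the original question.
    # Each answer is appended to the context so later steps can build on it.
    context = question
    answer = ""
    for sub in subquestions + [question]:
        answer = llm(f"{context}\nQ: {sub}\nA:")
        context += f"\nQ: {sub}\nA: {answer}"
    return answer
```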

I believe OpenAI will continue to achieve remarkable breakthroughs as long as people like Ilya and Alec are still there. The next big innovation would make most, if not all, of the current techniques irrelevant. The imitation game is just at its very beginning.

I deeply regret my participation in the board's actions. I never intended to harm OpenAI. I love everything we've built together and I will do everything I can to reunite the company.

I don't think there is magic here: text-davinci-002 and the other 002 models in GPT-3, as well as InstructGPT, should have been fine-tuned with "let's think step by step". I tried the 001 models in GPT-3, and none of them work with this kind of prompt, while CoT still works.

Large Language Models are Zero-Shot Reasoners. Simply adding “Let’s think step by step” before each answer increases the accuracy on MultiArith from 17.7% to 78.7% and GSM8K from 10.4% to 40.7% with GPT-3.
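
The quoted paper's zero-shot CoT method is two-stage: the trigger phrase elicits a reasoning chain, and a second prompt extracts the final answer from it. A sketch assuming a hypothetical `llm` callable; the answer-extraction phrase shown is a numeric-answer variant, used here for illustration.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, completion out

TRIGGER = "Let's think step by step."


def zero_shot_cot(llm: LLM, question: str) -> str:
    # Stage 1: reasoning extraction. The trigger phrase sits where the
    # answer would normally begin.
    reasoning_prompt = f"Q: {question}\nA: {TRIGGER}"
    reasoning = llm(reasoning_prompt)
    # Stage 2: answer extraction, conditioned on the generated reasoning.
    answer_prompt = (
        f"{reasoning_prompt} {reasoning.strip()}\n"
        "Therefore, the answer (arabic numerals) is"
    )
    return llm(answer_prompt).strip()
```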

Automate chain-of-thought prompting simply by letting LLMs recall relevant questions and knowledge they have seen. It fully matches hand-crafted CoT performance on reasoning and coding benchmarks, and even performs better.

Introducing Analogical Prompting, a new method to help LLMs solve reasoning problems. Idea: To solve a new problem, humans often draw from past experiences, recalling similar problems they have solved before. Can we prompt LLMs to mimic this? [1/n]
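
Because the exemplars are self-generated, analogical prompting needs only a single prompt template and no hand-written demonstrations. A sketch of such a template; the section headings and wording are illustrative assumptions, not the paper's exact prompt.

```python
def analogical_prompt(problem: str, num_exemplars: int = 3) -> str:
    """One prompt asking the model to recall related problems (with solutions)
    before solving the target problem. Wording is illustrative."""
    return (
        f"# Problem:\n{problem}\n\n"
        "# Instructions:\n"
        f"## Relevant problems:\nRecall {num_exemplars} relevant and distinct problems. "
        "For each one, describe it and explain its solution.\n"
        "## Solve the initial problem:\n"
    )


print(analogical_prompt("How many positive divisors does 36 have?"))
```

The recalled problems play the role that hand-written few-shot exemplars play in standard CoT, but they are tailored to each new problem.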

Why does few-shot prompting work without any training or fine-tuning? Few-shot prompting can be equivalent to fine-tuning running inside an LLM! Led by @akyurekekin, a great collaboration with amazing folks: Dale Schuurmans, @jacobandreas, @tengyuma!
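
The work announced here studies this question on linear regression: the in-context examples act as a training set, and the question is which learning algorithm the model's forward pass behaves like, such as gradient descent or ridge regression. A toy 1-D sketch of those two candidate learners applied directly to few-shot examples; it illustrates the learners only, not a transformer implementing them.

```python
from typing import List, Tuple

Pairs = List[Tuple[float, float]]  # in-context (x, y) examples


def ridge_1d(examples: Pairs, lam: float = 0.0) -> float:
    """Closed-form ridge regression weight for y ≈ w * x (no bias term)."""
    sxy = sum(x * y for x, y in examples)
    sxx = sum(x * x for x, _ in examples)
    return sxy / (sxx + lam)


def gd_1d(examples: Pairs, lr: float, steps: int) -> float:
    """Gradient descent on 0.5 * sum((w*x - y)^2), starting from w = 0."""
    w = 0.0
    for _ in range(steps):
        grad = sum((w * x - y) * x for x, y in examples)
        w -= lr * grad
    return w


few_shot = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # the "prompt" examples
query_x = 4.0
print(ridge_1d(few_shot) * query_x)                  # 8.0
print(gd_1d(few_shot, lr=0.05, steps=50) * query_x)  # close to 8.0
```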

A simple yet effective approach to closing the performance gap between zero-shot and few-shot prompting. Xinyun Chen (@xinyun_chen_) is going to present our recent work on LLM analogical reasoning this afternoon at the exciting #MathAI workshop at #NeurIPS2023.

A new conference dedicated to language modeling, for everyone. Would be great to get any feedback you have:

Introducing COLM, the Conference on Language Modeling. A new research venue dedicated to the theory, practice, and applications of language models. Submissions: March 15 (it's pronounced "collum" 🕊️)

Amazing! I met @demi_guo_ at Google Beijing about 5 years ago. She came with Xiaodong He, my invitee and collaborator, who did pioneering work on text-to-image generation. Now Pika's work is just like magic.

Excited to share that I recently left Stanford AI PhD to start Pika. Words can't express how grateful I am to have all the support from our investors, advisors, friends, and community members along this journey! And there's nothing more exciting than working on this ambitious &.

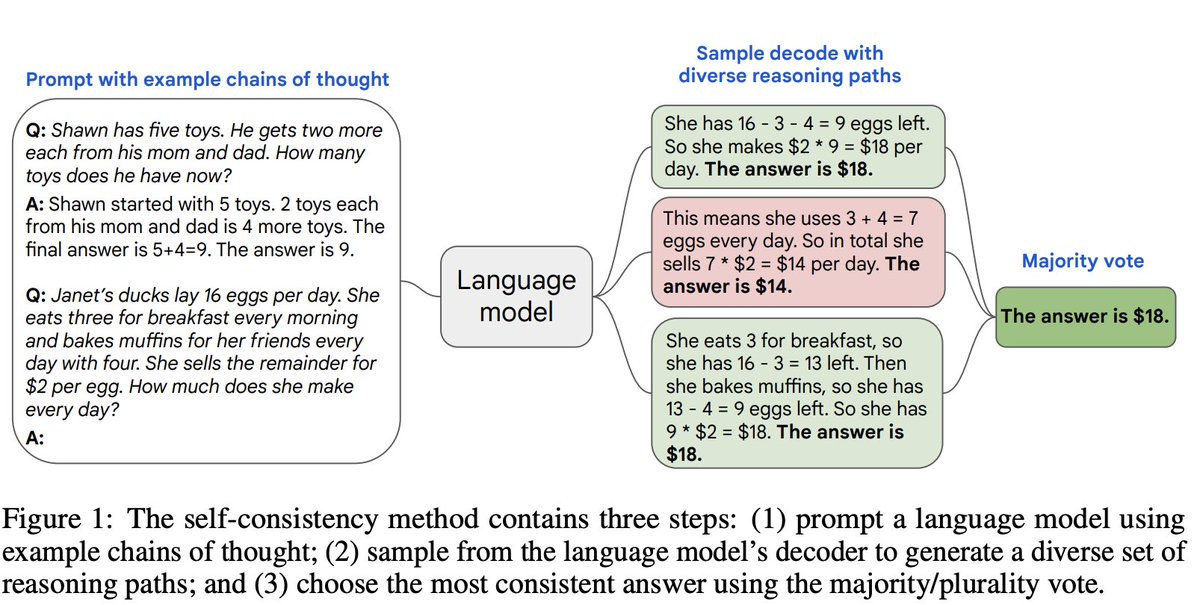

A simple quiz to check whether one understands the principle underlying self-consistency: if we ask the LLM to directly generate multiple responses instead of sampling multiple responses, and then aggregate the answers, will this work as well as.

Mathematical beauty of self-consistency (SC). SC for LLMs essentially does just one thing: choose the answer with the maximum probability as the final output. Anything else? Nothing. Don't LLMs choose the optimal answer by default? Not necessarily.
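
A minimal sketch of self-consistency as described: sample several reasoning paths independently, discard the reasoning, and return the most frequent final answer. `sample_answer` is a hypothetical function that samples one chain of thought (e.g., at temperature > 0) and parses out its final answer.

```python
from collections import Counter
from typing import Callable, List

Sampler = Callable[[str], str]  # hypothetical: one sampled final answer per call


def self_consistency(sample_answer: Sampler, question: str, n: int = 10) -> str:
    """Majority vote over the final answers of n independently sampled paths."""
    answers: List[str] = [sample_answer(question) for _ in range(n)]
    # Answer frequencies estimate P(answer | question) with the reasoning
    # path marginalized out; the most common answer is the estimated argmax.
    return Counter(answers).most_common(1)[0][0]


if __name__ == "__main__":
    import itertools

    fake_answers = itertools.cycle(["18", "26", "18", "18", "9"])
    print(self_consistency(lambda q: next(fake_answers), "question", n=10))  # 18
```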

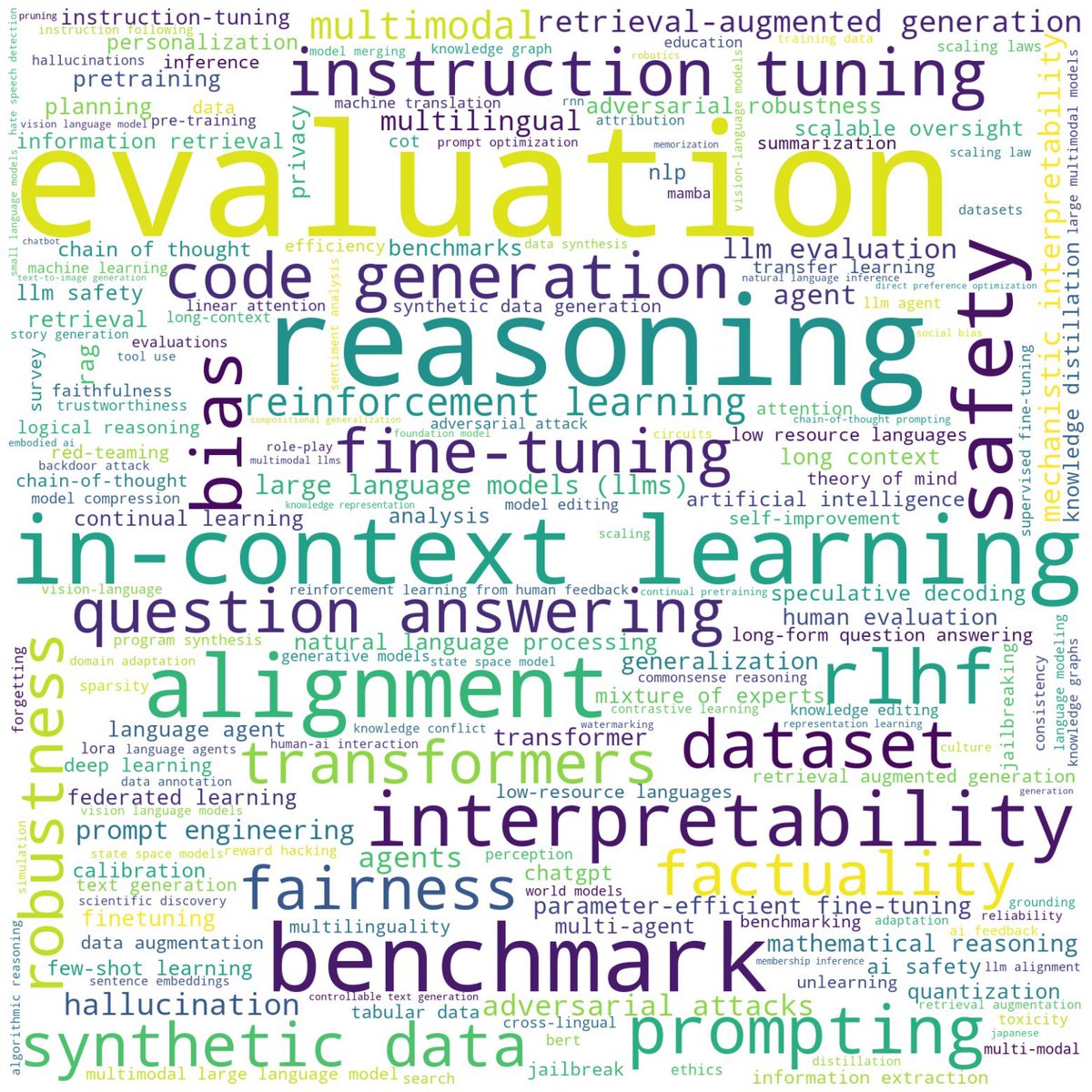

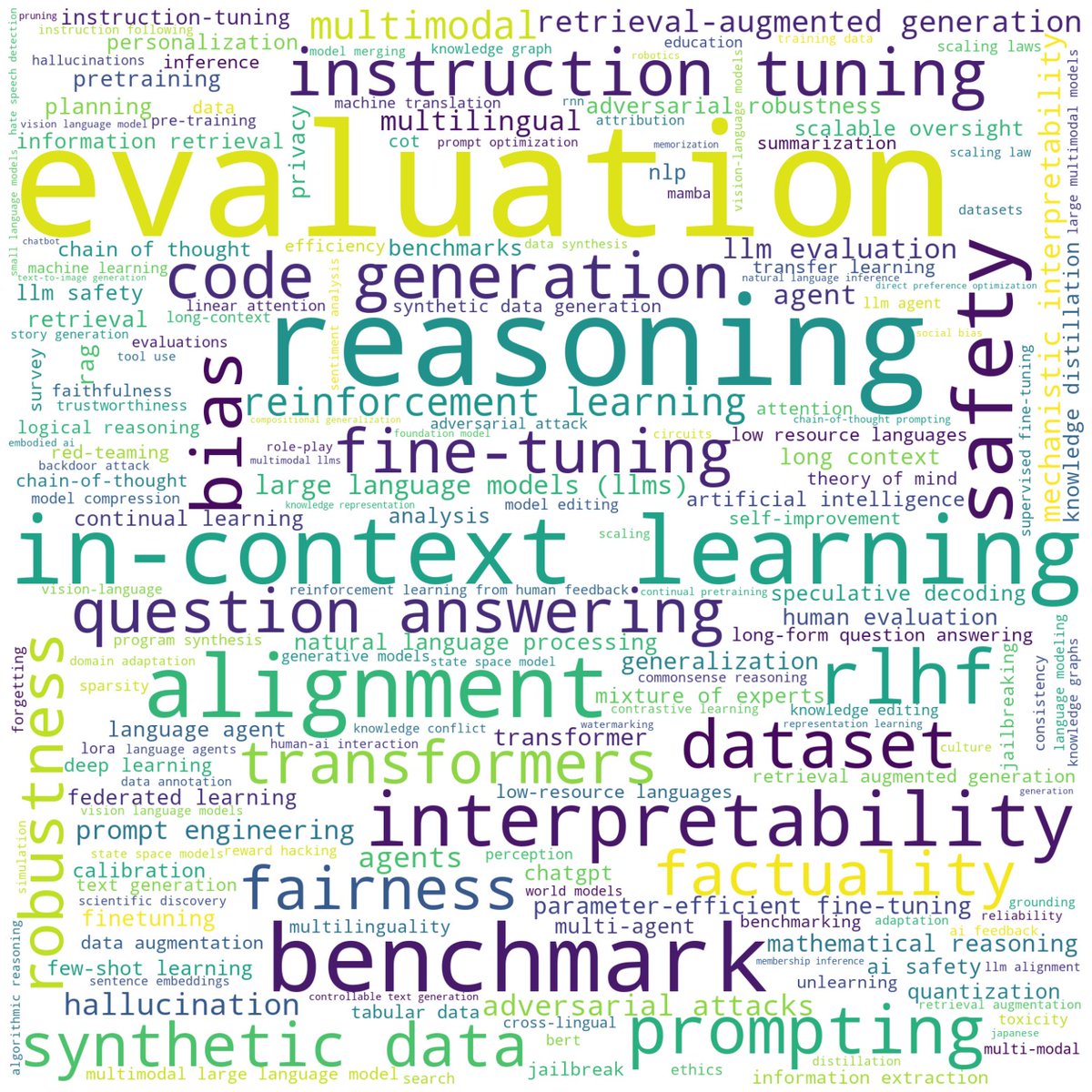

Welcome to the new era of AI: "Deep" was once the buzzword at AI conferences, but that is no longer the case at COLM.

Folks, some @COLM_conf stats, because looking at these really brightens the mood :) We received a total of ⭐️1036⭐️ submissions (for the first ever COLM!!!!). What is even more exciting is the nice distribution of topics and keywords. Exciting times ahead! ❤️

Although our work is about LLMs, I actually don't think humans do better than LLMs at self-correction. Look at the countless mistakes in AI tweets.

Interesting papers that share many findings similar to our recent work, in which we argue that "Large Language Models Cannot Self-Correct Reasoning Yet". I'm happy (as an honest researcher) and sad (as an AGI enthusiast) to see our conclusions confirmed

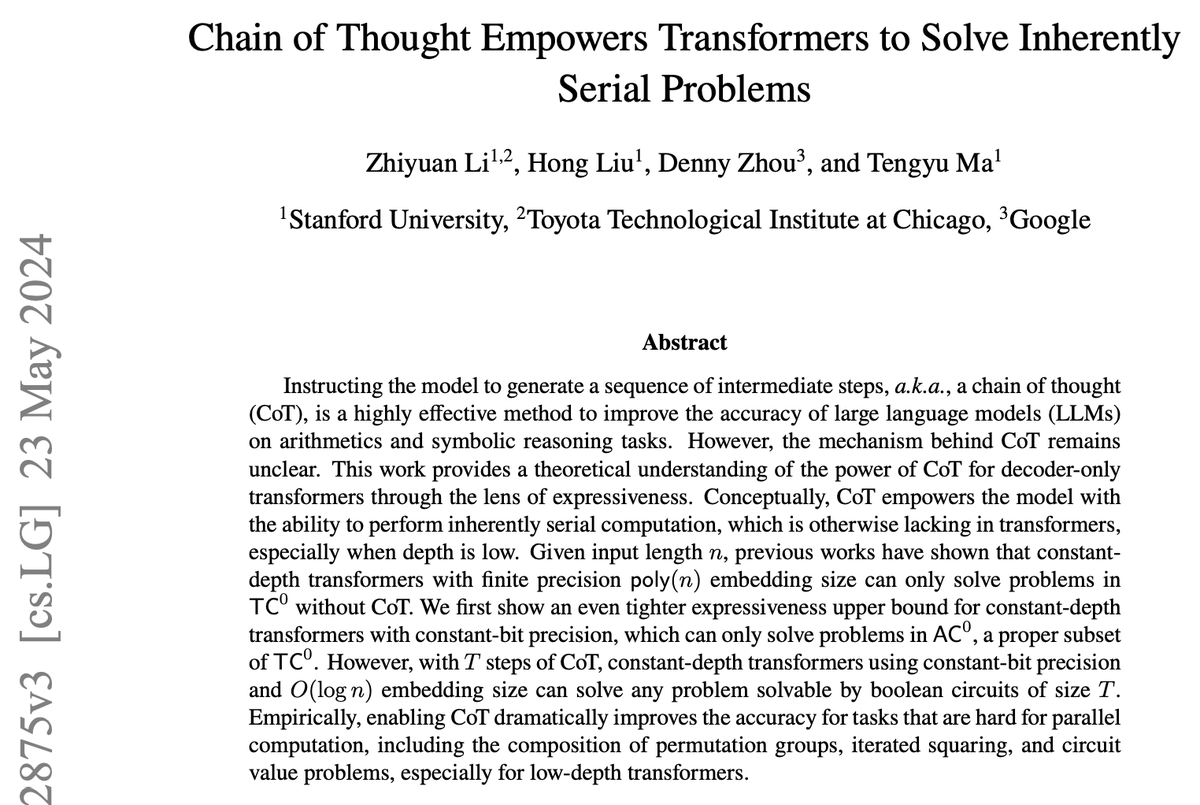

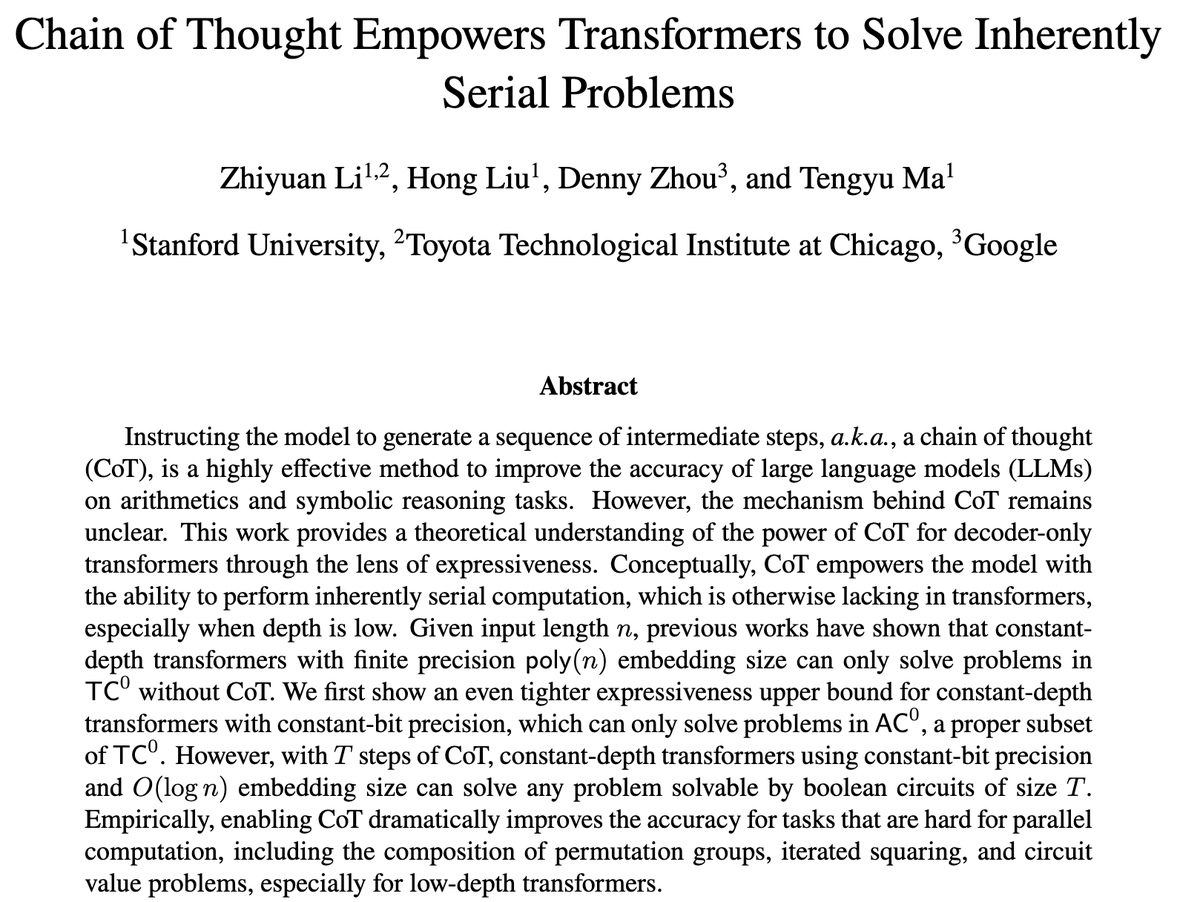

For those familiar with complexity theory, the figure below illustrates the precise results. For further details, please refer to @zhiyuanli_'s post and our paper.

Why does Chain of Thought (CoT) work? Our #ICLR 2024 paper proves that CoT enables more *iterative* compute to solve *inherently* serial problems. On the other hand, a constant-depth transformer that outputs answers right away can only solve problems that allow fast parallel algorithms.

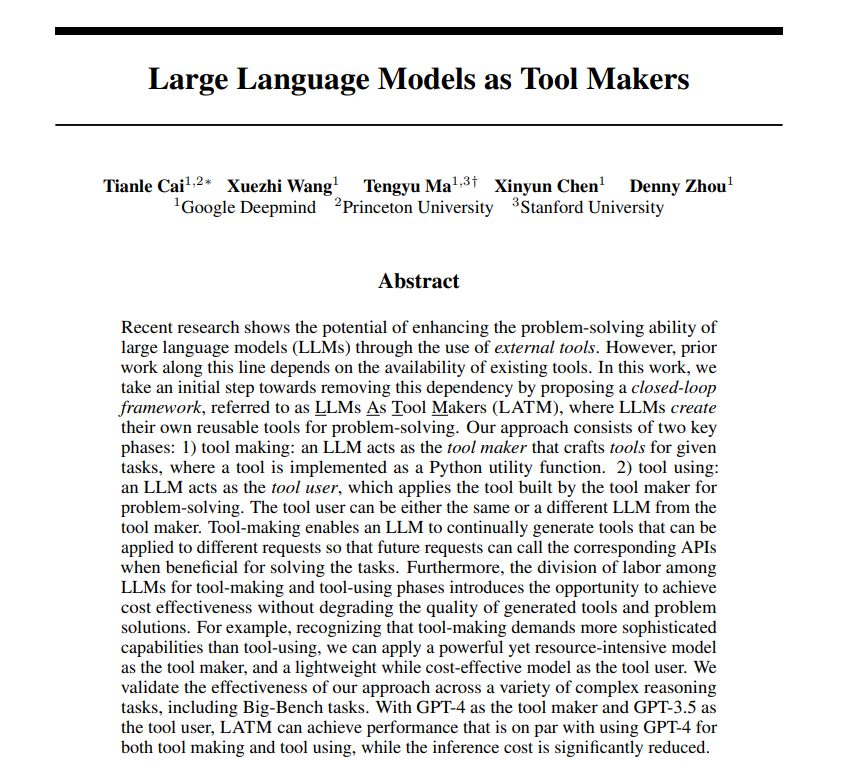

A nice combination of LLMs and Google. As in least-to-most prompting, a complex question is decomposed into a set of easier subquestions. The key difference here is that Google, instead of the LLM, is used to answer those subquestions.

We've found a new way to prompt language models that improves their ability to answer complex questions. Our Self-ask prompt first has the model ask and answer simpler subquestions. This structure makes it easy to integrate Google Search into an LM. Watch our demo with GPT-3 🧵⬇️
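
The loop described above can be sketched as follows, assuming hypothetical `llm` (returns the next line of the transcript) and `search` callables. The "Follow up:" / "Intermediate answer:" / "So the final answer is:" markers follow the general shape of the Self-ask prompt but should be treated as illustrative; `str.removeprefix` needs Python 3.9+.

```python
from typing import Callable

LLM = Callable[[str], str]     # hypothetical: returns the next transcript line
Search = Callable[[str], str]  # hypothetical: search-engine answer for a query


def self_ask(llm: LLM, search: Search, question: str, max_steps: int = 5) -> str:
    transcript = f"Question: {question}\nAre follow up questions needed here: Yes.\n"
    for _ in range(max_steps):
        line = llm(transcript).strip()
        transcript += line + "\n"
        if line.startswith("So the final answer is:"):
            return line.removeprefix("So the final answer is:").strip()
        if line.startswith("Follow up:"):
            # Unlike plain least-to-most, the subquestion is answered by the
            # search engine, not by the LLM.
            sub = line.removeprefix("Follow up:").strip()
            transcript += f"Intermediate answer: {search(sub)}\n"
    return ""  # no final answer within the step budget
```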

@arankomatsuzaki The truth should be simple: text-davinci-002 (175B), the other 002 models, or InstructGPT have been fine-tuned with "let's think step by step". I tried the 001 models, and none of them work with the proposed method, while CoT still works well.

@DrJimFan Self-debug:

Teaching LLMs to Self-Debug. - Prompt instructs the LLM to execute code, then generate a feedback message based on the result. - Without any feedback on code correctness, the LLM is able to identify its mistakes by explaining its code. - SoTA performance on code generation.

@ericzelikman @Yuhu_ai_ Here is a demo of thought generation by Gemini 2.0 Flash Thinking.

Excited to share an early preview of our Gemini 2.0 Flash Thinking model with all its raw thoughts visible. Here's the model trying to solve a Putnam 2024 problem with multiple approaches, and then self-verifying that its answer was correct.

Will be attending NeurIPS. Excited to meet the COLM co-organizers in person and to get suggestions on COLM from everyone at NeurIPS.

Some COLM updates: * Added amazing @aliceoh and @monojitchou as DEI Chairs. * PCs are hard at work on org. * OpenReview interest has been overwhelming (400 survey responses!), but the team is awesome and it's going to be great.

With only 0.1% of the examples, it matched the SoTA in the literature (specialized models with full training) on the challenging CFQ benchmark, and it achieved a new SoTA with only 1% of the examples. This opens a great opportunity to use knowledge graphs through natural language! Well done, team!

🚨 New preprint! 🚨 We refine least-to-most prompting and achieve SoTA on CFQ (95% accuracy), outperforming previous fully supervised methods. Joint first-author work with the formidable Nathanael Schärli.

Surprised to see that @kchonyc understands self-consistency as minimum Bayes risk (MBR). Self-consistency (SC) has nothing to do with MBR. Mathematically, SC marginalizes out the latent reasoning processes to compute the full probability of the final answer. If there is no.

modern LM research seems to be the exact repetition of MT research. here goes the prediction; someone will reinvent minimum Bayes risk decoding but will call it super-aligned, super-reasoning majority voting of galaxy-of-thoughts.
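
The marginalization stated in the first tweet can be written out as follows (notation ours, not the tweet's): $q$ is the question, $r$ a latent reasoning path, and $a$ a final answer. With $n$ independently sampled paths, the answer frequencies are a Monte Carlo estimate of $P(a \mid q)$, so majority voting approximates choosing its maximizer.

$$
P(a \mid q) = \sum_{r} P(r, a \mid q),
\qquad
\hat{a} = \arg\max_{a} P(a \mid q) \approx \arg\max_{a} \frac{1}{n} \sum_{i=1}^{n} \mathbb{1}[a_i = a],
\qquad (r_i, a_i) \sim P(r, a \mid q).
$$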
