Jie Huang

@jefffhj

Followers: 4,998
Following: 607
Media: 56
Statuses: 425

Building intelligence @xAI. PhD from UIUC CS

Joined July 2017

Life Update: joined @xai recently and witnessed the release of Grok-2.

Amazed by the passion of the team and how fast we are moving. Join us at

Certainly, this paper deserves more attention.

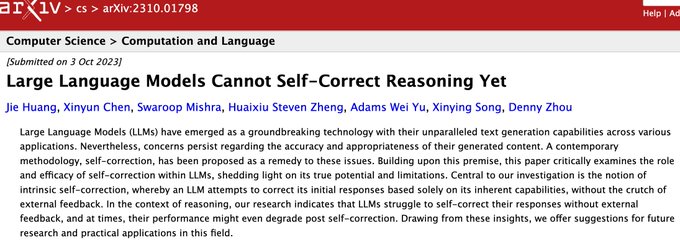

Interesting papers that share many findings with our recent work, in which we argue that "Large Language Models Cannot Self-Correct Reasoning Yet".

I'm happy (as an honest researcher) and sad (as an AGI enthusiast) to see our conclusions confirmed

A reflection of the "reflection":

- CoT distilled from larger model + Multiple Generations + Voting

- CoT + Multiple Generations + Voting

- CoT + Single Generation (But Multiple Responses in the Generation) + Voting

I guess the "reflection" here is essentially CoT (or thinking
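
The "Voting" step shared by all three pipelines above can be sketched as a majority vote over the final answers of several sampled chain-of-thought responses. This is a minimal illustration under stated assumptions: each response is assumed to end with a line of the form `Answer: <value>`, and `extract_answer` and `majority_vote` are hypothetical helper names, not code from any of the systems being discussed.

```python
from collections import Counter
from typing import List, Optional


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker (assumed format), or None."""
    marker = "Answer:"
    idx = response.rfind(marker)
    if idx == -1:
        return None
    return response[idx + len(marker):].strip()


def majority_vote(responses: List[str]) -> Optional[str]:
    """Return the most common final answer across sampled CoT responses.

    Responses without a non-empty answer are skipped. Ties go to the answer
    seen first, because Counter.most_common orders equal counts by first
    insertion.
    """
    answers = [a for a in (extract_answer(r) for r in responses) if a]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Usage: three sampled responses, two of which agree on "42".
samples = [
    "Let's think step by step... 6 * 7 = 42. Answer: 42",
    "First, 6 + 7 = 13. Answer: 13",
    "Multiplying gives 42. Answer: 42",
]
print(majority_vote(samples))  # prints: 42
```

For the third variant, where a single generation contains multiple responses, the same vote would apply once that generation is split into separate responses; how to split it is left open here.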

Xinyun (@xinyun_chen_) and I have updated our paper, which will appear at #ICLR2024. In summary:

1. Current LLMs Cannot Self-Correct Reasoning Intrinsically (tested on ChatGPT/GPT-4 and Llama-2 using various prompts).

2. Multi-Agent Debate Does Not Outperform

I authored a critique paper titled "Large Language Models Cannot Self-Correct Reasoning Yet" 20 days ago.

I’ve observed two distinct groups misinterpreting the content in two different ways:

For LLM Critics: "LLMs Cannot Self-Correct Reasoning" !=

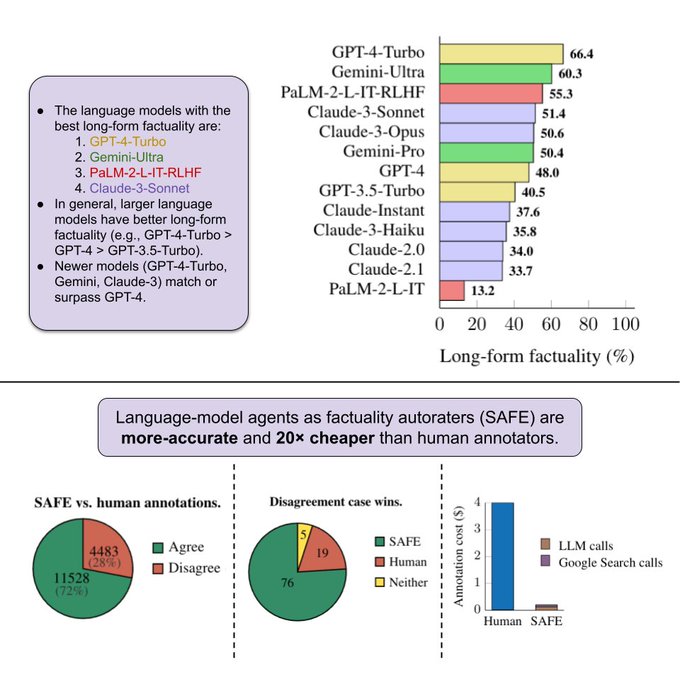

Last year, when I interned at @GoogleDeepMind, my first task was to improve the factuality of Bard (now called Gemini). I'm very happy to see that part of my internship work has now been refined into the SAFE system.

To enhance factuality, the first step is to develop a method for

New @GoogleDeepMind + @Stanford paper! 📜

How can we benchmark long-form factuality in language models?

We show that LLMs can generate a large dataset and are better annotators than humans, and we use this to rank Gemini, GPT, Claude, and PaLM-2 models.

Another typical 10 days for a PhD student after his typical 10 days:

• Draft 80% of a paper (2 days)

• Conduct experiments (3 days)

• Draft a grant proposal with Kevin

• Prepare for and pass the PhD preliminary exam

• Attend 7 project meetings

• Prepare and deliver two

Today is my last day as a research intern at @GoogleDeepMind.

It has truly been an incredible experience working with my hosts @xinying_song, @denny_zhou & many other brilliant minds.

I will be staying in the Bay Area for the next week and will soon be on the job market.

6 papers were accepted to #EMNLP2023 (including Findings and demos). Congrats to all of my students and collaborators.

Interestingly, all of my PhD work so far has been published at ACL/EMNLP. I rarely submitted papers to other venues, though I do more now :)

A well-written blog by @Francis_YAO_!

Additionally, our survey on reasoning in LLMs has been accepted to #ACL2023 (Findings, though it received an average review score of 4 and a best paper recommendation 🫠)

I will post an updated version soon :)

I'd like to reintroduce a paper I wrote last year, which mirrors and helps explain a bit of the Reversal Curse.

It found that LLMs perform quite well in verbatim recovery (memorization) but struggle to associate relevant

🔥🔥🔥Exciting News!!! I'm thrilled to share that I'll be continuing into the 4th year of my PhD at @IllinoisCS this fall (after finishing the 3rd year of my PhD at @IllinoisCS 👍👍👍)!!!! I am so grateful for the support from my advisors, families, and friends!!!!! 🎉🎉🎉👏👏👏

Thanks for the invitation! Happy to share our work on self-correction & reasoning in LLMs.

My presentation will mainly revolve around two questions:

1) Are LLMs really able to self-correct their responses?

2) Are LLMs really able to reason?

Note: Both questions don't have simple

Our community-led Geo Regional Asia Group is excited to welcome @jefffhj on Wednesday, January 17th to present "Large Language Models Cannot Self-Correct Reasoning Yet."

Learn more and add this event to your calendar:

Grok-2 is now officially on the Chatbot Arena leaderboard, ranked at the #2 spot!

Feel free to share your feedback. Stay tuned for what we have coming next ;)

Three papers were accepted to #ACL2023. See you in Toronto!🍁

👉Preprints:

Reasoning in LLMs:

Diversified Generative Commonsense Reasoning:

Specificity in Language Models:

#LLMs #Reasoning

Unfortunately, all three LLM evaluation papers I reviewed for #EMNLP2023 simply selected several benchmarks, reported numbers, and drew obvious conclusions. They provided almost no "insights".

To be honest, I could guess the conclusions just from the title of the paper...

A relevant discussion is "Are Large Language Models Really Able to Reason?" – a question I posed in our reasoning survey last year.

In my opinion, this question should not be treated as a binary yes/no. The model certainly has some ability to reason, though it's

Have received 50+ emails/DMs and am surprised by everyone’s enthusiasm, lol. This is a crazy era with a lot of exciting things to explore.

Thanks to everyone who reached out. I may not be able to reply to all messages, but I’ll read each one over the next two days (I’m

As an AC for #NAACL, I've noticed that half of the papers in my batch are categorized under "Resources and Evaluation". However, these papers are actually focused on LLM prompting😅

From what I recall, this was not a particularly popular track in the past, as it is typically

Gave my first in-person oral presentation at #ACL2023 (only remote oral or in-person poster presentations before).

In this day and age, research on entity/concept relationships might not draw the general audience's attention. Nevertheless, it's a unique topic that I have

LLMs are trained to follow instructions and align with human preferences.

If you give a wrong instruction or indicate an incorrect preference, and they follow the instruction to do something wrong or not meet your preference, it's your problem, not the problem of LLMs.😅

Are Large Pre-Trained Language Models Leaking Your Personal Information? Check out the answer in our recent #EMNLP2022 paper on LM *Memorization* vs *Association*

Paper:

Code:

Another clarification: although we claim "Large Language Models Cannot Self-Correct Reasoning Yet", we don't completely reject the concept of self-correction.

In the discussion section (I highly recommend reading the full paper if you haven't yet :), we mention that

Glad to have five papers accepted to #EMNLP2022 on descriptive knowledge graphs, privacy in LMs, definition modeling, topic modeling, and dialogue state tracking!

@rao2z The common observation is that performance on reasoning tasks degrades when LLMs attempt self-correction without external feedback (e.g., oracle labels). The main reason is that the LLM itself cannot reliably judge the correctness of its reasoning.
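
A toy calculation shows why an unreliable self-judge tends to hurt rather than help. On a task with a single correct answer, any change to a correct answer makes it wrong, while a change to a wrong answer only sometimes fixes it. The sketch below assumes that simplified model of one self-correction round; the function name, parameter names, and example numbers are illustrative assumptions, not measured results.

```python
def accuracy_after_self_correction(
    acc: float, change_if_correct: float, change_if_wrong: float, p_fix: float
) -> float:
    """Expected accuracy after one round of self-correction (toy model).

    acc: accuracy before self-correction.
    change_if_correct: probability the model changes an answer that was correct.
        On a single-answer task, any such change makes the answer wrong.
    change_if_wrong: probability the model changes an answer that was wrong.
    p_fix: probability that a changed wrong answer becomes the correct one.
    """
    kept_correct = acc * (1 - change_if_correct)
    fixed_wrong = (1 - acc) * change_if_wrong * p_fix
    return kept_correct + fixed_wrong


# A judge that cannot tell right from wrong changes answers at the same rate r
# regardless of correctness. Then the change in accuracy is
# r * ((1 - acc) * p_fix - acc), so accuracy drops whenever acc > p_fix / (1 + p_fix).
unreliable = accuracy_after_self_correction(0.8, 0.2, 0.2, 0.5)

# An oracle-like judge (external feedback) never touches correct answers
# and always revisits wrong ones.
oracle = accuracy_after_self_correction(0.8, 0.0, 1.0, 0.5)

print(f"unreliable judge: {unreliable:.2f}")  # unreliable judge: 0.66
print(f"oracle judge:     {oracle:.2f}")      # oracle judge:     0.90
```

With these assumed numbers, the same revision budget lowers accuracy from 0.80 to 0.66 when the judge is blind to correctness, but raises it to 0.90 when the judge only revisits wrong answers.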

Read this paper today. Like the comprehensive analysis :)

We also study LMs' memorization of long-tail entities in our #EMNLP2022 paper (specifically, email addresses)

Some conclusions are different, e.g., we found that larger LMs can memorize/associate more

Accel🚀

This weekend, the @xAI team brought our Colossus 100k H100 training cluster online. From start to finish, it was done in 122 days.

Colossus is the most powerful AI training system in the world. Moreover, it will double in size to 200k (50k H200s) in a few months.

Excellent

+ my two cents on proposing and answering a question:

1) Be critical about the question—assess whether it's meaningful enough to warrant solving.

2) Rarely answer a question with a simple yes/no; otherwise, you are likely to become overly optimistic or overly critical.

Next semester, Kevin and I will be launching a course on Large Language Models at @UofIllinois. Looking forward to having a lot of fun along the way!

Will appear at @solarneurips. I still believe that "citation" is essential for LLMs from intelligence, transparency, and ethical standpoints, but it still does not seem to receive enough attention.

However, my primary purpose here is to convey my guilt for missing the review

In academia, chasing SOTA performance isn't the ideal pursuit these days, especially for tasks solvable by #GPT4 or future versions.

Small models boasting comparable performance to GPT-4 could simply be overfitting benchmarks.

Achieving good results using GPT-4, e.g.,

Another interesting point to share is that in the paper "Language Models (Mostly) Know What They Know" from @AnthropicAI, it has already been observed that LLMs struggle to self-evaluate their generated answers without self-consistency (though this

Have seen several instances where my friends' research work was overshadowed by similar works from bigger names or groups, but they could not release/advertise their work due to the anonymity deadline. Have also seen multiple instances where people rushed to submit a low-quality

Finished my paper reviews for #ACL2023. I think *excitement* is very subjective. I felt excited about everything when I started doing research. Now I'm more critical and rarely get excited. To avoid bias and try to give higher scores, I simply treat it as an overall assessment🙂

Fun fact about LLM and related research: If your idea is truly groundbreaking, @OpenAI will do it better and faster than you.

The rest:

1) The idea is too difficult, so you can't tackle it either.

2) The idea is too small to capture their attention. 🙃

I feel deeply fortunate and grateful for the encouragement and support from my advisor, Kevin. I am also honored and lucky to have received the support of my wonderful committee members @haopeng_nlp, @hanghangtong, @tianyin_xu, @Diyi_Yang

TBH, I felt very bored reading papers that focus on achieving marginal improvements on specific dataset(s) and then try to tell a story (i.e., overfitting + storytelling).

It's time to shift towards goals that can bring about real-world impact and advancements. 🫡

#GPT4

@YiMaTweets It's not surprising to me either, lol. However, many people misunderstand it. That's why the critique paper we wrote is crucial: to urge researchers to approach this domain with a discerning and critical perspective and to offer suggestions for future research and practical

🤔Want to understand jargon?

We propose to combine definition extraction and definition generation. Experiments demonstrate that our method significantly outperforms SOTA for definition modeling, e.g., BLEU 8.76 -> 22.66.

👉

👉

#EMNLP2022

Though I haven't chatted with @hhsun1, I really enjoy the discussions with the students in @osunlp, like the atmosphere they have, and enjoy reading their recent work on LLMs.

Hiring multiple Ph.D. students this cycle in areas: #LLM train/eval, trustworthiness of LLMs incl. privacy & safety, LLM for biomedicine/chemistry. See below for representative work. I won't be at #EMNLP23, but pls talk to my (former) students there @xiangyue96 @BoshiWang2 @RonZiruChen

Want to create an open and informative knowledge graph without human annotation?

In our #EMNLP2022 paper DEER🦌, we propose the Descriptive Knowledge Graph, in which relations are represented by free-text relation descriptions

👉

🦌

Congrats @xiangyue96 on the great benchmarking work! Truly impressive.
