Andrew Drozdov

@mrdrozdov

Followers

2,464

Following

1,495

Media

524

Statuses

11,779

retrieving and generating things at mosaic x @databricks

NYC

Joined August 2010

✨ Accepted at Findings of EMNLP 2022: You can't pick your neighbors, or can you? When and how to rely on retrieval in the kNN-LM ✨

We improve kNN-LM by incorporating retrieval quality.

Joint work with

@shufan_wang_

,

@Negin_Rahimi

,

@andrewmccallum

,

@HamedZamani

,

@MohitIyyer

1

11

128
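For readers unfamiliar with kNN-LM, here is a minimal sketch of the idea, assuming the usual interpolation between the language model's next-token distribution and a distribution built from retrieved neighbors. The `quality_aware_lambda` rule, its `threshold` parameter, and the similarity score in [0, 1] are hypothetical illustrations of "incorporating retrieval quality", not the method from the paper.

```python
def interpolate(p_lm, p_knn, lam):
    """Mix two next-token distributions: lam * p_knn + (1 - lam) * p_lm."""
    vocab = set(p_lm) | set(p_knn)
    return {
        w: lam * p_knn.get(w, 0.0) + (1.0 - lam) * p_lm.get(w, 0.0)
        for w in vocab
    }


def quality_aware_lambda(base_lam, similarity, threshold=0.5):
    """Hypothetical rule: shrink the retrieval weight when the best
    neighbor is a weak match (similarity assumed to lie in [0, 1])."""
    if similarity >= threshold:
        return base_lam
    return base_lam * similarity / threshold


# Toy distributions over a two-word vocabulary.
p_lm = {"cat": 0.6, "dog": 0.4}
p_knn = {"cat": 0.2, "dog": 0.8}

# A weak retrieval match lowers the weight given to the neighbors.
lam = quality_aware_lambda(base_lam=0.5, similarity=0.25)
mixed = interpolate(p_lm, p_knn, lam)
```

With a fixed weight the retrieved neighbors always count the same; the sketch only shows how a per-query weight could change the mixture.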

Want to train and deploy large neural nets? Make them fast and robust? Mosaic x

@Databricks

is hiring. We're especially looking for research engineers (at all levels).

Send me a DM or email if you're interested. Happy to chat more about what this job is like.

3

7

108

Now with paper link:

And code:

New results on unsupervised parsing: +6.5 F1 compared to ON-LSTM (2019), +6 F1 compared to PRLG (2011).

The Deep Inside-Outside Autoencoders have been accepted as a long paper at

#NAACL2019

Unsupervised parsing and constituent representation with amazing co-authors

@pat_verga

Mohit Yadav

@MohitIyyer

@andrewmccallum

1

5

50

1

16

78

You can't win at

#EMNLP2023

.

Paper 1: Reviewer complains we focus too much on a GPT-3 based model. How about performance on open source baselines?

Paper 2: Reviewer complains we focus too much on open source baselines. Would this work for GPT-3?

9

3

71

@dirk_hovy

I’m not sure this argument works in either direction. I presented this stance to a friend, and they used “the brain is just a bunch of neurons” as a counterpoint.

4

0

55

Have you thought about taking some time off in order to improve your programming skills? The

@recursecenter

could be a good fit for you. Let me know if you're interested in applying or want to learn more. Happy to discuss my experience there — coffee on me!

3

7

49

I only now realized the

@stanfordnlp

logo is a combination of constituency parsing and dependency parsing.

2

3

50

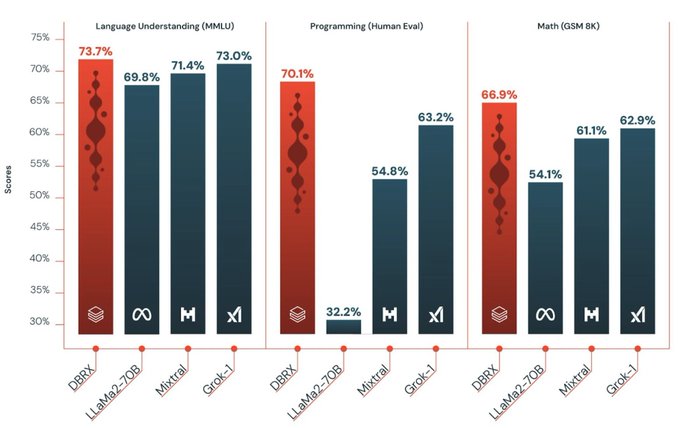

⭐️⭐️⭐️ Check out DBRX, the new open source LLM from

@Databricks

! ⭐️⭐️⭐️

I've only been here for a few weeks, but if there's one thing I've learned it's that this is a team that can execute on big and challenging projects while having a good time doing it. Glad to have played a

Meet DBRX, a new sota open llm from

@databricks

. It's a 132B MoE with 36B active params trained from scratch on 12T tokens. It sets a new bar on all the standard benchmarks, and - as an MoE - inference is blazingly fast. Simply put, it's the model your data has been waiting for.

33

263

1K

1

7

46

Noticed that

@pytorch

1.0 has epic support for in-place operations using views. Simple refactor gave 1.7x speed up. 🙌

1

3

46

Interested in text-to-SQL?

👀 Take a look at our paper! 👀 It extends least-to-most prompting and improves performance on related tasks. We include advanced techniques such as query decomposition + demonstration retrieval.

cc

@llama_index

@jerryjliu0

@LangChainAI

@ItakGol

0

4

45

🎓

So proud to have hooded my first five PhDs today:

@tuvllms

,

@kalpeshk2011

,

@simeng_ssun

,

@mrdrozdov

, and Nader Akoury. Now, they're either training LLMs at Google, Nvidia, and Databricks, or staying in academia at Virginia Tech and Cornell. Excited to watch their careers blossom!

11

8

275

0

0

42

This was my 1st PhD project, and I learned so much developing a bespoke neural architecture (with amazing collaborators) and working on a challenging task. Self-supervised learning for NLP was only starting to become a thing: BERT was published at the same conf as us!

1/2

Beautiful paper by

@mrdrozdov

@pat_verga

Mohit Yadav et al: build RNN shaped like the inside--outside dynamic program w/ soft child selection. Train outside reprs of leaves to predict their words. CKY decode on child scores gives SOTA unsup const parser!

1

25

124

1

6

41

It was a whirlwind of a first day! Couldn't be more thrilled to be part of the team

@databricks

/

@MosaicML

2

0

39

One of the perks of

@recursecenter

is you’re encouraged not to “feign surprise” exactly to make it easier to ask questions like this.

2

5

35

Advice I got early on: you shouldn't do a PhD, and if after hearing this you still want to do a PhD, then maybe you should do a PhD.

1

0

34

@jxmnop

Take

@MohamedMZahran

's GPU Architecture & Programming class and you'll become a master

1

0

34

We are here

@emnlpmeeting

with unsupervised parsing and DIORA! We're presenting how to improve parsing performance using distant supervision from easy to acquire span constraints.

#EMNLP2021

In-Person Poster: Mon, 9:00-10:30am AST

Virtual Poster: Mon, 2:45-4:15pm AST

1

9

32

In 2015, we met during a programming languages lecture. Our Professors are outcome focused, but not sure they expected this 💍🥂

💕

@stochasticdoggo

💕

6

1

33

@kohjingyu

@srush_nlp

LangChain is pretty great and makes it easy to do "sequential prompting", where the output of your first prompt becomes input for your next prompt.

The docs are pretty detailed:

1

2

32
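A minimal sketch of "sequential prompting" as described above, written in plain Python rather than with LangChain's own API. The names `run_chain` and `fake_llm`, and the `{input}` placeholder convention, are assumptions made for illustration; `fake_llm` is a stand-in so the example runs offline.

```python
from typing import Callable, List


def run_chain(llm: Callable[[str], str], templates: List[str], first_input: str) -> str:
    """Feed the output of each prompt into the next prompt's {input} slot."""
    text = first_input
    for template in templates:
        prompt = template.format(input=text)
        text = llm(prompt)
    return text


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call: wraps the prompt in brackets
    so the nesting of calls is visible in the final output."""
    return "[" + prompt + "]"


templates = ["A: {input}", "B: {input}"]
result = run_chain(fake_llm, templates, "hi")
```

Swapping `fake_llm` for a function that calls a real model keeps the same control flow: each step only sees the previous step's output.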

Okay this is kind of epic. DBRX can do linear regression using in-context demonstrations. 🤯

2

4

32

❤️🎉

a random thought on RAG, inspired by the (successful) phd defense of

@mrdrozdov

(the committee consists of

@andrewmccallum

,

@MohitIyyer

,

@JonathanBerant

,

@HamedZamani

and me)

16

18

146

7

0

32

ParaNet! Simply translate from source language to target one and back in order to generate paraphrases.

#repl4nlp

0

8

29

One of my favorite papers from

#EMNLP2022

. A clever way to collect and update a real world benchmark at almost any time scale.

1

3

31

We introduce S-DIORA, an extension that "fine-tunes" DIORA using hard instead of soft vector weighting. This leads to improved unsupervised parsing, and you can read the work here:

Will be at the main conference in

#emnlp2020

. I'll summarize in this thread.

1

8

30

1/1 short papers accepted to

#emnlp2019

. Details out soon, related to unsupervised parsing, message me if interested. Thanks to my outstanding collaborators!

0

0

31

Catch me at

#emnlp2019

! Will be presenting our poster on unsupervised *labeled* constituency parsing with collaborator Yi-Pei Chen. Tuesday from 3:30pm to 4:18pm, poster

#1234

(Session 3).

Joint work with

@pat_verga

@MohitIyyer

@andrewmccallum

Paper:

1

10

29

🚨New Paper Alert🚨

Despite the long line of research in search and open-domain QA, it still feels like early days for RAG. In this paper we talk about the design patterns that are starting to emerge and future research opportunities for this space.

(1/9) 🧠 Ever wondered if there's a unified framework for RAG? We've formalized the retrieval enhancement paradigm with consistent notations, synthesizing research across all ML domains. 🧵👇

w/

@SalemiAlireza7

@mrdrozdov

@841io

@HamedZamani

3

21

48

1

4

30

After a 24-hour layover in Istanbul, I’m ready for some conferencing.

#ICLR2023

Almost joined the marathon but decided to save my strength. 😏

3

2

30

Feels like all the tricks from building good search engines are now being used to build good LLM training data.

1

1

28

First paper talk — not a bad way to spend my birthday! Thanks all for attending and for asking great questions. Looking forward to more great discussions this week

#NAACL2019

@NAACLHLT

@mrdrozdov

presenting our work on DIORA, an unsupervised constituency parser, at

#naacl2019

!

0

1

17

2

0

25

@yminsky

I enjoy Foundations of Data Science. Free online book by Blum, Hopcroft, and Kannan.

I particularly like that it covers topics that are interesting for someone with a software engineering background (e.g. thinking of the web as a markov chain).

1

3

23
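To make "the web as a Markov chain" concrete, here is a minimal sketch of a random surfer on a tiny link graph, using power iteration with a damping factor. The function name, the toy graph, and the parameter values are assumptions for illustration and are not taken from the book.

```python
def stationary_distribution(links, damping=0.85, iterations=100):
    """Approximate the long-run visit probability of each page.

    `links` maps every page to the list of pages it links to; every
    link target is assumed to also appear as a key in `links`.
    """
    pages = sorted(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # With probability (1 - damping) the surfer jumps to a random page.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outlinks in links.items():
            # A page with no outgoing links sends the surfer anywhere.
            targets = outlinks if outlinks else pages
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank


# Pages "a" and "b" both link to "c"; "c" links back to "a".
toy_web = {"a": ["c"], "b": ["c"], "c": ["a"]}
ranks = stationary_distribution(toy_web)
```

In this toy graph "c" receives the most probability mass because two pages link to it, and "b" the least because nothing links to it.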

That’s a wrap! Thanks to everyone who stopped by, and for the interesting discussion.

0

0

20

✅ Openreview.

✅ Edits allowed during discussion.

✅ Reviews made public for accepted papers.

✅ Submissions to arXiv allowed.

✅ Cute-ish llama.

2

1

23

I'm incredibly enthusiastic about the RAG effort at Databricks, both on the research and product side.

Join us and work on exciting things at the intersection of retrieval and generation.

0

4

23

At

#NeurIPS2018

, find me if you want to talk about hierarchical representations for text or emergent behavior of RL agents! Especially happy to talk with new researchers.

0

2

23

This is a must read... Totally raises the bar for what to expect out of long-context tasks and evals. Great job

@YekyungKim

and friends!

1

0

22

The irony is that no one catching this earlier means that posting to arxiv didn’t even impact the review process.

1

3

23

On my way to

#SIGIR2024

. Looking forward to conversations on a range of topics, from data collection to automated judges to caching & systems optimization to generative retrieval. See you in DC!

2

2

22

Hoping that

#EMNLP2023

reviewers and ACs re-read "ACL 2023 peer review policies: writing a strong review" for the rebuttal.

Some of the criticisms reviewers make are clearly unfair, at least according to the reviewer guidelines.

1

1

22

It was a wonderful time at

#EMNLP2022

, catching up and having research discussions with old friends and new.

Now that the week is done I hope we keep the conversations going! In addition to Twitter, would suggest Mastodon. Sign up and cross-post (T ➡️ M).

1

0

21

Celebrating our 1-month anniversary with some downtime in the Catskills. Some might even call it a honeymoon. 💕

@stochasticdoggo

💕

2

0

21

Pretty great idea. If you think of prompting as an optimization procedure, then this reminds me of "line search" except now you can define powerful/flexible constraints, described in natural language (or probably programmatically too).

🚨Announcing 𝗟𝗠 𝗔𝘀𝘀𝗲𝗿𝘁𝗶𝗼𝗻𝘀, a powerful construct by

@ShangyinT

*

@slimshetty_

*

@arnav_thebigman

*

Your LM isn't following complex instructions?

Stop prompting! Add a one-liner assertion in your 𝗗𝗦𝗣𝘆 program: up to 35% gains w auto-backtracking & self-refinement🧵

11

51

316

0

2

21

Check out our "Multistage Collaborative Knowledge Distillation (MCKD)", which will be presented by first author Jiachen Zhao (

@jcz12856876

) at

#ACL2024

.

This work is so cool, and I think it is a general approach that could take a whole PhD, although it is JZ's MS project. 1/N

1

4

21

Hey

#NLProc

, is there a good reference on "regularizing the model weights" for transfer learning / fine-tuning? Specifically, adding a regularization term s.t. the final weights are not too different from the initial weights. I think I first heard about this from a

@colinraffel

prez.

7

3

19
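A minimal sketch of the kind of regularizer the question describes, assuming a squared-L2 penalty on the distance between the fine-tuned weights and the initial (pretrained) weights. The function names and the `strength` hyperparameter are hypothetical, and weights are plain lists of floats to keep the example dependency-free.

```python
def l2_to_init_penalty(weights, init_weights, strength):
    """strength * sum of squared differences from the initial weights."""
    return strength * sum(
        (w - w0) ** 2 for w, w0 in zip(weights, init_weights)
    )


def regularized_loss(task_loss, weights, init_weights, strength=0.01):
    """Task loss plus a penalty for drifting away from the starting point."""
    return task_loss + l2_to_init_penalty(weights, init_weights, strength)


def penalty_gradient(weights, init_weights, strength):
    """Gradient of the penalty: it pulls each weight back toward its
    initial value, in proportion to how far it has moved."""
    return [2.0 * strength * (w - w0) for w, w0 in zip(weights, init_weights)]


init_weights = [0.0, 2.0]
weights = [1.0, 2.0]  # only the first weight has moved during fine-tuning
loss = regularized_loss(task_loss=1.0, weights=weights,
                        init_weights=init_weights, strength=0.5)
grad = penalty_gradient(weights, init_weights, strength=0.5)
```

Unlike ordinary weight decay, which pulls weights toward zero, this penalty is zero at the initial weights and grows only as fine-tuning moves away from them.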

@soumithchintala

Is an assumption here that companies have a C++ trained engineering team (or equivalent) that can build out an efficient CPU pipeline?

Because that might be more expensive than buying some GPUs :D

2

0

20

During

@DeepLearn2017

we cover roughly the same amount of material as you would in 1 year of a Master's degree, except in 1 week.

1

4

19

GPT-4 is maybe the 4th time during my PhD that there was an existential crisis in NLP caused by breakthrough results.

Graham’s tweet reminds me of a talk Kristina Toutanova gave during EMNLP 2019, urging researchers to be more ambitious. Maybe time to take it to heart!

1

0

18

I'm a PhD student in Computer Science. Happy to talk with anyone at the high school level (regardless of where you attend) about my experience and anything else that might be useful. I certainly believe you can have a fruitful career without going to a big name school.

0

0

19

I’ll be giving a lightning talk about RAG at the NYC one of these. Should be fun. 🤘

Want to talk AI research and best practices with the people working on it? The

@DbrxMosaicAI

research team is running meetups worldwide in May.

9

16

85

0

1

19

Feeling adventurous at

#NeurIPS2023

? Here are some recommendations to explore beyond the conference center.

1. The Sydney and Walda Besthoff Sculpture Garden. Don't miss the iconic Cafe Du Monde nearby, or enjoy some minigolf at City Putt.

2

5

18

Perhaps a good time to remind folks we demonstrated prompted LLMs are very strong semantic parsers, better than supervised finetuning. Our pipeline relies on syntactic parsing (and retrieval, and joint exemplar selection, and chain of thought).

2

0

17

Cool work by the folks at

@brevdev

for getting started with DBRX

DBRX is the newest MoE model from

@databricks

that's outperforming GPT-3.5, 2x faster than Llama-70b

We spent the day messing around with it

Here's a notebook that uses vllm and

@gradio

to run interactive inference. Check it out, link below 😊🤙🔥

3

3

34

0

2

16

Huge congrats to my brother

@SamuelDrozdov

who made it onto the 2022 Forbes 30 Under 30!! In their words… “Facebook is showing up late to the metaverse — Ben and Sam have been there since they founded their company.” :))

1

0

16

So honored to be mentioned on this list.

I learned so much from my brief time working w/ Sam. Besides being kind and caring, he is always asking insightful and important questions, and makes research enjoyable and rewarding.

Could not recommend joining their lab highly enough!

Thanks to my group, and especially to the first batch of students and collaborators at NYU:

@adinamwilliams

@mrdrozdov

@meloncholist

@W4ngatang

@a_stadt

@phu_pmh

@kelina1124

. I really didn't have much NLP research experience when I got here and we were figuring a lot out together.

2

2

53

0

0

16

@boknilev

The Parti paper has a brief guide to systematic cherry-picking (sec 6.2) that might be helpful.

0

1

15