Stephanie Chan

@scychan_brains

Followers: 3,633

Following: 2,008

Media: 23

Statuses: 531

Staff Research Scientist at Google DeepMind. Artificial & biological brains 🤖 🧠 Views are my own

San Francisco, CA

Joined November 2018


First day at

@DeepMind

tomorrow!! Incredibly excited to be working with

@FelixHill84

, Stephen Clark,

@AndrewLampinen

, and many other amazing researchers!!

13

4

283

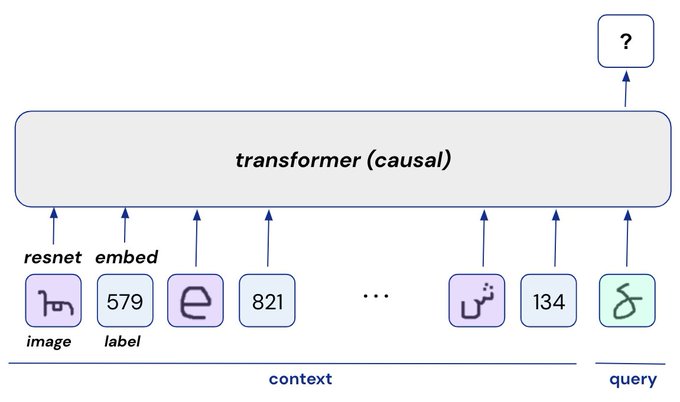

This is an incredible result. Transformers can meta-learn to do RL, completely from context -- no weight updates.

2

14

122

Apparent progress in ML research doesn't always map to real progress - it often isn't generalizable, usable or meaningful.

Tomorrow at the ML Evaluation Workshop

@iclr_conf

, join our many distinguished speakers in discussing and improving this situation!

The field of ML has seen massive growth and it is becoming apparent it may be in need of self-reflection to ensure that efforts are directed towards real progress. To this end, we are organizing an

@iclr_conf

workshop on "ML Evaluation Standards". [1/N]

1

101

437

3

21

89

We've released the codebase for the paper "Data Distributional Properties Drive Emergent In-Context Learning in Transformers" 🥳

2

14

80

Why do transformers work so well?

@FelixHill84

explains how the architectural features of transformers correspond to features of language!

Alternatively check out his excellent lecture covering similar topics:

1

10

72

Inspired by

@AnthropicAI

's Constitutional AI, I've been thinking of another legal metaphor: "AI alignment as common law" 🧑‍⚖️

Models are trained to be consistent with prior decisions — prev examples of good behavior (SL) or prev judgments of good/bad (RLHF) — i.e. "precedent"

1/

6

8

69

Our new paper delves into the circuits and training dynamics of transformer in-context learning (ICL) 🥳

Key highlights include

1️⃣ A new opensourced JAX toolkit that enables causal manipulations throughout training

2️⃣ The toolkit allowed us to "clamp" different subcircuits to

1

10

66

Tokenization really matters for number representation!

E.g. tokenizing numbers right-to-left (instead of left-to-right) improves GPT-4 arithmetic performance from 84% to 99%!!

Awesome important work by

@Aaditya6284

@djstrouse

Ever wondered how your LLM splits numbers into tokens? and how that might affect performance? Check out this cool project I did with

@djstrouse

: Tokenization counts: the impact of tokenization on arithmetic in frontier LLMs.

Read on 🔎⏬

10

33

181

0

9

67
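To make the left-to-right vs right-to-left distinction above concrete, here is a minimal sketch of the two ways a digit string can be split into chunks. The function names and the chunk size of 3 are illustrative assumptions, not the tokenizer or code used in the paper.

```python
def chunk_left_to_right(digits: str, size: int = 3) -> list[str]:
    """Split a digit string into chunks starting from the leftmost digit."""
    return [digits[i:i + size] for i in range(0, len(digits), size)]


def chunk_right_to_left(digits: str, size: int = 3) -> list[str]:
    """Split a digit string into chunks aligned to the rightmost digit."""
    head = len(digits) % size  # leftover digits end up at the front
    chunks = [digits[:head]] if head else []
    chunks += [digits[i:i + size] for i in range(head, len(digits), size)]
    return chunks


if __name__ == "__main__":
    print(chunk_left_to_right("1234567"))
    print(chunk_right_to_left("1234567"))
```

With right-to-left chunking, each chunk lines up with the ones, thousands, and millions groups regardless of how long the number is, which is one plausible reason the alignment matters for arithmetic.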

This work was done with the amazing Ishita Dasgupta, Junkyung Kim,

@dharshsky

,

@AndrewLampinen

,

@FelixHill84

📜 Paper:

Curious to hear your thoughts on possible origins of these biases, and their practical consequences!

(6/)

7

5

62

Impressive results by LLMs on causal reasoning benchmarks!

I'm curious though how much this is driven by the LLMs' priors about what causal structures are reasonable (e.g. ), rather than causal reasoning per se. 1/

2

8

58

Turns out -- a feature is represented more strongly based on factors beyond its relevance to the training task. E.g. whether it's easy vs hard to compute, or learned early vs late in training. This is true even when comparing features that are learned equally well!

These biases

0

5

58

With long contexts of up to a million tokens, we can now move from few-shot to *many-shot learning*

By using 100s or 1000s of shots, we saw significant improvements on math, reasoning, QA, planning, etc. We may not even need labels in many cases!! 🤯

3

8

55

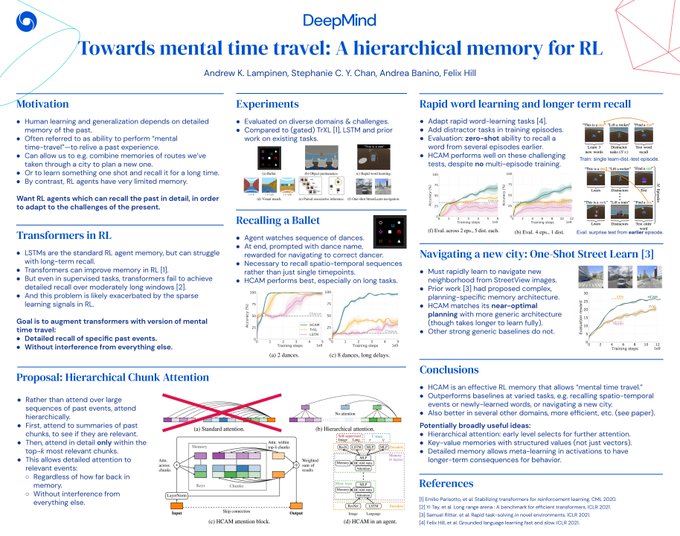

Come check out our NeurIPS poster today, on hierarchical memory for RL agents!

Interested in how RL agents could recall the past in detail, in order to overcome the challenges of the present? Come chat with us about "Towards mental time travel: A hierarchical memory for RL agents" at

#NeurIPS2021

poster session 1 (4:30 GMT/8:30 PT, spot E1)!

4

31

195

0

4

50

An impressive new kind of generative foundation model!

Trained completely unsupervised, it generates endless *controllable world models* that are (1) controllable via interpretable discrete actions (2) generated based on image prompts including drawings!

So proud of the Genie

I am really excited to reveal what

@GoogleDeepMind

's Open Endedness Team has been up to 🚀. We introduce Genie 🧞, a foundation world model trained exclusively from Internet videos that can generate an endless variety of action-controllable 2D worlds given image prompts.

145

571

3K

1

2

41

How does the brain learn a "model" for planning and model-based decision making? We found evidence that OFC activity is associated with learning the state-to-state transition function

1

13

43

@AndrewLampinen

is one of my favorite people in the world to collaborate with, and anyone would be lucky to work with him. Please apply to the team if you're interested in any of the cognitively-oriented research described below!

1

7

39

Congratulations to the team for this awesome robotics result!! It really stands out in that (a) it works in an extremely fast-paced setting, unlike most robotics algos (b) it's interesting that it was helpful to have a hierarchical controller that selects different skills and

1

5

36

More people should know about this interesting paper! It solves exact learning dynamics for a class of nonlinear networks, and uncovers properties that help subnetworks learn faster and win the race over others. Could eg help explain which ones end up "lottery tickets"

@jefrankle

0

4

35

A big step forward for fast, sample-efficient RL!

1

4

37

@_jasonwei

If you need an antidote to The Bitter Lesson malaise, check out our new work! 💊🙂 We show that it's the *distributional properties* of data, rather than scale per se, that lead to an interesting behavior like few-shot learning in transformers

1

0

36

Agree so hard that, esp with the advent of extremely long context, we need to think deeply about how information behaves differently when you put it in context vs weights vs other kinds of memory!

Thanks so much

@xiao_ted

for the shout-outs to our work! Check out e.g.

0

3

32

Excitingly, the ML Evaluation Standards workshop

@iclr_conf

will be collaborating with

@SchmidtFutures

to grant $15k in awards for workshop submissions and reviewers!

More info below 👇

3

11

32

Our paper comparing human and LM reasoning -- now published (open source)!

Pleased to share that the final version of our work "Language models, like humans, show content effects on reasoning tasks" has now been published in

@PNASNexus

(open access)! For a still-mostly-up-to-date summary, see this thread.

1

13

77

0

8

32

To date, it's taken half a century to map 35% of human proteins. Now

@DeepMind

has released predictions on almost the entire human proteome, for free! So many implications.. so proud of the team for this work

Today with

@emblebi

, we're launching the

#AlphaFold

Protein Structure Database, which offers the most complete and accurate picture of the human proteome, doubling humanity’s accumulated knowledge of high-accuracy human protein structures - for free: 1/

99

3K

7K

1

1

31

Updated: our work comparing humans and language models on reasoning tasks!

Neither humans nor LMs are perfect reasoners, and in fact show very similar patterns of errors. E.g., both perform better when the correct answer accords with situations that are familiar and realistic.

0

1

31

I'll be at

@iclr_conf

in Kigali next week -- message me if you'll be there and would like to meet up! ☺️

2

2

31

5/ Thanks to my amazing collaborators -- I've really loved working with them on this project ❤️:

@FelixHill84

,

@santoroAI

,

@AndrewLampinen

,

@janexwang

,

@Aaditya6284

,

@TheOneKloud

, and Jay McClelland

1

1

28

@ilyasut

I disagree with this! Given the amount of physical analogies that we use in describing these math concepts, even in ML courses, I think it's clear that physics is a more intuitive setting in at least some instances

1

0

27

So many of these researchers are heroes and role models to me, not least my PhD co-advisor

@yael_niv

😊

Thanks so much to all of you for being amazing trailblazers and advocates!

@ashrewards

@natashajaques

@ancadianadragan

@chelseabfinn

@FinaleDoshi

Doina Precup and many others

Really nice initiative by

@ben_eysenbach

, who prepared these posters (hung around

@RL_Conference

) of notable women in RL !

4

41

249

1

1

27

It's finally here!! "Ada and the Supercomputer", written by my dear friend Doris and now Amazon

#1

for teen fiction!

The book is inspired by Doris's math PhD, startups, her immense ambition.. The result is adventure+STEM+coming-of-age, and fully a story about resiliency.

link👇

1

1

27

This is so great..

@kpeteryu

et al took our insights on data distributions + in-context learning to videos, and improved few-shot learning for video narration!!

Amazing to be part of a vibrant research community where others can take our work much farther than we can ourselves 😍

Ever wondered what it would take to train a VLM to perform in-context learning (ICL) over egocentric videos 📹? Check out our work EILEV!

@SLED_AI

@michigan_AI

Website:

Technical Report:

A thread 🧵

1

12

28

2

1

23

Language models are influenced by prior beliefs when they perform reasoning tasks... in similar ways to humans!

Important for understanding the limitations of LMs, and parallels with human reasoning. And demonstrates how ML can usefully borrow ideas from cognitive science

1

3

22

Amazing work by the Astra AI Assistant team!! Huge potential for accessibility or for folks who have low vision

We’re sharing Project Astra: our new project focused on building a future AI assistant that can be truly helpful in everyday life. 🤝

Watch it in action, with two parts - each was captured in a single take, in real time. ↓

#GoogleIO

223

1K

4K

1

1

22

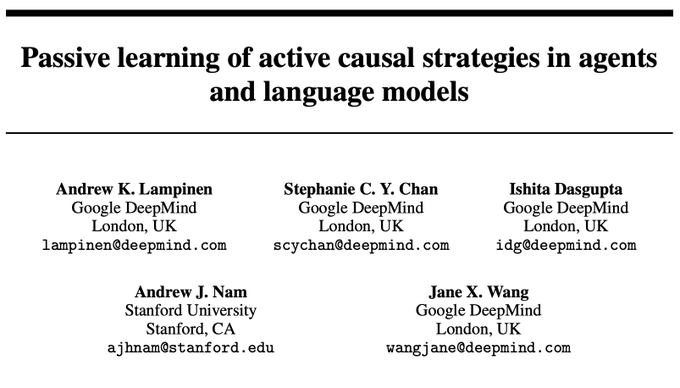

It's often said that causal learning requires active intervention.. but e.g. many of us learn how to do science just from reading about it!

This is a more nuanced take on how passive learning (as in language models) can lead to learning about causality and experimentation

0

3

22

@AlexGDimakis

If you'd like your faith restored in the endeavor of ML research, check out our new work! We show that the *distributions* of data matter, rather than scale, for eliciting an interesting behavior like few-shot learning

1

1

21

@YiTayML

@jacobmbuckman

Agree with

@YiTayML

that you'd have a hard time getting the same results with LSTMs. See our results showing that LSTMs don't exhibit in-context/few-shot learning when transformers do, matched on data + num params (Fig 7)

2

1

20

❤️ Yes thank you

@WiMLworkshop

for the feature!

Surprising results about surprise.. OFC aids learning about state transitions, but not via prediction errors. Instead OFC activity correlated with humans correctly expecting a more probable outcome.. i.e. more optimal predictions!

Thank you,

@WiMLworkshop

, for highlighting this work by

@scychan_brains

, inspired by rodent experiments from Geoff Schoenbaum's lab, first piloted in humans in our lab by

@ninalopatina

maybe 13 years ago!! This was one of those long projects... All the kudos to

@scychan_brains

!!

0

0

8

0

1

19

So awesome to see how RL is now effective on complex real world problems. Still remember reading

@AlexIrpan

's superb essay "Deep RL doesn't work yet", and the general uncertainty around RL's efficacy, just a few years ago!

Excited to share the details of our work at

@DeepMind

on using reinforcement learning to help large-scale commercial cooling systems save energy and run more efficiently: .

Here’s what we found 🧵

9

77

485

1

3

19

Join us for the

#NeurIPS

panel today on transformer-related topics!

@tsiprasd

and I will discuss our two papers on in-context learning in transformers, both selected as orals!

This year, orals will be presented as 15-min deep-dive discussions 🥽🫧🫧

1

3

18

Just out: Our new commentary on how AI and Psychology can learn from each other to address challenges in generalizability. "Fast publishing" (more common in AI) promotes rapid iteration and inclusivity, while "slow publishing" (more common in Psych) integrates knowledge over time

New commentary on "The Generalizability Crisis" by

@talyarkoni

: "Publishing fast and slow: A path toward generalizability in psychology and AI." We argue that these fields share similar generalizability challenges, and could learn from each other.

2

11

51

0

1

17

Congrats to

@agarwl_

et al on the Outstanding Paper award

@NeurIPS

!! It's such important work.

If you're a fan of rigorous RL evaluation, you may also be interested in our ICLR 2020 work on measuring the reliability of RL itself:

Congratulations to the authors of “Deep RL at the Edge of the Statistical Precipice”, a

#NeurIPS2021

Outstanding Paper ()! You can learn more about it in the blog post below, and we look forward to sharing more of our research at this year’s

@NeurIPSConf

.

7

75

384

2

0

18

Amazing how far language-conditioned robotics has come, from just a couple years ago!

@coreylynch

was one of the earliest to see the potential. Congrats to him,

@peteflorence

, and the other authors!

1

1

16

Absolutely. We will inevitably need additional new methods, but newer larger LMs (based on the same architectures) can already solve a number of tasks that were previously deemed out of reach (i.e. not tailored for LMs)

0

0

17

This work was done with my amazing collaborators!!!

@Aaditya6284

@ted_moskovitz

@ermgrant

@saxelab

@FelixHill84

N/N

1

0

17

New preprint with

@AndrewLampinen

, Andrea Banino, and

@FelixHill84

!

0

0

16

Pet peeve: Anyone doing multiple-choice evals needs to account for "surface form competition"!

TLDR: the highest probability answer isn't always the one with highest model "belief", because a single concept can take multiple forms in text. The good news: it's easy to account for

🔨ranking by probability is suboptimal for zero-shot inference with big LMs 🔨

“Surface Form Competition: Why the Highest Probability Answer Isn’t Always Right” explains why and how to fix it, co-lead w/

@PeterWestTM

paper:

code:

5

43

175

0

2

17
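A rough sketch of what accounting for surface form competition can look like: instead of ranking answer options by raw conditional probability, each option's score is normalized by how likely that string is without the question (a pointwise-mutual-information style correction). The log-probabilities below are made-up illustrative numbers and the function names are hypothetical; this is not the paper's code.

```python
def pick_by_probability(cond_logp: dict[str, float]) -> str:
    """Choose the option with the highest log P(answer | question)."""
    return max(cond_logp, key=lambda a: cond_logp[a])


def pick_by_pmi(cond_logp: dict[str, float], prior_logp: dict[str, float]) -> str:
    """Choose the option with the highest log P(answer | question) - log P(answer)."""
    return max(cond_logp, key=lambda a: cond_logp[a] - prior_logp[a])


# Hypothetical log-probabilities for two answer options (assumed values).
cond_logp = {"A": -2.0, "B": -2.5}   # log P(answer | question)
prior_logp = {"A": -1.0, "B": -3.0}  # log P(answer) without the question

if __name__ == "__main__":
    print(pick_by_probability(cond_logp))
    print(pick_by_pmi(cond_logp, prior_logp))
```

In this toy case option A wins on raw probability only because it is a common string in general; after normalization, option B is the one the question actually makes more likely.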

These are great resources for teachers and for educating kids about AI -- the

@RaspberryPi_org

team has been so impressively thoughtful in creating them, and I'm really excited to see them released today!!

EXCITING NEWS 🎉

Experience AI launches today in partnership with

@DeepMind

.

Our new AI and machine learning programme for teachers, students, and other educators.

Find out more 👉

#AI

#MachineLearning

#DeepMind

#ExperienceAI

18

232

985

0

3

16

One of those gems that is both theoretically interesting and has practical import!

📣📣📣 We are excited to announce our new paper, “Grokfast: Accelerated Grokking by Amplifying Slow Gradients”! 🤩

Reinterpreting ML optimization processes as control systems with gradients acting as signals, we accelerate the

#grokking

phenomenon up to 50x, making a step

5

14

121

1

1

16

*Transformer inductive biases*

Come check out our

#NeurIPS

poster today at the MemARI workshop! (and check out the rest of the workshop too -- a really interesting lineup!)

Video for those who can't make it in person:

1

1

15

Pause the excitement about language models for a second -- lots of innovative and exciting things are still happening in control!

Co-evolving a mechanical body with a neural network (but using gradient descent!). Read to the last animation

0

0

15

Very cool work on controlling the balance between in-context and in-weights learning

How robust are in-context algorithms? In new work with

@michael_lepori

,

@jack_merullo

, and

@brown_nlp

, we explore why in-context learning disappears over training and fails on rare and unseen tokens. We also introduce a training intervention that fixes these failures.

2

11

79

0

1

15

Come to Rotterdam and chat about in-context learning with us!

Excited to announce our full-day workshop on “In-context learning in natural and artificial intelligence” at CogSci (

@cogsci_soc

) 2024 in Rotterdam (with

@JacquesPesnot

@akjagadish

@summerfieldlab

and Ishita Dasgupta).

4

20

83

1

1

14

Really excited to participate in these discussions next week.. registration is still open (and free!)

Program now live!

June 29 – Why Compositionality Matters for AI

w/

@AllysonEttinger

,

@paul_smolensky

,

@GaryMarcus

& myself

June 30 – Can Language Models Handle Compositionality?

w/

@_dieuwke_

,

@tallinzen

,

@elliepavlick

,

@scychan_brains

&

@LakeBrenden

4

28

110

0

0

12

Like many others, we argue that ML could benefit from slower, more careful publishing. But also -- perhaps unfashionably -- we argue that "fast science" has benefits too.. for inclusivity, rapid iteration, and more

Excited that our commentary "Publishing fast and slow: A path toward generalizability in psychology and AI" is out now!

The legendary

@talyarkoni

even agrees with some of it.

1

9

37

1

1

11

@DeepMind

@santoroAI

@AndrewLampinen

@janexwang

@Aaditya6284

@TheOneKloud

@FelixHill84

Also see our related work, on Zipfian environments for reinforcement learning! Code will be released soon, for those of you itching to start exploring non-uniform distributions for RL:

0

2

11

This is a game changer. Replace RLHF with a theoretically identical (and much cheaper to train) form of supervised learning

0

1

10

Check out our new work on how **generating explanations** can help RL agents, by enabling better representations of the causal and relational structure of the world!

1

1

10

@OwainEvans_UK

Very cool results!! Do you have thoughts on why the models succeeded zero-shot on these tasks, but e.g. the reversal curse is still an issue?

3

2

9

@_jasonwei

Our work (and now others' replications in multiple domains) show that this would significantly harm in-context learning abilities, unfortunately

0

0

9

Stay tuned ;)

Excited for our transience work to be highlighted in the update from

@ch402

@AnthropicAI

.

Their Transformer Circuits thread has always been an inspiration to me -- actively working on mechanistic analyses of transience and should have updates soon :)

1

4

28

0

0

9

Great overview by

@weidingerlaura

on how to think more precisely about the potential risks of LLMs

Had a great time talking to

@weidingerlaura

about some of the recent papers she and her colleagues at

@DeepMind

have published about LLMs - big fan!

0

4

7

0

2

8

RLAIF may not be beneficial if you do SFT with a strong teacher

0

0

9

Super impressive work led by

@thtrieu_

on solving IMO geometry problems

0

0

8

Really loved the "compositionality gap" metric in this paper, where (correctness on final answer)/(correctness on subproblems) stayed constant at 40% across model sizes! Very curious whether this holds for GPT-4 as well

1

0

8

YC is a careful, thoughtful scientist bringing together social psychology and neuroscience in new and interesting ways, and I've always thought that he would be an exceptional mentor -- I highly recommend taking a look at his lab opening!

I am looking to hire a lab manager to help set up my new lab

@UChicagoPsych

! If you’re interested in studying how motivation influences how we see, think, decide, and interact with others, please consider applying. More info: . Please help spread the word!

38

164

311

0

0

8