Misha Laskin

@MishaLaskin

Followers: 8,957 · Following: 184 · Media: 151 · Statuses: 685

Something new. Prev: Staff Research Scientist @DeepMind. @berkeley_ai. YC alum.

NYC

Joined August 2013

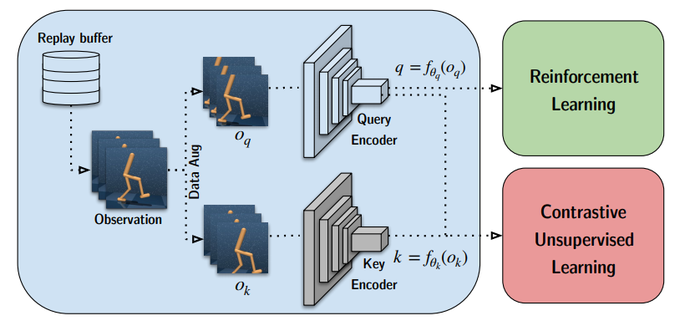

New paper led by @astooke w/ @kimin_le2 & @pabbeel - Decoupling Representation Learning from RL. First time RL trained on unsupervised features matches (or beats) end-to-end RL!

Paper:

Code:

Site:

[1/N]

Over the last few years, unsupervised learning has produced breakthroughs in CV and NLP. Will the same thing happen in RL?

@denisyarats and I wrote a blog post discussing unsupervised vs. supervised RL and the Unsupervised RL Benchmark.

I was wondering how ChatGPT managed to interleave code with text explanations. Was hoping this was an emergent behaviour.

Turns out it’s likely straight up imitation learning on curated contractor data. Makes sense but kind of deflating.

I just refused a job at #OpenAI. The job would consist of working 40 hours a week solving Python puzzles, explaining my reasoning through extensive commentary, in such a way that the machine can, by imitation, learn how to reason. ChatGPT is way less independent than people think

@abacaj Important caveat - the scale of the model is small. Generalization might only emerge at scale.

@AndrewYNg A few areas, some applied / some basic research:

- climate change
- computational drug discovery
- ethical AI
- generalization to new tasks
- world models / representation learning
- long-horizon problem solving / better hierarchies

Excited to share a paper on local updates as an alternative to global backprop, co-led with @Luke_Metz + @graphcoreai, @GoogleAI & @berkeley_ai.

tl;dr - Local updates can improve the efficiency of training deep nets in the high-compute regime.

👉

1/N

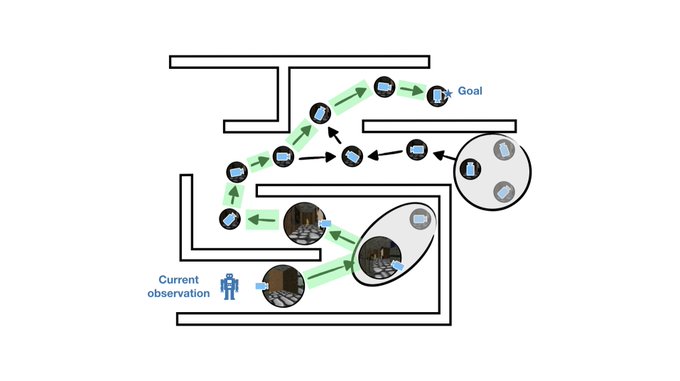

New paper coming up at @NeurIPSConf - Sparse Graphical Memory for Robust Planning uses state abstractions to improve long-horizon navigation tasks from pixels!

Paper:

Site:

Co-led by @emmons_scott, @ajayj_, and myself.

[1/N]

We're launching a benchmark for unsupervised RL. Like pre-training for CV / NLP, imo unsupervised RL will lead to the next big breakthroughs in RL and bring us closer to generalist AI.

Our goal is to get us there faster. LFG!!!

Code / scripts:

1/5

Currently, it is challenging to measure progress in unsupervised RL w/o common tasks & protocols. To take a step toward addressing this issue, we are releasing our #NeurIPS2021 paper: Unsupervised RL Benchmark (URLB)!

Paper:

Code:

1/N

Can pixel-based RL be as data-efficient as state-based RL? We show for the first time that the answer is yes. New work with @Aravind7694 and @pabbeel

website 👉

code 👉

In-context RL at scale. After online pre-training, the agent solves new tasks entirely in-context like an LLM and works in a complex domain. One of the most interesting RL results of the year.

Can RL From Pixels be as Efficient as RL From State? A BAIR blog post detailing recent progress in pixel-based RL, describing CURL / RAD & their tradeoffs. Was a fun collaboration!

w/ @AravSrinivas @kimin_le2 @stookemon @LerrelPinto @pabbeel

DeepMind Control from pixels seems beaten: PlaNet -> SLAC -> Dreamer -> CURL -> RAD & DrQ.

Now Plan2Explore shows zero-shot SOTA performance on DMControl relative to Dreamer.

Great work! @_ramanans @_oleh @KostasPenn @pabbeel @danijarh @pathak2206

We'll soon be able to fully outsource some categories of knowledge work to AI models. But we are not there yet - today’s models are unreliable & require close human supervision.

Had fun discussing how we can leverage insights from Gemini and AlphaGo to overcome these challenges.

🤖 New @Sequoia Training Data episode! Featuring @MishaLaskin, former research scientist at @DeepMind & CEO of Reflection AI. Full ep:

@sonyatweetybird and I chat w Misha about 1) why we’re still far from the promise of AI agents, 2) what we need to unlock

First RL algo to solve the diamond challenge in Minecraft without demonstrations. Congrats @danijarh!

Would argue this also applies to AI research. It's important to iterate on ideas quickly (e.g. by implementing them in code and launching experiments). Most ideas will be bad. But you learn from them and give yourself enough opportunities to spot a winner.

Thanks to @kharijohnson for the thoughtful coverage of Reinforcement Learning with Augmented Data. Read more about it on @VentureBeat:

w/ @kimin_le2 @stookemon @LerrelPinto @pabbeel @Aravind7694

This was a fun project with contributions from many collaborators. Luyu Wang, @junh_oh, Emilio Parisotto, Stephen Spencer, @RichiesOkTweets, @djstrouse, @Zergylord, @filangelos, Ethan Brooks, @Maxime_Gazeau, @him_sahni, Satinder Singh, @VladMnih

12/N

If you're interested in working with the General Agents team at Google DeepMind, please apply asap. Applications close tomorrow 4pm EDT.

Research Scientist:

Research Engineer:

@RokoMijic This is misleading. Loss functions only look like this for simple problems with narrow datasets.

For large-scale training, grokking happens frequently enough across diverse enough prediction tasks that the average is relatively smooth and the likelihood of a big drop is very

@TaliaRinger A less cynical take. Context: did a PhD in physics, now an ML postdoc. (i) ML experiment cycles are *very* fast vs. other sciences. (ii) Other sciences require years of background education; ML does not. A gifted high school student could contribute. These are positive things.

A new text-to-video generation startup launched by a pioneer of diffusion models. Excited for this direction and the future of video! "Make a dramatic thriller about a Corgi astronaut escaping a black hole, trending on HBO, narrated by Werner Herzog." I'd watch.

Wenlong & co continue to produce bangers at the intersection of LLMs and robotics. Very cool work

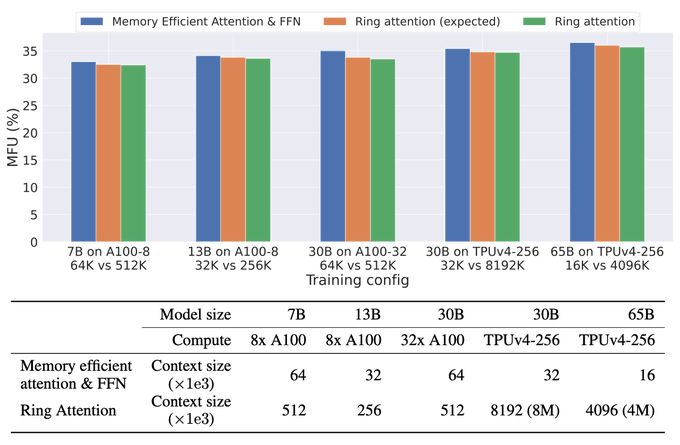

Very impressive new work on long-context transformers. This particular bit is valuable - going from 4K to 32K context length with a 13B model on just 8 GPUs!

I’d argue the opposite - LangChain seems very valuable. My mental model is that LangChain is to LLMs what React is to web apps.

1. Has powerful boilerplate abstractions (e.g. prompt chaining, streaming), akin to React components.

2. Is LLM-provider agnostic. Akin to React being

If this was interesting, consider following @MishaLaskin. Will post similar calculations for Transformers soon, plus other topics that will help strengthen intuition for deep learning.

12/N

END

It was pretty mind-blowing finding out that Transformers can do RL. No value estimates, no policy gradients, just sequence modeling. A glimpse of what RL might look like in the future. Congrats to all collaborators and especially co-leads @lchen915 @_kevinlu!

Frozen language models can be used to generate plans for robots. Comes with a great explainer video.

Tomorrow I'll give a talk on RL with Transformers (a tutorial and new work) at AI for Ukraine, a non-profit that helps the Ukrainian community learn about AI.

Please consider donating or supporting.

Last call📣

Tomorrow's AI for Ukraine session will be led by @MishaLaskin, Senior Research Scientist at @DeepMind.

We'll talk about data-driven Reinforcement Learning with Transformers. See you on October 26 at 7 pm (GMT+3).

Learn more and register at

It’s remarkable that AI research continues to produce multiple incredible demos per year. It also seems like the rate of mind blowing results is accelerating and that we’re far from the saturation point of the “scaling up” phase of deep learning 🤯

the issue with getting reliable outputs from LLMs as a user is that you don't know what prompts were used during RLHF when the model was aligned

so you are forced to manually explore the space of possible prompts

@JosephJacks_ @_akhaliq Is it? Transformers + RNNs have been done before (e.g. Transformer-XL) but performed worse despite the larger memory. Seems like a similar outcome here.

Very impressive 3D mesh extraction using the iPad's camera & LIDAR sensor. Before, extracting quality 3D meshes required expensive camera equipment and took a long time to process; now it can be done from an iPad and renders in real time. Amazing work @cpheinrich!

Huge news! The Polycam #lidar 3D scanning app is now live 🚀! If you have a 2020 iPad Pro you can download at: . If you like this sort of thing and want to see it grow, please share and retweet 🙏!!

Great explainer video of Algorithm Distillation. I especially liked that it got into some of the more nuanced points and limitations of the work.

New video covering RL Algorithm Distillation, a paper about learning algorithms for RL with ML!

Great paper from @MishaLaskin and colleagues
