Archit Sharma

@archit_sharma97

Followers: 3,661 · Following: 343 · Media: 28 · Statuses: 262

Final-year CS PhD student @Stanford. Previously, AI Resident @Google Brain, undergraduate @IITKanpur, research intern @MILAMontreal.

Joined July 2015


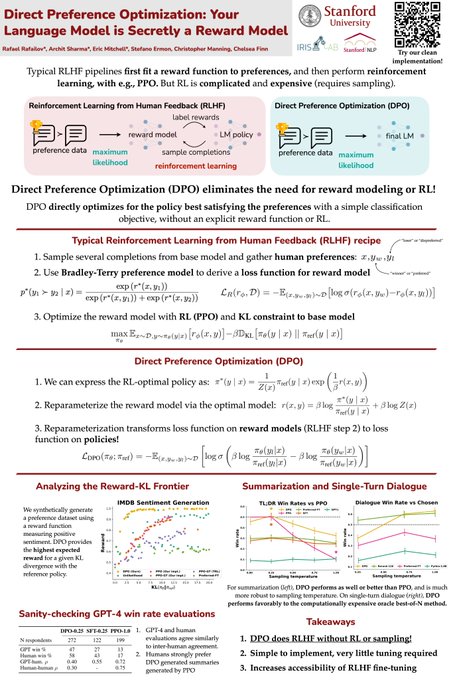

It is only rarely that, after reading a research paper, I feel like giving the authors a standing ovation. But I felt that way after finishing Direct Preference Optimization (DPO) by @rm_rafailov, @archit_sharma97, @ericmitchellai, @StefanoErmon, @chrmanning and @chelseabfinn. This


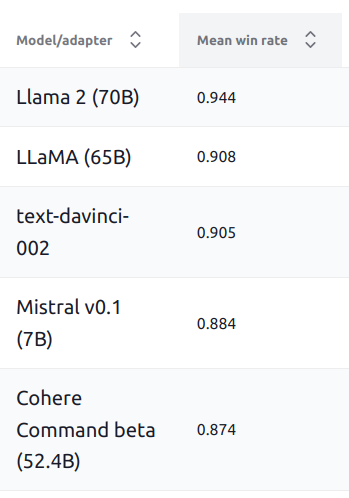


I cannot emphasize enough how good Mistral 7B is. It has been crushing some of the experiments I have been doing and makes a huge difference in fine-tuned performance. Kudos to @MistralAI for creating and open-sourcing this model!


This is the “right” way to interpret DPO, i.e., setting Z(x) to 1 and removing that degree of freedom (DoF).

But I think the narrative of removing RL from RLHF has played its part, so I want to course-correct a bit this new year. Is DPO still RL(HF)? That requires answering: what is RL? 🧵->
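For context on the Z(x) remark, here is a short worked derivation in the notation of the DPO paper's KL-regularized objective (π_ref is the reference policy, β the KL coefficient, r the reward, σ the logistic function). The optimal policy has a closed form with a per-prompt normalizer Z(x); because Bradley-Terry preferences depend only on reward differences for the same prompt, the β log Z(x) term cancels. Reading "setting Z(x) to 1" as picking the reward representative with β log Z(x) = 0 is one natural interpretation, not a quote from the thread.

$$\max_{\pi}\ \mathbb{E}_{x\sim\mathcal{D},\,y\sim\pi(\cdot\mid x)}\big[r(x,y)\big]-\beta\,\mathbb{D}_{\mathrm{KL}}\big[\pi(\cdot\mid x)\,\|\,\pi_{\mathrm{ref}}(\cdot\mid x)\big]$$

$$\pi^*(y\mid x)=\frac{1}{Z(x)}\,\pi_{\mathrm{ref}}(y\mid x)\exp\!\Big(\frac{r(x,y)}{\beta}\Big),\qquad Z(x)=\sum_{y}\pi_{\mathrm{ref}}(y\mid x)\exp\!\Big(\frac{r(x,y)}{\beta}\Big)$$

$$r(x,y)=\beta\log\frac{\pi^*(y\mid x)}{\pi_{\mathrm{ref}}(y\mid x)}+\beta\log Z(x)$$

$$p(y_w\succ y_l\mid x)=\sigma\big(r(x,y_w)-r(x,y_l)\big)=\sigma\Big(\beta\log\frac{\pi^*(y_w\mid x)}{\pi_{\mathrm{ref}}(y_w\mid x)}-\beta\log\frac{\pi^*(y_l\mid x)}{\pi_{\mathrm{ref}}(y_l\mid x)}\Big)$$

Any reward of the form r(x,y) + f(x) yields the same π* and the same preferences, so choosing the member with Z(x) = 1 gives r(x,y) = β log π*(y|x)/π_ref(y|x) directly.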


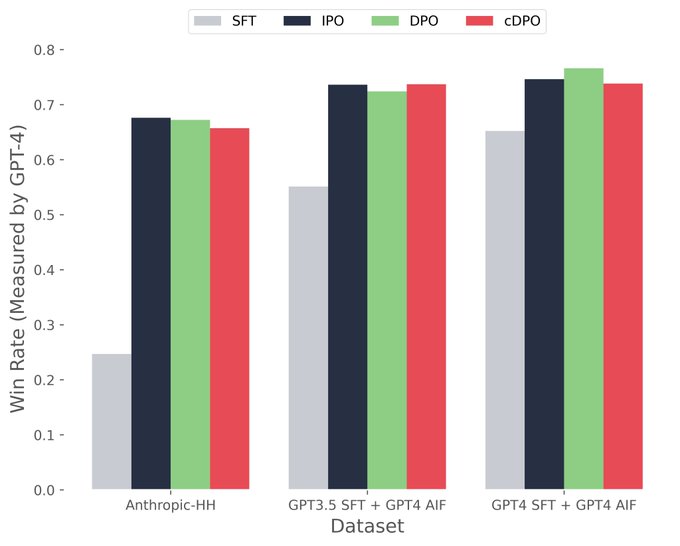

So, since we are posting science straight to Twitter now, @ericmitchellai and I have some updates on potential overfitting in DPO.

TL;DR: we compared DPO to IPO and cDPO (DPO + label noise) on 3 different datasets, and we didn't observe any significant advantage for IPO or cDPO over DPO (yet). 🧵->
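For readers who haven't seen the three objectives side by side, here is a minimal Python sketch of the per-pair losses as they are commonly written: DPO as in the paper, cDPO as DPO with an assumed label-flip probability `eps`, and IPO as a squared loss with regularization strength `tau`. The function names are hypothetical, inputs are assumed to be summed sequence log-probs under the policy and the reference model, and this is an illustration, not the code behind the experiments in the thread.

```python
import math

def log_sigmoid(z: float) -> float:
    # Numerically stable log(sigmoid(z)).
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))

def log_ratio_margin(pol_w: float, ref_w: float, pol_l: float, ref_l: float) -> float:
    # h = [log pi(y_w|x) - log pi_ref(y_w|x)] - [log pi(y_l|x) - log pi_ref(y_l|x)]
    return (pol_w - ref_w) - (pol_l - ref_l)

def dpo_loss(h: float, beta: float) -> float:
    # Logistic loss on beta * h; it keeps shrinking as h grows without bound.
    return -log_sigmoid(beta * h)

def cdpo_loss(h: float, beta: float, eps: float) -> float:
    # DPO with label noise: each preference label is assumed flipped with prob. eps.
    return -(1.0 - eps) * log_sigmoid(beta * h) - eps * log_sigmoid(-beta * h)

def ipo_loss(h: float, tau: float) -> float:
    # Squared loss pulling the margin h toward the fixed target 1 / (2 * tau).
    return (h - 1.0 / (2.0 * tau)) ** 2

# Hypothetical summed log-probs for one preference pair.
h = log_ratio_margin(pol_w=-10.0, ref_w=-12.0, pol_l=-15.0, ref_l=-13.0)  # h = 4.0
print(dpo_loss(h, beta=0.1), cdpo_loss(h, beta=0.1, eps=0.1), ipo_loss(h, tau=0.1))
```

One difference relevant to the overfitting question: the DPO loss keeps decreasing as the margin grows, whereas cDPO is minimized at a finite margin (β·h = log((1 - eps)/eps)) and IPO at h = 1/(2·tau).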


I wrote an accessible post on why unsupervised learning is important for reinforcement learning and robotics, and how our work on DADS is improving skill discovery and making it feasible in real life. Check it out here!

Introducing DADS, a novel unsupervised #ReinforcementLearning algorithm for discovering task-agnostic skills, based on their predictability and diversity, that can be applied to learn a broad range of complex behaviors. Learn more at:
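To make "predictability and diversity" concrete: as we read the DADS paper, a learned skill-dynamics model q(s'|s, z) scores transitions, and the intrinsic reward is log q(s'|s, z) minus the log of its average over L skills z_i drawn from the prior. The sketch below swaps the learned network for a hypothetical toy model (a fixed Gaussian whose mean is shifted by the skill vector); everything other than the reward formula is made up for illustration.

```python
import math

def gaussian_logpdf(x, mean, std):
    # Log-density of an isotropic Gaussian, summed over dimensions.
    return sum(
        -0.5 * ((xi - mi) / std) ** 2 - math.log(std * math.sqrt(2.0 * math.pi))
        for xi, mi in zip(x, mean)
    )

def skill_dynamics_logprob(s_next, s, z, std=0.1):
    # HYPOTHETICAL stand-in for the learned skill-dynamics model q(s'|s, z):
    # skill z shifts the state by z, plus Gaussian noise.
    mean = [si + zi for si, zi in zip(s, z)]
    return gaussian_logpdf(s_next, mean, std)

def logsumexp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def dads_intrinsic_reward(s, z, s_next, prior_skills):
    """log q(s'|s,z) - log((1/L) * sum_i q(s'|s,z_i)), with z_i sampled from p(z)."""
    log_q = skill_dynamics_logprob(s_next, s, z)
    log_q_others = [skill_dynamics_logprob(s_next, s, zi) for zi in prior_skills]
    return log_q - (logsumexp(log_q_others) - math.log(len(log_q_others)))

# Four hypothetical prior samples; the current skill moves the agent along +x.
prior = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]
s, z = [0.0, 0.0], [1.0, 0.0]
print(dads_intrinsic_reward(s, z, [1.0, 0.0], prior))  # predictable and distinct
print(dads_intrinsic_reward(s, z, [0.0, 1.0], prior))  # better explained by another skill
```

A transition that is predictable under the current skill but unlikely under the other sampled skills earns a reward close to log L; a transition that another skill explains better is penalized.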


It feels hard to do robotics research these days. There seems to be this pressure to deliver "large scale" results to keep up with vision/language, to tie your results to the LLM bandwagon and ride the wave


Being able to learn continually without human intervention is critical for building autonomous embodied agents. Introducing MEDAL, an efficient algorithm to do so. Presenting tomorrow at @icmlconf!

overview/arxiv/code:

w/ Rehaan Ahmad, @chelseabfinn

1/


I am very excited about this line of work; lots of cool ideas seem to be possible with this simplification!

more details on arxiv:

code release soon!

a wonderful collaboration w/ @rm_rafailov, @ericmitchellai, @StefanoErmon, @chrmanning, @chelseabfinn


I am rather excited about this work for a couple of reasons:

(1) We have a clear recipe for pre-training on offline data and efficiently fine-tuning language-conditioned policies in the real world.

(2) We may be able to scale real robot datasets with minimal supervision...


Breaking demonstrations into waypoints is a promising way to scale imitation learning methods to longer horizon tasks.

We figured out a way to extract waypoints from demos _without_ any additional supervision so that the robots can do cool stuff like make coffee ☕️->


@4evaBehindSOTA

You can also just do SFT on the preference dataset and then do DPO on the same prompts. The issue is often that the preference distribution is off-policy with respect to the reference distribution, and doing SFT on the preference distribution before DPO helps address that.


This work was accepted to NeurIPS 2021 :)

Come check out our poster “Autonomous Reinforcement Learning via Subgoal Curricula” on Dec 7, 8:30 am PT.


This is a reckless and myopic decision. The stakeholders (us) were not consulted at all, and I do not condone this pivot.


Just checked in to New Orleans for #NeurIPS2023, and this time I'm looking to settle the debate on the best beignets. Unrelated, @rm_rafailov, @ericmitchellai and I will be giving an oral presentation for DPO on Dec 14, 3:50pm CT in Session Oral 6B RL, and a poster on Dec 14, 5pm CT.


Go ask Eric all the hard questions about DPO and how you can fine-tune LLMs on preference data without RL using a simple classification loss. Make him sweat and don't let him dodge any questions.

Curious how to take the RL out of RLHF?

Come check out our #ICML2023 workshop poster for Direct Preference Optimization (aka, how to optimize the RLHF objective with a simple classification loss)!

Meeting Room 316 AB, 10am/12:20/2:45 Hawaii time
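The "simple classification loss" can be sketched as binary cross-entropy on a single logit, β times the difference of policy-vs-reference log-ratios for the chosen and rejected responses, with the label always pointing to the chosen response. The minimal Python below assumes per-token log-probs have already been computed by the policy and a frozen reference model, and that a 0/1 mask marks response tokens; the dictionary keys and function names are hypothetical.

```python
import math
from typing import List, Sequence

def sequence_logprob(token_logprobs: Sequence[float], mask: Sequence[int]) -> float:
    # Sum log-probs over response tokens only (mask == 1); prompt tokens are skipped.
    return sum(lp for lp, m in zip(token_logprobs, mask) if m)

def bce_with_logit(logit: float, label: int) -> float:
    # Stable binary cross-entropy: -[y*log(sigmoid(l)) + (1-y)*log(1-sigmoid(l))].
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))

def dpo_batch_loss(batch: List[dict], beta: float = 0.1) -> float:
    losses = []
    for ex in batch:
        chosen = sequence_logprob(ex["pol_w"], ex["mask_w"]) - sequence_logprob(ex["ref_w"], ex["mask_w"])
        rejected = sequence_logprob(ex["pol_l"], ex["mask_l"]) - sequence_logprob(ex["ref_l"], ex["mask_l"])
        # The "classifier" predicts "chosen beats rejected" from logit beta * h; the label is 1.
        losses.append(bce_with_logit(beta * (chosen - rejected), 1))
    return sum(losses) / len(losses)

example = {
    "pol_w": [-1.0, -0.5, -0.5], "ref_w": [-1.0, -1.0, -1.0], "mask_w": [0, 1, 1],
    "pol_l": [-1.0, -2.0, -2.0], "ref_l": [-1.0, -1.0, -1.0], "mask_l": [0, 1, 1],
}
print(dpo_batch_loss([example], beta=0.1))  # h = 3.0, so this is -log(sigmoid(0.3))
```

In practice this runs on tensors in a deep-learning framework, but the per-pair arithmetic is the same: no separate reward model and no sampling from the policy during training.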


Happening in ~3 hours! Come find out how all these instruction-following models are trained:


There isn’t a lot of robot data to train on. Human-collected demonstrations are important ✅ but the collection does not scale ❌. What if the robots could collect data autonomously? Check out my post on the steps and challenges towards 100x-ing robot learning data in the real world:

Human-supervised demonstration data is hard to collect for embodied agents in the real world. Learn how we can enable robots to autonomously learn in the real world for 100x larger datasets in @archit_sharma97's blog post! 🤖


Also fwiw, I wanted to title the post “Leave your agents unsupervised with DADS”. But I guess the editors do not like dads jokes ... strange for an org with @JeffDean at its fore.


Can language models search in context? Turns out that explicitly training on search data and fine-tuning with *reinforcement learning* can substantially improve the ability of the model to search. Check out this work led by @gandhikanishk!


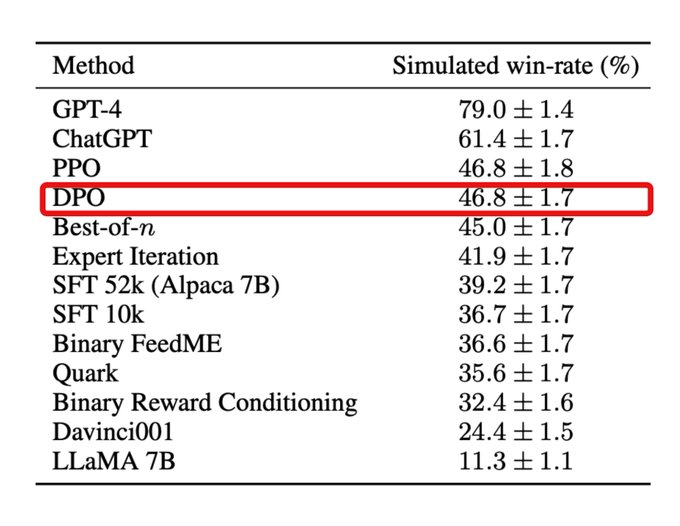

Some explicit evidence comparing DPO vs PPO :) My bias rn is that given tuned implementations for PPO and DPO and "right" preference datasets, DPO should be sufficiently performant. It would be good to find situations where PPO's computational complexity is justified over DPO.


What if we want to change an LLM’s behavior using high-level verbal feedback like “can you use less comments when generating python code”? Check out our work on RLVF led by @at_code_wizard on how to fine-tune LLMs on such feedback without **overgeneralizing**!


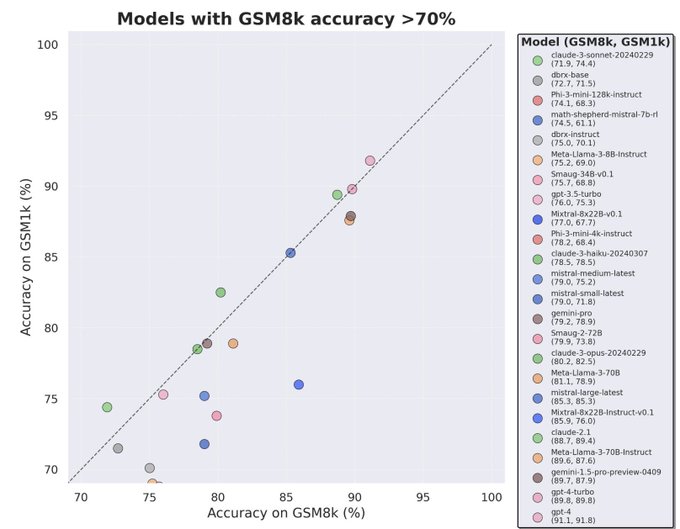

Have people tried encrypting their benchmarks?

download -> decrypt -> evaluate

This should quell any perf boosts from benchmarks leaking into training data, as long as LLM providers are not *intentionally* doping their models

ofc till AGI makes encryption meaningless

Academic benchmarks are losing their potency. Moving forward, there’re 3 types of LLM evaluations that matter:

1. Privately held test set but publicly reported scores, by a trusted 3rd party who doesn’t have their own LLM to promote. @scale_AI’s latest GSM1k is a great example.
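The download -> decrypt -> evaluate flow could look roughly like the sketch below. It is a toy: the SHA-256 counter-mode keystream and HMAC tag are built from the Python standard library only so the example runs without dependencies, and a real release should use a vetted authenticated cipher from a maintained cryptography library instead. The key could presumably be published next to the evaluation script, since the aim is only to keep plaintext test items out of web-scraped training data, not to keep them secret. All function names are hypothetical.

```python
import hashlib
import hmac
import json
import os

def _subkey(key: bytes, label: bytes) -> bytes:
    # Derive separate keys for encryption and authentication (toy derivation).
    return hashlib.sha256(label + key).digest()

def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # TOY keystream: SHA-256(key || nonce || counter) blocks. Not audited crypto.
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])

def encrypt_benchmark(examples: list, key: bytes) -> bytes:
    """Serialize benchmark items to JSON and encrypt them (publisher side)."""
    plaintext = json.dumps(examples).encode("utf-8")
    nonce = os.urandom(16)
    stream = _keystream(_subkey(key, b"enc"), nonce, len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, stream))
    tag = hmac.new(_subkey(key, b"mac"), nonce + ciphertext, hashlib.sha256).digest()
    return nonce + tag + ciphertext  # 16-byte nonce, 32-byte tag, then ciphertext

def decrypt_benchmark(blob: bytes, key: bytes) -> list:
    """Check the tag, then decrypt (evaluator side, right before evaluation)."""
    nonce, tag, ciphertext = blob[:16], blob[16:48], blob[48:]
    expected = hmac.new(_subkey(key, b"mac"), nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("wrong key or corrupted benchmark file")
    stream = _keystream(_subkey(key, b"enc"), nonce, len(ciphertext))
    return json.loads(bytes(c ^ k for c, k in zip(ciphertext, stream)).decode("utf-8"))
```

The publisher uploads only the output of `encrypt_benchmark(items, key)`; evaluators run `decrypt_benchmark(blob, key)` locally, right before scoring.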


@Teknium1

@huggingface

Nice set of experiments! I think the range of betas for IPO needs to be much smaller for better performance.

For KTO, has anyone run an experiment with unpaired preferences? It is generally pretty hard, so it would be quite cool to see some progress on that.


@mblondel_ml

1/ My tweet simplifies the discussion; our main contribution is that the OG RLHF prob statement can be solved exactly with supervised learning, when viewed from a weighted regression POV. Our derivation yields a principled choice for ranking loss (amongst many possible ones).
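A small numeric check of the closed form behind this, on a hypothetical prompt with three candidate responses: the KL-regularized optimum reweights the reference policy by exp(r/β), which is also the target a reward-weighted regression on reference samples converges to in the idealized infinite-data limit, and β·log(π*/π_ref) recovers the reward up to the per-prompt constant β·log Z(x). Values and function names are made up for illustration.

```python
import math

def optimal_policy(ref_probs, rewards, beta):
    """pi*(y|x) = pi_ref(y|x) * exp(r(x,y) / beta) / Z(x) over a finite set of responses."""
    weights = [p * math.exp(r / beta) for p, r in zip(ref_probs, rewards)]
    Z = sum(weights)
    return [w / Z for w in weights], Z

def implied_reward(policy_probs, ref_probs, beta):
    # beta * log(pi / pi_ref) equals r(x, y) - beta * log Z(x): the reward up to a per-prompt shift.
    return [beta * math.log(p / q) for p, q in zip(policy_probs, ref_probs)]

ref = [0.5, 0.3, 0.2]         # hypothetical pi_ref over three responses
rewards = [1.0, 0.0, -1.0]    # hypothetical r(x, y)
beta = 0.5
pi_star, Z = optimal_policy(ref, rewards, beta)
implied = implied_reward(pi_star, ref, beta)
# Reward differences (all that Bradley-Terry preferences depend on) are preserved.
print(implied[0] - implied[1], rewards[0] - rewards[1])
```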


Sasha is really setting the standard for research videos!

Check out the DROID dataset — robot learning has been missing data collection in diverse natural scenes!


@ericmitchellai

If someone wants an experiment to go along with this, I ran something which seemed to indicate that this might be helpful. Notice how adding the noise (orange curve) regularizes the DPO margin and DPO reward accuracy to be reasonable. For this experiment, the win rate without and with noise was 20% and 45%, respectively.
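For reference, the two quantities mentioned here can be computed from DPO's implicit reward r̂ = β·(log π - log π_ref): the margin is r̂(chosen) - r̂(rejected), and reward accuracy is the fraction of pairs with a positive margin. A minimal sketch, assuming summed sequence log-probs are already available; the function name and tuple layout are hypothetical.

```python
from typing import List, Tuple

def dpo_reward_stats(pairs: List[Tuple[float, float, float, float]], beta: float = 0.1):
    """pairs: (policy_logp_chosen, ref_logp_chosen, policy_logp_rejected, ref_logp_rejected).

    Returns (mean margin, reward accuracy) under the implicit reward beta * (log pi - log pi_ref).
    """
    margins = []
    for pol_w, ref_w, pol_l, ref_l in pairs:
        r_chosen = beta * (pol_w - ref_w)
        r_rejected = beta * (pol_l - ref_l)
        margins.append(r_chosen - r_rejected)
    mean_margin = sum(margins) / len(margins)
    accuracy = sum(m > 0 for m in margins) / len(margins)
    return mean_margin, accuracy
```

A reward accuracy pinned near 1.0 together with a steadily growing margin on training pairs is one pattern that can hint at the overfitting discussed in this thread; whether it actually hurts generations still has to be checked with evaluations such as the win rates above.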


This is a great talk! My research these days is very much motivated by moving towards truly autonomous agents. Check it out if you are interested in building generally capable agents for the real world.


Sometimes I wonder what it would have been like to talk to von Neumann. I'm glad I can hear @jacobcollier play music to get a sense of what it would have been like:


with the amazing team: @sedrickkeh2, @ericmitchellai, @chelseabfinn, @karora4u, @tkollar!

the full paper:

the code:


Paper:

website:

shoutout to my amazing collaborators: @abhishekunique7, @svlevine, @hausman_k, @chelseabfinn


Try out our simple, principled supervised learning recipe for learning from preference data:


@scottniekum

If you are still troubled about this question in a few days, we will have a paper soon that you might like :)


@sherjilozair

@natolambert

@finbarrtimbers

As an example, PPO and various actor-critic algorithms can be understood to minimize a reverse KL divergence, whereas weighted regression methods often imply a forward KL divergence. This has implications for mode-covering / collapse.
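A toy numeric illustration of the mode-covering vs mode-seeking point, using hypothetical discrete distributions: against a bimodal target p, forward KL(p || q), the direction that maximum-likelihood style weighted regression minimizes, prefers a spread-out q, while reverse KL(q || p) prefers a q that commits to one mode. This only compares two fixed candidates; it does not run any optimizer.

```python
import math

def kl(a, b):
    """KL(a || b) for discrete distributions on the same support (all b_i > 0)."""
    return sum(ai * math.log(ai / bi) for ai, bi in zip(a, b) if ai > 0)

# Hypothetical bimodal target distribution over 5 outcomes.
p = [0.45, 0.03, 0.04, 0.03, 0.45]
# Two candidate approximations.
q_cover = [0.2] * 5                      # spreads mass over both modes
q_mode = [0.88, 0.03, 0.03, 0.03, 0.03]  # commits to a single mode

forward = {"cover": kl(p, q_cover), "mode": kl(p, q_mode)}  # KL(p || q)
reverse = {"cover": kl(q_cover, p), "mode": kl(q_mode, p)}  # KL(q || p)
print("forward KL prefers:", min(forward, key=forward.get))  # → cover
print("reverse KL prefers:", min(reverse, key=reverse.get))  # → mode
```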


Had a fun time talking with @kanjun and @joshalbrecht!! Check it out for an in-depth overview of why autonomous agents are important, what the challenges are in building them, and some (spicy? lukewarm?) takes about ML research and its ecosystem

Learn about agents that can figure out what to do in unseen situations without human help with @archit_sharma97 from Stanford!


Our work was accepted to #RSS2020!

I’m really excited for the future of unsupervised learning for robotics.


@teortaxesTex

This paper flew under the radar. Some of the points they are making seem pretty nice (lack of effectiveness of KL reg for finite data). But, I am surprised by their claim that DPO overfits while conventional reward models underfit -- this claim seems central but unsubstantiated


@yingfan_bot

Great question, something we are in the process of understanding as well. It's important to note that you can continue to use the language model as a reward model, and we have no reason to believe that it will generalize worse.


@abacaj

Two things:

(1) try early stopping, potentially even before an epoch

(2) try doing SFT on preferred completions before doing DPO (or SFT on both preferred and dispreferred)
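A minimal sketch of the data side of suggestion (2), assuming preference data stored as (prompt, chosen, rejected) triples; the class and function names are hypothetical. The resulting SFT model would then typically serve as both the DPO initialization and the reference policy π_ref.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class PreferencePair:
    prompt: str
    chosen: str
    rejected: str

def sft_examples_from_preferences(
    pairs: List[PreferencePair], include_rejected: bool = False
) -> List[Tuple[str, str]]:
    """Build (prompt, completion) SFT examples from preference data.

    By default only preferred completions are used; include_rejected=True also
    trains on dispreferred completions, the second variant mentioned above.
    """
    examples = []
    for pair in pairs:
        examples.append((pair.prompt, pair.chosen))
        if include_rejected:
            examples.append((pair.prompt, pair.rejected))
    return examples
```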


@generatorman_ai

That’s a great question! We have evaluations on Anthropic HH dataset (only single-turn dialogue). However, the questions around diversity of generations are still valuable and interesting to understand.


As usual, this work would not have been possible without my amazing collaborators: @imkelvinxu, N. Sardana, @abhishekunique7, @hausman_k, @svlevine, @chelseabfinn.


@teortaxesTex @ericmitchellai @yacineMTB @erhartford @Teknium1 @ethayarajh @natolambert @abacaj @arankomatsuzaki @_lewtun @AnthropicAI @akbirthko @thomasahle @cohere @tszzl @rasbt @ClementDelangue @Thom_Wolf @main_horse @4evaBehindSOTA

Eric and I have discussed this several times; feel free to check out the experiment snapshot I posted in the comments :)


@Teknium1

@huggingface

Oh implementation wise it is fine, I haven’t seen a model improve meaningfully from *just* unpaired data. I’d love to see some experiments!


@Teknium1

In all seriousness, if you have preference data, DPO fine-tuning runs are a minuscule fraction of pre-training costs. So, I would just send it. But, do some SFT first :)


@VAribandi

@4evaBehindSOTA

Yeah, this balance is tricky. I am not aware of controlled experiments for this, but usually I do only 1 epoch of SFT on preferred data.


@norabelrose

There is a fair amount of bias against change from what I have gathered re: DPO vs RLHF in places that started aligning their models pre-DPO. But, this is good for newer places, they can build their alignment pipelines off of DPO :)


@teortaxesTex

@Teknium1

I am generally critical of this practice of using AI feedback indiscriminately (esp when the feedback is from the same model you are evaluating under), but the paper's algorithmic improvements seem nice and meaningful -- someone should try it on some real RLHF settings


@lucy_x_shi did an incredible job leading the experiments. Look out for her PhD app this cycle, you don't want to miss it!

And ofc, @tonyzzhao created this surprisingly easy and effective bi-arm setup, which allowed us to go from simulation to real-robot results in < 1 week!


“Uncertainty about the world doesn’t imply uncertainty about the best course of action!” - @slatestarcodex


@sherjilozair

@natolambert

@finbarrtimbers

Generally agree with the sentiment in this thread, but Q-learning, actor-critic and weighted regression methods have differences in efficiency and optima you reach for finite sample + FA. I wouldn't rule out their being meaningful differences for LLMs between method classes.
