Ian Osband

@IanOsband

Followers: 8,170

Following: 367

Media: 71

Statuses: 567

Research scientist at OpenAI working on decision making under uncertainty.

Joined July 2012


Pinned Tweet

A truth-seeking AI needs to know what it doesn't know.

This requires an *epistemic* neural network.

... @ibab_ml knows this; he covets "the epinet" for Grok.

... could this be why @elonmusk is suing OpenAI?

... is this what @roon saw?

Listen to @TalkRLPodcast to find out:

6

3

31

This feels like a real breakthrough:

Take the same basic algorithm as AlphaZero, but now *learning* its own simulator.

Beautiful, elegant approach to model-based RL.

... AND ALSO STATE OF THE ART RESULTS!

Well done to the team at @DeepMindAI #MuZero

5

196

751

Are you interested in #ThompsonSampling and #exploration, but looking for a good reference?

A Tutorial on Thompson Sampling

This tutorial covers the algorithm and its applications, illustrating the concepts through a range of examples... check it out!

2

87

414
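For readers new to the idea, here is a minimal sketch of Thompson sampling on a Bernoulli bandit, assuming a Beta(1, 1) prior on each arm's success rate. The arm probabilities and the function name `thompson_sampling` are illustrative, not taken from the tutorial. At each step the agent draws one plausible success rate per arm from its posterior and pulls the arm whose draw is highest, so exploration comes directly from posterior uncertainty.

```python
import numpy as np

def thompson_sampling(true_probs, n_steps, seed=0):
    """Beta-Bernoulli Thompson sampling on a multi-armed bandit.

    true_probs: hypothetical success probability of each arm (hidden from the agent).
    Returns how many times each arm was pulled.
    """
    rng = np.random.default_rng(seed)
    k = len(true_probs)
    alpha = np.ones(k)   # Beta(1, 1) prior: alpha = 1 + observed successes
    beta = np.ones(k)    # beta = 1 + observed failures
    pulls = np.zeros(k, dtype=int)
    for _ in range(n_steps):
        theta = rng.beta(alpha, beta)      # one posterior sample per arm
        arm = int(np.argmax(theta))        # act greedily w.r.t. the sample
        reward = float(rng.random() < true_probs[arm])
        alpha[arm] += reward               # conjugate posterior update
        beta[arm] += 1.0 - reward
        pulls[arm] += 1
    return pulls

pulls = thompson_sampling([0.2, 0.5, 0.8], n_steps=2000)
print(pulls)   # most pulls should go to the last (best) arm
```

As the posterior for the weaker arms concentrates below the best arm, their samples rarely win, so pulls shift to the best arm without any hand-tuned exploration schedule.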

Have you heard of "RL as Inference"?

... you might be surprised that this framing completely ignores the role of uncertainty!

(confusing, since it talks a lot about "posteriors")

Our #ICLR spotlight tries to make sense of this:

7

69

316

Really excited to release #bsuite to the public!

- Clear, scalable experiments that test core #RL capabilities.

- Works with OpenAI gym, Dopamine.

- Detailed colab analysis

- Automated LaTeX appendix

Example report:

2

53

205

Really excited about this research...

The culmination of a lot of people's hard work!

- Cool insights on marginal/joint predictions

- Opensource code for a new testbed in the field

... I definitely learnt a lot working on this, you might too!

5

22

169

A lot of the value from ChatGPT comes in mundane/mindless drudgery, not deep thinking.

People like @GaryMarcus overlook the genuine value here:

- installing nvidia drivers

- sorting out messed up ruby version

- setting up custom domain name

Many such cases.

25

6

130

Einstein's paper on Brownian motion:

~4 pages A4, easy to follow, Nobel Prize

Self-Normalizing Neural Networks:

>100 pages, reams of numerical equations, SELU=slightly bent RELU

... @zacharylipton I don't know what you mean 🤷‍♂️

5

15

115

Excited to share some of our recent work!

Fine-Tuning Language Models via Epistemic Neural Networks

TL;DR: prioritise getting labels for your most *uncertain* inputs, match performance with 2x less data & reach better final performance

Discussion (1/n)

4

19

115
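A rough sketch of "prioritise labels for your most uncertain inputs", assuming a small ensemble stands in for the epistemic model (the paper itself uses epistemic neural networks; this is a simplification) and using disagreement across members as the uncertainty score. The function `select_most_uncertain` and the toy probabilities are hypothetical.

```python
import numpy as np

def select_most_uncertain(member_probs, k):
    """Pick the k pool inputs on which the ensemble disagrees most.

    member_probs: shape (n_members, n_pool, n_classes), each member's predicted
    class probabilities for every unlabeled input.
    Disagreement = variance across members, summed over classes.
    """
    disagreement = member_probs.var(axis=0).sum(axis=-1)   # shape (n_pool,)
    return np.argsort(-disagreement)[:k]                   # largest first

# Toy pool: 3 members, 4 unlabeled inputs, 2 classes (made-up numbers).
member_probs = np.array([
    [[0.9, 0.1], [0.5, 0.5], [0.2, 0.8], [0.99, 0.01]],
    [[0.9, 0.1], [0.5, 0.5], [0.9, 0.1], [0.98, 0.02]],
    [[0.9, 0.1], [0.5, 0.5], [0.1, 0.9], [0.99, 0.01]],
])
chosen = select_most_uncertain(member_probs, k=1)
print(chosen)
```

Input 1 has the highest predictive entropy, but every member agrees on 50/50, so it scores zero: that is irreducible noise, not missing knowledge. Input 2 is where the members disagree, which is the kind of uncertainty a new label can actually resolve.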

Excited to (finally) present our work on Epistemic Neural Networks as a spotlight for #NeurIPS23

"Get better uncertainty than an ensemble size=100 at cost less than 2x base models"

Poster 1924

5

11

102

Big thanks to @pbloemesquire for a great tutorial:

Transformers from scratch

If (like me) you're excited about #GPT3 but found yourself waving your hands through various NN diagrams on self-attention... this is the cure! 🙌

1

18

96

"One weird trick" for DQN in large (continuous) action spaces:

- Initialize uniform action-sampling distribution.

- Choose sampled action with highest Q.

- Train sampling to produce "best action" + also some entropy.

- ... Works surprisingly well!

Great stuff @dwf, @VladMnih!

1

9

87
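A toy sketch of the sampled-action trick, under heavy simplifying assumptions: a single fixed state, a hand-written `q_value` standing in for a trained DQN, and a 1-D Gaussian proposal in place of a learned, state-conditioned sampler. Pulling the mean toward the best sample while flooring the standard deviation is a crude stand-in for "train sampling to produce the best action + also some entropy". It is not the authors' exact update.

```python
import numpy as np

def q_value(actions):
    # Hypothetical stand-in for a learned Q(s, a) at one fixed state,
    # maximised at a = 0.3. In the real method this would be a DQN.
    return -(actions - 0.3) ** 2

def select_action(mu, sigma, rng, n_samples=16):
    """Draw candidate actions from the proposal and keep the one with highest Q."""
    candidates = np.clip(rng.normal(mu, sigma, size=n_samples), -1.0, 1.0)
    return candidates[np.argmax(q_value(candidates))]

rng = np.random.default_rng(0)
mu, sigma = 0.0, 1.0     # wide initial proposal over the action range [-1, 1]
lr, decay = 0.2, 0.95
min_sigma = 0.05         # floor on the spread: crude stand-in for an entropy term
for _ in range(200):
    best = select_action(mu, sigma, rng)
    mu += lr * (best - mu)                  # pull the proposal toward the best sample
    sigma = max(min_sigma, decay * sigma)   # narrow it, but never below the floor
print(mu, sigma)
```

The floor matters: without it the proposal would collapse to a point, and if Q later changed (as it does while a DQN trains) the sampler could no longer find better actions.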

Totally agree:

The part that is hard for humans (symbolically solving the cube) is pretty easy for computers...

The part that is totally trivial for humans (twisting a cube with two hands) is still essentially impossible for RL robotics!

7

7

82

@WiMLworkshop @wimlds @QueerinAI @AiDisability @black_in_ai @Khipu_AI @DeepIndaba @_LXAI @women_in_ai

... we need you!

The Efficient Agent Team is hiring:

- New DeepMind group in California

- Focus on RL, data efficiency and rich feedback

- Looking to scale up theory --> practice

If you’re advertising a machine learning or AI scholarship or job on Twitter, please consider announcing it to @QueerinAI @AiDisability @black_in_ai @Khipu_AI @DeepIndaba @_LXAI @WiMLworkshop @women_in_ai and other groups who care about diversity and inclusion. Thanks

1

61

295

5

19

78

Thought-provoking book, thanks @demishassabis:

The Order of Time

TL;DR:

Time as we know it (fundamentally ordered from past to future) does not exist.

Our perception of time is a side-effect of us residing in a low-entropy region of space + 2nd law.

1

5

75

We just updated our @NipsConference spotlight paper

"Randomized Prior Functions for Deep Reinforcement Learning"

If you're too lazy to read the paper... then just head to our accompanying website - we have #CODE + demos you can run in the browser!

2

11

68

Amazing work from everyone on the team... incredible what a great team working together can accomplish.

... did we mention that this is also available FOR FREE 🫡

4

2

66

This paper is not long, and very easy to read... so I definitely recommend it.

The combination of:

1) Simple and targeted experiments

2) Sane and sensible writing

3) Excellent figures

Helps provide a lot of insight into #DeepLearning - more please!

0

13

59

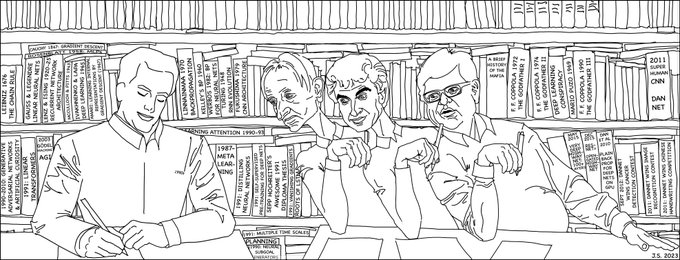

It says a lot that I had to honestly check if this was a troll account...

As expected:

- Homology detection is not the same as protein prediction.

- People used neural nets for this before 2007.

- AlphaFold is not using an LSTM.

... @SchmidhuberAI it's not a good look for you!

1

1

51

Extremely magnanimous of your "laudation of Kunihiko" to clarify that it was in fact @SchmidhuberAI that invented the Transformer in 1991! 🤣

#annusmirabilis #ididitfirst #cookielicking

0

4

45

Fantastic talk from @SebastienBubeck on the "Physics of AI":

- Intelligence has emerged: why? how?

- Let's study this with *controlled experiments* and *toy models*

- Clean and clear insights that peer slightly behind the magic curtain

1

8

44

As part of the #bsuite release, we also include bsuite/baselines:

These are simple, clear, and correct agent implementations in #TF1, #TF2 and #JAX... many in under 100 lines of code!

2

13

44

And once you've been through @pbloemesquire's tutorial, you have to check out @karpathy's tutorial code:

Focus on the key points, #simple, #sane, and such a valuable resource in teaching... this stuff is really great!


1

3

43

@_aidan_clark_ this is a classic case of conflating *a bad RL algorithm* (policy gradient?) vs *the RL problem*...

You're highlighting efficient exploration as one of the outstanding problems to solve - I agree.

... and that's something that's only really studied in RL!

1

1

42

Paper summary:

- Tabular Q-learning converges to optimal with infinite data.

- You might hope Q-learning + function approx converges similarly to the best policy in that class.

- But actually that's not true... Basically because MDP with function approx ~= POMDP

#NeurIPS2018

Congratulations to Google researchers @tylerlu, @CraigBoutilier and Dale Schuurmans, whose paper “Non-delusional Q-learning and Value Iteration” has received a #NeurIPS2018 Best Paper Award! Check it out at .

2

57

260

2

5

42
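To make the first bullet of the summary concrete, here is a minimal tabular Q-learning sketch on a hypothetical 5-state chain (reward 1 for reaching the right end, discount 0.9). With a uniformly random behaviour policy every state-action pair keeps being visited, so the table converges and the greedy policy moves right everywhere. All names are illustrative; this only shows the tabular case, not the function-approximation failure the paper studies.

```python
import numpy as np

N_STATES, GOAL = 5, 4     # states 0..4 on a chain; state 4 is terminal
LEFT, RIGHT = 0, 1

def step(state, action):
    """Deterministic chain: reward 1 only when the goal state is reached."""
    nxt = max(state - 1, 0) if action == LEFT else min(state + 1, GOAL)
    return nxt, float(nxt == GOAL), nxt == GOAL

def q_learning(n_episodes=2000, alpha=0.1, gamma=0.9, max_steps=50, seed=0):
    rng = np.random.default_rng(seed)
    q = np.zeros((N_STATES, 2))
    for _ in range(n_episodes):
        state = 0
        for _ in range(max_steps):
            action = int(rng.integers(2))   # uniform random behaviour (off-policy)
            nxt, reward, done = step(state, action)
            target = reward + (0.0 if done else gamma * q[nxt].max())
            q[state, action] += alpha * (target - q[state, action])
            state = nxt
            if done:
                break
    return q

q = q_learning()
greedy = q[:GOAL].argmax(axis=1)   # greedy action in each non-terminal state
print(greedy)                      # expected: all RIGHT (1)
```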

Great talk from @jacobmbuckman on STEVE - stochastic ensemble value expansion.

"If you want to roll forward a model, it's important to incorporate uncertainty estimates - and bootstrap ensemble works well for this"

Nice work, and very clear+engaging talk!

#NeurIPS2018

0

6

41

If you are submitting an RL paper to AAAI, you should include a #bsuite evaluation (+ automated LaTeX appendix).

- Paper:

- Github:

- Report:

If you're interested but having trouble, then get in touch!

1

0

39

Meet the professors at @MILAMontreal:

… …

I knew #MachineLearning was pretty hyped... but now it is getting #HYPE!

@slashML @boredyannlecun @dwf

2

4

38

It's nice that @SchmidhuberAI is using his fame/expertise/brainpower to tackle the important issues:

❌ COVID-19 Pandemic

❌ Black Lives Matter

❌ Global Warming

❌ Existential risks of AI

❌ Any research post "annus mirabilis"

✔️ The 2018 Turing award

... really?

ACM lauds the awardees for work that did not cite the origins of the used methods. I correct ACM's distortions of deep learning history and mention 8 of our direct priority disputes with Bengio & Hinton.

#selfcorrectingscience

13

67

314

1

0

38

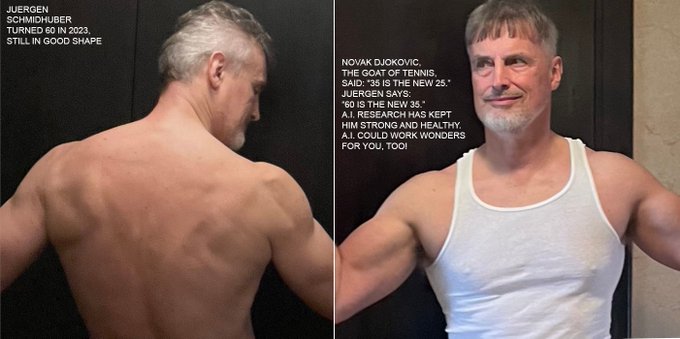

I hope this becomes a new form of copypasta... will we see more ML researchers posting thirst traps?

The GOAT of tennis @DjokerNole said: “35 is the new 25.” I say: “60 is the new 35.” AI research has kept me strong and healthy. AI could work wonders for you, too!

165

152

2K

1

2

37

According to @ylecun #neurips2018

RL gets one scalar = weak signal

"self supervised" = strong signal

But to succeed in RL you have to understand state, transitions, and how the world works!

Rewards help shape what you care about, but it's so very far from the "only" signal in RL

0

6

35

Great talk from Ben Van Roy at the #NeurIPS2019 workshop on optimization for RL.

Is it time for the field to move beyond "MDP"?

Thinking about "agent state" might be a better perspective for learning in complex worlds... the real world "state" is just too complex!

1

3

36

Congratulations @marcgbellemare - a huge achievement and a great success for RL in the real world!

1

2

36

#MachineLearning conference review burdens are getting out of control... too many low-quality submissions + reviews!

Here's a controversial solution:

- $100 fee to submit a paper for review

- Waived for papers that pass some "quality bar"

- Use proceeds to fund D&I initiatives

5

3

33

This is why you need @PlotNine:

It's @matplotlib under the hood but uses a "grammar of graphics" that copies @hadleywickham's #ggplot2 from R... Almost like #keras to #tf

Seriously only takes 1 day to get up to speed... You will not regret it.

1

5

31

Come see our #bsuite poster at the #NeurIPS2019 Deep RL Workshop... Bring your questions!

West Exhibition Hall C

1

6

31

@__nmca__ @JAslanides @geoffreyirving

If you're interested in:

- Uncertainty

- Alignment

- RL from human feedback

- Language models

Recent papers:

Consider applying for internships/positions in the "Efficient Agent Team" working in MTV (with @ibab_ml @goodfellow_ian nearby) ;D

(5/5)

1

9

31

100% another great paper in this area from @mrtz @OriolVinyalsML and more:

Understanding Deep Learning Requires Rethinking generalization

I love something that gets the conversation (or controversy) going! 😜

Large model != Poor generalization

1

5

30

Great first day of the conference - thanks so much @marcgbellemare and @LihongLi20!

Particularly recommend the panel discussion:

Top experts discussing "offline reinforcement learning": @EmmaBrunskill @tengyuma @svlevine @ofirnachum @pcastr 🔥

Excited to kick off the Deep Reinforcement Learning theory workshop at the Simons Institute today, co-organized with @LihongLi20. Today's topic is Offline reinforcement learning 🔥 Schedule is here:

1

20

99

1

2

30

I actually don't think this is controversial... And I'm definitely "team Bayes"

Yes, an independent Gaussian prior over NN weights is nonsense... We know the *interaction* is the most important part!

But there's still huge potential for effective Bayesian deep learning!

1

3

28

Welcome @SchmidhuberAI to Twitter!

Approaching 10k followers... But yet to follow a single account... Who will be first?

Ivakhnenko and Fukushima seem more likely than @ylecun and @geoffreyhinton.

0

1

27

Thanks a lot to @robinc for hosting me + such a great job driving the discussion!

As mentioned in the podcast, I am especially interested to hear from people who are NOT on the same page as me...

Episode 49: Ian Osband @IanOsband research scientist at OpenAI (ex @GoogleDeepMind, @Stanford) on decision making under uncertainty, information theory in RL, uncertainty, joint predictions, epistemic neural networks and more!

4

8

64

0

2

25

This "double descent" keeps cropping up... it's quite a funny thing!

I like this paper from Mei+Montanari:

Precise asymptotics for a stylized MLP.

0

5

26

I missed this paper when it came out!

Really glad that @vincefort brought it to my attention...

Even if ensemble + prior function is not the precise posterior at least it's not overconfident... and it will eventually concentrate with data. 🥳

1

4

26
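A minimal sketch of "ensemble + prior function" in the linear-Gaussian case, where the claim can be checked exactly: each member adds a fixed, never-trained random prior to a trainable part fit by ridge regression on noise-perturbed targets. In this special case each member is an exact posterior sample; for deep networks it is only an approximation. The data generator, dimensions and scales are assumptions for illustration.

```python
import numpy as np

def train_ensemble(X, y, n_members=30, prior_scale=1.0, noise_scale=1.0, seed=0):
    """Linear ensemble with randomized prior functions.

    Member k predicts x @ (theta_k + prior_k): prior_k is a fixed random prior
    function (never trained); theta_k is fit by ridge regression to the residual
    between perturbed targets and the prior's predictions.
    """
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    lam = noise_scale ** 2 / prior_scale ** 2
    A = X.T @ X + lam * np.eye(d)
    weights = []
    for _ in range(n_members):
        prior = rng.normal(0.0, prior_scale, size=d)              # fixed random prior
        y_pert = y + rng.normal(0.0, noise_scale, size=len(y))   # perturbed targets
        theta = np.linalg.solve(A, X.T @ (y_pert - X @ prior))   # train only theta
        weights.append(theta + prior)
    return np.array(weights)   # shape (n_members, d)

def make_data(n, seed):
    # Hypothetical linear data with unit-variance noise.
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n, 3))
    y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(size=n)
    return X, y

x_test = np.array([1.0, 1.0, 1.0])
spread_small = (train_ensemble(*make_data(5, seed=1)) @ x_test).std()
spread_large = (train_ensemble(*make_data(500, seed=1)) @ x_test).std()
print(spread_small, spread_large)   # spread shrinks as data grows
```

With little data the prior keeps the members spread out (not overconfident); with more data the ridge term dominates and the members concentrate.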

You could still drive efficient exploration in an Actor-Critic algorithm though... and use policy gradient as a sub-procedure.

For example, you could keep a distribution (or ensemble) of plausible value functions, and optimize a policy for each of these.

@adityamodi94 @nanjiang_cs @HaqueIshfaq

Actually, I don't think this is a coincidence...

If you want to explore efficiently, you first need to be able to reason counterfactually: "what might things be like if I went and did XYZ?".

Basic policy gradient is not going to be able to do this effectively.

0

0

4

0

6

24

A giant bag of ham has been delivered to my work addressed to me, and I don't know why... Where do I go from here?

#hamgate

7

0

25

We also #opensourced all the #code for the book:

Recently upgraded from Py2 -> Py3 and made sure everything was still running as expected 😆

As an added bonus, you can now run this all in your browser without installing anything!

2

2

24

One problem that hierarchical RL has is that it's not totally clear how it *could* pan out convincingly...

(Separate from standard RL)

If we could distil some simple examples that embody what it means to be "good at hierarchical RL" that would be a great first step!

5

1

24

Missed this one at the time @SebastienBubeck!

The videos from the #ICML2018 workshop on #exploration are all online:

Please get in touch - especially if there are parts you disagree with! ;D

0

5

24

@GaryMarcus We need a new "geometric intelligence" Gary!

Looking forward to when you blow these LLMs out of the water 🙏

3

1

24

Congratulations @OriolVinyalsML @maxjaderberg and everyone else on the team!

Amazing stuff 🤖🏆🏅

0

1

24

Huge congratulations to @ilyasut and team... Definitely one of the biggest results in AI research this year!

We're releasing "Dota 2 with Large Scale Deep Reinforcement Learning", a scientific paper analyzing our findings from our 3-year Dota project:

One highlight - we trained a new agent, Rerun, which has a 98% win rate vs the version that beat @OGEsports.

52

581

2K

0

2

24

... of course this "control perspective" completely ignores one of the biggest questions in reinforcement learning: EXPLORATION.

If you're interested in how/why this is such a problem - come to the keynote talk "what is exploration"

Sunday 9am #ICML2018

4

3

23

Very interesting results on "train" vs "test" in simulated RL domains from @OpenAI

1

0

19

@GaryMarcus Also... think you probably know this... but company valuations are typically dominated by *future* earnings.

Agree that people are betting on big growth in the sector - maybe you should start "shorting" these companies!

You could become quite rich.

6

0

19

Go-Explore attains *by far* the best scores of #MontezumaRevenge - impressive!

However, we should be clear about what is the goal of research in #RL (and #exploration in particular):

There is plenty of room for all this research in #MachineLearning

Montezuma’s Revenge solved! 2 million points & level 159! Go-Explore is a new algorithm for hard-exploration problems. Shatters Pitfall records too 21,000 vs 0 Blog: Vid By @AdrienLE @Joost_Huizinga @joelbot3000 @kenneth0stanley & me

18

188

484

1

5

18

Amazing work from the team - very exciting!

Thrilled to announce our first major breakthrough in applying AI to a grand challenge in science.

#AlphaFold has been validated as a solution to the ‘protein folding problem’ & we hope it will have a big impact on disease understanding and drug discovery:

162

2K

8K

0

0

18

Bellmansplaining: take big deep neural networks, train supervised from human data and a huge amount of tinkering +1000x more data/compute than before, declare fundamental breakthroughs due to RL research.

0

0

18

Cool new #RL competition: learn to mine a diamond in Minecraft with 4 days of CPU training.

... but something really triggers me about calling this competition "sample-efficient"... they limit your #COMPUTE *not* your #DATA ... why not limit the number of frames??

Excited to announce our #NeurIPS2019 competition: The MineRL Competition for Sample-Efficient Reinforcement Learning! With @rsalakhu @katjahofmann @diego_pliebana @flippnflops @svlevine @OriolVinyalsML @chelseabfinn and others!

Participate here!

7

116

264

1

1

18

Just found out that #bsuite made it to the big time... @karoly_zsolnai made one of his famous "two minute papers" videos - thanks a lot!

0

0

18

What would you do with so much money?

Why not start with something small and demonstrate scaling properties: theorems, experiments.

Then, come back and ask for more money with a clear plan.

Didn't people already give you $$$ for Geometric Intelligence?

Don't you have tenure?

2

0

17

Pretty disappointed in @yaringal after I tried to work together!

But, if we're doing an #ML #showdown ... let's do points not typos:

- Dropout "posteriors" give bad decisions.

- Doesn't even pass linear sanity checks!

- Alternative?

Get it going @slashML

@yaringal Would have preferred to do this via email, but:

- lambda/d should be lambda/np in (6), thanks!

- this typo in the appendix doesn't affect *any* other statements/proof.

- "concrete" dropout does not address the issues we highlight.

- happy to add this baseline for clarification.

1

0

10

3

1

17

This did make me lol

@svlevine Favourite quote from Emo Todorov at #NeurIPS0218 on hearing that #bostondynamics has started using some reinforcement learning: “Oh good! That will slow them down.”

0

6

41

0

0

16

Excited for the final day of workshops at #ICML2018!

If you're interested in what I have to say:

9am - "What is Exploration" (Exploration)

11.30am - "Deep Exploration via Randomized Value Functions" (PGM)

4.30pm - Panel Discussion (Exploration)

Particularly if you disagree! ;D

0

1

16

Congratulations to Léon Bottou and @obousquet for the #NeurIPS2018 test of time award:

Outlining the benefits of imperfect (but fast) SGD vs batch training.

Particularly good talk from @obousquet... Would recommend watching the recording!

1

5

16

Example: I had a website I set up ~8y ago while hunting for a job with @GoogleDeepMind

It was out of date, and I'd completely forgotten the arcane css/Jekyll/ruby I had used to make it... let alone how to customize the domain.

A few minutes later: 📈

4

0

15

Awesome results from @OpenAI:

"use prediction error on a random network as a bonus for exploration."

You could even call this a follow-up on our @NIPS 2018 spotlight paper:

"Randomized Prior Functions for Deep Reinforcement Learning"

Very impressive!

1

3

15
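A small sketch of the quoted idea (prediction error on a fixed random network as an exploration bonus), assuming one-hot observations, a one-hidden-layer random tanh target network and a linear predictor trained by SGD. The class name `RNDBonus` and all sizes are hypothetical; the published method uses convolutional networks on pixel observations.

```python
import numpy as np

class RNDBonus:
    def __init__(self, obs_dim, hidden=32, out_dim=8, lr=0.1, seed=0):
        rng = np.random.default_rng(seed)
        # Fixed, randomly initialised target network (never trained).
        self.w1 = rng.normal(size=(obs_dim, hidden))
        self.w2 = rng.normal(size=(hidden, out_dim))
        # Trainable predictor: here simply linear in the observation.
        self.w_pred = np.zeros((obs_dim, out_dim))
        self.lr = lr

    def _target(self, obs):
        return np.tanh(obs @ self.w1) @ self.w2

    def bonus(self, obs):
        """Exploration bonus: mean squared error against the random target."""
        err = obs @ self.w_pred - self._target(obs)
        return float(np.mean(err ** 2))

    def update(self, obs):
        """One SGD step on 0.5 * ||prediction - target||^2 for an observed input."""
        err = obs @ self.w_pred - self._target(obs)
        self.w_pred -= self.lr * np.outer(obs, err)

n_states = 10
one_hot = np.eye(n_states)
rnd = RNDBonus(obs_dim=n_states)
for _ in range(100):
    rnd.update(one_hot[0])   # the agent keeps visiting state 0 only
visited, novel = rnd.bonus(one_hot[0]), rnd.bonus(one_hot[7])
print(visited, novel)
```

Frequently visited inputs become predictable, so their bonus collapses, while inputs the agent has never seen keep a large bonus and stay attractive to explore.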

Straight up - I'm not usually a GAN man... But that's pretty cool...

NVIDIA Research developed a #deeplearning model that turns rough doodles into photorealistic masterpieces. Like a smart paintbrush, this GANs based tool converts segmentation maps into life-like images:

#GTC19

17

374

998

0

0

14

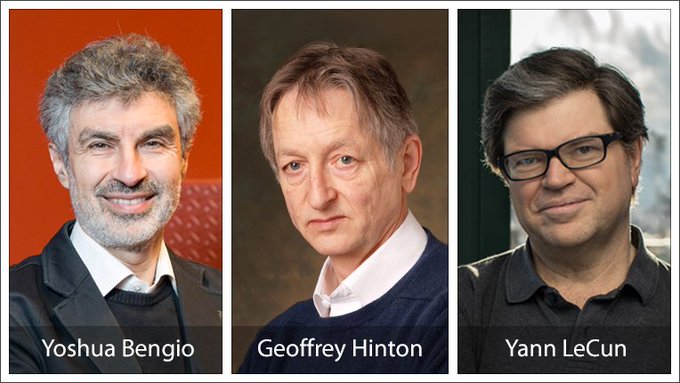

Massive congratulations to the Big 3 of Deep Learning!

... but didn't #schmidhuber win the #ACMTuringAward back in the 90s?

Yoshua Bengio, Geoffrey Hinton and Yann LeCun, the fathers of #DeepLearning, receive the 2018 #ACMTuringAward for conceptual and engineering breakthroughs that have made deep neural networks a critical component of computing today.

28

1K

3K

2

1

14

Not since the days of @iaindunning has there been such a high profile "Ian" at DeepMind! 🚀

I'm excited to announce that I've joined DeepMind! I'll be a research scientist in @OriolVinyalsML's Deep Learning team.

152

239

7K

0

0

13

I mostly agree... But my most recent experience with JMLR took well over a year for the first review!

I'm not sure it's always worth it to wait that long for a high quality gradient update Vs many more noisy SGD steps via conference.

1

0

14

ChatGPT (+ other LLMs) take actions grounded in the real world:

interacting with human users to satisfy their requests

Things really are backwards if you think that playing Goat Simulator 3 for thousands of years of simulated gameplay to finally reach 200%-relative simulated

2

0

13

I'd say that the hundreds of millions of @ChatGPTapp users are a pretty real grounding 🥴

2

0

13

Looking forward to reading this one!

What actually constitutes a good representation for reinforcement learning? Lots of sufficient conditions. But what's necessary? New paper: . Surprisingly, good value (or policy) based representations just don't cut it! w/ @SimonShaoleiDu @RuosongW @lyang36

2

32

178

0

1

13

Great to see high-quality software open-sourced from @berkeley_ai! 👏

But why do these #RL frameworks end up with so many complex Agent interfaces:

(OpenAI Baselines + Dopamine are similar)

Why not:

- agent.act(observation)

- agent.observe(transition)

New reinforcement learning library rlpyt in pytorch thanks to Adam Stooke from @berkeley_ai (and previously intern with me at @DeepMindAI). There are a whole suite of RL algorithms implemented and framework for small and medium scale distributed training.

3

114

427

2

3

13
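A sketch of how small the two-method `agent.act(observation)` / `agent.observe(transition)` interface can be, assuming integer observations and a plain `(next_obs, reward, done)` step function. `Transition`, `RandomAgent`, `run_episode` and `chain_step` are hypothetical names, not the API of rlpyt, Dopamine, OpenAI Baselines or bsuite.

```python
import random
from dataclasses import dataclass
from typing import Protocol

@dataclass
class Transition:
    observation: int
    action: int
    reward: float
    next_observation: int
    done: bool

class Agent(Protocol):
    def act(self, observation: int) -> int: ...
    def observe(self, transition: Transition) -> None: ...

class RandomAgent:
    """Trivial agent satisfying the two-method interface."""

    def __init__(self, n_actions: int, seed: int = 0):
        self.n_actions = n_actions
        self.rng = random.Random(seed)
        self.num_observed = 0

    def act(self, observation: int) -> int:
        return self.rng.randrange(self.n_actions)

    def observe(self, transition: Transition) -> None:
        self.num_observed += 1   # a learning agent would update its estimates here

def run_episode(agent: Agent, env_step, start: int, max_steps: int = 100) -> float:
    """Generic loop: the agent is only ever asked to act() and observe()."""
    obs, total = start, 0.0
    for _ in range(max_steps):
        action = agent.act(obs)
        next_obs, reward, done = env_step(obs, action)
        agent.observe(Transition(obs, action, reward, next_obs, done))
        total += reward
        obs = next_obs
        if done:
            break
    return total

def chain_step(state: int, action: int):
    # Hypothetical 5-state chain: action 1 moves right, 0 moves left; goal at 4.
    nxt = min(state + 1, 4) if action == 1 else max(state - 1, 0)
    return nxt, float(nxt == 4), nxt == 4

agent = RandomAgent(n_actions=2)
episode_return = run_episode(agent, chain_step, start=0)
print(episode_return, agent.num_observed)
```

Everything else (replay buffers, target networks, distributed actors) can live behind these two calls, which keeps the environment loop identical across agents.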

commoditize your complement

0

0

13