Haozhi Qi

@HaozhiQ

Followers

1,836

Following

665

Media

40

Statuses

211

Ph.D. Student at UC Berkeley

Berkeley, CA

Joined February 2019


💡We release Hora: a single policy capable of rotating diverse objects 🎾🥝🧊🍋🍊⌛🥑🍅🍐🍑 with a dexterous robot hand.

No cameras. No touch sensors. Hora is trained entirely in simulation and directly deployed in the real world.

see

#CoRL

@corl_conf

1/

7

68

312

If you are at

#CoRL2022

, come check out our poster at the Poster Lobby 352 (4:45 pm - 6:00 pm).

See how our multi-finger robot hand rotates an orange peel.

@corl_conf

Website:

Code:

3

13

95

Getting rich object representations from vision/touch/proprioception streams, like how we humans perceive objects in-hand.

🎺Website:

➡️Led by

@Suddhus

.

0

8

68

Had an amazing time at the robot learning workshop

#NeurIPS2023

! Thanks to the organizers (especially 🤝

@shahdhruv_

) for such a great event!

I was also thrilled to receive the outstanding demo award. Related Projects are: and .

Aficionados of robot learning: join us in Hall B2 at

#NeurIPS2023

for some cutting-edge talks, posters, a spicy debate, and live robot demos!

The robots are here, are you?

We also have some GPUs for a "Spicy Question of the Day Prize" 🌶️, don't miss out

0

6

42

0

1

53

We are organizing a workshop on touch processing

@NeurIPSConf

2023!

If you want to learn about the current status of future applications of touch processing, join us on Dec 15th at Room 214!

Don't miss our amazing lineup of speakers! For more info:

1

7

41

Our work on learning visual dynamics has been accepted to

#ICLR2021

. We obtained state-of-the-art results on multiple prediction tasks as well as the

#PHYRE

physical reasoning benchmark.

Check our latest results at

0

5

40

We’ve been thinking about this for a while: how to simulate diverse objects in a singular, abstract form? We show one example with generalization to an assortment of 🫙.

Time to give two hands to your favorite

#humanoid

! Twisting lids (off) is our first step, and more to come!

0

5

31

Check out our new bi-dex-hands.

To me, it is quite a fun experience to move away from my comfort zone of sim-to-real, and learn about how to collect data. I was very bad at playing video games (teleop), but with this, even I can collect the steak training data in about 3 hours.

1

6

28

Really enjoyed all the talks, spotlights, and panel discussion of our 1st touch processing workshop! A lot of fun and inspiring discussion! Thank you to everyone who contributed to making it a success! 🙌

We are organizing a workshop on touch processing

@NeurIPSConf

2023!

If you want to learn about the current status of future applications of touch processing, join us on Dec 15th at Room 214!

Don't miss our amazing lineup of speakers! For more info:

1

7

41

1

1

24

so cute 😀 congrats to the team!!

0

3

21

📢 RSS 2024 Dexterous Manipulation workshop.

Deadline extended to: June 14th (this Friday)! Don't miss this opportunity to share your exciting work.

Submit your contribution here:

0

7

21

Recordings available at (also on our website). Check them out for the great invited talks and spotlights.

1

4

19

Heading to New Zealand for

@corl_conf

! It has been 4 years since my last international travel!

And ... this is the first time I'm travelling with a robot!

We will present our poster on Friday, Dec 16, 4:45PM-6:00PM, with the help of a robot 🤖. Check out the summary thread:

💡We release Hora: a single policy capable of rotating diverse objects 🎾🥝🧊🍋🍊⌛🥑🍅🍐🍑 with a dexterous robot hand.

No cameras. No touch sensors. Hora is trained entirely in simulation and directly deployed in the real world.

see

#CoRL

@corl_conf

1/

7

68

312

0

0

17

Amazing!

0

2

16

Check out

@carohiguerarias

’s work on estimating object-environment contact using tactile sensing, and how it benefits downstream manipulation.

And if you want to simulate touch sensing in isaacgym, make sure you also check our code!

0

3

15

videos available now!

We are organizing a workshop on touch processing

@NeurIPSConf

2023!

If you want to learn about the current status of future applications of touch processing, join us on Dec 15th at Room 214!

Don't miss our amazing lineup of speakers! For more info:

1

7

41

0

3

15

very nice work! capturing scene geometry using (vision and) touch. Also with correspondence!

NeRF captures visual scenes in 3D👀. Can we capture their touch signals🖐️, too?

In our

#CVPR2024

paper Tactile-Augmented Radiance Fields (TaRF), we estimate both visual and tactile signals for a given 3D position within a scene.

Website:

arXiv:

9

23

116

3

1

14

📢 Announcing 1st "Workshop on Touch Processing: a new Sensing Modality for AI" at NeurIPS 2023. If you are interested in touch sensing & machine learning, don’t miss the opportunity to submit your work and participate! 📷 Call for Papers: .

#NeurIPS2023

1

5

14

Great work! Exciting time for sim2real transfer!

1

2

12

Unitree's progress is incredible! One of the hardest robot learning tasks I've seen recently.

Unitree H1 The World's First Full-size Motor Drive Humanoid Robot Flips on Ground.

Unitree H1 Deep Reinforcement Learning In-place Flipping !

#Unitree

#UnitreeRobotics

#AI

#Robotics

#Humanoidrobots

#Worldrecord

#Flips

#EmbodiedAI

#ArtificialIntelligence

#Technology

#Innovation

68

412

2K

0

0

11

Great opportunity for incoming PhD students!

0

0

12

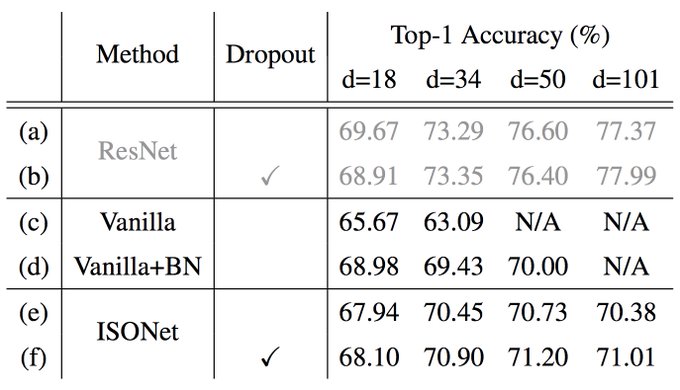

Excited to share our new work with

@xiaolonw

, Chong You, Yi Ma, and Jitendra Malik

1

3

10

When I first saw the video, I was quite impressed by the complexity of the task and the efficiency of the data collection. It definitely reshapes my opinion on robot data collection!

Check out the new paper and fully open-sourced system by

@chenwang_j

and team!

1

0

10

🚀 Deadline Extended to Oct 2nd for our touch processing workshop at NeurIPS 2023!

We encourage relevant works at all stages of maturity, ranging from initial exploratory results to polished full papers!

Also don't miss our amazing lineup of speakers!

📢 Announcing 1st "Workshop on Touch Processing: a new Sensing Modality for AI" at NeurIPS 2023. If you are interested in touch sensing & machine learning, don’t miss the opportunity to submit your work and participate! 📷 Call for Papers: .

#NeurIPS2023

1

5

14

0

1

7

Thanks to the amazing speakers: Katherine J. Kuchenbecker, Chiara Bartolozzi,

@jiajunwu_cs

, Satoshi Funabashi, Ted Adelson,

@nathanlepora

, Jeremy Fishel, and Veronica Santos; and my co-organizers

@RCalandra

,

@perla_maiolino

, Mike Lambeta, Yasemin Bekiroğlu, and

@JitendraMalikCV

.

0

0

3

Thanks a lot

@stokel

for writing this!

My latest for

@newscientist

is on this cool robotic hand which has the dexterity to handle tiny, irregular-shaped objects in a way I've never seen before

1

0

3

0

0

5

To correct my previous tweet: I received an email saying one paper I was reviewing for

#NeurIPS2022

was desk rejected by the PC. That was [2 days] before the review deadline. I guess many reviewers had finished or drafted their reviews, and thus part of our time was wasted.😀

1

1

4

Feel free to open an issue, email me, or join the Discord channel for further discussions.

arxiv:

work done with:

@ashishkr9311

,

@RCalandra

,

@YiMaTweets

, and Jitendra Malik

0

1

5

The robots are so cute! Looking forward to trying them out!

1

0

5

very interesting task! robot dogs also need to exercise 😀

0

0

5

w/

@brenthyi

,

@Suddhus

, Mike Lambeta,

@YiMaTweets

,

@RCalandra

,

@JitendraMalikCV

.

@berkeley_ai

and

@MetaAI

2

0

4

Cool sim-to-real results! The lightbulb example is quite impressive!

1

2

4

cool work on using sim data to achieve in-the-wild generalization!

1

0

4

Led by the amazing Jessica Yin, in collaboration with

@JitendraMalikCV

,

@LabPikul

, Mark Yim,

@t_hellebrekers

Thanks for the support from

@AIatMeta

,

@GRASPlab

,

@berkeley_ai

, and

@UWMadEngr

Website:

Paper:

0

0

4

@dhanushisrad

Still working on that. We decided to first extend the set of axes, then extend the object set.

0

0

3

Congrats!

@Jiayuan_Gu

0

0

3

Thank you

@QinYuzhe

! Your works are inspiring and helpful for our project! Looking forward to seeing more exciting advancement in this area.

Glad to see another sim2real work on dexterous hand!

While imitation learning is dominant nowadays for manipulation, sim2real is still powerful for complex dynamical systems.

Congrats to the authors on the great work

@ToruO_O

@zhaohengyin

@HaozhiQ

1

2

15

0

0

3

also thanks for the help from my great co-organizers

@RCalandra

,

@perla_maiolino

, Mike Lambeta,

@YsmnBekiroglu

, and

@JitendraMalikCV

.

0

0

3

Great place if you like robot hands!

Seeking robotics wizards to join our quest! 🧙‍♂️🤖

Join our cutting-edge team and shape the future of dexterous robots. We're seeking brilliant minds to push the boundaries of what's possible in robot manipulation.

Link:

#Robotics

#AI

#RobotLearning

0

22

134

0

0

3

(just joking) I was reading social psychology last year. When I read about the "Insufficient Justification Effect", I realized that's how schools decide salaries:

2

0

1

Super excited to have Katherine J. Kuchenbecker, Chiara Bartolozzi,

@jiajunwu_cs

, Satoshi Funabashi, Ted Adelson,

@nathanlepora

, Jeremy Fishel, Veronica Santos as our speakers!

1

0

2

Also congrats to Zilin Si, Kevin Lee Zhang,

@fzeyneptemel

,

@Oliver_Kroemer

for winning the best paper award (tilde) and

@kenny__shaw

@pathak2206

for winning the best presentation award (leap hand v2)!

0

0

2

cool work! very fast progress.

Introducing OmniH2O, a learning-based system for whole-body humanoid teleop and autonomy:

🦾Robust loco-mani policy

🦸Universal teleop interface: VR, verbal, RGB

🧠Autonomy via

@chatgpt4o

or imitation

🔗Release the first whole-body humanoid dataset

19

68

385

1

0

2

@whoisvaibhav

The human hand is so great. Hardware is not so good. But we’ll ping you when we get closer.

1

0

2

Also thanks to my amazing co-organizers:

@taochenshh

@QinYuzhe

@dngxngxng3

@contactrika

@asmorgan24

@xiaolonw

@pulkitology

0

0

1

@FahadAlkhater9

@ashishkr9311

@UCBerkeley

@Tesla

@Teslasbot

Thank you! We also have a summary post here in case you are interested

💡We release Hora: a single policy capable of rotating diverse objects 🎾🥝🧊🍋🍊⌛🥑🍅🍐🍑 with a dexterous robot hand.

No cameras. No touch sensors. Hora is trained entirely in simulation and directly deployed in the real world.

see

#CoRL

@corl_conf

1/

7

68

312

1

0

1