Yuzhe Qin

@QinYuzhe

Followers

2,785

Following

594

Media

23

Statuses

418

Robot Learning @ UC San Diego; prev: @NVIDIA Robotics @GoogleX

San Diego, CA

Joined December 2020

Through my years of PhD research and working with undergrad and master's students, I've realized that finding the sweet spot between guidance and freedom when advising others is a real challenge. But

@xiaolonw

has made it throughout my PhD journey. Over the past four years, his

17

2

131

Thanks

@_akhaliq

for posting our work!

Teleoperation demo in simulation is more powerful than we thought when considering the powerful data augmentation capability of physical simulators.

0

12

74

Many people are impressed by the bravery of the model (that's me) for allowing a robot to touch my face. However, it's not as risky as it might seem. Here are the takeaways of how common robot manipulation systems enhance safety:

1. Hardware Level Protection: Most robots

4

9

68

🚀 Upgrade your

#IsaacGym

coding experience with a simple command:

pip install isaacgym-stubs --upgrade

Compared with the previous stub, we now have:

✨ Improved code completion features

📚 Full docstrings for each API function

💻 Detailed function docs right in your editor

4

6

64

Robot Synesthesia is accepted in

#ICRA2024

! Congrats to all the authors, especially

@Ying_yyyyyyyy

for leading the effort.

0

12

53

Will the future AI be artists, shaping aesthetic visuals and rhythmic poetry like SORA and GPT? I'd argue no.

🎨Art should remain a human expression. Let AI do the housework instead, so we can savor more moments with our beloved Peppa Pig cartoon🖼️

1

6

45

Choosing a teleoperation pipeline: Vision-based or exoskeletons?

😇The answer: Combine both!

Vision-based methods excel for dexterous hand control, while exoskeletons offer precise arm tracking. Together, they create a more comprehensive solution.

1

8

43

Just dropped a paper on Dynamic Handover - our latest sim2real work on teaching robots to throw and catch.

Fun fact: No need for XArm's impedance control to nail this task - just some good old trajectory prediction by the catcher🦾

Project Page:

0

6

42

I'm in Auckland and presenting our work on PointCloud RL for dexterous hand! If you're attending

@CoRL2022

and are interested in visual RL, point clouds, or dexterous hands, feel free to stop by our poster in OGG room 040, 4-5pm!

1

6

39

For the learning-from-human-demonstration setting, we try to answer two questions: 1. How can the data collection interface be customized to be more convenient for humans? 2. How can demonstrations be transferred to different embodiments so that they generalize better across robots?

1

2

37

Finished my first full marathon in the US

@theSFmarathon

Although it was hard to match the pace of the marathons I ran as an undergraduate, I am glad that I can still cover the 26.2 miles after several years.

1

0

37

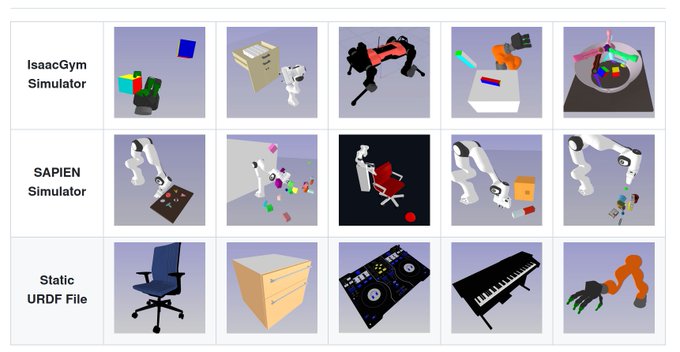

New to robotic simulation? Dive into our beginner tutorial on a range of simulators perfect for starters:

It covers 8 distinct simulation platforms, complete with overviews to kickstart your journey for simulated robot

(1/3)

🚀Excited to announce Simulately🤖, a go-to toolkit for robotics researchers navigating diverse simulators!

💻 Github:

🔗 Website:

Let’s level up our robotics research with Simulately!

#Robotics

#Simulators

#ResearchTool

3

12

31

0

1

32

Excited to share our latest work on in-hand manipulation 🔥🔥

How to achieve generalizable in-hand manipulation without perception? The work led by

@zhaohengyin

and

@binghao_huang

provides an answer: touch sensing!

Project website:

0

2

31

TechXplore highlights AnyTeleop, a project I had the pleasure of contributing to during my previous internship at NVIDIA. Exciting to see the teleoperation initiative gaining recognition!

📡 🤖 Recent advances in

#robotics

and

#AI

have opened exciting new avenues for teleoperation, the remote control of robots to complete tasks in a distant location via

@techxplore_com

.

0

7

33

0

3

32

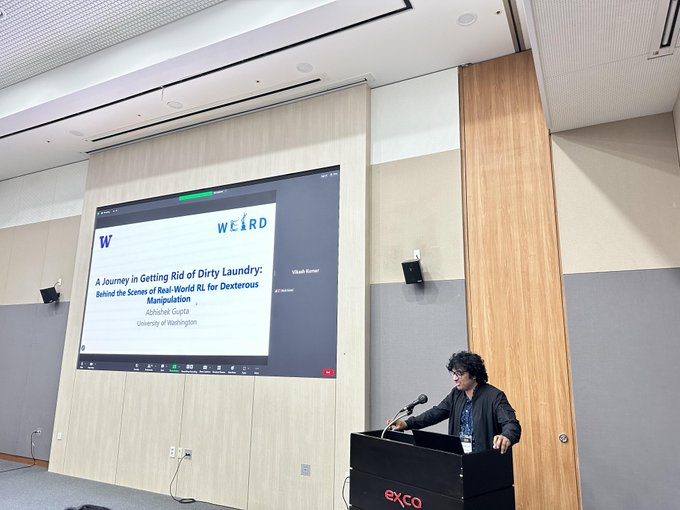

Exciting news! Our workshop on Learning Dexterous Manipulation is now available on YouTube. Don't miss this chance to watch it at your convenience!

Our Learning Dexterous Manipulation workshop at RSS was a hit!

Thank you to the speakers

@pulkitology

@Vikashplus

@t_hellebrekers

@abhishekunique7

Carmelo,

@haoshu_fang

, and the organizers, especially in-person ones

@QinYuzhe

@LerrelPinto

@notmahi

@ericyi0124

3

5

55

1

11

32

🚀Simulator speed is just as crucial as dataset scale.

Check out the ManiSkill 3 beta (powered by SAPIEN 3) for faster robot learning advancements.

Batched simulation + batched rendering.

Arm + Hand + Dog + Humanoid + Mobile Platform

Code:

1

4

31

Learning information-seeking behavior through RL is always hard. However, our DexTouch model can learn to find objects using touch only, no visual cues—especially useful in the dark 🕶️

Project page:

0

3

27

Fantastic humanoid control from lab mates!

Sim2Real is useful when dealing with complex motions. Congrats to the authors!

0

3

24

It is 2023 and we are still working on hand-eye calibration🤖

Despite well-established theory, the engineering challenges of hand-eye calibration, such as occluded markers, still trouble robotics researchers. Check out this work from

@LinghaoChen97

to save your time👾

0

4

23

Work on tactile sensors, hand-object perception algorithms, and groundbreaking robot learning methods is also welcome at our RSS 2023 workshop! 🤖✋

🔗

0

4

21

Exciting new work from Nicklas

@ncklashansen

that extends model-based RL to hierarchical settings. We can expect more powerful capabilities from the model-based approach.

🥳Excited to share: Hierarchical World Models as Visual Whole-Body Humanoid Controllers

Joint work with

@jyothir_s_v

@vlad_is_ai

@ylecun

@xiaolonw

@haosu_twitr

Our method, Puppeteer, learns high-dim humanoid policies that look natural, in an entirely data-driven way!

🧵👇(1/n)

13

66

381

0

6

22

A brilliant use of a dexterous robot hand, skillfully merging its pick-and-place capabilities with in-hand re-orientation. A feat simply unachievable for a parallel gripper.

1

3

21

How can a dexterous robot learn from human videos? Here we propose a novel pipeline to facilitate multi-finger imitation learning. This is 1 year of my PhD work jointly with

@yh_kris

@stevenpg8

@hanwenjiang1

@RchalYang

@yangfu0817

@xiaolonw

Project page:

0

3

21

Truly amazed by how joint space control can be executed with such ease. It's a clever use of kinematics equivalence for joint space allocation.

When operating a Gundam, we do not need to be as tall as the Gundam; we just mirror its joints.

1

1

20

The leading author of this project, Jun Wang

@Junwang_048

is applying for a Ph.D.

In a remarkably short period, Jun has become proficient in robot simulation and the complex Allegro hand hardware, positioning himself as a formidable contender in the field of robot learning!

0

3

19

Dexterity comes from simplicity. Glad to see so many wonderful data collection ideas at the beginning of 2024. Congrats on the great work

@chenwang_j

1

1

16

🤲Join us for the 2nd Dexterous Manipulation Workshop at

@RoboticsSciSys

2024!

We welcome submissions on all topics related to dexterous manipulation, including tactile sensing. Don't miss the deadline on June 10, 2024.

0

4

17

🎹Exciting dexterous piano playing and an even more exciting interactive demo from

@kevin_zakka

2

1

15

Glad to see another sim2real work on dexterous hand!

While imitation learning is dominant for manipulation nowadays, sim2real is still powerful for complex dynamical systems.

Congrats to the authors on the great work

@ToruO_O

@zhaohengyin

@HaozhiQ

1

2

15

Navigating robotics benchmarking can be frustrating when you have access to open-source datasets but lack the right hardware to utilize them effectively.

Leveraging simulations for reproducible evaluations is a valuable strategy worth exploring!

0

1

15

A touch sensor is a natural companion for a multi-finger hand. It is exciting to see another fantastic project on Dex Hand + tactile sensor🤖

0

1

16

@xiaolonw

@yh_kris

@xiaolonw

, your mentorship has been a guiding light throughout my PhD. Your advice has continuously shaped my "value function", not only for research but also for my attitude toward life. This value function will remain a vital part of my decision-making process, even after

0

0

15

Work done w/

@Ying_yyyyyyyy

, Haichuan Che,

@binghao_huang

,

@zhaohengyin

, Kang-Won Lee, Yi Wu, Soo-Chul Lim,

@xiaolonw

Check out our work here

Project: Paper:

(7/7)

3

3

15

Leveraging visual prompts with keypoint annotations offers an intuitive method. Keypoint-centric techniques enjoyed popularity in the years preceding the advent of imitation and reinforcement learning.

Today, VLM breathes new life into this approach with fresh possibilities.

0

3

12

The workshop is happening now! Join us in room 323 if you are attending RSS2023 in Daegu.

The first workshop on Learning Dexterous Manipulation at

@RoboticsSciSys

is starting now! Check out our speaker lineup at or tune in via zoom at if you are not in person.

0

6

20

0

4

12

Great athletic robot from

@ZhongyuLi4

! Maybe next time we can have a robot coach🏃♀️🏃♂️🏃

0

1

10

Generalizable 3D representations help the robot understand its surroundings, which is crucial for interaction. Great to see 3D helping robotics again!

0

2

11

Vision-based teleoperation for whole-body control of humanoid robot!

Introducing OmniH2O, a learning-based system for whole-body humanoid teleop and autonomy:

🦾Robust loco-mani policy

🦸Universal teleop interface: VR, verbal, RGB

🧠Autonomy via

@chatgpt4o

or imitation

🔗Release the first whole-body humanoid dataset

19

68

388

0

1

11

The dexterous manipulation frontier awaits at

#CoRL2024

Munich.

We are hosting another dexterous manipulation workshop at CoRL. Join us in pushing the boundaries of robotic dexterity. 🖐️💡

0

0

10

Huge thanks to all the speakers for the excellent presentation!

0

0

10

📢 RSS 2024 Dexterous Manipulation workshop: Paper submission deadline extended to June 14th (this Friday)!

We welcome all workshop paper submissions, even if already submitted to a conference like CoRL. 🤖🔬

Demo-only submissions are also welcome!

Learn more:

0

1

10

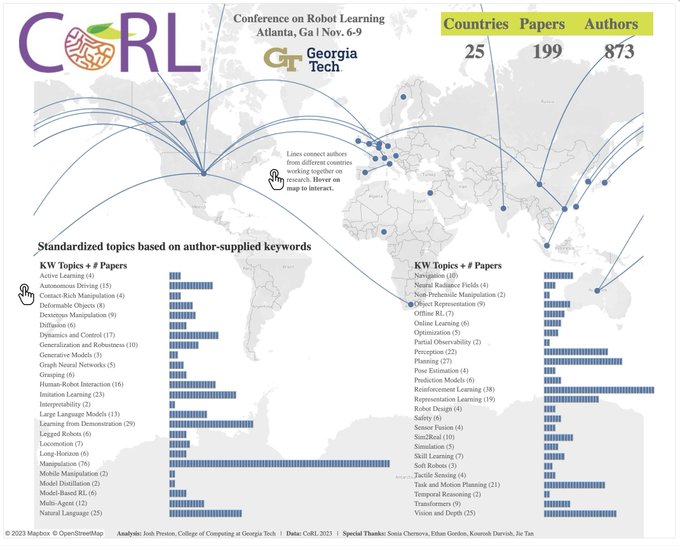

Manipulation continues to be the most trending topic at CoRL

0

1

6

Super exciting work from

@chenwang_j

combining high-level video demos and low-level teleoperation demos.

0

0

6

Very clear explanations for the relationship between trajectory optimization and RL. “Cached Optimization” can be more powerful.

0

0

6

📣 Great news! The deadline for submitting to the Learning Dexterous Manipulation workshop has been extended to June 16th.

We're excited to see your incredible work! 🤖✍️

🔗

0

2

6

@zc_alexfan

ARCTIC is really great; it also opens up opportunities for robot manipulation research!

Awesome work!

1

0

5

Want to use a simulator for embodied AI research but find it hard to start? Our tutorial offers practical guidelines (with) for building your simulated environment. It is happening now @ CVPR2022.

Ever thought of applying your vision algorithm to embodied tasks but cannot find one? Why not make one yourself?

Our

#CVPR2022

tutorial is starting Monday at 13:00 CT!

We'll show you, hands-on, how to build Embodied AI environments from scratch!

1

11

80

0

0

5

@haosu_twitr

@Jiayuan_Gu

@xiaolonw

I am grateful for your congratulations and support throughout my academic journey, from my time as a master's student in your lab in 2018 to the completion of my PhD. Your mentorship has been instrumental in shaping my research skills and helping me grow as a scholar. Thank you

0

0

5

Really enjoyable conference experience

@corl_conf

in New Zealand. Fantastic view and most importantly, wonderful food😛

0

0

5

@LerrelPinto

Thanks, Lerrel! We've gained so much from Open Teach too!

Technically, VisionPro's 8 embedded cameras enable broader workspace hand tracking, allowing for larger operator motions. Meanwhile, Quest offers affordability and a robust open-source community!

0

0

3

@kevin_zakka

Congrats! It looks like the mechanical structure of the gripper works pretty well in this demo! I am curious whether it can stay parallel when interacting with objects. Will the equality constraint break under a large force between the gripper and the object?

1

0

4

@dhanushisrad

Cool system! Just wondering why the human hand is facing upward while the robot hand is facing downward. Is it related to the sensor position, i.e., you need to capture the hand pose from the VR headset?

1

0

4

@HarryXu12

Thanks, Huazhe. The person who writes the controller code should be the first to test it 😉

1

0

4

@TairanHe99

@chatgpt4o

Cool work, Tairan! Excited to see more awesome work from the Human2Humanoid family😃

1

0

3

@YunfanJiang

Really nice sim2real performance! I am curious how you simulate the soft TPU-printed gripper in the simulator.

1

0

3

@kai_junge

Fantastic job! I'm curious, is the game controller in your left hand used for overarching control functions of the robot, such as an emergency stop or other high-level commands?

1

0

2

@binghao_huang

@yh_kris

Thank you Binghao, the best dexterous hand buddy. Working closely with you to tackle the challenges of the Allegro hand has been an incredible learning experience for me. Your knowledge and dedication have been truly inspiring. Wish you remarkable success in your research, and I

1

0

3

A special robotics dataset from

@litian_liang

, pushing the understanding of multi-modal manipulation.

0

0

3

A shoutout to the amazing team for the collaboration on this work!

@Junwang_048

@KuangKaiming

@yigitkkorkmaz

@ Akhilan Gurumoorthy

@haosu_twitr

@xiaolonw

Project page:

Paper:

1

0

3

@kevin_zakka

Maybe one of the most important reasons to use type hints in Python is better code completion.

1

0

2
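A minimal sketch of the type-hint point above. The `JointState` class and `first_joint_name` function are hypothetical names made up for illustration, not taken from any library. With the annotation on `states`, an editor can usually infer the element type and suggest `.name` and `.position`.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class JointState:
    """Hypothetical container, used only for illustration."""

    name: str
    position: float


def first_joint_name(states: list[JointState]) -> str:
    # Because `states` is annotated, a type-aware editor knows that
    # states[0] is a JointState and can offer `.name` and `.position`
    # as completions; `.name` is a str, so `.upper()` is suggested too.
    return states[0].name.upper()


if __name__ == "__main__":
    print(first_joint_name([JointState("elbow", 0.5)]))
```

Without the annotations the function behaves the same at runtime; only the editor and static checkers lose the information they need for completion.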

@Vikashplus

@MyoSuite

Great presentation! Learned a lot from the innovative perspective between dexterity and physiology evidence.

1

0

2