Vikash Kumar

@Vikashplus

Followers: 4K · Following: 3K · Media: 253 · Statuses: 1K

Studying intelligent embodied behaviors. Adj. Prof. @CMU_Robotics | Sr. research scientist @OpenAI @GoogleAI @AIatMeta | @berkeley_ai @UWcse #MuJoCo

New York City

Joined February 2016

✈️ On the way to @corl_conf for my first-ever keynote: ℝ𝕠𝕓𝕠𝕥𝕚𝕔𝕤 – 𝕥𝕙𝕖 𝕗𝕦𝕥𝕦𝕣𝕖 𝕚𝕤 𝕒𝕟𝕪𝕥𝕙𝕚𝕟𝕘 𝕓𝕦𝕥 𝕠𝕡𝕥𝕚𝕞𝕒𝕝! A bit excited ✨ & a bit nervous 🤪 (+2 more talks, details 👇)

#𝗥𝗼𝗯𝗼𝗔𝗴𝗲𝗻𝘁 -- A universal multi-task agent on a data budget. 💪 with 12 non-trivial skills 💪 can generalize them across 38 tasks 💪 & 100s of novel scenarios! 🌐 w/ @mangahomanga @jdvakil @m0hitsharma, Abhinav Gupta, @shubhtuls

#MuJoCo 3.1.4 drops 💧 Lots of new features:
- Gravity compensation
- Non-linear least squares (à la SysID ✅)
- MJX features (yup, still no tendons 🫤)
- Many more

📢 Announcing a breakthrough in Science Robotics @SciRobotics: 𝙑𝙞𝙨𝙪𝙖𝙡 𝘿𝙚𝙭𝙩𝙚𝙧𝙞𝙩𝙮. 🏳️🌈 any object, 🌈 any rotation, 📉 a low-cost hand (D'Claw), 📷 a single camera. A single policy capable of in-hand reorientation of novel & complex objects (thread 👇)

Just wait until you realize that this is full-blown physics with contacts, not just a visual rendering! 💪 #MuJoCo 3.0 is packed with features: 👉 XLA: accelerated physics with JAX 👉 Non-convex collisions 👉 Deformable bodies. Give it a try -

Human dexterity is the epitome of motor control. My PhD goal was to study this grand challenge. It amazes me to date that we found the first behavioral prior for human dexterity, #MyoDex. It's a step change 🪜 in our understanding of, & ability to synthesize, physiological motor control. #proud

Excited to present MyoDex at @icmlconf today (poster #200 at 10:30AM). We propose: 🧪 Open-sourced MyoSuite benchmark w/ 50+ contact-rich manipulation tasks 🧠 The MyoDex prior for quickly learning novel tasks w/ RL 🦾 All validated on a biologically accurate musculoskeletal arm

What most fail to realize:
- We can solve problems that are an order of magnitude more complex than the ones found in robotics.
- Most #RL algorithms don’t directly scale to higher-dim search spaces.
- Contact discontinuities, once considered evil, aren’t daunting anymore.
1/N 🧵

How does the brain control the numerous muscles of the body? Let's say you want to rotate two balls in your hand: how does your brain achieve that? Read our article in @NeuroCellPress to learn more!

New paper: RelayPL. Takeaway: a goal-conditioned hierarchical policy, when infused with unstructured play data and a relabelling trick, can solve REALLY LONG-horizon tasks! Website: With A. Gupta, @coreylynch, @svlevine, @hausman_k

It has been a long roller-coaster journey that started in 2008 when I was an intern under Emo Todorov. 12 years on, it's now in the hands of the open-source community. Excited for the next phase. Big congratulations to team MuJoCo and @DeepMind.

We’ve acquired the MuJoCo physics simulator and are making it free for all, to support research everywhere. MuJoCo is a fast, powerful, easy-to-use, and soon to be open-source simulation tool, designed for robotics research:

ATLAS is such a show-off 😎. Had a phenomenal visit to @BostonDynamics discussing "Foundation Models for Low Level Motor Control". (Recording coming soon). Thanks @KuindersmaScott for the invite #bigfan. He is onto something cool, keep your eyes peeled and fingers crossed.

📢 Major update to #RoboHive v0.7 🔥 "Unified Robot Learning Framework". Seamlessly work across #Gym & #Gymnasium with multiple teleop interfaces (sim & hardware) on the latest #MuJoCo. 🌐 🏗️ pip install robohive

Learned controller (white) vs classical controller (grey) compete head-to-head @ #corl2022. PS: both are being teleoperated

Woohoo, #R3M won the best paper award at the #ICRA Scaling Robot Learning workshop. @SurajNair_1, @aravindr93, @chelseabfinn, Abhinav Gupta

Can we solve robotics using off-domain, non-robotics datasets? I'm bullish on ➡️ LLMs for high-level plans 🔄 human videos for low-level robot skills. 📢 𝑯𝑶𝑷𝑴𝑨𝑵 -- zero-shot manipulation in the wild from human videos! With @mangahomanga, Abhinav Gupta, @shubhtuls 🧵👇

We are building something unimaginable 🧐 Hiring #ML & 3D #vision experts and tech artists at all levels. Would love to help out. DMs open.

Between the Apple SPG shutdown, Cruise layoffs, and the shakeup at Google Robotics, there are >1,000 top-notch AI & robotics engineers on the market in the Bay Area 🙌. The Bay Area continues to be the top place imo for robotics; 5 yrs ago I moved here from NYC for this very reason.

Why does Reinforcement Learning (RL) struggle with high-dim control problems? ➡️ Exploration. Announcing the biggest speed-up in RL algos in recent history: 𝙎𝙮𝙣𝙚𝙧𝙜𝙞𝙨𝙩𝙞𝙘 𝘼𝙘𝙩𝙞𝙤𝙣 𝙋𝙖𝙩𝙝𝙬𝙖𝙮𝙨! ✅ compatible with all RL libs 😀 fully automatic ❌ no demos required.

(1/8) In July, we presented SAR at #RSS2023. We show that SAR enables SOTA high-dim control by applying the neuroscience of muscle synergies to reinforcement learning! Check out our thread on SAR below 👇 Site (code, vids): Paper:

The uniqueness of human intellect lies at the juncture of (1) cognitive decision-making & (2) the musculoskeletal motor control to express those decisions. Introducing #MyoSuite -- a framework to investigate these two facets of intelligence.

Often overlooked in AI, morphological representations are one of the strongest forms of prior behind ALL intelligent beings:
- acquired over generations
- have no replacement
- more fundamental + critical than acquired representations (world models, concepts, etc.)

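A bird-bot that achieves energy-efficient walking with minimal control, thanks to an ostrich-inspired leg clutch. #BirdBot #robot #robotics #Biorobotics #BioRob #biomimicry #バイオミミクリー (biomimicry) #生物模倣 (biomimetics) #ダチョウロボット (ostrich robot)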

Presenting the newest member of the #ROBEL family: ⭐️D'Manus⭐️, a learning platform for tactile-aware manipulation. 📉 Low cost ($3,500) 👌 10-DoF prehensile hand 👋 Surface tactile sensing (ReSkin) 💪 Robust (>10k operational hours) ✅ Open-source. 1/n

Reproducibility in robotics is desirable but extremely challenging, especially with hardware results. Our effort in making #ALOHA reproducible is paying off: multiple folks have been able to reproduce it and extend it to new systems. A thread 👇

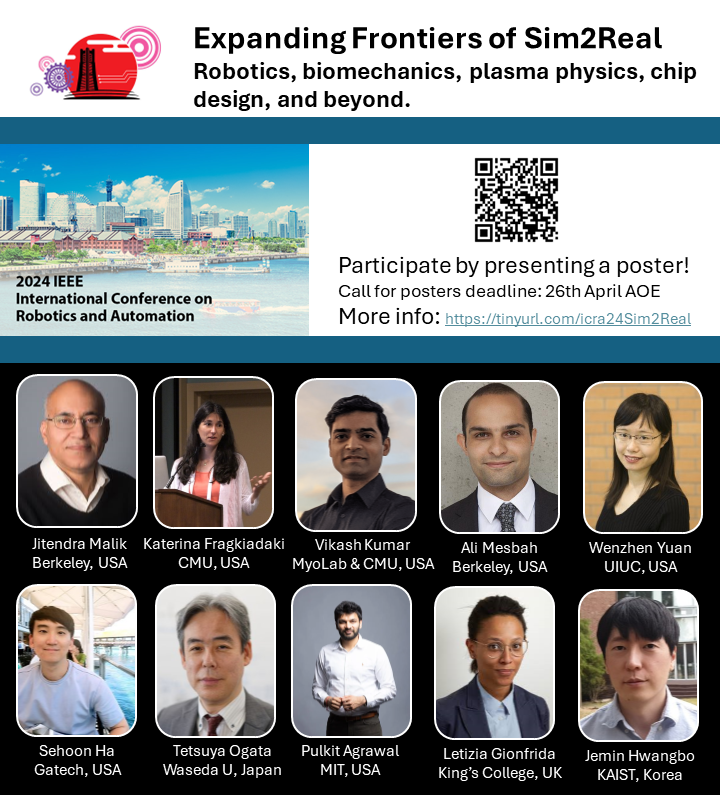

🌈𝙀𝙭𝙥𝙖𝙣𝙙𝙞𝙣𝙜 𝙁𝙧𝙤𝙣𝙩𝙞𝙚𝙧𝙨 𝙤𝙛 #𝙎𝙞𝙢2𝙍𝙚𝙖𝙡🌈 📢 An #ICRA workshop deliberating on a transformative paradigm that has pushed many frontiers. 🔎 Will it keep scaling/delivering? 🔎 Open/missed opportunities? 📩 Accepting submissions. See you at @ieee_ras_icra

What’s truly remarkable about @UnitreeRobotics and @BostonDynamics is that they let their results do most of the talking! It almost seems like Unitree has caught up (in technology) with Boston Dynamics despite a two-decade age gap. Does anyone know their business numbers?

Daily Training of Robots Driven by RL. Segments of daily training for robots driven by reinforcement learning. Multiple tests are done in advance so the robots can provide friendly service to humans 😊. The training includes some extreme tests; please do not imitate. #AI #Unitree #AGI #EmbodiedIntelligence

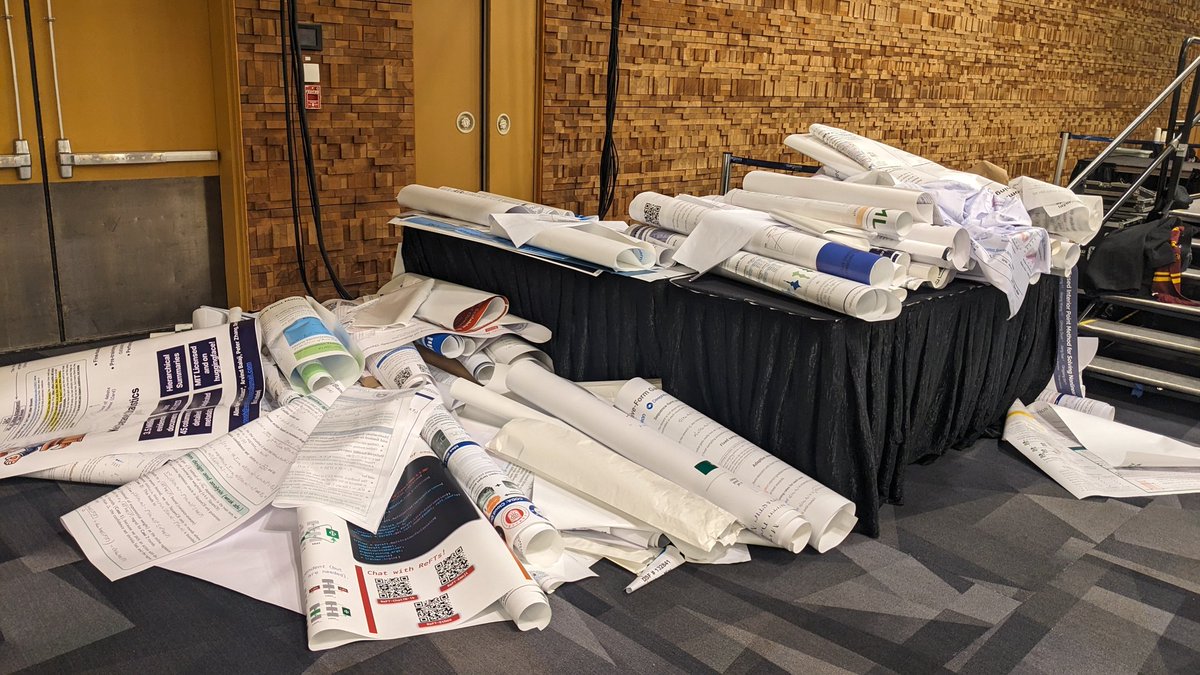

Both cost roughly the same, but one is far more reusable and could have saved >$500,000 in waste at @NeurIPSConf. 💡 Idea: buy TVs to present posters, then donate them to local communities after the conference.

It costs $89-$199 to print a poster. Estimated $260,000-$597,000 for ~3k posters (main conference). $0.5M goes into the trash bin after just 3 hours 😭. I feel sad; is there a more sustainable way to reduce this waste?? 🥹 #NeurIPS2024 #NeurIPS
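
The quoted range is just the per-poster price times the number of posters. As a quick check, assuming exactly 3,000 posters (the tweet says ~3k) at the quoted $89-$199 each:

```latex
3000 \times \$89 = \$267{,}000, \qquad 3000 \times \$199 = \$597{,}000
```

The upper end matches the quoted $597,000, while the lower end works out to about $267,000 rather than $260,000; the ~$0.5M headline sits inside this range.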

The official RL library of #PyTorch is finally out 📢📣 Thrilled to have played a small role. Take it for a whirl 🌪️ Looking forward to your valuable feedback.

TorchRL has its paper! With Albert Bou, @MatteoBettini98, Sebastian Dittert, @Vikashplus, @shagunsodhani, Xiaomeng Yang, @gdefabritiis and @VincentMoens

📢 Announcing: #MyoChallenge @ #NeurIPS2022. 🎯 Goal: Physiological Dexterity. 💡 Task: Develop controllers for a physiologically realistic musculoskeletal #MyoHand to solve complex dexterous manipulation tasks: 🎲🔃 die reorientation and ⚪🔄 simultaneous rotation of two Baoding balls. 1/3

When discussions extend well beyond research & you learn more than you teach. @rlfromlux wraps up his visit in style w/ phenomenal results (coming 🔜) & by beating me in the farewell match (or did he? 🤣). 🚨🆕 We're #HIRING postdocs, interns, and full-time staff (details 👇)

🎉 Quite pleased to share our #GenerativeAI efforts in robotics: #𝗚𝗲𝗻𝗔𝘂𝗴 has been nominated for the 𝗕𝗲𝘀𝘁 𝗦𝘆𝘀𝘁𝗲𝗺 𝗣𝗮𝗽𝗲𝗿 𝗔𝘄𝗮𝗿𝗱 🏅 of #RSS2023! w/ @ZoeyC17 @shokiami @abhishekunique7 at @uwcse, @MetaAI.

The lack of scale & diversity in robot datasets demands a shift towards scalable alternatives: LLMs, Sim2Real, etc. GenAug presents a powerful recipe for using text2image generative models to demonstrate widespread generalization of robot behaviors to novel scenarios. 🧵(1/N)

How robot videos should be: uncut and at 1x. Kudos to the @1x_tech team for this amazing feat.

While others are moving to Imitation Learning, the #OG of model-based control, @BostonDynamics, deploys new SPOT capabilities with RL! What's exciting? Ideological camps in robotics are breaking down. The conversation is about mixing, not choosing between, different approaches. (1/2)

Rethinking dexterous manipulation from first principles: ✅ an algorithm (MTRF) that can learn behaviors autonomously without *any* human intervention ✅ a paradigm that can learn multiple tasks simultaneously ✅ a new D'Hand that is robust through ~2000 hours of on-hardware training!!

After over a year of development, we're finally releasing our work on real-world dexterous manipulation: MTRF. MTRF learns complex dexterous manipulation skills *directly in the real world* via continuous and fully autonomous trial-and-error learning. Thread below ->

The early years are all about building representations (vision, motor, world, morphology) strong enough to simplify decision-making and execution. My 2023 realization: representation > control.

Lots of roboticists talk about how inspiring watching babies learn is. Total nonsense, worst manipulation learning algorithm I've ever seen. 2 months of basically constant supervision and no applications to real-world tasks at all. It's like grad school all over again.

🏆 Nominated for Best Manipulation Paper at @ieee_ras_icra. #HopMan outlines a new paradigm towards a generalizable universal agent ➡️ No RL, no imitation, only zero-shot translation of Human Interaction Plans 😯
⏲️ Today:
- Talk: WeAA1-CC.2 <10:30-12:00>
- Poster: <13:30-15:00>

Excited to share our latest on generalizable zero-shot manipulation in the real world!. We can train a single goal-conditioned policy that scales to over 100 diverse tasks in unseen scenarios, including real kitchens and offices. w/ @Vikashplus Abhinav Gupta @shubhtuls . 1/n

📢 #𝗠𝘆𝗼𝗦𝘂𝗶𝘁𝗲 𝟮.𝟬: towards generalizable agents ✍️🤾♀️🤳🏃♀️ If generalization were easy, it would be stupid for evolution to discover its solution in complex neural & morphological architectures! #MyoSuite 2.0 is packed with evolutionary secrets! 💰✨ (1/N) 🧵

@svlevine @JitendraMalikCV My favorite from @JitendraMalikCV at the keynote panel: “I strongly pushed for robot learning benchmarks initially. I thought the field was wrong for not having one. I’ve done some robotics now. Unlike Vision benchmark, robotics ones are not obvious, or easy. I accept I was wrong”

Three projects at #ICML2023 this week w/ amazing students & colleagues 🙏 1⃣ 𝗠𝘆𝗼𝗗𝗲𝘅 ➡️ @CaggianoVitt @SudeepDasari 2⃣ 𝗟𝗜𝗩 ➡️ @JasonMa2020 @yayitsamyzhang @obastani @dineshja 3⃣ 𝗩𝗶𝘀𝘂𝗮𝗹 𝗗𝗲𝘅𝘁𝗲𝗿𝗶𝘁𝘆 ➡️ @taochenshh M.Tippur, S.Wu, E.Adelson @pulkitology. Details 🧵👇

Embodied beings 🏃 discussing embodied intelligence 🦾🤖 @ieee_ras_icra. Topics:
- 3 fingers on an 🐘 elephant? 🤯
- @Vikashplus on an 🐘 in 2013 🤫
- Humanoid: future & timelines
- Legs, Hands: mistake/opportunity?
@pulkitology @mukadammh @shaneguML @CaggianoVitt @yuvaltassa @erikfrey

#ImitationLearning is progressing fast. Will it overshadow #Sim2Real's success? ⛈️ Let's debate 🗣️ We are creating a safe platform at @ieee_ras_icra w/ @MyoSuite. 📩 Submissions open

🌟 It was an honor to be recognized with the **Early Career Keynote** at @corl_conf 🚀 Inspiring to share the stage with the best minds in #Robotics, especially now when all eyes (academic, startup, & VC) are on the #EmbodiedAI frontier. 🧵 on how I prepared and what I shared 👇

Officially released! #ROBEL is a bet to stimulate & facilitate real-world progress in robotics. It has benefitted a wide diversity of projects (from dexterous manipulation to agile locomotion) & a breadth of researchers (from novices to theoreticians) at @UCBerkeley and @GoogleAI.

Check out ROBEL, an open-source platform for cost-effective robots and curated benchmarks designed to facilitate research and development on real-world hardware. Learn more below ↓

⚡️ALERT: Postdoc opportunities available at @MetaAI. 📢 We are especially looking for robot learning candidates. 🤖🤖🤖🤖🤖🤖🤖🤖🤖🤖🤖🤖🤖🤖🤖🤖🤖🤖🤖 Send me a ping if you're curious and wanna know more.

Congratulations to the #CoRL2022 best paper winners! @hausman_k, @pathak2206, @dineshjayaraman and collaborators

🔍 In search of something interesting? Stay tuned. A bunch of interesting releases are lined up on @MyoSuite!

Last week, we presented our *single-task* multi-scene agent. Today we are announcing #CACTI, a scalable *multi-task* multi-scene framework that delivers agents capable of visuomotor skill generalization to 100s of scenes ➡️ 1/N🧵

Reproducibility in robotics research is necessary but has proven challenging, especially with hardware results. We put a lot of effort into packaging our research on #ALOHA for reproducibility, and it is spreading! First signs are coming in. A few more are in the works.

Away from the limelight of the new electric ATLAS, @BostonDynamics also unveiled impressive dynamic manipulation abilities. Given the progress in high-level semantic reasoning via VLMs, task-specific robust low-level (MPC) planners are the key missing ingredient! BD? 👀

The gap between quadruped and biped is narrowing fast. To the best of my knowledge, CHIMP from @CMU_Robotics was the first to attempt this idea, during the #DRC. Any others?

Wait?! 😮 A Cyber "Monkey" Dog?! Lite3 robot dog stands bipedally. #DEEPRobotics AI+ algorithm boosts Lite3 learning more than you can imagine. #robotics #robot #robotdog #ai #quadrupedrobot #tech

#RoboAgent is -
⚡️ 6x better than
🤯 needs 18x less data!
Appearing today in the Robot-Learning workshop @NeurIPSConf (Hall B). w/ @mangahomanga @m0hitsharma @jdvakil Abhinav Gupta, @shubhtuls.

#Robotics is progressing through a transformative self-discovery 🔁 toward the next era. I have been a proponent of both 𝑖𝑛-𝑑𝑜𝑚𝑎𝑖𝑛 𝑅𝐿 & 𝑙𝑒𝑎𝑟𝑛𝑖𝑛𝑔 𝑓𝑟𝑜𝑚 𝒉𝑢𝑚𝑎𝑛s. At @TUDarmstadt, I'll argue whether we should learn from #humans at all 🤨 Details:

A perfect example of why robotics is a quest at the intersection of hardware and software.

LocoMan = Quadrupedal Robot + 2 * Loco-Manipulator. Powered by dual lightweight 3-DoF Loco-Manipulators and the Whole-Body Controller, LocoMan achieves various challenging tasks, such as manipulation in narrow spaces and bimanual manipulation. 👇👇👇

Happening today at 2:00 @corl_conf.

The real world is the litmus test for robotics. One can't assume information or cheat. Let's bring robots out of the labs and simulations into the real world. Shout-out to @chris_j_paxton and team, who have been working passionately for over a year to make it happen!

The future of robot butlers starts with mobile manipulation. We’re announcing the NeurIPS 2023 Open-Vocabulary Mobile Manipulation Challenge!
- Full robot stack ✅
- Parallel sim and real evaluation ✅
- No robot required ✅👀

An approachable blog post on our #ALOHA and #ACT efforts. A few follow-up works from us that might also be of interest:
- MTACT -
- MobileAloha -

✍️ Are you curious about Deep Learning and robotics? The Action Chunking Transformer by @tonyzzhao et al. is a fascinating and critically important piece of research! As a weekend fun project, I wrote a blog post to help others and me understand it:

An independent study identifies #MuJoCo as the best, easy-to-use simulator for deformable-object studies 💪

We recorded data of two robots performing dynamic (left) and quasi-static (right) cloth manipulation. Why? The dynamic motion produces large deformations of the cloth, which are really challenging to simulate! The quasi-static motion requires accurate simulation of frictional and inertial forces.

❤️ Extremely grateful for this special honor from my alma mater @IITKgp! Thanks for nurturing me & providing the launch platform. 🙏 Sincere gratitude to mentors (Emo Todorov @svlevine @fox_dieter17849 @gupta_abhinav_) & collaborators. 🍷🍾 to the whole team!

"Touch at the very least refines, & at the very best disambiguates visual estimates during in-hand manipulation" -- @Suddhus. A pearl of crucial wisdom, yet challenging to convincingly leverage & exploit in robotic dexterous manipulation. Reliable surface sensing is the key!

Neural feels with neural fields: Visuo-tactile perception for in-hand manipulation. paper page: Neural perception with vision and touch yields robust tracking and reconstruction of novel objects for in-hand manipulation.

✨ Curiosity around the sample efficiency of #𝗥𝗼𝗯𝗼𝗔𝗴𝗲𝗻𝘁 triggered multiple requests about its training data distribution (i.e. #RoboSet) 🤔 Attached is a stop-motion of the initial object configurations for a particular scene.

Visiting beautiful Boston for a few days to give talks at @BostonDynamics, @MITCSAIL, @amazon robotics, and @Northeastern 🤓

Time and again, @UnitreeRobotics has changed the game by delivering robotic capabilities at a reasonable cost. For H1:
- form factor is lean
- motors are strong
- battery is reasonable
- cost needs to come down a bit
Reliability for continuous deployment: the jury’s out there testing ⏳

Unitree H1 Breaking humanoid robot speed world record [full-size humanoid] Evolution V3.0 🥰 The humanoid robot driven by the robot AI world model unlocks many new skills! Strong power is waiting for you to develop! #Unitree #AI #subject3 #BlackTech

🌟 Exciting updates on recent submissions 🌟 🙏 Grateful to the students, mentors, & collaborators for these fun projects: @CaggianoVitt @SudeepDasari @JasonMa2020 @yayitsamyzhang @obastani @dineshjayaraman @ZoeyC17 Cameron @tonyzzhao @abhishekunique7 @chelseabfinn @svlevine

🪃 Heading to #ICRA2023 this week with 6⃣ contributions from our group + collaborators! Feeling extremely grateful and lucky to be a part of these collaborations. A thread with details 🧵👇

For a change, there is scientific rigor 🔬 on Twitter, not lofty claims!! 📣 While there are many technical reasons to read this report, I want to call it out for the exquisite demonstration of candor and professionalism by the Genesis team (@zhou_xian_ et al.) and the open-source community.

We’re excited to share some updates on Genesis since its release:
1. We made a detailed report on benchmarking Genesis's speed and its comparison with other simulators.
2. We’ve launched a Discord channel and a WeChat group to foster communications.

𝙈𝙤𝙩𝙤𝙧 𝙗𝙚𝙝𝙖𝙫𝙞𝙤𝙧 𝙞𝙨 𝙖 𝙧𝙚𝙖𝙙 𝙤𝙪𝙩 𝙤𝙛 𝙞𝙣𝙩𝙚𝙡𝙡𝙞𝙜𝙚𝙣𝙘𝙚. Over the last 2 years, I have been focusing on understanding physiological behavior synthesis & building @MyoSuite. In this talk, I summed up the lessons learned and their relevance to robot learning.

The first workshop on Learning Dexterous Manipulation at @RoboticsSciSys is starting now! Check out our speaker lineup at or tune in via zoom at if you are not in person.

🤯 How did we train a universal agent with merely 7k trajectories? Join the #RoboAgent team today @ieee_ras_icra:
- Big Data in Robotics and Automation Session at 4.30 pm (CC-313)
- Poster Session 10.30 am - 12 pm (Board 03.05)

I gave a positioning talk at the human modeling workshop during @ieee_ras_icra, Japan. 🧠 AGI vs embodied sensorimotor intelligence 🧠 Motor behavior vs behavioral intelligence 🤔 How can we build embodied foundation models capturing human dexterity + agility?

- 0.6 m/s
- model-based
- motion plan + state machine
- addition of arm sways etc. for naturalness
=> immersive, nimble locomotion. Would be exciting to see some error recovery @julianibarz.

The next version of our #ALOHA ➡️ #MOBILE_ALOHA. @tonyzzhao @zipengfu & co. outdid themselves! Congratulations. 💗 My favorite: the bloopers toward the end of the video. Don't miss out.

Introducing 𝐌𝐨𝐛𝐢𝐥𝐞 𝐀𝐋𝐎𝐇𝐀🏄 -- Hardware! A low-cost, open-source, mobile manipulator. One of the most high-effort projects in my past 5yrs! Not possible without co-lead @zipengfu and @chelseabfinn. At the end, what's better than cooking yourself a meal with the 🤖🧑🍳

Quite surprised by the reception of #RoboAgent. Most knew about it in detail, had great feedback, and shared new extensions they are working on. Kudos to @tonyzzhao, @mangahomanga @m0hitsharma @jdvakil @ZoeyC17 @ZhaoMandi, the student leads on this research stream: #ACT, #GenAug, #CACTI

Team #RoboAgent is honored to be featured as a spotlight story on @CarnegieMellon's homepage 🙏 🌐 w/ @mangahomanga @m0hitsharma @jdvakil Abhinav Gupta, @shubhtuls

I often get asked by VCs + academics: when will robotics happen? 💪 I’ve always responded with huge optimism about a short timeline, but with brutal honesty about the technical + non-technical challenges! For once, a form factor that I wouldn’t be afraid of bringing into my home 🏠

Unitree G1 mass production version, leap into the future!.Over the past few months, Unitree G1 robot has been upgraded into a mass production version, with stronger performance, ultimate appearance, and being more in line with mass production requirements. We hope you like it.🥳

Another big realization of 2023: 🤏 compliant gripper prongs are the easiest/simplest change that will give you the biggest performance boost. #ROI. Now try communicating that & getting a paper accepted about it!!

Dyson Robot Learning Lab is Hiring full-timers and interns! 🤖. 1x Research Scientist: 1x Data Engineer (Data Collection & ML Training): 3x PhD Internship: Come join our lab of 12; located in London, UK 🇬🇧

If you can induce a training distribution that is a superset of your test distribution, it’s almost stupid to do anything but #sim2real. We are organizing an @ieee_ras_icra workshop on Expanding Frontiers of #sim2real. Details to follow soon.

We trained ANYmal to go into confined spaces such as under collapsed buildings. To be presented at #ICRA2024. Title: Learning to walk in confined spaces using 3D representation.Arxiv: Video: Summary Page:

To further mark the role of off-domain datasets in robotics, ecstatic to announce 📢 the next in the line of our 𝗙𝗼𝘂𝗻𝗱𝗮𝘁𝗶𝗼𝗻 𝗠𝗼𝗱𝗲𝗹𝘀 𝗳𝗼𝗿 𝗥𝗼𝗯𝗼𝘁𝗶𝗰𝘀 from our group & collaborators: #RRL ➡️ #PVR ➡️ #R3M ➡️ #VIP ➡️ #H2R ▶️ #𝗟𝗜𝗩 🧵👇

Excited to share our #ICML2023 paper ✨LIV✨!. Extending VIP, LIV is at once a pre-training, fine-tuning, and (zero-shot!) multi-modal reward method for (real-world!) language-conditioned robotic control. Project: Code & Model: 🧵:

RL suffers from ineffective search due to credit assignment problems in high-dimensional spaces. Not reward tuning, but exploration-based methods like #LATTICE, #DepRL (@rlfromlux), SAR (Cameron), MyoDex (@CaggianoVitt @SudeepDasari) are breaking new ground. Exciting times!!!

Tomorrow Wed 13 Dec 10:45 a.m. CST, @a_marinvargas and I will present Lattice at #NeurIPS2023. Lattice is a new latent exploration method for Reinforcement Learning! Also the top-ranked solution of the @MyoChallenge! Poster #1401:

@svlevine Behind every successful real-world training is a reset mechanism that no one talks about. Highlighting the unsung backstage heroes of our show.

An unpopular opinion in the hot humanoid era 🦾🦿: my bet is on @hellorobotinc as the safe and welcoming form factor most poised to enter unstructured home environments. It’s a step in the right direction, but more work is needed.

We still have a lot of work in front of us, but our dream of home robots that benefit everyone is coming into focus!

- Search is still very inefficient. There is a lot of room to improve.
- The gap between sim & real distributions is slowly collapsing. This has transformed robotics, & it’s just the beginning.
- The future is robust, not optimal, control.
@MyoSuite is an enabling research platform for all of the above.

PhD students should try to organize at least 1 workshop before they graduate. ➡️ Best opportunity to mark + catalyze your research subfield ➡️ Best way to build an academic network ➡️ Best opportunity to interact closely with senior members ➡️ Teaches skills that school/publications don't.

Interested in encouraging debate/discussion on a focused topic in Robot Learning? Consider submitting a workshop proposal to CoRL this year. 📅 Deadline: June 22nd, 2023. ⚡️ Decisions: June 30th, 2023. 🎤 Workshops: November 6, 2023.

I really like the transparency in communication:
- It's autonomous.
- It's 16% human speed!
- It's wired (no video gimmick to hide it).
=> It's amazing 👍

Figure 01 is now completing real world tasks. Everything is autonomous:
- Autonomous navigation & force-based manipulation
- Learned vision model for bin detection & prioritization
- Reactive bin manipulation (robust to pose variation)
- Generalizable to other pick/place tasks

𝗗𝗮𝗿𝘄𝗶𝗻'𝘀 𝘁𝗵𝗲𝗼𝗿𝘆 𝘄𝗼𝘂𝗹𝗱 𝗯𝗲 𝘄𝗿𝗼𝗻𝗴 if natural selection picked such a complex embodiment just to make computation a challenge for our 🧠. Morphological complexity is what enables our nimbleness. 𝗔𝗜 𝗮𝗴𝗲𝗻𝘁𝘀 𝗻𝗲𝗲𝗱 𝗺𝗼𝗿𝗽𝗵𝗼𝗹𝗼𝗴𝗶𝗰𝗮𝗹 𝗽𝗿𝗶𝗼𝗿𝘀.

Want natural motion with RL and muscles, but mocap data is limiting? Biological objectives + constraints now achieve natural walking with 90 muscles! Thanks @tgeijten for contributions with #Hyfydy and @CaggianoVitt & @Vikashplus for #MyoSuite. preprint.

The challenge with hands is not how to build the ONE, but how to build it to last; for it is what collides with the rest of the world for all of a robot's life. Hands are a robot's portal into the real world. 🦾

HOT 🔥 fastest, most precise, and most capable hand control setup ever. Less than $450 and fully open-source 🤯 By @huggingface, @therobotstudio, @NepYope. This tendon-driven technology will disrupt robotics! Retweet to accelerate its democratization 🚀 A thread 🧵

🚨 We're building something unique toward @vkhosla's #1. 👀 We're #hiring for a role in language-based reasoning between human embodiments <intern 🌐 / FTE (preferably 🇺🇸)>. 💪 Got a knack for complex multi-modal reasoning? Got an exceptional understanding of #LLM APIs? 📩 Drop me a DM.

Entrepreneurs, with passion for a vision, invent the future they want. These are my predictions for abundant, awesome, technology-based, Possible Tomorrows (2035-2049), if we allow them to happen! #TED2024 @TEDTalks

It’s RL, the 🍒, stealing the show again!! Embodied motor control requires generalization far exceeding that of LLMs & VLMs. AGI experts should pay attention. @JitendraMalikCV has been arguing this as eloquently as possible for quite some time now.

Unitree B2-W Talent Awakening! 🥳 One year after mass production kicked off, Unitree’s B2-W Industrial Wheel has been upgraded with more exciting capabilities. Please always use robots safely and friendly. #Unitree #Quadruped #Robotdog #Parkour #EmbodiedAI #IndustrialRobot

Appearing at #RSS2023 this week: 4⃣ strong papers from our group, from incredible lead authors 💪
🎯 #GenAug - @ZoeyC17 (best system paper nomination)
🎯 #ALOHA - @tonyzzhao
🎯 #SAR - Cameron Berg
🎯 #LIV - @JasonMa2020
Details in thread 🧵🧵🧵 (all times GMT+9, Korea local time)
