Elan Rosenfeld

@ElanRosenfeld

Followers: 1,197 · Following: 191 · Media: 31 · Statuses: 329

Final year ML PhD Student at CMU working on principled approaches to robustness, security, generalization, and representation learning.

Joined September 2018

Another round of "publication incentives are messed up". He's right, of course, but the people who say this are almost always those who publish lots and are no longer beholden to the game.

This is something I've thought a lot about. As a senior PhD student, I have no...

1/n

@thegautamkamath

While this response is overtly disrespectful, reviews are frequently just as flippant and blatantly devoid of empathy. We've all received such reviews.

The difference is the reviewers are in a position of power, so they can be disrespectful under the guise of "peer review".

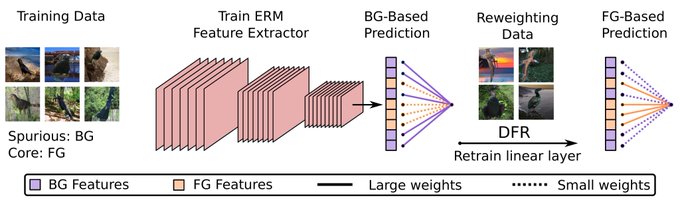

DNNs trained with ERM are often said to use the “wrong” features, thus suffering under distribution shift. Minimizing an alternate objective end-to-end *implicitly assumes* the entire network is to blame.

But is it?

New work: w/ P. Ravikumar, @risteski_a

Excited to attend #NeurIPS2023 this week! DM me to chat about robustness, generalization, Opposing Signals, etc.

Among other things, I'll present work on provable + tight error bounds under shift:

and give a talk on Opposing Signals at ATTRIB.

List below 👇

Want to learn more about the failure modes of recent work on invariant feature learning (e.g., Invariant Risk Minimization)?

Check out my CMU AI seminar talk, based on work at ICLR’21 w/ @risteski_a and Pradeep Ravikumar. 1/3

As amazing as Shai's research is, let's please make an effort to stop attributing works solely to their senior author, who isn't lacking recognition, while ignoring the grad student(s), who probably did most of the work (and possibly all of it).

@ToscaLechner is easy to find.

@thegautamkamath

I would never post a response like this. But we're kidding ourselves if we act like the kind of reviews people give on these platforms aren't basically just as insulting, without being so explicit about it.

Nice to see an independent corroboration of our findings!

The improved accuracy and reduced computational cost are exactly the kind of benefits I had in mind. More evidence that this is a promising direction moving forward.

Last Layer Re-Training is Sufficient for Robustness to Spurious Correlations. ERM learns multiple features that can be reweighted for SOTA on spurious correlations, reducing texture bias on ImageNet, & more!

w/ @Pavel_Izmailov and @andrewgwils

1/11

@deepcohen

Actually, I also saw this happen for a fixed architecture but a different *optimizer* (and with the same batch ordering).

@shortstein

I don't disagree with this.

However, there are many cases where it is abundantly clear a reviewer did _not_ try their best. I've been assigned papers that I anti-bid, and I still put in the effort. I don't think "I didn't want to review this" is a valid excuse for a poor review.

Great news! To give people a little more flexibility around the #NeurIPS2022 deadline, we are pushing the @icmlconf PODS workshop deadline to May 25th.

We still strongly encourage submissions by the 22nd to help keep the reviewing timeline on schedule.

Good luck everyone!

Enjoyed talking with @ml_collective about our recent work on Opposing Signals. Got some great Qs, thanks to @savvyRL for hosting me!

If you want to learn more about *what actually happens* when you train a NN, check out the recording:

Work w/ my amazing advisor @risteski_a

CC: @PreetumNakkiran, @TheGradient, @prfsanjeevarora, @ShamKakade6

Also thanks to @kudkudakpl for the earlier (anonymized) shoutout 🙂

I will be giving a (very) brief talk on this work at the ATTRIB workshop at 10:35 tomorrow morning. Stop by if you're interested!

I'll present the poster there and at the heavy tails workshop.

A sequence of videos of Will Smith eating spaghetti, overlaid with the shutterstock logo. In some clips he uses a fork and in others his hands overflow with spaghetti as he shovels it into his mouth. In each clip he is wearing a different outfit. One clip has two Will Smiths.

@leonpalafox

True, but if you look at the trend I think it's reasonably likely this'll hold even once it updates.

IRM has become one of the go-to methods for OOD generalization, but it turns out it can have some rather pernicious failure cases.

Check out our new paper, "The Risks of Invariant Risk Minimization"!

First foray into OOD generalization, joint with new co-advisee @ElanRosenfeld and Pradeep Ravikumar:

We show a few (mostly negative) results about IRM:

The unfortunate thing about doing research ahead of its time in ML is that when people get around to realizing its value, they've forgotten about the papers--and it's against their self-interest to do the work of finding them.

@EduardoSlonski

You may be interested in our work showing a similar "opposite" pattern as it evolves over time during training:

@roydanroy

You can build ships without knowing anything about fluid mechanics.

You can develop better-than-random medicine without knowing anything about germ theory.

You can selectively breed animals without knowing anything about genes and heritability.

It's the rule.

@yoavgo

You forgot "unrelated theoretical guarantee for an overly simple setting which would never apply when using the proposed architecture".

Not a diss, just want to let people who are unaware know that Alec and the CLIP paper did not invent caption matching for multimodal alignment.

Last summer I worked with @FartashFg, from whom I learned this is an old idea (e.g. , he didn't invent it either)

@thegautamkamath

For what fraction of papers would I read it and say "that's a good paper"? Very low.

What fraction would I read and say "that's good enough to get into NeurIPS"? A lot.

@prfsanjeevarora

Isn't this just another instance of "moving the goalposts"? If you aren't willing to accept that his attack breaking the challenge is evidence of InstaHide being broken, why did you put up the challenge?

Tomorrow at AISTATS I’ll present our work analyzing online Domain Generalization, which suggests that “likelihood reweighting” (aka group shift) may be an insufficient model of dist. shift

w/ P. Ravikumar, @risteski_a

Link:

Poster session 5, 11:30 AM EDT

One thing I find really encouraging is that this doesn't require industry-scale clusters. Not that "low compute = better", but the other winners all used massive compute (n.b. DPO reduces that need).

This paper is proof that thoughtful, impactful work can be done on a budget.

I once reviewed a paper for ICML that had all sorts of problems. But it had been hyped up so much that I felt it was better for a corrected version to be published than not at all. I was in the minority in favor of acceptance and I made my reservations clear.

It got an oral 🙃

@hardmaru

Also, if anyone would like to learn about this work in more detail I will be giving a talk at the CMU AI Seminar next week:

@norabelrose

This paper came out 5 years ago...

See also our recent paper explaining why this happens

Wow, the two points in the abstract of number (1) are clever and I'm surprised I haven't seen this interpretation before. Is this widely known?

To add to this, we (i.e., humans) are notoriously bad at credit assignment, and we tend to underestimate the degree of luck involved in our life trajectory. Goes with the mention of survivorship bias, but also we want to believe we have much more foresight than we actually do.

Related, possibly controversial:

As a rule of thumb, if the abstract uses the phrase "We present/propose", I regard it with greater skepticism. It sounds to me like the authors are marketing a product, and you should always be skeptical of marketing.

(skeptical ≠ disbelieving)

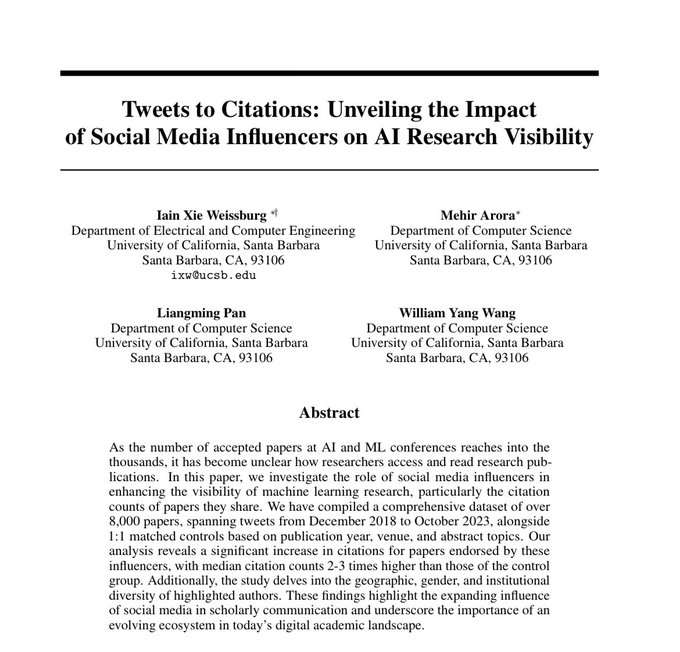

Crazy AF. Paper studies @_akhaliq and @arankomatsuzaki paper tweets and finds those papers get 2-3x higher citation counts than control.

They are now influencers 😄 Whether you like it or not, the TikTokification of academia is here!

@thegautamkamath

I think it is reasonably likely for both to receive no scrutiny by experts at all.

Forgot to say this work will be at 3 NeurIPS workshops, w/ a short talk at ATTRIB.

Our work on provable error bounds under shift will also be at the main conf:

Come by to discuss either! Or my recent foray into strategic classification:

P.S. If you're coming to #NeurIPS2023, come hear Elan talk about it at the ATTRIB workshop, and/or come to our poster at the M3L and Heavy Tails workshops.

This looks like a very interesting paper!

Though I wish people would stop perpetuating the use of ColoredMNIST---it is not and has never been a meaningful benchmark. If you reduce the absurd 25% label noise to something more reasonable, vanilla ERM does just fine.

Empirical Study on Optimizer Selection for Out-of-Distribution Generalization #learning #distributional #classification

machine learning academia in 2022

@bremen79 @ToscaLechner

Yeah, it's tricky. I get that it's a lighthearted joke (also I'm a sucker for puns 😁). But it contributes to the culture.

I get why the media does it---they don't know better and they need a "name" to latch on to. But as past/current students, we should stop normalizing it.

Say you have (what you believe to be) a very interesting idea for a long-term project (multiple papers) on a major open question in Deep Learning.

You have a related paper that arose from it, but you're about to finish your PhD and can't pursue it to fruition. Do you:

- Post short paper on arXiv (80)
- Put it in your job talk (29)
- Wait 1.5 yrs til next job (15)
- Other (what?) (19)

Along the way, we also derive a more effective surrogate loss for maximizing multiclass disagreement.

We evaluate on an extensive array of shifts and training methods, and we've released our code and all features/logits:

Work done w/ @saurabh_garg67

@deepcohen

I saw this tweet at the end of the thread without reading the previous ones and I thought "oh, he's finally decided to admit it" 😉

PSA: @openreviewnet takes a few minutes to display a new review after you first submit it. Not sure what the cause is, but it's been the case for a while now. So no, your review is not gone; just wait a bit before refreshing!

#ICLR2023

@shortstein @thegautamkamath

Unfortunately it depends on the content of the reviews and rebuttal.

If an AC sees mostly low scores and decides "no reason to get involved, this paper will probably be rejected", why even have an AC for these papers in the first place?

@sherjilozair

Everyone is talking about it because it's from Google and got tweeted by someone with followers. Not because it says anything surprising or new.

@giffmana @sarameghanbeery

So while I agree this is an outdated motivation, I think the general idea behind it still stands.

I think of the "cows on a beach" example as pointing out more generally that these systems lack common sense and will often give incorrect outputs with high confidence.

@aldopacchiano @chris_hitzel

That's a good point which I had not considered. It's hard to avoid optimizing for pure publication/citation count when failure means deportation.

I guess I'm encouraging those who can afford to take the risk, so that future int'l students won't have to face that choice.

@Lisa_M_Koch @zacharylipton

We will stream the talks, but there is no conference support for virtual poster sessions. If you have an accepted paper but cannot attend in person, you will be able to submit a video and send your poster to the ICML organizing committee who will print it and set it up for you.

@OmarRivasplata @zacharylipton

Yes, the first link is my paper! And the other one I've read.

Yet there still seems to be the idea that "it does work sometimes" in the zeitgeist and I cannot understand where this comes from.

@ericjang11

I noticed the same thing about rhyming, and spent a while trying to fix it. This included asking GPT-4 to read and assess its response, and then determine if it succeeded. It didn't work---GPT-4 kept assuming that it had succeeded after the second try...

@rcguo

Surprised this isn't common knowledge yet, but IRM doesn't work on Colored MNIST. The experiments in the paper validate on the test domain. Check the code.
