Polina Kirichenko

@polkirichenko

Followers: 3,003

Following: 1,129

Media: 42

Statuses: 211

Machine learning researcher; prev. PhD at New York University, Visiting Researcher at @MetaAI FAIR Labs 🇺🇦

New York City, NY

Joined November 2018

Pinned Tweet

I recently defended my PhD thesis and graduated from NYU! 🎉 Thank you so much to my committee members

@andrewgwils

@sainingxie

@mengyer

@Qi_Lei_

@orussakovsky

@rob_fergus

(sadly I forgot to take a group Zoom picture)

Happy graduation photos below! 👩‍🎓

46

4

357

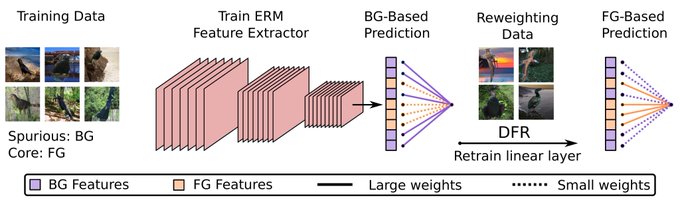

Last Layer Re-Training is Sufficient for Robustness to Spurious Correlations. ERM learns multiple features that can be reweighted for SOTA on spurious correlations, reducing texture bias on ImageNet, & more!

w/

@Pavel_Izmailov

and

@andrewgwils

1/11

13

71

525

Excited to share our

#NeurIPS

paper analyzing the good, the bad and the ugly sides of data augmentation (DA)! DA is crucial for computer vision but can introduce class-level performance disparities. We explain and address these negative effects in: 1/9

4

74

427

Why Normalizing Flows Fail to Detect Out-of-Distribution Data

We explore the inductive biases of normalizing flows based on coupling layers in the context of OOD detection (1/6)

The inductive biases of normalizing flows can be more of a curse than a blessing. Our new paper, "Why Normalizing Flows Fail to Detect Out-of-Distribution Data", with

@polkirichenko

and

@Pavel_Izmailov

:

5

62

344

1

65

351

We are excited to present our breakout session on robustness to distribution shift at

@WiMLworkshop

@icmlconf

together with

@shiorisagawa

@LotfiSanae

! Join our session & discussion tomorrow, Monday July 18, at 11am in Exhibit Hall G (Level 100 Exhibition Halls) 🙂

#ICML2022

1

26

271

We will be presenting "Why Normalizing Flows Fail to Detect Out-of-Distribution Data" at

@NeurIPSConf

tomorrow at 12 EST!

1

20

219

Come see our paper "Last Layer Re-Training is Sufficient for Robustness to Spurious Correlations" at

#ICLR2023

(notable-top-25%)! Spotlight talk on Wed 10:10am Oral 6 Track 5 and poster session 6 at 10:30am! (joint work w/

@Pavel_Izmailov

@andrewgwils

)

2

28

203

I’m in Baltimore for

#ICML2022

! Really excited to attend the first in-person conference in a few years, connect with people and meet old friends! Ping me if you want to grab coffee or chat about research in deep learning robustness or other topics 🙂

1

8

198

💯 I will add my two cents here as a Russian student on F-1 visa in the US. While a PhD program typically takes 5-6 years (which is also indicated in all the legal documents, school invitation etc), Russian citizens can only get a student F-1 visa for 1 YEAR max!! 🤯😭 1/🧵

3

25

188

Excited to be in New Orleans for

#NeurIPS2022

! Ping me if you want to grab coffee or lunch and chat about distribution shift, robustness, OOD generalization, jazz and other topics! 1/2

5

7

188

Excited to lead a breakout session on Uncertainty in Deep Learning at WiML Un-Workshop

@icmlconf

tomorrow, July 13 at 6:35pm GMT (2:35pm EDT)!

Together with

@MelaniePradier

and Weiwei Pan

1

13

120

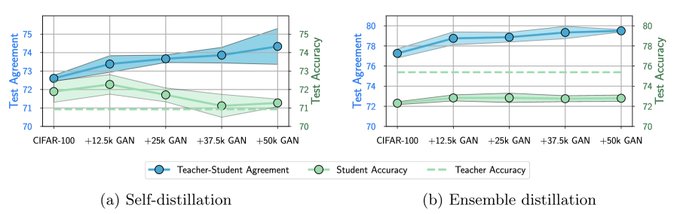

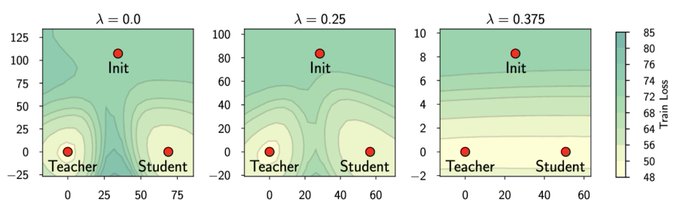

While most papers on knowledge distillation focus on student accuracy, we investigate the agreement between teacher and student networks. Turns out, it is very challenging to match the teacher (even on train data!), despite the student having enough capacity and lots of data.

Does knowledge distillation really work?

While distillation can improve student generalization, we show it is extremely difficult to achieve good agreement between student and teacher.

With

@samscub

,

@Pavel_Izmailov

,

@polkirichenko

, Alex Alemi. 1/10

7

73

344

3

15

118

An image is worth more than one caption!

In our

#ICML2024

paper “Modeling Caption Diversity in Vision-Language Pretraining” we explicitly bake that observation into our VLM, called Llip, and condition the visual representations on the latent context.

🧵1/6

5

20

119

We will be presenting "Why Normalizing Flows Fail to Detect Out-of-Distribution Data" tomorrow 1pm EST at the INNF+ workshop

#ICML2020

! Will be very happy to chat about flows and OOD detection :)

2

11

117

Happy to share I am presenting on applications of normalizing flows at two

@icmlconf

workshops on Friday! First, I am giving an invited talk at the Workshop on Representation Learning for Finance and e-Commerce at 11:30-12pm EST (already available online)

1

12

103

Join our breakout discussion session "Leveraging Large Scale Models for Identifying and Fixing Deep Neural Networks Biases" at

@WiMLworkshop

@icmlconf

tomorrow Friday July 28, 11am in room 316C, with

@megan_richards_

@ReyhaneAskari

@vpacela

@mpezeshki91

!

#ICML2023

@trustworthy_ml

1

15

79

I am presenting a spotlight talk on our paper "Last Layer Re-Training is Sufficient for Robustness to Spurious Correlations" at the

#ICML2022

SCIS workshop!

Tmrw 11:40am with poster sessions 1:30 & 5:45pm (rooms 340-342)

w/

@Pavel_Izmailov

&

@andrewgwils

0

10

72

Excited to share our

#NeurIPS2022

paper “On Feature Learning in the Presence of Spurious Correlations”! We show that features learned by ERM are enough for SOTA performance, while group robustness methods are actually not learning better representations.

Spurious features are a major issue for deep learning. Our new

#NeurIPS2022

paper w/

@pol_kirichenko

,

@gruver_nate

and

@andrewgwils

explores the representations trained on data with spurious features with many surprising findings, and SOTA results.

🧵1/6

5

55

327

1

13

73

Very excited to give a talk about our research on normalizing flows at this seminar on 10/21 10am EST!

Are you interested in Normalizing flows?

Feel free to join our online seminar this Wednesday (21/10) at 16:00 CET.

@__DiracDelta

,

@polkirichenko

and

@WehenkelAntoine

will give us a short introduction and present their research.

More info:

3

14

97

0

3

64

Come to our poster on data augmentation biases today at the Poster Session 6 at 5-7pm, poster

#1619

!

#NeurIPS

@trustworthy_ml

2

6

61

Check out this video series on uncertainty in ML! I gave a talk on Uncertainty Estimation via Generative Models, and there are a lot of interesting talks from collaborators covering fundamentals & best practices. Thanks

@AndreyMalinin

for putting this together!

I would like to share our Uncertainty in ML video series, which broadly covers a range of topics in uncertainty estimation.

Thanks to

@senya_ashuha

@polkirichenko

@DanHendrycks

@provilkov

@LProkhorenkova

@BMlodozeniec

@ekolodin98

for making this possible!

1

22

101

0

5

55

Excited to co-lead this breakout session on uncertainty estimation at WiML Un-Workshop

@icmlconf

! Join us today at 7:25pm ET

Join us July 21 7:25 pm ET at WiML Un-Workshop at

@icmlconf

for a breakout session on "Does your model know what it doesn’t know? Uncertainty estimation and OOD detection in DL"

Together with

@polkirichenko

@AkramiHaleh

@sharonyixuanli

@sergulaydore

0

4

17

0

8

50

Excited to share our

#ICLR2024

work led by

@megan_richards_

on geographical fairness of vision models! 🌍

We show that even the SOTA vision models have large disparities in accuracy between different geographic regions.

Does Progress on Object Recognition Benchmarks Improve Generalization on Crowdsourced, Global Data?

In our

#ICLR2024

paper, we find vast gaps (~40%!) between the field’s progress on ImageNet-based benchmarks and crowdsourced, globally representative data.

w/

@polkirichenko

,

1

0

22

1

5

40

Come to our poster presentation on knowledge distillation at

#NeurIPS2021

7:30pm EST! 🙂

We are presenting our paper "Does Knowledge Distillation Really Work?" at

#NeurIPS2021

poster session 2 today - come check it out! Joint work with

@Pavel_Izmailov

,

@polkirichenko

,

@alemi

, and

@andrewgwils

.

Poster:

Paper:

2

14

83

3

6

35

Come see our work "SWALP: Stochastic Weight Averaging in Low-Precision Training" at

@icmlconf

!

Spotlight: 5:05pm in Hall B, poster: 6:30-9pm in Pacific Ballroom

#58

. Joint work with

@YangGuandao

,

@Tianyi_Zh

, Junwen Bai,

@andrewgwils

,

@chrismdesa

.

Paper:

0

10

29

I am very grateful to

@andrewgwils

for his advising and support and for many exciting research projects we worked on together! It was a huge privilege to have been a part of Wilson lab and to do my PhD in a great lab environment with incredibly smart and ambitious colleagues!

1

0

19

To this day there is no way to get any US visa type in Russia at all, and it is not clear whether it will ever be possible again. Similarly to

@newspaperwallah

story, we have to monitor visa appointment slots in Telegram in other countries and the # of slots is very limited 4/

1

0

19

The works featured in the talks are:

- semi-supervised learning

- anomaly detection

- continual learning

and a 🧵on the continual learning paper:

0

0

14

Come to our poster at NeurIPS!

W/ amazing co-authors

@randall_balestr

Mark Ibrahim

@D_Bouchacourt

@rama_vedantam

@mamhamed

@andrewgwils

And check out Randall’s thread too!

9/9

Excited to share our

#NeurIPS2023

paper explaining part of the per-class accuracy degradation that data augmentation introduces: it creates asymmetric label-noise between coarse/fine classes of the same object e.g. car and wheel! We also find a remedy⬇️

1

12

82

2

1

15

Come talk to us about spurious correlations robustness today at 4-6pm in Hall J 103!

#NeurIPS2022

We will be presenting "On Feature Learning in the Presence of Spurious Correlations" today (Nov 29) at

#NeurIPS2022

w/

@polkirichenko

@gruver_nate

@andrewgwils

!

Hall J

#103

, 4-6 pm

Paper:

4

4

25

0

1

14

Several works (incl ) found strong DA is not beneficial for all classes: while the accuracy improves on the majority of ImageNet classes, it drops by as much as 20% on some of them! Check out

@ylecun

thread for more details! 2/9

1

1

14

A huge shoutout to

@gruver_nate

who will be presenting the talk and poster in person since unfortunately I couldn't travel due to immigration constraints 🙏

0

0

9

With a great team of coauthors at

@DeepMind

@GoogleAI

and

@NYUDataScience

:

@MFarajtabar

,

@drao64

,

@balajiln

, Nir Levine,

@__angli

, Huiyi Hu,

@andrewgwils

and

@rpascanu

!

0

0

7

@tdietterich

@Pavel_Izmailov

@andrewgwils

ERM is now common terminology for standard training in the robustness & domain generalization literature to make a distinction with methods like IRM or GDRO, which are optimizing a different loss function, not standard risk.

1

0

6

Many more results in the paper, including analysis of representations and adding image patch tokens to the contextualization. See a thread from Sam who led the effort!

Together w/

@lavoiems

@marksibrahim

@mido_assran

@andrewgwils

@AaronCourville

Nicolas Ballas

1

0

6

See paper for many more results!

Huge thanks to my co-authors

@Pavel_Izmailov

,

@gruver_nate

and

@andrewgwils

🙂

1

0

5

If you’re at ICLR check out Megan’s poster on Friday!

Come chat at our poster, Friday 10:45am, Halle B Poster

#213

! :) And see our full paper here: .

1

0

8

0

0

4

@ericjang11

@andrewgwils

@Pavel_Izmailov

I'll have to read more about the VIB objectives, but I think latent variable models are indeed promising for OOD detection. In the paper we are not advocating for using flows or proposing a specialized R-NVP as a new method, it's more about understanding the problem :)

1

0

3

Using additional learnable tokens in ViTs (called registers) was also discussed in the recent paper by

@TimDarcet

et al for improving interpretability of ViT attention maps, but they don’t use the registers for computing the visual representation.

3/6

1

0

3

@KateLobacheva

was my mentor during my undergrad, and she's always been an inspiration for me! Check out a cool new paper on deep ensembles from her and other collaborators from

@bayesgroup

:)

Our paper “On Power Laws in Deep Ensembles” has been accepted to

#NeurIPS

as a spotlight! Thanks to my co-authors

@nadiinchi

, Maxim Kodryan, Dmitry Vetrov and to all

@bayesgroup

Paper:

2

8

49

0

0

3

@serrjoa

@andrewgwils

@ericjang11

@Pavel_Izmailov

Hi Joan, we read your paper and connect our observations to your work in sec. 6 :)

1

0

2

@senya_ashuha

@IsomorphicLabs

@maxjaderberg

@wojczarnecki

@SergeiIakhnin

@demishassabis

Congrats Arsenii! 🎉 🥳

0

0

2

@tarantulae

@Pavel_Izmailov

@tdietterich

@andrewgwils

They are quite different. We show that standard ERM learns the core features even when it relies on the spurious features. By retraining just the last layer, we are able to remove the reliance on spurious features in ERM-trained models... 1/2

1

0

2

@ericjang11

@andrewgwils

@Pavel_Izmailov

The experiments in sec. 7 where we change masking and coupling layer networks are aimed at understanding what goes wrong in regular flows, not proposed as a solution

0

0

2

@tomgoldsteincs

I'm super late to the party, but just saw this paper, and it sounds very relevant!

Our paper 'Proper Reuse of Image Classification Features Improves Object Detection'

#backbone_reuse

(with

@viggiebirodkar

and

@dumoulinv

) will be presented at CVPR 2022 as an Oral presentation on June 23. You can check it on

6

7

38

0

0

1