Sanae Lotfi

@LotfiSanae

Followers

2,163

Following

318

Media

41

Statuses

373

PhD candidate at NYU, research intern @MSFTResearch | @GoogleDeepMind and @MSFTResearch Fellow | Prev. @MetaAI (FAIR) and @AmazonScience

New York City, NY

Joined August 2020

Pinned Tweet

This Moroccan Arab Muslim first-generation woman just gave her first long talk for her award-winning paper at

#ICML2022

! I dedicate this achievement to all the underrepresented groups that I proudly represent!

So overwhelmed by all the support that I received! Many thanks! 1/2

61

67

1K

I'm so grateful and honored to receive the Microsoft Research PhD Fellowship

@MSFTResearch

!!🥳

This fellowship means that my research and that of my group led by

@andrewgwils

is recognized as meaningful and impactful for the machine learning community! 1/3

38

31

745

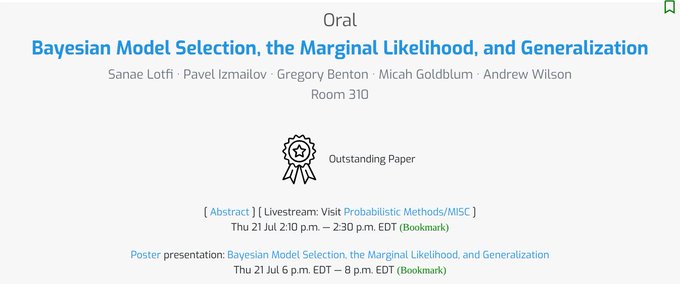

I'm so proud that our paper on the marginal likelihood won the Outstanding Paper Award at

#ICML2022

!!! Congratulations to my amazing co-authors

@Pavel_Izmailov

,

@g_benton_

,

@micahgoldblum

,

@andrewgwils

🎉

Talk on Thursday, 2:10 pm, room 310

Poster 828 on Thursday, 6-8 pm, hall E

I'm happy that this paper will appear as a long oral at

#ICML2022

! It's the culmination of more than a decade of thinking about when the marginal likelihood does and doesn't make sense for model selection and hyper learning, and why. It was also a great collaborative effort.

4

19

219

13

33

322

I appreciate this apology, but I am saddened that you are still implying a lack of scientific integrity. 1/N

I'm sorry for the negative attention I brought on

@LotfiSanae

in what should have been a joyous moment and who has authored an amazing & exciting paper on a complex topic. My intention was scientific integrity, but I lost sight of the broader context of this academic debate &

1

0

56

7

11

187

It was a great honor to be distinguished as a Rising Star in ML by

@ml_umd

🥳 Many thanks to the organizers and professors with whom I had great discussions!

Next stop:

#NeurIPS2023

; so excited to share our work on generalization bounds for LLMs and host

@MuslimsinML

there!

6

6

143

Went to Harvard to give a talk about our work on the marginal likelihood and PAC-Bayes bounds, ended up meeting

@ConanOBrien

there. It turns out he’s a big Bayesian!

2

2

143

Can LLMs generalize meaningfully beyond their training data?

We answer this question by computing the first non-vacuous generalization bounds for LLMs. Wanna learn more? Find us at the M3L and SSL workshops 😉

w/

@m_finzi

@KuangYilun

@timrudner

@micahgoldblum

@andrewgwils

1

13

135

Had a great time presenting our work on PAC-Bayes bounds. Thanks to everyone who stopped by; many insightful discussions!

I’m at

#NeurIPS2022

this week, ping me if you want to discuss generalization in deep learning or Taylor Swift concert tickets!

Paper:

5

7

122

🚨

#NeurIPS2022

poster today: 4-6pm, Hall J

#306

🚨

Why do CNNs generalize so much better than MLPs? Why can neural networks fit random labels and still generalize? What is the value of encoding invariances in our models?

1/N

2

16

104

We’re organizing Muslims in ML

@NeurIPSConf

this year 🥳

Submit a two-page abstract if you would like to showcase and present your work at the workshop by September 22 ⏰

Excited to announce that the Muslims in Machine Learning (MusIML) workshop is back

@NeurIPSConf

!

If you self-identify as Muslim, or work on research that addresses challenges faced by Muslims, we'd love to showcase your work. Submit an abstract by Sep 22:

1

5

31

2

17

98

This competition resulted in hundreds of submissions and many interesting solutions that will be presented by the winners 🏅 tomorrow starting 1pm ET 🎉 Join us to discover these scalable approximate inference methods for Bayesian Deep Learning:

I heard a rumour there is this amazing Approximate Inference in Bayesian Deep Learning competition at

#NeurIPS2021

tomorrow, starting at 1 pm ET. From what I understand, the winners will be revealing their solutions, and the link to join is . 🤫

0

43

193

3

17

90

Very excited to announce that I will be joining the Center for Data Science at New York University as a PhD student.

I am also very honored to receive the DeepMind Fellowship.

Thank you

@DeepMind

and

@NYUDataScience

!

8

4

87

So happy we ended up having a last minute talk by

@MarzyehGhassemi

on Ethical AI in Health

@MuslimsinML

!

I am a big fan of

@MarzyehGhassemi

’s work and I also like her so much as a female researcher, mentor, and role model. Very thankful I got a front seat to her talk! 🥳

2

8

82

That’s not true. We re-ran any experiments affected by a minor bug (one panel only in the main text) in the camera ready, and the qualitative results remain unchanged. We engaged extensively with all your questions via email, up through you publicizing your review.

2

1

58

Wrapped up my Boston visit with a talk at

@MIT_CSAIL

! Many thanks to all the brilliant researchers who invited me or made time to chat! Thank you

@MarzyehGhassemi

,

@irenetrampoline

and Yaniv for being amazing hosts as well!

Bonus: I discovered more Taylor Swift fans in academia!

0

0

56

I am at ICML this week and would love to meet and chat with some of you there! If you would like to talk about generalization in deep learning and other topics, ping me here or find me somewhere in the Baltimore Convention Center!

#ICML2022

0

2

48

Our extended paper on the marginal likelihood was accepted to JMLR 🎉

In this version, we expand on the marginal likelihood’s connection to PAC-Bayes bounds, its approximations, and its use for architecture search.

Check the thread👇for more details and stay tuned for more!

3

4

44

Thrilled that this work with

@Pavel_Izmailov

,

@g_benton_

,

@micahgoldblum

, and

@andrewgwils

got accepted for a long oral presentation at ICML 2022. It was fun to investigate and articulate the subtle ways in which the marginal likelihood is different from generalization!

2

3

44

Come join me tomorrow, alongside

@polkirichenko

and

@shiorisagawa

, to discuss methods and challenges in tackling distribution shift in deep learning. No matter what you are working on, come share with us how distribution shift affects your work and/or its applications!

#ICML2022

We are excited to present our breakout session on robustness to distribution shift at

@WiMLworkshop

@icmlconf

together with

@shiorisagawa

@LotfiSanae

! Join our session & discussion tomorrow, Monday July 18, at 11am at the Exhibit Hall G at Level 100 Exhibition Halls 🙂

#ICML2022

1

25

269

2

2

32

🔥Talk tomorrow, Dec. 5, at the

@NorthAfricansML

workshop, 10-11 AM GMT+1 🔥

"Are the Marginal Likelihood and PAC-Bayes Bounds the right proxies for Generalization?"

1/11

1

5

30

Did you engage with the authors of the papers that you review today?

Channeling my inner

@kchonyc

😅

0

3

29

Big thanks to my amazing co-authors

@Pavel_Izmailov

,

@g_benton_

,

@micahgoldblum

, and my incredible advisor

@andrewgwils

who put a lot of effort into this work and supported me all along!

Want to learn more about this work? Check out our poster 828, hall E, between 6 and 8 pm EST!

0

1

26

When is it responsible to deploy a general purpose AI system?

@OpenAI

’s

@_lamaahmad

is answering this question right now

@MuslimsinML

!

Very exciting talk about identifying the potential risks of

@OpenAI

’s systems through Red Teaming!

1

4

20

We will release this exciting work soon w/

@m_finzi

,

@snymkpr

, Andres Potapczynski,

@micahgoldblum

and

@andrewgwils

! 🔥

@thegautamkamath

Best title so far

"PAC-Bayes Compression Bounds So Tight That They Can Explain Generalization"

I'm intrigued..

2

0

11

0

0

19

@biotin10000mcg

"A formal definition of a first-generation college student is a student whose parent(s) did not complete a four-year college or university degree." My parents actually never went to school. So proud of them for raising us and educating us to appreciate science this much!

1

0

18

I'd like to thank my incredible advisor

@andrewgwils

for his continuous support, my supportive colleagues

@Pavel_Izmailov

and

@micahgoldblum

for their feedback on my proposal, and my amazing masters advisor

@69alodi

and Prof. Julia Kempe for supporting my application! 2/3

2

0

16

Very cool work! I was just yesterday mentioning during the lab I TA that we have more intuition about the function space than the parameter space, and that it would be cool to thoroughly investigate function space MAP estimation — we don’t have to wonder anymore! 🥳

When training machine learning models, should we learn most likely parameters—or most likely functions?

We investigate this question in our

#NeurIPS2023

paper and made some fascinating observations!🚀

Paper:

w/

@ShikaiQiu

@psiyumm

@andrewgwils

🧵1/10

3

23

139

0

4

17

Some of the PhD struggle is necessary and a byproduct of growth, but some of it is not and needs to be dealt with effectively and compassionately! We’ll try to figure out which is which with amazing peers

@SCSatCMU

👇

Let’s keep discussing PhD student mental health as a community!

📣📣

@SCSatCMU

PhD students: I'm so excited to share that

@charvvvv_

,

@LotfiSanae

, Yaniv Yacoby, and I are bringing this amazing workshop to CMU!

Join us to reflect on PhD student mental health, community-building🫂, and academic culture. (1/n) 👇

1

16

89

0

2

15

I just donated too! Thanks

@bneyshabur

for bringing this into my feed! So grateful and proud of

@ml_collective

and

@DeepIndaba

for their effort to support African researchers!

P.S: you can also help with your time through the Indaba Mentorship Programme:

8 researchers from Nigeria got accepted into Deep Learning Indaba

@DeepIndaba

but couldn't afford the trip. Access to research, mentorship, and networking opportunities like this is vital for early-stage researchers. You can help make their trips possible!

7

199

245

1

4

15

@shortstein

The reviewer in me likes it, the author in me does not. What a dilemma!

In general, I believe we should treat the papers we review the same way we want our papers to be treated, so I'll probably pass on this option whenever possible😅

1

0

14

@BlackHC

That’s also incorrect. We did not cherry-pick the experimental setting as I used exactly the same models and checkpoints in both arxiv versions. I did not do any re-training after we fixed the bug. I’m sorry but we don’t think you’re engaging in good faith. Thanks for the review.

1

0

10

So excited to be presenting our work on PAC-Bayes bounds 🔥tonight🔥 alongside an amazing lineup of female speakers!

Join us if you want to hear more about this work w/

@m_finzi

,

@snymkpr

, A. Potapczynski,

@micahgoldblum

,

@andrewgwils

; or to chat!

Paper:

Join us at the Women in AI Ignite today 6:00 pm CT

@NeurIPSConf

Great lineup

#womeninai

A room full of inspiring female figures reminds me of how important it is to support women around the world to live freely and reach their true potential.

#WomenLifeFreedom

@anoushnajarian

0

7

24

0

0

8

Been using CoLA pretty much since it came out and I like it a lot! It makes large matrix inversion, eigenspectrum decomposition and accessing other quantities I need for my research much more memory-efficient and scalable. Works with PyTorch, JAX and supports GPUs. Try it out!

Like differentiation, numerical linear algebra is a notorious bottleneck that is ripe for automation and acceleration. At

#NeurIPS2023

we introduce Compositional Linear Algebra (CoLA)!🥤

w/ A. Potap, G. Pleiss,

@andrewgwils

. 🧵 [1/7]

4

44

270

0

0

7

Big thanks to the

@NorthAfricansML

workshop organizers as well. It's great to see a NeurIPS workshop that is fully accessible in the North African timezone. Hopefully we get to see NeurIPS in Africa soon!

11/11

0

2

7

@FelixHill84

@BlackHC

I have no doubt! I realize how Twitter can make things escalate quickly and amplify misunderstandings, especially when it comes to technical and nuanced discussions!

0

0

7

The final phase of our

#NeurIPS

2021 competition "Approximate Inference in Bayesian Deep Learning" has officially started!

You can test the fidelity of your approximate inference procedure with the opportunity to present it at the

#NeurIPS

Bayesian deep learning workshop (+$)!

0

2

7

@bdl_competition

@riken_en

@tmoellenhoff

@ShhhPeaceful

@PeterNickl_

@EmtiyazKhan

@niket096

@ArnaudDelaunoy

Congratulations to the winners 👏

0

0

7

We explore these questions in our new

#NeurIPS2022

paper “PAC-Bayes Compression Bounds So Tight That They Can Explain Generalization” w/

@m_finzi

,

@snymkpr

, Andres Potapczynski,

@micahgoldblum

and

@andrewgwils

!

2/N

1

0

5

@nsaphra

@BlackHC

@hewal_oscar

Thanks for pointing that out Naomi. The camera ready has updated figures indeed and a discussion about how we never considered cross validation to be a competing metric (see appendix L).

1

0

5

@69alodi

@NYUDataScience

@polymtl

@DS4DM

It was a pleasure to be supervised by you and Dominique. I am so grateful to you,

@69alodi

for all the freedom and guidance you offered. I also feel immense gratitude towards our team at

@DS4DM

. Congratulations on creating this positive, supportive, and collaborative environment.

0

1

5

Join the Q&A session at 18:30 ET to learn more about our work "Stochastic Damped L-BFGS with Controlled Norm of the Hessian Approximation", with Tiphaine Bonniot, Dominique Orban and

@69alodi

OPT2020 Workshop schedule:

Paper:

1

1

5

@Khalid_Montreal

@69alodi

@mxmmargarida

@lyeskhalil

@ssriram1992

@akazachk

@Jiaqi_Liang7

@ChunCheng2

@PeymanKafaei

@GabrieleDrag8

@DS4DM

@jegonzalezj

@wachzetina

Thank you Khalid! Happy new year to everyone!

0

0

4

Join us tomorrow at the workshop to discuss these results and open questions!

Big thanks to collaborators:

@Pavel_Izmailov

,

@g_benton_

,

@m_finzi

,

@snymkpr

, A. Potapczynski,

@micahgoldblum

, and

@andrewgwils

.

10/11

1

1

4

@OpenAI

@_lamaahmad

@MuslimsinML

Lots of great questions, impressed by how much work goes into alignment and risk assessment

@OpenAI

!

0

1

4

@andrewgwils

@gruver_nate

@m_finzi

@ShikaiQiu

Very happy to see the final paper and extensive exploration, well done 👏

0

0

3

@Khalid_Montreal

First place goes to

@riken_en

(

@tmoellenhoff

, Y. Shen,

@ShhhPeaceful

,

@PeterNickl_

,

@EmtiyazKhan

) in both tracks!

Second place goes to

@niket096

and A. Thin in the extended track, who tie with

@ArnaudDelaunoy

for second in the light track.

We'll hear from them all tomorrow 🎉

0

0

3

@akazachk

Good luck for your next journey Aleks! I am sure you will be a great professor and a fantastic mentor to all your future students!

1

0

3

Very interesting work on how to properly do transfer learning by capturing much more than just the initialization from pre-trained models.

0

0

3

PAC-Bayes bounds are another expression of Occam’s razor, where simpler descriptions of the data generalize better, and can be used to understand generalization in deep learning.

5/11

1

0

2

@Khalid_Montreal

@DS4DM

Thank you very much

@Khalid_Montreal

! Your help and support helped a lot with that!

0

0

2

@micahgoldblum

You should at least add her in the acknowledgement paragraph for moral support and feedback (pretty sure she gives you feedback, doesn't she?)

1

0

2

@KyleCranmer

@irina_espejo

@NYUDataScience

@ATLASexperiment

@iris_hep

Great article! Proud of you

@irina_espejo

!

0

0

2

@EmtiyazKhan

Thank you for participating, we are excited to learn more about your solution tomorrow!

0

0

1

@ShresthaRobik

@polkirichenko

@shiorisagawa

I don't think so but we can try to make the slides available.

@polkirichenko

@shiorisagawa

1

0

1

@ZerzarBukhari

@Pavel_Izmailov

@g_benton_

@micahgoldblum

@andrewgwils

Thank you very much for the support Zerzar!

0

0

1

@69alodi

I am very lucky that I have and had your support as my master's co-supervisor and my mentor to this day! The trust, support and mentorship you keep offering me are so much appreciated! Thank you very much Andrea!

1

0

1