Micah Goldblum

@micahgoldblum

Followers

6K

Following

1K

Media

55

Statuses

890

🤖Prof at Columbia University 🏙️. All things machine learning.🤖

Joined December 2014

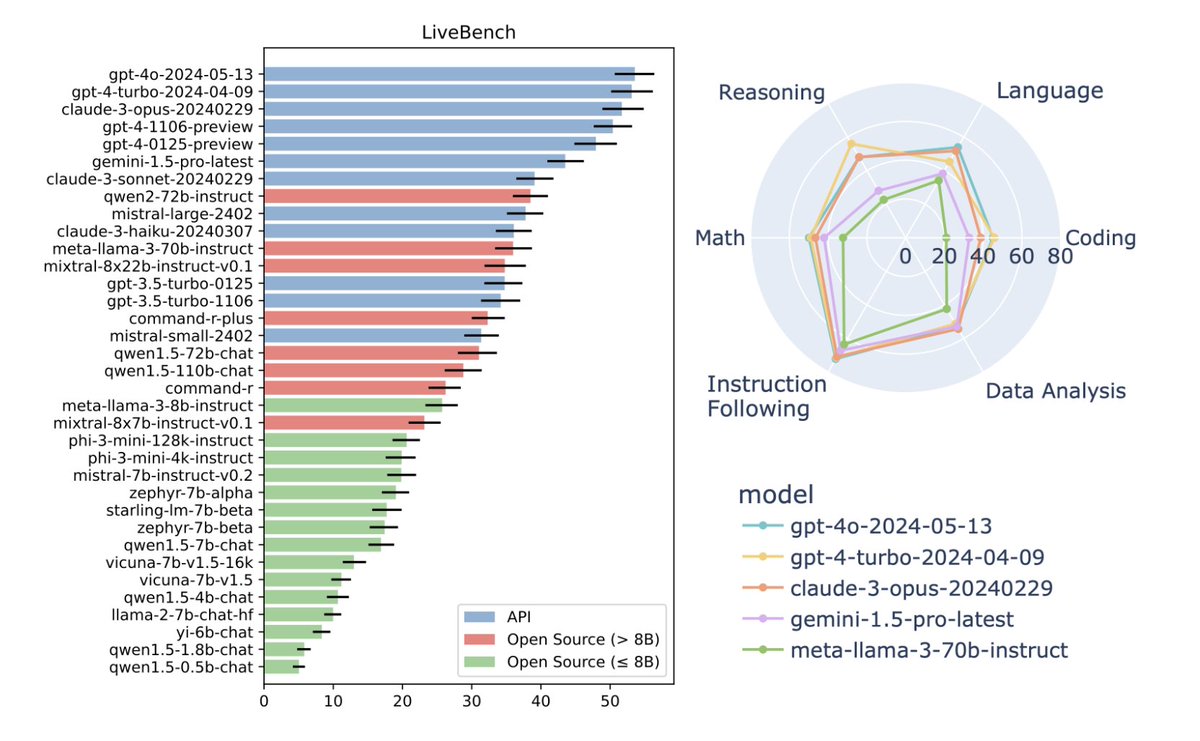

🚨 Announcing LiveBench, a challenging new general-purpose live LLM benchmark! 🚨 Thanks @crwhite_ml and @SpamuelDooley for leading the charge! Link: Existing LLM benchmarks have serious limitations: 🧵

10

78

342

Do LLMs simply memorize and parrot their pretraining data or do they learn patterns that generalize? Let’s put this to the test! We compute the first generalization guarantees for LLMs. w/ @LotfiSanae, @m_finzi, @KuangYilun, @timrudner, @andrewgwils. 1/9.

3

26

237

We show that neural networks have a remarkable preference for low complexity which overlaps strongly with real-world data across modalities. PAC-Bayes proves that such models generalize, explaining why NNs are almost universally effective.

There're few who can deliver both great AI research and charismatic talks. OpenAI Chief Scientist @ilyasut is one of them. I watched Ilya's lecture at Simons Institute, where he delved into why unsupervised learning works through the lens of compression. Sharing my notes:

1

25

193

The new @icmlconf review format is horrendous (no reviewer scores). Students will spend an inordinate amount of time drafting rebuttals for reviewers who have already committed to rejecting their papers. Massive waste of person hours.

1

5

162

The following statement, while a commonly held view, is actually false! “Learning theory says that the more functions your model can represent, the more samples it needs to learn anything”. 1/8.

OK, debates about the necessity of "priors" (or lack thereof) in learning systems are pointless. Here are some basic facts that all ML theorists and most ML practitioners understand, but a number of folks-with-an-agenda don't seem to grasp. Thread. 1/

7

20

169

🚨Real data is often massively class-imbalanced, and standard NN pipelines built on balanced benchmarks can fail! We show that simply tuning standard pipelines beats all of the newfangled samplers and objectives designed for imbalance. #NeurIPS2023 🚨🧵1/8.

3

19

148

Lessons from ICML @icmlconf: (1) Eliminate short talks, especially pre-recorded ones. (2) Poster sessions ≫ talks so allocate more time to them. (3) NO EVENTS DURING LUNCH/DINNER TIME! Poster sessions ended ~8:30pm and people went without dinner. (4) Don’t serve moldy bagels.

4

7

137

There’s a pervasive myth that the No Free Lunch Theorem prevents us from building general-purpose learners. Instead, we need to select models on a per-domain basis. Is this really true? Let’s talk about it! 🧵 1/16. w/ @andrewgwils, @m_finzi, K. Rowan.

9

22

125

#StableDiffusion and #ChatGPT use prompts, but hard prompts (actual text) perform poorly, while soft prompts are uninterpretable and nontransferable. We designed an easy-to-use prompt optimizer PEZ for discovering good hard prompts, complete with a demo. 🧵

4

30

122

If we want to use LLMs for decision making, we need to know how confident they are about their predictions. LLMs don’t output meaningful probabilities off-the-shelf, so here’s how to do it 🧵. Paper: Thanks @psiyumm and @gruver_nate for leading the charge!

2

22

116

I want to point out several problems (areas for improvement) in the @NeurIPSConf review process which I haven't heard talked about. (1) Do not show reviewer scores to other reviewers since these bias score changes via peer pressure (do show scores to authors and ACs). 1/3.

4

7

109

Some people feel that transfer learning (TL) doesn’t apply to tabular data just because there exist unrelated domains (e.g. cc fraud vs. disease diagnosis). However, there are also adjacent tabular domains where TL makes a ton of sense (e.g. diagnosis of different diseases). 1/6.

Saw a new article on transfer learning for tabular data using NNs. I don’t have the time to take a closer look, but my initial reaction is the following: 1/4.

3

15

107

I’m on the academic job market this year 🚨🥳🚨! Let me know if there are any interesting opportunities I’m likely to have overlooked or catch me at #NeurIPS2022!

1

10

97

Diffusion models like #StableDiffusion and #dalle2 generate beautiful pictures, but are these images new or are they copies of the images they were trained on? 🧵 #CVPR2023.

4

29

97

Paper found here: . All the awesome collaborators that made this happen: @arpitbansal297, @EBorgnia, Hong-Min Chu, Jie Li, @hamid_kazemi22, @furongh, @jonasgeiping, @tomgoldsteincs. 7/7.

4

6

76

Virtually all large models today contain huge matrices, and these dominate their compute. By incorporating structure in these matrices, we can improve the performance/compute tradeoff!

A lot of the computation in pre-training transformers is now spent in the dense linear (MLP) layers. In our new ICML paper, we propose matrix structures with better scaling laws! w/ @ShikaiQiu, Andres P, @m_finzi, @micahgoldblum. 1/8

4

0

71

Thrilled that our paper on model selection won the Outstanding Paper Award at ICML 2022. All credit goes to my great collaborators. Check out @LotfiSanae's talk and drop by our poster tomorrow!

I'm so proud that our paper on the marginal likelihood won the Outstanding Paper Award at #ICML2022!!! Congratulations to my amazing co-authors @Pavel_Izmailov, @g_benton_, @micahgoldblum, @andrewgwils 🎉 Talk on Thursday, 2:10 pm, room 310. Poster 828 on Thursday, 6-8 pm, hall E

1

2

63

Want to learn more about data poisoning and backdoor attacks? Our survey paper clarifies the state of the field for newbies and veterans alike! @dawnsongtweets @tsiprasd @xinyun_chen_ @ChulinXie @A_v_i__S @tomgoldsteincs @aleks_madry.

0

13

60

Transformers seem to work for all sorts of data, made possible by a structure that virtually all real data shares. This also allows NNs to be near-universal compressors. The real world is simple, so all we need are models with a simplicity bias.

Language Modeling Is Compression. paper page: It has long been established that predictive models can be transformed into lossless compressors and vice versa. Incidentally, in recent years, the machine learning community has focused on training

0

5

54

#NeurIPS2022 was fun! No moldy bagels like ICML, more poster sessions, fewer talks. Minor suggestions: (1) Don’t schedule poster sessions during meal times (e.g. sessions from 11am-1pm). I noticed a lot of people skipping the lunchtime poster session because they were hungry. 1/3.

2

1

47

Check out our paper, with @m_finzi, Keefer Rowan, @andrewgwils, where we show just how important simplicity bias, formalized using Kolmogorov complexity, is for machine learning. The paper is easy to approach for all audiences! 16/17.

1

2

46

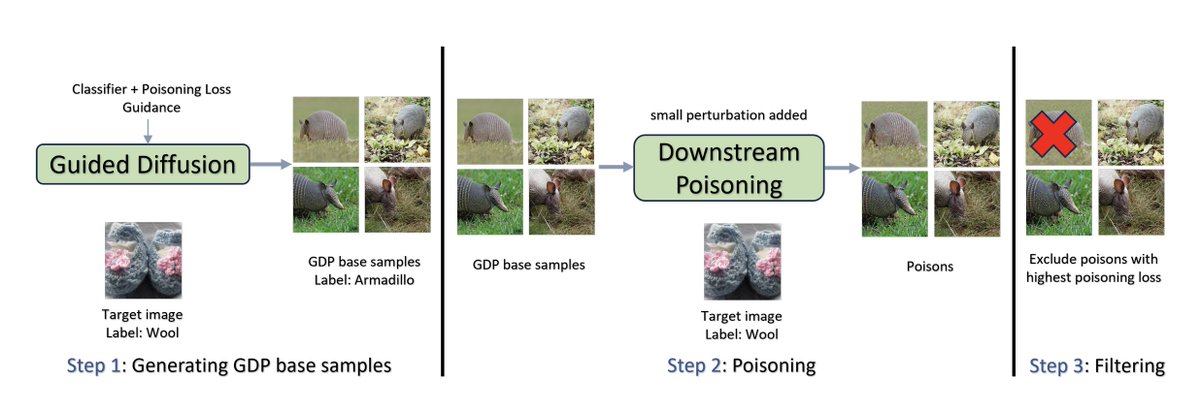

🚨NeurIPS poster Wednesday: 11-1, Hall J #512🚨. Backdoor attacks are dangerous, but existing attacks are easy to detect. We develop a backdoor attack whose poisons are indistinguishable from clean samples. Can you tell which are poisoned? 1/4

5

11

45

Just how important are handcrafted inductive biases like CNNs for computer vision? We can just learn them! ViTs are often actually more translation invariant than CNNs after training.

CNNs are famously equivariant by design, but how about vision transformers? Using a new equivariance measure, the Lie derivative, we show that trained transformers are often more equivariant than trained CNNs! w/ @m_finzi @micahgoldblum @andrewgwils 1/6

0

3

41

Check out our work here! Thanks to my excellent collaborators Roman, Valeria, @A_v_i__S, @arpitbansal297, @cbbruss, @tomgoldsteincs, @andrewgwils 4/4.

1

2

34

Shout out to all my co-authors who made this work possible: @ziv_ravid, @arpitbansal297, @cbbruss, @ylecun, @andrewgwils. Paper:

0

1

24

We're happy to see that multiple people have pointed out that types of tabular transfer learning are already widely used in industry and in scientific applications. We hope our work can improve tabular transfer learning and make it even more broadly useful!

I have won several Kaggle tabular data competitions. I have worked on or with three different AutoML projects for tabular data. I have collaborated with many top experts in the field and some of the largest companies. 1/2.

0

2

23