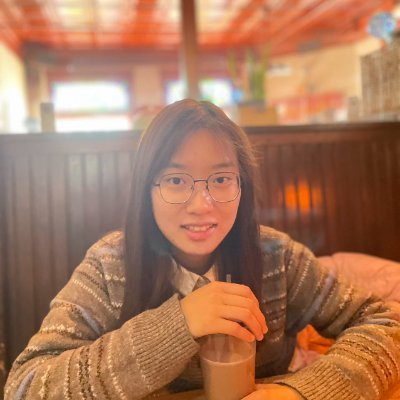

Chulin Xie

@ChulinXie

Followers: 667 · Following: 687 · Media: 6 · Statuses: 53

CS PhD student at UIUC and student researcher @GoogleAI; ex-research intern @MSFTResearch @NvidiaAI

Joined March 2019


Last Seen Profiles

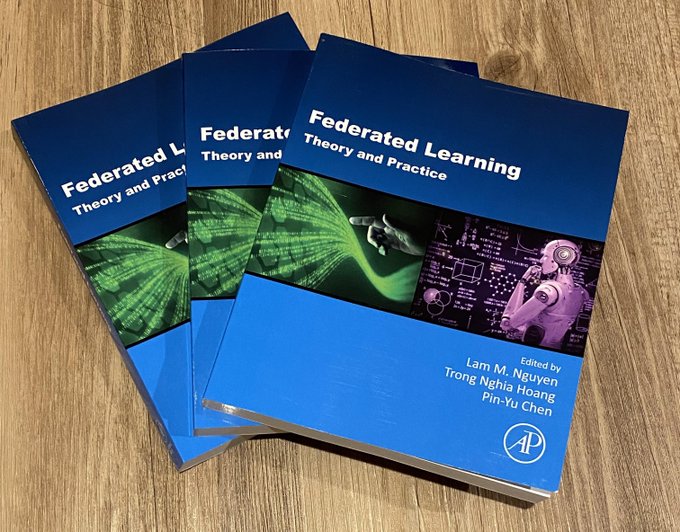

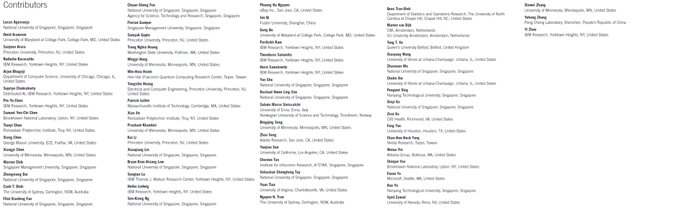

Excited to see the release of the book 🥳 and grateful for the opportunity to contribute a chapter. Big thanks to the three editors! @pinyuchenTW @LamMNguyen3 @nghiaht87

Happy to share the release of the book "Federated Learning: Theory and Practice" that I co-edited with @LamMNguyen3 @nghiaht87, covering fundamentals, emerging topics, and applications. Kudos to the amazing contributors for making this book happen! @ElsevierNews @sciencedirect


Our benchmark is on the @huggingface leaderboard!


How many adversarial users (instances) can a user-level (instance-level) DPFL mechanism tolerate with certified robustness? 🧐

Check out our paper!

👥 Collaboration with @long_yunhui, @pinyuchenTW, @Cubeeli, @sanmikoyejo, @uiuc_aisecure 🥳


Joint work with awesome collaborators @lin_zinan, Arturs Backurs, @gopisivakanth, @DaYu85201802, @HuseyinAInan, @HarshaNori, Haotian Jiang, @HuishuaiZhang, @YinTatLee, Sergey Yekhanin at @MSFTResearch, and @uiuc_aisecure! 🥳

Code:

[7/n]


@PandaAshwinee Thanks! That is exactly what we did: we used downstream objectives to evaluate the synthetic data. For the Yelp/OpenReview synthetic data, we evaluate accuracy on downstream classification tasks. For the PubMed synthetic data, we evaluate the next-word prediction accuracy of a downstream model, inspired by
