Ryan Lowe

@ryan_t_lowe

Followers: 5,595 · Following: 362 · Media: 32 · Statuses: 531

what is the place from which we are creating? ❤️✨🤠❤️

Berkeley, CA

Joined May 2009


Here's a ridiculous result from the @OpenAI GPT-2 paper (Table 13) that might get buried: the model makes up an entire, coherent news article about TALKING UNICORNS, given only 2 sentences of context.

WHAT??!!


I've left OpenAI.

I'm mostly taking some time to rest. But I also have a few projects in the oven 🧑‍🍳

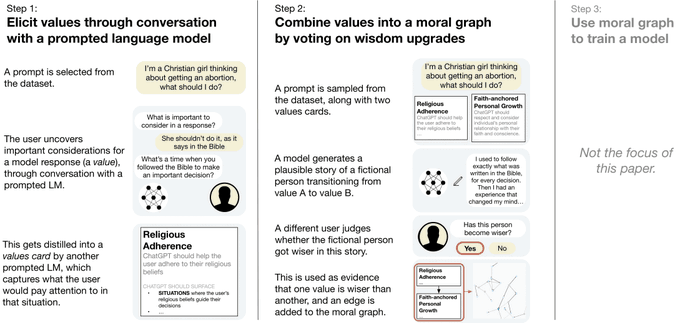

Here's one that I'm really excited about: we have a 🚨new paper🚨 out on aligning AI with human values, with the folk at @meaningaligned!! 😊✨🎉

Why I think it's cool:

🧵

“What are human values, and how do we align to them?”

Very excited to release our new paper on values alignment, co-authored with @ryan_t_lowe and funded by @openai.

📝:


(ahem)

alright friends

I am quite proud and slightly terrified to announce what I (and my alignment homies) have been working on over the past 14 months:

Training a new version of GPT-3 (InstructGPT) that follows instructions

🧵


I've finally decided to start a blog.

I've been a bit frustrated with the Twitter conversation about @OpenAI's GPT-2, and I haven't found a post that says all the things I want to say. So I wrote about it here: .


VERY excited to announce new work from our team (one of the safety teams @OpenAI)!! 🎉

We wanted to make training models to optimize human preferences Actually Work™.

We applied it to English abstractive summarization, and got some pretty good results.

A thread 🧵: (1/n)


Slides from my talk on "The Problem with Neural Chatbots" given at @stanfordnlp and @GoogleBrain are now available:


Hey, we got a new paper out! 😊🤠

We train a model that can summarize entire books. We think this will help us understand how to align AI systems in the future, on tasks that are hard for humans to evaluate.

A quick summary (heh):


📢 We're hiring a data scientist for the Alignment team at @OpenAI! 😊

We're looking for someone who cares deeply about the data used to train ML systems, potentially w/ experience in participatory design, safety/ social impact of ML systems, etc.

Link:


We are open-sourcing the multi-agent particle environments used for our work @OpenAI! Uses Python + Gym interface:


Super excited to be launching ML Retrospectives (). It's a website that hosts 'retrospectives' where researchers talk openly about their past papers. We also have a NeurIPS 2019 workshop where retrospectives can be published. Check it out!! 🎉

Researchers currently don't have a forum for sharing updated thoughts on their past papers.

@ryan_t_lowe introduces ML Retrospectives, a new NeurIPS workshop that wants to change that.

#ResearchCulture #NeurIPS


I didn't realize that having no affiliation with a major institution means that your papers get stuck in arXiv purgatory 😅

in any case, our paper is now on arXiv!


It's been exactly two years since this public Twitter bet.

The question: will 'just scaling up' allow LMs to answer common-sense questions like "do insects have more legs than reptiles" >80% of the time?

Since then we've had GPT-3, PaLM, Gopher, etc.

Which side won?

@carlesgelada Care to make a concrete prediction, and put some money on it? I'll wager that no model trained by scaling the current paradigm (defined as Transformerish + MLE + text scraped from the internet) will give reasonable responses to the prompts in Tomer's tweet. Settles in 2 years.


The @MLRetrospective NeurIPS workshop is happening tomorrow!! 🎉

We have an awesome lineup of speakers (incl. @shakir_za, @rzshokri, @sina_lana, @ShibaniSan, @mariadearteaga and Kilian Weinberger), who'll be surveying + analyzing subfields of ML research (and maybe their own work!)


I'll be giving a talk at @stanfordnlp tomorrow at 11am on 'The Problem(s) with Neural Chatbots'. Slides likely to be posted afterwards!


Wow. The positive reception to the Retrospectives workshop yesterday has been overwhelming. So many great talks and amazing discussions. Thank you to all of the courageous presenters 🖤. Stay tuned for future developments on retrospectives!!

Videos here:

WOW!! 🤩 1044 people at #NeurIPS2019 added the Retrospectives workshop to their agenda! Thanks to everyone supporting retrospectives in ML!!!


Our AAMAS paper is finally out!

TL;DR: measuring emergent communication is tricky. It may seem like the agents are communicating, when their messages don't actually do anything.

Instead, use causal influence-style metrics (as proposed by @natashajaques)


@zdhnarsil there are indeed many ways to optimize. OpenAI happens to have a lot of organizational expertise with PPO, but I would be very surprised if that turned out to be the best way to do it.


Headed to Rwanda for #iclr2023 (!!), landing Tuesday

I've been thinking a lot about "what should AI systems optimize?" I'd love to talk with folk at ICLR who are thinking about this, send me a DM!

Also interested in connecting to the AI/tech scene in Kigali, recs welcome 🙂


You don't have to be a 'prestigious' researcher. Your original paper doesn't have to be highly cited. Or cited at all.

This is about building norms of openness + self-reflection in ML research.

Highly encourage everyone to submit!! 🎉👇👇


Great paper by @MSFTResearch, @NvidiaAI and IIT Madras that critiques our ACL 2017 work on automatic dialogue evaluation: .

Evaluating dialogue systems is hard, and our ADEM model has lots of flaws!


Last tweet for me on the OpenAI GPT-2 thing, but for those interested I think this video really elevates the discussion. Great work by all parties involved.

Really great discussion this eve on @OpenAI's recent language model release and the issues and considerations raised. Thanks @AmandaAskell, @AnimaAnandkumar, @Miles_Brundage, @smerity, @WWRob for participating. Those who missed it live can catch the replay at


I'm on a podcast! 😊

@longouyang and I talk about the InstructGPT paper. Thanks to @brian_a_burns and @jason_lopatecki for having us on!

// Deep Papers #1: InstructGPT //

We interview @ryan_t_lowe and @longouyang, OpenAI scientists behind InstructGPT: precursor to ChatGPT, & one of the first applications of RLHF to LLMs.

More below!

YouTube:

Spotify:

1/9


New blog post 🎉 on the 'Retrospectives' workshop we hosted at the last @NeurIPSConf.

I talk about what worked, what didn't work, and some recommendations for hosting workshops like this at other conferences. 🙂


This is a fruitful direction for alignment research. Great work by the @AnthropicAI team


👇newly released info should hopefully make it less annoying to use InstructGPT for research.

Also, I’ll be presenting the InstructGPT paper at NeurIPS today, 4pm at poster #920. Come by and say hi 😊


The original bettors were:

myself, @carlesgelada, and @LiamFedus on the pro-LM side

@jacobmbuckman, @cinjoncin on the anti-LM side

Looking forward to that dinner 😉


Post on our work in multi-agent communication & language acquisition is live! Huge thanks to @jackclarkSF & Igor for putting it together.


I'll be giving a talk in Montreal next week! conference proceeds are donated to Centraide


There's a lot of (justified) pessimism about the future of ML in society.

But I've been thinking about @Spotify's music recommendations. They've been adding a lot of joy to my life recently. It's nice to take a moment to feel gratitude for the ways the algorithms are helping us.


@edelwax's thread does a good job of summarizing the paper, go read it! (or, ya know, read the paper)

I'll talk about one of the main reasons I'm compelled by this line of work: this precise way of thinking about values seems to be actually useful for living your actual life


I think this is the best criticism I've heard of @OpenAI's release. We need to come up with norms for how we decide to publish potentially harmful research. It's unlikely that the best solution is "approach journalists first".

@ryan_t_lowe @OpenAI Imagine if the guys who found Spectre and Meltdown went straight to the media with their findings, instead of Intel. If SOTA LMs really can be harmful, why not conduct user studies to show that? Why is "wow factor" being used to judge such a sensitive technology?


Example: I went with some folk (incl. @flowerornament and @JakeOrthwein) to visit @edelwax, @klingefjord, @ellie__hain, @AnneSelke, and others from the "meaning alignment crew" in Berlin last year.


Impressiveness aside, I also want to comment on the ethical implications here. This could enable social manipulation on a massive scale. If people fall for e-mail scams, they will fall for fake comments on news articles designed to sway your opinion in a particular way.


This is cool! Really interesting work.

We've also found this in language learning: if you start with self-play, you often have to do some un-learning when fine-tuning with humans (paper coming soon!)


Annual reminder: Sleepawake is one of the most powerful transformative experiences I've ever witnessed. I was there for 2 weeks last year and will be volunteering for the whole time this year.

I'd particularly recommend it for you if you are 18-30 yrs old and feel stuck in life,


@thenamangoyal @OpenAI Good question! The y-axis is "percentage of summaries generated by each model that are rated higher (by humans) than the human-written reference summaries in the dataset".

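A minimal sketch of how that y-axis number could be computed, assuming each human comparison is stored as a boolean that is True when the rater preferred the model's summary over the dataset's reference summary for the same input. The function name and data format are hypothetical illustrations, not code from the paper.

```python
from typing import Sequence


def win_rate_vs_reference(preferred_model: Sequence[bool]) -> float:
    """Percentage of model summaries rated higher than the human reference.

    Hypothetical format: preferred_model[i] is True if, for input i, the
    human rater judged the model's summary better than the reference.
    """
    if not preferred_model:
        raise ValueError("need at least one comparison")
    wins = sum(1 for p in preferred_model if p)  # count model "wins"
    return 100.0 * wins / len(preferred_model)


# Made-up judgments for 8 inputs: the model summary wins on 5 of them.
judgments = [True, False, True, True, False, True, False, True]
print(win_rate_vs_reference(judgments))  # 62.5
```

Under this framing, 50% would mean the model's summaries are preferred about as often as the human-written references.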

And of course, a huge thank you to all of my fantastic co-organizers: Jessica Forde, @joelbot3000, @koustuvsinha, Xavier Bouthillier, Kanika Madan, @astro_pyotr, @WonderMicky, Shagun Sodhani, Abhishek Gupta, Joelle Pineau & Yoshua Bengio. 🔥


📢Job announcement📢

If you liked our recent paper, or are interested in tackling hard problems in AI alignment (both near-term and long-term), the @OpenAI Reflection team is now hiring for research scientist/engineer roles!

See RE job description here:


Watching @OpenAI's bot beat the best human Dota 2 players at the office: incredible accomplishment!!


A good 🧵

For white folks, being called racist is shocking because it means you are a *bad person*. Only *bad people* are racist because racism is bad, right?

Not so. Most good people have racist biases. Defensiveness is natural, but it makes it hard to really self-reflect. 1/


All of this work is with my incredible collaborators on the Reflection team: @paulfchristiano, Nisan Stiennon, Long Ouyang, Jeff Wu, Daniel Ziegler, and @csvoss, plus @AlecRad and Dario Amodei. 🔥❤️

(fin)


Joe et al.’s work on meaning has significantly shifted my thinking about alignment in the last 6 months.

The talk is good and worth checking out!
