Nicolay Rusnachenko

@nicolayr_

Followers: 260 · Following: 86 · Media: 160 · Statuses: 2,013

Information Retrieval・Medical Multimodal NLP (🖼+📝) Research Fellow @BU_Research ・software developer ・PhD in NLP・Opinions are mine

Bournemouth / London, UK

Joined December 2015


Pinned Tweet

📢 During @wassa_ws at @aclmeeting we present a framework for empathy and emotion extraction that applies a combined loss to BERT-based models 🤖, developed in collaboration with @UniofNewcastle.

📜

🧵[for more details]
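The tweet does not specify which losses are combined. As a minimal plain-Python sketch, assuming a weighted sum of mean squared error and a (1 - Pearson r) term, which is one common pairing for regression targets such as empathy or emotion scores that are evaluated by correlation (`alpha` and all function names here are hypothetical):

```python
import math
from typing import Sequence


def mse(pred: Sequence[float], gold: Sequence[float]) -> float:
    """Mean squared error over a batch of predictions."""
    return sum((p - g) ** 2 for p, g in zip(pred, gold)) / len(gold)


def pearson(pred: Sequence[float], gold: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 when either side has zero variance."""
    n = len(gold)
    mean_p = sum(pred) / n
    mean_g = sum(gold) / n
    cov = sum((p - mean_p) * (g - mean_g) for p, g in zip(pred, gold))
    std_p = math.sqrt(sum((p - mean_p) ** 2 for p in pred))
    std_g = math.sqrt(sum((g - mean_g) ** 2 for g in gold))
    if std_p == 0.0 or std_g == 0.0:
        return 0.0
    return cov / (std_p * std_g)


def combined_loss(pred: Sequence[float], gold: Sequence[float], alpha: float = 0.5) -> float:
    """Hypothetical combined loss: alpha * MSE + (1 - alpha) * (1 - Pearson r).

    The MSE term pulls predictions towards the gold scale, while the
    correlation term rewards getting the overall trend right.
    """
    return alpha * mse(pred, gold) + (1 - alpha) * (1 - pearson(pred, gold))


# Predictions shifted by +1 are perfectly correlated but off in scale,
# so only the MSE half of the loss remains (close to 0.5 here).
print(combined_loss([2.0, 3.0, 4.0], [1.0, 2.0, 3.0]))
```

In an actual BERT fine-tuning loop the same formula would be written with tensor operations so that it stays differentiable; the plain-Python version only shows the arithmetic.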


📢 Excited to share that our studies on the reasoning capabilities of LLMs in Target Sentiment Analysis are out 🎉

🧵1/n [More on the key findings ...]

#llm #reasoning #nlp #sentimentanalysis #cot #chainofthought #zeroshot #finetuning


So far I have experimented 🧪 with LLaVA 1.5 and Idefics 9B at scale, and they are quite handy out of the box 📦

Even so, it is nice to see that even smaller versions, based on the most recent LLMs, are out 👏👀


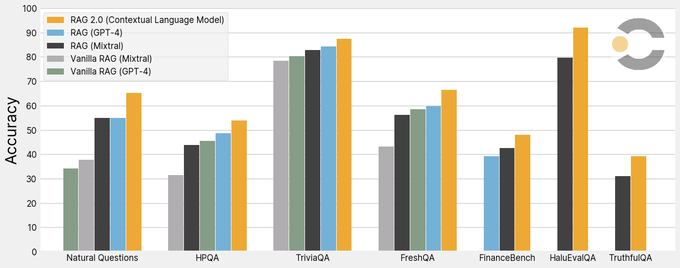

It is nice to see a small step towards target-oriented LLM adaptation from the perspective of retrieval-augmented techniques, with enhanced end-to-end adaptation of the 🥞: (i) knowledge, (ii) passages, (iii) LLM


These findings 👇 on (1) the reliability of news articles and (2) the application of language models to generating writer ✍️ feedback from the readers' 👀📃 point of view through personalities are 💎 to skim.

Excited for our #CHI2024 contributions (1/2):

Reliability Criteria for News Websites, 16 May, 11:30am

Writer-Defined AI Personas for On-Demand Feedback Generation, 15 May, 2:00pm

#CAISontour


The most recent OmniFusion VLLM, in which the authors merge features from CLIP-ViT-L and DINOv2 and which is powered by Mistral-7B, is truly impressive 🔥. This makes me wonder how far another 💎 concept for image encoding 👇, one that involves MoE, could go ... 👀
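The tweet does not describe OmniFusion's exact merging scheme. A toy sketch, assuming per-patch (channel-wise) concatenation of the two encoders' features followed by a linear adapter into the LLM embedding space; the tiny dimensions and adapter weights are made up purely for illustration and are not the paper's adapter:

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def merge_patch_features(clip_patches: Matrix, dino_patches: Matrix) -> Matrix:
    """Concatenate CLIP and DINOv2 features patch by patch (channel-wise)."""
    if len(clip_patches) != len(dino_patches):
        raise ValueError("both encoders must produce the same number of patches")
    return [c + d for c, d in zip(clip_patches, dino_patches)]


def linear(x: Vector, weight: Matrix) -> Vector:
    """y = W x, with weight shaped (out_dim, in_dim)."""
    for row in weight:
        if len(row) != len(x):
            raise ValueError("weight rows must match the input dimension")
    return [sum(w * v for w, v in zip(row, x)) for row in weight]


def project_to_llm(patches: Matrix, adapter: Matrix) -> Matrix:
    """Map each merged patch vector into the (toy) LLM embedding space."""
    return [linear(p, adapter) for p in patches]


# Two patches: 3-dim "CLIP" features and 2-dim "DINOv2" features.
clip = [[1.0, 0.0, 2.0], [0.5, 1.0, 0.0]]
dino = [[3.0, 1.0], [0.0, 2.0]]
merged = merge_patch_features(clip, dino)  # each patch is now 5-dim
adapter = [[1.0, 0.0, 0.0, 0.0, 0.0],  # picks the first CLIP channel
           [0.0, 0.0, 0.0, 1.0, 0.0]]  # picks the first DINOv2 channel
tokens = project_to_llm(merged, adapter)
print(tokens)  # [[1.0, 3.0], [0.5, 0.0]]
```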


@stevenhoi @hypergai

Nice to see you publicly contributing to Multimodal AI advances, and to LLMs in particular 👏


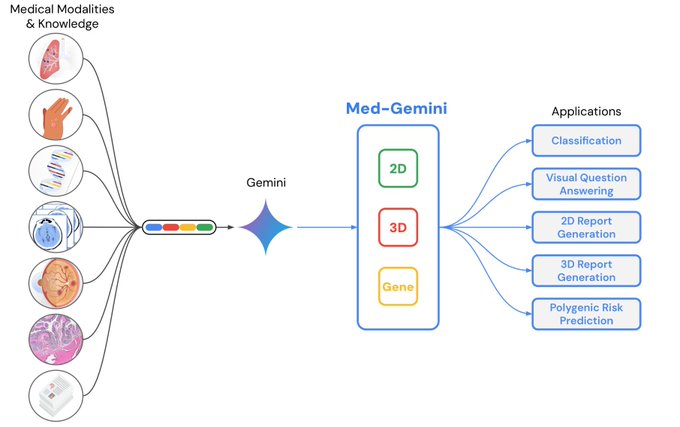


@alan_karthi

Well done, and thank you for sharing this technical report! 👏 I believe access to Med-Gemini is restricted due to the specifics of the medical domain as well as of the resulting LLM. Nonetheless, is Med-Gemini available for chatting, and if so, under which license?


The Text2Story workshop opener at #ecir2024 shares a handy methodology, along with related studies, for processing large texts such as books 📚, aimed at narrative extraction

#nlp #story #books #narratives


Tools like this end up becoming a Swiss Army knife for understanding more deeply, and looking 👀 more closely at, how one LLM differs from another 💎


📢 I find this a valuable milestone 💎 in incorporating personality traits into large language models 👀


📢 I believe such instruction-tuned LMs may represent a valuable contribution to advances in IR-related tasks such as sentiment analysis 💎

📝


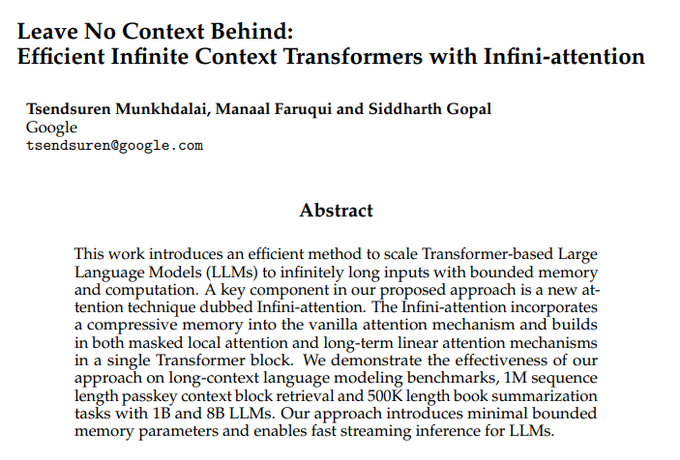

Self-attention -> windowed / sparse self-attention -> local + global self-attention -> Infini-attention 👏✨
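The first three steps of that progression differ mainly in which query/key pairs are allowed to interact. A small sketch of the corresponding boolean masks, assuming a Longformer-style local + global pattern; the window size and global positions are illustrative. Infini-attention additionally keeps a compressive memory across segments, which a mask alone cannot express, so it is left out:

```python
from typing import Iterable, List

Mask = List[List[bool]]  # mask[i][j] is True if query i may attend to key j


def full_mask(n: int) -> Mask:
    """Vanilla self-attention: every token sees every token (n * n pairs)."""
    return [[True] * n for _ in range(n)]


def sliding_window_mask(n: int, window: int) -> Mask:
    """Windowed attention: token i sees tokens j with |i - j| <= window."""
    return [[abs(i - j) <= window for j in range(n)] for i in range(n)]


def local_global_mask(n: int, window: int, global_positions: Iterable[int]) -> Mask:
    """Sliding window plus a few global tokens that see, and are seen by, all."""
    mask = sliding_window_mask(n, window)
    for g in global_positions:
        for k in range(n):
            mask[g][k] = True  # the global token attends to everything
            mask[k][g] = True  # everything attends to the global token
    return mask


def allowed_pairs(mask: Mask) -> int:
    """Number of query/key pairs that are computed under the mask."""
    return sum(sum(row) for row in mask)


n = 5
print(allowed_pairs(full_mask(n)))                  # 25
print(allowed_pairs(sliding_window_mask(n, 1)))     # 13
print(allowed_pairs(local_global_mask(n, 1, [0])))  # 19
```

The counts show the trade-off: the window cuts the quadratic number of pairs, and the global tokens add back just enough long-range connections.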


An interesting viewpoint on pre-training so-called language-centric LLMs in the low-resource domain to "preserve" knowledge about rare languages

#ecir2024 #llm #lowresourcedomain #pretraining


@kaliisar @SoLAResearch @TUMohdKhalil @mohsaqr @kamilamisiejuk @SonsolesLP @SlateResearch @UniOslo @UniOslo_UV @UniEastFinland

Congratulations, well done! 👏


@burkov

Notably, the generated code tends to be verbosely commented, so skimming through the comments gives a brief check of its correctness


@drivelinekyle @abacaj

This template varies from task to task, but the implementation in torch is pretty much the same.

My personal experience is in sentiment analysis, so I can recommend:


A short post demonstrating how to organize a pipeline for inferring sentiment attitudes from mass-media texts

#deepPavlov #arekit #ml #nlp #sentimentanalysis


One of the inspiring directions (dimensions) of narrative in long texts highlighted at #ecir2024 #Text2Story is spatial 🌎🗺️ ...

Further details on the pipeline and timeline tracking ...

🧵[1/3]


@yufanghou

Thanks! Not being physically at #NAACL2024 this year, but rather attending #SemEval remotely, I find it 💎 to quickly skim the poster, from the Summary Content Units to the semantic tree construction for textual content 👀


@ProfJainendra @abhi251991 @hmi_iiitd @WeaveIIITD @amanparnami @IIITDelhi @cseiiitd @hcdiiitd @iiitdcai @iiitdcdnm

Congratulations on being shortlisted for this! 👏 Well deserved.


📢 I am happy to share that our studies at @UniofNewcastle on extracting fictional character personalities from literary novels 📚, relying solely on ⚠️ book content ⚠️, have been ACCEPTED at #LOD2024 @ Tuscany, Italy 🇮🇹 🎉

👨‍💻:

🧵1/n


@shaily99

It is handy to go with Overleaf + DrawIO for concept diagrams. In Overleaf, I keep a "latest.tex" 📝 that is later renamed to a specific date, so it eventually becomes a 📑; these dated files can then be gathered into "main.tex"
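The dated-notes workflow can also be scripted on a local copy of the project. A minimal sketch, assuming dated notes named `YYYY-MM-DD.tex` and a `main.tex` that simply inputs them in chronological order; `archive_latest` and `rebuild_main` are hypothetical helpers, not Overleaf features:

```python
import datetime
import tempfile
from pathlib import Path


def archive_latest(project: Path, day: datetime.date) -> Path:
    """Rename latest.tex to YYYY-MM-DD.tex and start a fresh latest.tex."""
    latest = project / "latest.tex"
    dated = project / f"{day.isoformat()}.tex"
    if dated.exists():
        raise FileExistsError(f"{dated.name} already exists")
    latest.rename(dated)
    latest.write_text("% notes in progress\n")
    return dated


def rebuild_main(project: Path) -> str:
    """Regenerate main.tex so that it inputs every dated note chronologically."""
    # ISO dates sort lexicographically in chronological order.
    stems = sorted(p.stem for p in project.glob("????-??-??.tex"))
    inputs = "\n".join(f"\\input{{{stem}}}" for stem in stems)
    main = (
        "\\documentclass{article}\n"
        "\\begin{document}\n"
        f"{inputs}\n"
        "\\end{document}\n"
    )
    (project / "main.tex").write_text(main)
    return main


with tempfile.TemporaryDirectory() as tmp:
    project = Path(tmp)
    (project / "latest.tex").write_text("Draft notes.\n")
    archived = archive_latest(project, datetime.date(2024, 5, 15))
    main_tex = rebuild_main(project)

print(archived.name)  # 2024-05-15.tex
print(main_tex)
```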


@omarsar0

After briefly skimming the paper's main figure, I find the ranking idea a unique way of enhancing reasoning. Thanks for sharing 👏


@Leik0w0 @Prince_Canuma

Interesting! ... are there any other prospects that call for a quantized SigLIP, besides its adaptation for the tiny Moondream VLLM?


@docchipinti8 @serrasinem @m_guerini

I mentioned your work there as the closest study so far to what we did at @UniofNewcastle


@SemEvalWorkshop

Our 🛠️ reforged 🛠️ version of THoR includes:

✅1. Adapted prompts for emotion extraction

✅2. Implemented Reasoning-Revision 🧠

✅3. A Google Colab launch notebook (details in further posts 🧵)

⭐️GitHub:

🧵 4/n

#NAACL2024
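A rough sketch of a three-hop chain-of-thought with an optional revision step, in the spirit of the THoR setup described above. The prompt wording, the emotion label set and the `generate` callable (which could wrap a Flan-T5 model) are assumptions for illustration, not the templates from the repository:

```python
from typing import Callable, List

Generate = Callable[[str], str]  # prompt in, model text out

# Assumed label set: six basic emotions plus neutral.
EMOTIONS = ["anger", "disgust", "fear", "joy", "sadness", "surprise", "neutral"]


def three_hop_emotion(context: str, speaker: str, generate: Generate,
                      revise: bool = True) -> str:
    """Chain three reasoning hops, each prompt extending the previous one."""
    # Hop 1: locate what the utterance is about.
    p1 = f"{context}\nWhat is {speaker} talking about?"
    topic = generate(p1)
    # Hop 2: ask for the underlying opinion, conditioned on hop 1.
    p2 = f"{p1}\n{topic}\nWhat is {speaker}'s underlying opinion on it?"
    opinion = generate(p2)
    # Hop 3: map the reasoning to one emotion label.
    p3 = (f"{p2}\n{opinion}\nWhich emotion does {speaker} feel? "
          f"Choose from: {', '.join(EMOTIONS)}.")
    answer = generate(p3)
    if revise:
        # Revision hop (hypothetical wording): let the model re-check its answer.
        p4 = f"{p3}\n{answer}\nRe-read the reasoning above and state the final emotion only."
        answer = generate(p4)
    return answer.strip().lower()


calls: List[str] = []


def fake_generate(prompt: str) -> str:
    """Stand-in for a real model: canned replies so the chain can be traced."""
    calls.append(prompt)
    return " Joy " if prompt.endswith("emotion only.") else f"step {len(calls)}"


label = three_hop_emotion("A: I finally passed the exam!", "A", fake_generate)
print(label, len(calls))  # joy 4
```

Because each prompt embeds the previous one, the model sees its own intermediate reasoning before committing to a label; the revision hop costs one extra generation.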


@mudler_it @LocalAI_API

Thanks for such a detailed explanation of the differences! I think I first need to learn more about function calling 👀


📢 Excited to share the details of our submission 🥉 to @SemEvalWorkshop Track 3, which is based on CoT reasoning 🧠 with Flan-T5 🤖 and described in the self-titled nicolay-r paper 📜. Since I am attending #NAACL2024 remotely, I am presenting it here by breaking down the system highlights 👇

🧵1/n

@SemEvalWorkshop 2024 starts tomorrow! Check out our exciting lineup of 65 posters and 10 talks here: Don’t miss our invited talks by @hengjinlp (with @_starsem) and @andre_t_martins!

#mexico @naaclmeeting @shashwatup9k @harish @seirasto @giodsm


@Paul_Antara04

The concept is really good, so making it mobile-friendly seems like a huge step forward ✨👏


Thanks to Huizhi Liang for the opportunity, and to all the students who attended the first guest lecture at Newcastle University! 🎉🎉🎉 The presentation was devoted to advances in sentiment attitude extraction

#newcastle #ml #nlp #arekit #lecture


🤔 Coming to this from the perspective of IR from large textual data, I wonder how interesting it would be to see such forecasting applied to stories (series of events) extracted from literary novels 📚


💎 The fact-checking domain and its advances are important for IR from news and mass media. These advances may serve as new potential approaches to enhancing the reasoning capabilities of LLMs in author opinion mining / Sentiment Analysis ✨

Refine LLM responses to improve factuality with our new three-stage process:

🔎Detect errors

🧑‍🏫Critique in language

✏️Refine with those critiques

DCR improves factuality refinement across model scales: Llama 2, Llama 3, GPT-4.

w/ @lucy_xyzhao @jessyjli @gregd_nlp

🧵
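The three stages of the quoted Detect-Critique-Refine (DCR) process can be wired together as below. This is only a control-flow sketch: `detect`, `critique` and `refine` stand in for LLM calls, and the toy versions used here (exact substring matching, sentence dropping) are hypothetical simplifications, not the authors' models:

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Feedback:
    sentence: str
    critique: str


def split_sentences(text: str) -> List[str]:
    """Naive splitter on '.', sufficient for the toy example."""
    return [s.strip() for s in text.split(".") if s.strip()]


def dcr(source: str, response: str,
        detect: Callable[[str, str], bool],
        critique: Callable[[str, str], str],
        refine: Callable[[str, List[Feedback]], str]) -> str:
    # 1) Detect: collect sentences the detector judges unsupported.
    flagged = [s for s in split_sentences(response) if not detect(source, s)]
    if not flagged:
        return response  # nothing to fix
    # 2) Critique: explain each error in natural language.
    feedback = [Feedback(s, critique(source, s)) for s in flagged]
    # 3) Refine: rewrite the response using those critiques.
    return refine(response, feedback)


# Toy components (hypothetical stand-ins for model calls).
def toy_detect(source: str, sentence: str) -> bool:
    return sentence in source


def toy_critique(source: str, sentence: str) -> str:
    return f"'{sentence}' is not supported by the source."


def toy_refine(response: str, feedback: List[Feedback]) -> str:
    # A real refiner would condition on f.critique; this one just drops flagged sentences.
    bad = {f.sentence for f in feedback}
    kept = [s for s in split_sentences(response) if s not in bad]
    return ". ".join(kept) + "." if kept else ""


source = "The lecture is on Monday. It starts at 10am."
result = dcr(source, "The lecture is on Monday. It starts at 9am.",
             toy_detect, toy_critique, toy_refine)
print(result)  # The lecture is on Monday.
```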


@polkirichenko @andrewgwils @sainingxie @mengyer @Qi_Lei_ @orussakovsky @rob_fergus

Congratulations, Phinally Done! 👏🎓


@Francis_YAO_

Wow, can't get enough; you're not the only one there 😁 💯 ...

All these LLM breakthroughs convince me of the robustness of such a timer-setup feature 🗣️⏱️😁


@UKPLab @TUDarmstadt @CS_TUDarmstadt @ATHENECenter @FraunhoferSIT @Hessian_AI @ProLOEWE @emergen_CITY @ELLISforEurope

Congratulations 👏


@SemEvalWorkshop

We experiment with the Flan-T5-base model in Google Colab, and preliminary results show the benefits of using Reasoning-Revision (THoR-cause-rr) over the prompt-based approach and THoR-cause.

After 3 epochs of training, Flan-T5-base is ready to use ✨

🧵 3/n


📢 At @wassa_ws, as part of @aclmeeting, we are presenting studies 🧪 aimed at empathy and emotion detection. Credits to Tian Li (@UniofNewcastle), who is presenting this work, so feel free to catch him during the workshop with questions!

🧵📹📊 details in thread 👇

#WASSA2024 #ACL2024

📢 Excited to announce that I am attending 🟢 @aclmeeting (ACL-2024), and the @wassa_ws workshop in particular, presenting a framework for empathy and emotion extraction developed in collaboration with @UniofNewcastle

Details:

#reasoning #empathy #emotion


Surprising, for now, to see the 7B-sized model by @NexusflowX above larger competitors at the top of the Chatbot Arena Leaderboard 💪

Apple MLX: considering the power of Starling-LM-7B-beta from @NexusflowX and its ranking on @lmsysorg Chatbot Arena Leaderboard, I converted and uploaded 4bit and 8bit versions on HuggingFace mlx-community!

Performance 🔥 on M2 Ultra 76GPU:

- 4bit: tokens/sec Prompt: 158 -


Excited that the report behind the recently announced ✨Med-Gemini✨ sheds light on the datasets 📊 used in the training setup 👀


@xue_yihao65785

Not yet an expert in Multimodal NLP, but delighted to see such short contributions shared! 👀 Well deserved 👏


@PMinervini @ale_suglia @tetraduzione

That already makes me feel that LLaVA and other similar solutions built on top of textual LLMs are just temporary implementation crutches for multimodality problems


A very interesting concept for LLM-based QA-like systems: the model generates a meta-token (RET) signalling that extra information is needed (no answer), and then (optionally) IR is performed to augment the question with context before asking the LLM again for the final answer 👏
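The two-pass control flow described in that tweet can be sketched as follows. The `<RET>` token spelling, the prompt layout, the keyword-overlap retriever and the fake model are all hypothetical placeholders; a real system would use a fine-tuned LLM and a proper IR component:

```python
from typing import Callable, List

RET_TOKEN = "<RET>"  # assumed spelling of the "need more information" meta-token

CORPUS = [
    "Text2Story is a workshop held at ECIR 2024.",
    "Flan-T5 is an instruction-tuned encoder-decoder model.",
]


def retrieve(question: str, k: int = 1) -> List[str]:
    """Toy IR: rank passages by word overlap with the question."""
    q = set(question.lower().split())
    ranked = sorted(CORPUS, key=lambda doc: len(q & set(doc.lower().split())),
                    reverse=True)
    return ranked[:k]


def answer(question: str, llm: Callable[[str], str], k: int = 1) -> str:
    # First pass: the model either answers directly or emits the meta-token.
    first = llm(f"Question: {question}\nAnswer:")
    if RET_TOKEN not in first:
        return first.strip()
    # Second pass: augment the question with retrieved context and ask again.
    context = " ".join(retrieve(question, k))
    return llm(f"Context: {context}\nQuestion: {question}\nAnswer:").strip()


KNOWN = {"What is 2 + 2?": "4"}


def fake_llm(prompt: str) -> str:
    """Stand-in model: answers known questions, echoes context, else asks for IR."""
    first_line = prompt.splitlines()[0]
    if first_line.startswith("Context: "):
        return first_line[len("Context: "):]
    question = first_line[len("Question: "):]
    return KNOWN.get(question, RET_TOKEN)


print(answer("What is 2 + 2?", fake_llm))            # 4
print(answer("Where is Text2Story held?", fake_llm))  # Text2Story is a workshop held at ECIR 2024.
```

The point of the design is that retrieval is paid for only when the model signals it does not know the answer.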


@UKPLab @NLPerspectivWS @MilaNLProc @webis_de @ims_stuttgart @Hessian_AI @AndreasWaldis @InformatikStutt @ArgminingOrg @aclmeeting @gesis_org

It would be exciting to see studies and advances in this domain! 👍


Worth thinking about embedding features such as IFD scoring into other SFT frameworks for studies outside the low-resource domain 🤔
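IFD (Instruction-Following Difficulty) is usually computed as the ratio between the model's average per-token loss on the answer when conditioned on the instruction and its average loss on the answer alone. A minimal sketch that takes per-token log-probabilities as input; the numbers below are made up, and in practice they would come from forward passes of the LLM:

```python
import math
from typing import Sequence


def avg_nll(token_logprobs: Sequence[float]) -> float:
    """Average negative log-likelihood (cross-entropy) per answer token."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    return -sum(token_logprobs) / len(token_logprobs)


def ifd_score(answer_given_instruction: Sequence[float],
              answer_alone: Sequence[float]) -> float:
    """IFD = loss(answer | instruction) / loss(answer).

    Values near 1 mean the instruction barely helps the model predict the
    answer (a "hard" sample); low values mean the instruction helps a lot.
    """
    return avg_nll(answer_given_instruction) / avg_nll(answer_alone)


conditioned = [math.log(0.5)] * 4     # each answer token has p = 0.5 with the instruction
unconditioned = [math.log(0.25)] * 4  # and p = 0.25 without it
print(round(ifd_score(conditioned, unconditioned), 6))  # 0.5
```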


@AnkitaBhaumik3

After @SemEvalWorkshop 2024 Task 3, aimed at emotion cause prediction in dialogues, I find your studies pretty valuable to explore 👀👏 Thanks for sharing!


@kamilazdybal

Congratulations on your milestone! 👏✨ Same here so far, so I can imagine how valuable it is!


@JLopez_160 @cohere

Thank you for sharing this; it is interesting to see how it goes with LLMs 👏👀 Legal documents tend to be long, so how specifically do you sample them?


🤔 ... There is actually huge room for improvement here. I wonder about related studies; however, it seems that any further customizations end up being too domain-oriented


@ashpreetbedi @GroqInc @streamlit

This is so interesting from the perspective of news generation for model fine-tuning/training purposes, thank you! 👀👏


📢 Can't get enough of how these studies on opinion mining might be a perfect source for further advances in, and assessment of, reasoning capabilities 🧠 in Sentiment Analysis 💎👀


@mudler_it @LocalAI_API

Thanks for sharing this! 👀😊 Was function calling available for Llama 2, and if so, was it a big advance in this version?
