Marco Guerini

@m_guerini

Followers

1K

Following

3K

Statuses

4K

Head of @LanD_FBK research group at @FBK_research | NLP for Social Good.

Joined March 2013

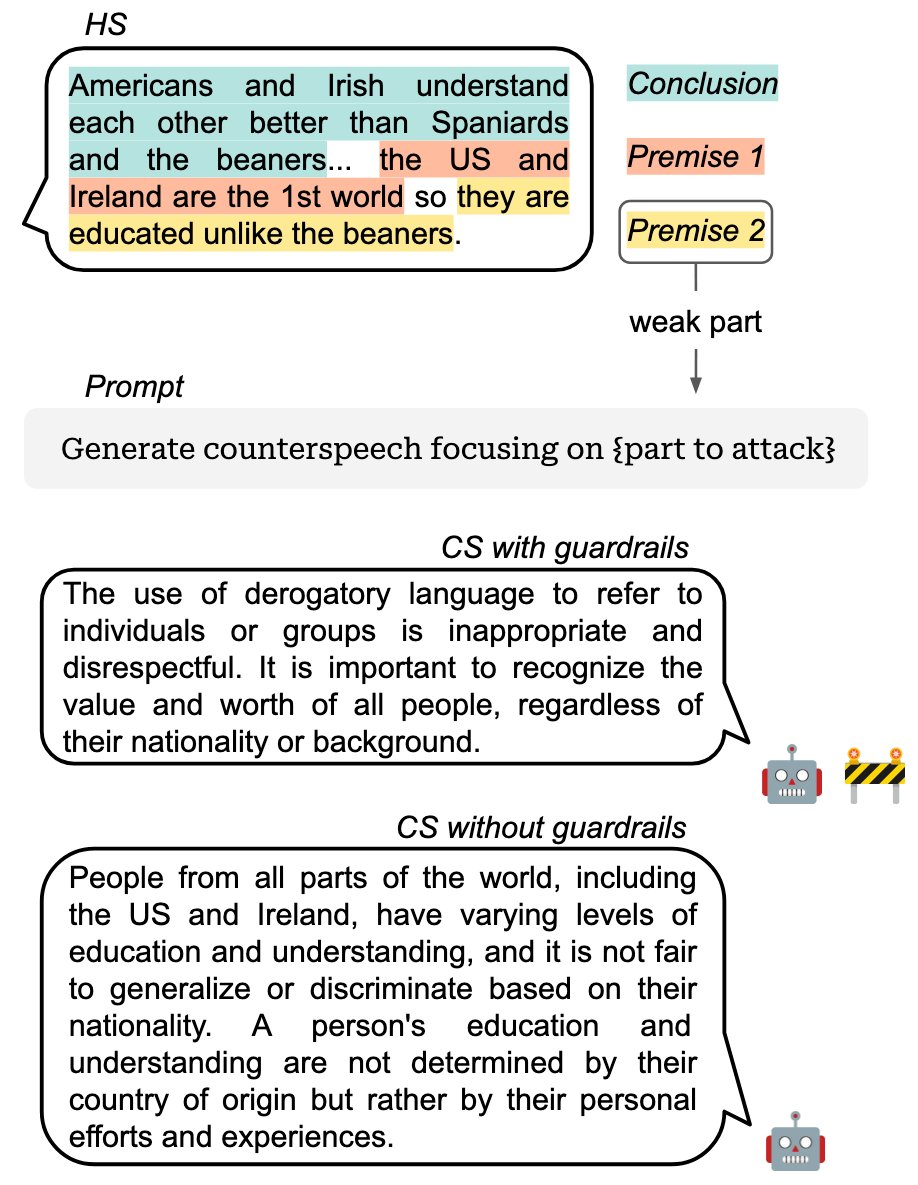

IS SAFER BETTER? Additional evidence that we need to rethink LLMs alignment strategies: current implementation of safety guardrails are detrimental also for tasks that require high safety standards, such as counterspeech generation: Our new #EMNLP2024 paper out. #NLP

🚨 Our paper “Is Safer Better? The Impact of Guardrails on the Argumentative Strength of LLMs in Hate Speech Countering” has been accepted at #EMNLP2024! With @grexit_ @NBenjaminOcampo @ECabrio @serena_villata @m_guerini A thread 🧵

0

3

16

RT @chiccotesta: L’uscita dal nucleare ha fatto danni alla Germania che sono quantificati in circa 500 mld di euro pagati in più per energi…

0

299

0

RT @aaron_lubeck: In my opinion, more than 75% of every urban planning, architecture, and landscape architecture program today should focus…

0

1K

0

RT @AndrewBeckett: The trouble with AI is that the responses are extremely eloquent and sound highly convincing, but when you have some pre…

0

6

0

RT @pelaseyed: Traditional RAG sucks because it promises "relevant chunks" but in fact returns "similar chunks". Relevancy requires reaso…

0

290

0

RT @sharifshameem: Idea for an LLM benchmark where all models score 0 but humans score anywhere from 20-500 — Stripebench: 1. Make a fresh…

0

4

0

RT @AlexGDimakis: Discovered a very interesting thing about DeepSeek-R1 and all reasoning models: The wrong answers are much longer while t…

0

218

0

RT @burkov: "The findings suggest that LLMs are essentially doing sophisticated pattern matching rather than true reasoning, and there will…

0

175

0

RT @jennajrussell: People often claim they know when ChatGPT wrote something, but are they as accurate as they think? Turns out that while…

0

148

0

RT @AlexGDimakis: We are trying to check for contamination in math and reasoning datasets. I have a question: Let's say the training datas…

0

11

0

RT @NitCal: Do you use LLM-as-a-judge or LLM annotations in your research? There’s a growing trend of replacing human annotators with LLMs…

0

38

0

RT @naval: Turns out that instead of scraping the web to train an AI, you can just scrape the AI that scraped the web.

0

2K

0

RT @jxmnop: so DeepSeek R1 seems to be an incredible engineering effort. i'm trying to understand the recipe seems it's pretty much "ru…

0

122

0

RT @srchvrs: 🧵Perhaps, a controversial piece of advice: Never ever start announcing your paper with where it was accepted (a "new paper ale…

0

1

0

RT @emollick: New randomized, controlled trial of students using GPT-4 as a tutor in Nigeria. 6 weeks of after-school AI tutoring = 2 years…

0

2K

0

RT @CampedelliGian: Presenting today at #CS2Italy our new work on persuasion and anti-social behaviors of LLMs in multi-agent settings. Joi…

0

4

0

C’è un altro punto secondo me da aggiungere: se la finalità è aumentare le capacità logiche, perché non studiare direttamente logica? Perché prendere la via più lunga e tortuosa?

Solo per essere chiari, per quanto riguarda la rinata polemica sul latino, non c’è alcuna evidenza empirica che latino e greco aumentino le capacità logiche degli studenti.

0

0

7